Brain MRI Segmentation Using U-Net and SegNet: A Comparative Study Across Modalities with Robust Loss Functions

Gouranga Bala^a, Hiranmay Mondal and Amit Sethi^b

Department of Electrical Engineering, Indian Institute of Technology Bombay, Powai, Mumbai, India

Keywords:

Brain MRI, Segmentation, U-Net, SegNet, Robust Loss Functions, Robust Dice Loss, Brain Tumor Segmentation, Medical Image Analysis.

Abstract:

This paper presents a comparative study of brain tumor segmentation using two well-known Convolutional Neural Network (CNN) architectures, U-Net and SegNet, across two MRI modalities, T2-weighted and Fluid Attenuated Inversion Recovery (FLAIR), from the BraTS 2020 dataset. We evaluated these models with four loss functions: Dice Loss, Focal Loss, Adaptive Robust Loss, and the novel Robust Dice Loss. Our contributions are twofold. First, we provide a detailed comparison of U-Net and SegNet for brain tumor segmentation across distinct MRI modalities, offering insights into the role of modality-specific features in segmentation outcomes. Second, we introduce the novel Robust Dice Loss, which significantly improved SegNet’s training efficiency, allowing it to handle challenging segmentation scenarios involving data imbalance and intricate tumor boundaries more easily. Our results indicate that U-Net generally outperforms SegNet in segmentation accuracy, particularly when trained with Adaptive Robust Loss. However, Robust Dice Loss enabled SegNet to achieve competitive performance, particularly with the FLAIR modality, demonstrating its potential as an effective alternative. This study emphasizes the importance of selecting appropriate loss functions to handle imbalanced data and enhance model performance, contributing insights for the advancement of automated medical image analysis and its clinical utility in neuro-oncology.

1 INTRODUCTION

Brain tumors remain one of the most challenging diseases in neuro-oncology, necessitating precise diagnosis and treatment planning. Magnetic Resonance Imaging (MRI) plays a crucial role in the diagnosis of brain tumors, offering multiple modalities, including T1-weighted (T1), T2-weighted (T2), contrast-enhanced T1 (T1CE) and fluid-attenuated inversion recovery (FLAIR). These modalities provide complementary information about the anatomy and pathology of brain tissues, enhancing diagnostic accuracy and segmentation efficacy. However, manual segmentation of brain tumors in MRI images is labor-intensive, subject to significant inter- and intra-observer variability, and requires substantial expertise, making automated segmentation methods highly desirable.

Deep learning methods, particularly Convolutional Neural Networks (CNNs), have revolutionized automated medical image segmentation. CNNs can learn complex hierarchical features directly from data, outperforming traditional methods. Among these, U-Net and SegNet have emerged as prominent architectures in medical image segmentation due to their efficiency and adaptability to complex datasets. U-Net is well-known for its encoder-decoder architecture with skip connections that preserve spatial information, facilitating precise segmentation of intricate boundaries (Ronneberger et al., 2015). SegNet, on the other hand, employs a similar encoder-decoder structure but emphasizes computational efficiency by omitting explicit skip connections (Badrinarayanan et al., 2017).

a https://orcid.org/0009-0007-7822-0985

b https://orcid.org/0000-0002-8634-1804

Despite their success, the performance of CNN models in medical segmentation tasks is heavily influenced by the choice of loss function. Brain tumor segmentation often suffers from class imbalance, as tumor regions typically constitute only a small fraction of the overall image. Standard loss functions may struggle to focus adequately on these small, clinically significant regions. To address this challenge, advanced loss functions such as Dice Loss (Milletari et al., 2016), Focal Loss (Lin et al., 2017), Robust Dice Loss, and Adaptive Robust Loss (Barron, 2017) have been developed to prioritize regions of interest and mitigate class imbalance issues.

Bala, G., Mondal, H. and Sethi, A.

Brain MRI Segmentation Using U-Net and SegNet: A Comparative Study Across Modalities with Robust Loss Functions.

DOI: 10.5220/0013302900003911

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 18th International Joint Conference on Biomedical Engineering Systems and Technologies (BIOSTEC 2025) - Volume 1, pages 246-254

ISBN: 978-989-758-731-3; ISSN: 2184-4305

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

This study aims to provide a comparative evaluation of U-Net and SegNet models for brain tumor segmentation across MRI modalities. By analyzing these models under the influence of robust loss functions, particularly the novel Robust Dice Loss, this work highlights how different loss functions address class imbalance and improve segmentation accuracy. The insights gained can help advance automated medical image analysis and contribute to enhancing clinical workflows and patient outcomes.

2 RELATED WORKS

Brain tumor segmentation is a critical task in medical imaging, fundamental for diagnosis, treatment planning, and monitoring of therapeutic outcomes. However, accurately segmenting brain tumors is challenging due to their variability in size, shape, and appearance across patients. This section provides an overview of the evolution of brain tumor segmentation techniques, from traditional machine learning to modern deep learning-based approaches, and discusses the key advancements in robust loss functions and hybrid models that address current challenges.

2.1 Traditional Approaches

Early approaches to brain tumor segmentation relied heavily on generative and discriminative models. Generative models, such as atlas-based techniques, leveraged predefined anatomical knowledge to identify abnormalities. For instance, Prastawa et al. (2004) utilized the ICBM brain atlas to compare patient images, isolating tumor regions using posterior probabilities and thresholding. Similarly, Khotanlou et al. (2008) and Popuri et al. (2012) employed brain symmetry and iterative refinement to detect tumor regions. Despite their utility, these methods often struggled with significant tumor-induced deformations, resulting in segmentation inaccuracies.

On the other hand, discriminative models focused on local image features using pixel-based measures, texture analysis, and neighborhood histograms. Machine learning algorithms such as Support Vector Machines (SVMs), Fuzzy C-means (FCM), and Decision Forests (DFs) were commonly applied to classify pixels based on local characteristics. While effective in simple segmentation tasks, these methods did not incorporate the contextual information necessary to delineate complex tumor boundaries, especially for multi-class segmentation.

2.2 Deep Learning-Based Approaches

The introduction of deep learning, particularly Convolutional Neural Networks (CNNs), marked a paradigm shift in medical image segmentation by eliminating the need for manual feature engineering. CNNs learn hierarchical features directly from the data, capturing both local and global structures effectively. U-Net, introduced by Ronneberger et al. (2015), has been especially influential in medical imaging due to its encoder-decoder structure and skip connections, which help retain spatial information and enhance segmentation precision, particularly for small or intricate regions.

SegNet, proposed by Badrinarayanan et al. (2017), features a similar encoder-decoder architecture but omits explicit skip connections, focusing instead on computational efficiency. Although U-Net generally provides superior accuracy for brain tumor segmentation, SegNet is a competitive choice for settings with limited computational resources. CNNs have been extensively evaluated on datasets like BraTS (Menze et al., 2015), consistently achieving state-of-the-art results in brain tumor segmentation.

2.3 Advanced Loss Functions

Despite the success of CNNs, brain tumor segmentation presents unique challenges, such as class imbalance, where tumor regions often occupy only a small portion of the overall image. To address these challenges, several robust loss functions have been developed. Dice Loss (Milletari et al., 2016) focuses on maximizing the overlap between predicted and ground truth regions, making it particularly suitable for medical segmentation tasks with imbalanced classes. Focal Loss (Lin et al., 2017) emphasizes difficult-to-classify samples, ensuring improved attention to smaller and complex tumor regions. The novel Robust Dice Loss proposed in this study adds a tunable parameter that adaptively prioritizes regions with higher errors, further enhancing segmentation accuracy, particularly in challenging scenarios involving intricate boundaries.

These advanced loss functions help CNNs focus on small but clinically significant tumor regions, ultimately improving segmentation performance.


2.4 Hybrid Approaches

Recent advancements in the field have seen approaches that combine deep learning models with traditional machine learning techniques to address the limitations of standalone models. Rao et al. (2020) incorporated CNN-derived features into ensemble learning frameworks, while Saouli et al. (2020) utilized ensemble-based methods to enhance the robustness and generalizability of CNN-based segmentations. These approaches leverage the strengths of CNNs for feature extraction alongside the robustness of ensemble methods, thus addressing limitations such as overfitting and variability in performance.

2.5 Summary and Motivation for the Present Study

The evolution from traditional models to deep learning-based segmentation has greatly improved the accuracy and reliability of brain tumor segmentation. However, the challenges of class imbalance, complex tumor boundaries, and the variability in MRI modalities remain significant obstacles. This study builds on previous research by providing a detailed comparative analysis of U-Net and SegNet architectures for brain tumor segmentation across separate MRI modalities (T2 and FLAIR). By introducing the novel Robust Dice Loss and exploring its impact on the segmentation performance of these models, our work aims to contribute new insights into how the choice of loss functions can enhance model performance, addressing key challenges in brain tumor segmentation. The contributions of this work are as follows:

• We provide a comprehensive comparison of U-Net and SegNet for brain tumor segmentation across distinct MRI modalities.

• We introduce and evaluate the novel Robust Dice Loss, demonstrating its potential to enhance the training dynamics of SegNet, particularly in scenarios involving class imbalance and challenging tumor boundaries.

These contributions are expected to advance automated brain tumor segmentation, with the potential to significantly impact clinical workflows in neuro-oncology by enhancing accuracy and reducing the variability in segmentation outcomes.

3 METHODOLOGY

The methodology adopted in this study involves the application of U-Net and SegNet models to segment brain tumors across MRI modalities. To address challenges related to class imbalance and intricate tumor boundaries, we employ multiple robust loss functions. The following subsections detail the various components of our methodology.

3.1 Data Preparation

Dataset: The Brain Tumor Segmentation (BraTS) 2020 dataset was used in this study. It contains preoperative magnetic resonance imaging scans in four imaging modalities: T1-weighted (T1), T2-weighted (T2), T1-weighted contrast-enhanced (T1CE), and fluid-attenuated inversion recovery (FLAIR). Each modality highlights different aspects of tumor structure, such as the enhancing tumor, the non-enhancing tumor core, and the surrounding edema. These complementary views make the dataset ideal for tumor segmentation tasks.

Preprocessing: To prepare the data for training, we applied the following steps:

• Normalization: The intensity values of each MRI modality were scaled to a common range to reduce variability caused by different scanner settings.

• Resizing and Slice Extraction: The 3D volumes were split into 2D slices along the transverse plane for training, simplifying the segmentation task and focusing on one slice at a time. All MRI scans were resized to 240×240 pixels to reduce computational cost while maintaining sufficient detail.

Data Augmentation: To improve the diversity of training data and reduce overfitting, several augmentation techniques were used:

• Rotation and Flipping: Random rotations within a range of −15° to 15° and horizontal/vertical flips were applied to simulate variations in patient orientation.

• Elastic Deformation: Deformations were introduced to mimic the variability of natural tissue, enhancing the model’s ability to handle irregular shapes.

• Intensity Adjustments: Random noise and contrast changes were added to simulate differences in imaging conditions and scanners, making the model more robust to real-world variations.

These preprocessing and augmentation steps ensured that the dataset was consistent and diverse, helping the models learn effectively while improving their ability to generalize to unseen data.
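The preprocessing steps described above can be sketched as follows. This is a minimal illustration rather than the pipeline used in the study: it assumes min-max scaling to [0, 1] as the "common range" (the scheme is not specified in the text), uses a synthetic volume of shape 240×240×155 as a stand-in for a real scan, and all function names are hypothetical. Only flipping is shown; rotation, elastic deformation, and intensity adjustments are omitted for brevity.

```python
import numpy as np

def normalize_volume(volume):
    """Min-max scale a volume to [0, 1]; a constant volume maps to all zeros."""
    volume = volume.astype(np.float32)
    lo, hi = float(volume.min()), float(volume.max())
    if hi == lo:
        return np.zeros_like(volume)
    return (volume - lo) / (hi - lo)

def extract_axial_slices(volume):
    """Split an (H, W, D) volume into a list of D two-dimensional (H, W) slices."""
    return [volume[:, :, k] for k in range(volume.shape[2])]

def random_flip(image, mask, rng):
    """Apply the same random horizontal/vertical flip to an image and its mask."""
    if rng.random() < 0.5:
        image, mask = image[:, ::-1], mask[:, ::-1]
    if rng.random() < 0.5:
        image, mask = image[::-1, :], mask[::-1, :]
    return image, mask

# Synthetic stand-in for one MRI volume (assumed shape, not real data).
rng = np.random.default_rng(0)
volume = rng.normal(loc=100.0, scale=20.0, size=(240, 240, 155))
slices = extract_axial_slices(normalize_volume(volume))
```

Applying the identical flip to the image and its mask is the important design point: any geometric augmentation must transform both together, otherwise the labels no longer align with the pixels.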

BIOIMAGING 2025 - 12th International Conference on Bioimaging


3.2 Model Implementation

3.2.1 Architectures

We employed the U-Net and SegNet architectures for

brain tumor segmentation. Both are encoder-decoder

networks, but differ in how they retain and reconstruct

spatial information.

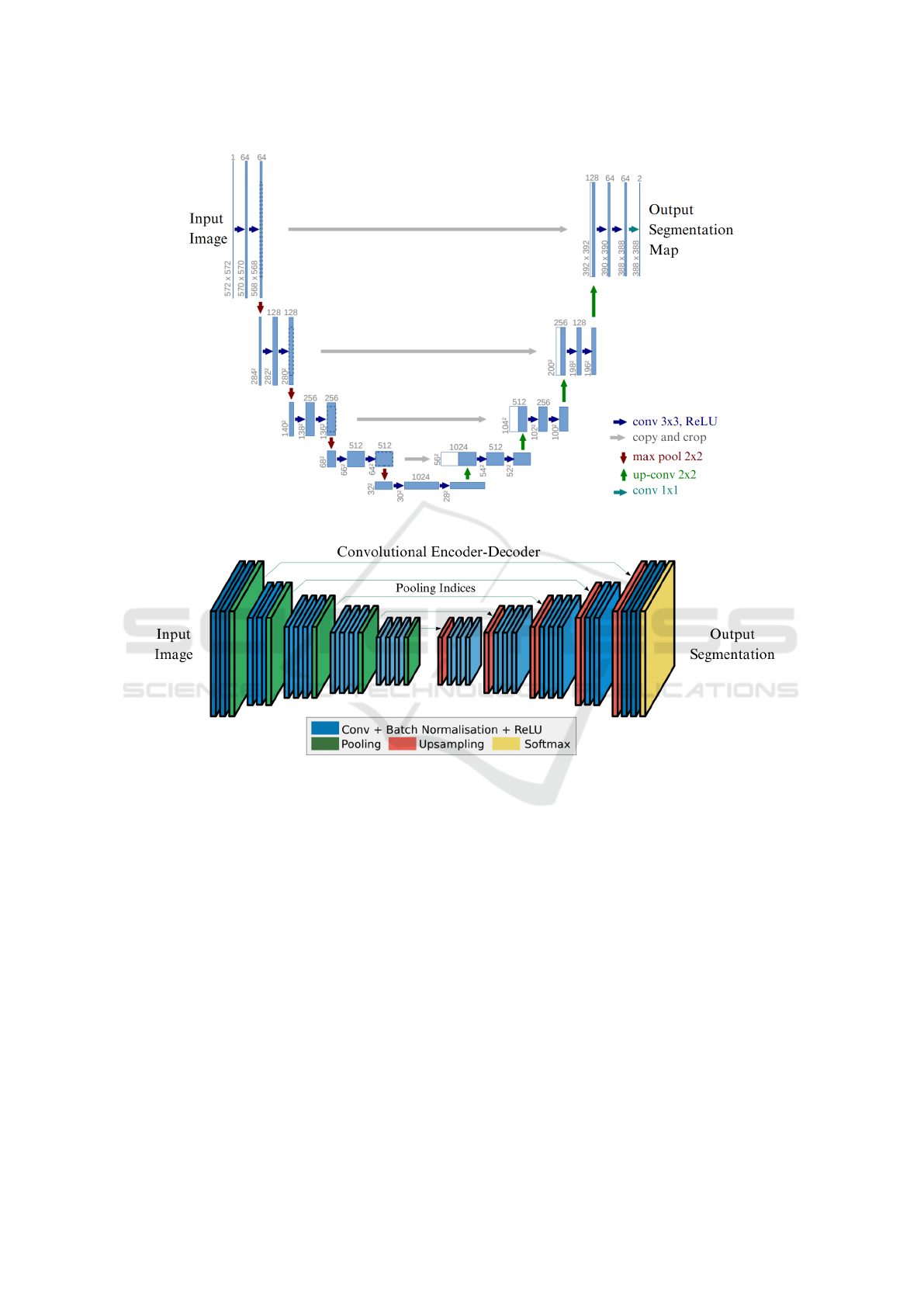

Figure 1a illustrates the U-Net architecture, which uses skip connections to directly transfer high-resolution feature maps from the encoder to the decoder, allowing precise recovery of spatial details (Ronneberger et al., 2015). The encoder consists of repeated 3×3 convolutions with ReLU activation, followed by 2×2 max-pooling. The decoder performs 2×2 transposed convolutions (up-convolutions), whose outputs are concatenated with the corresponding encoder feature maps. The network has 23 convolutional layers in total and uses ReLU as the activation function.
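To make the encoder-decoder bookkeeping concrete, the sketch below traces feature-map sizes and channel counts through a U-Net-style network for a 240×240 input. It is an illustration under stated assumptions, not a description of the exact model trained here: it assumes padded convolutions (so only pooling changes the spatial size), four pooling stages, and 64 base channels that double at each level, as in the original U-Net design. The function names are hypothetical.

```python
def unet_encoder_shapes(size=240, base_channels=64, depth=4):
    """Return (height/width, channels) at each resolution level of the encoder."""
    shapes = [(size, base_channels)]
    for _ in range(depth):
        size //= 2                                  # 2x2 max-pooling halves height and width
        shapes.append((size, shapes[-1][1] * 2))    # channels double at each level
    return shapes

def unet_decoder_concat_channels(shapes):
    """Channels entering each decoder block after concatenating the skip connection."""
    out = []
    for level in range(len(shapes) - 2, -1, -1):
        skip_size, skip_channels = shapes[level]
        up_channels = shapes[level + 1][1] // 2     # 2x2 up-convolution halves the channels
        out.append((skip_size, up_channels + skip_channels))
    return out

encoder = unet_encoder_shapes()
decoder_inputs = unet_decoder_concat_channels(encoder)
```

Under these assumptions the concatenation doubles the channel count seen by each decoder block, which is the memory cost U-Net pays for carrying high-resolution encoder features across.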

In contrast, SegNet, depicted in Figure 1b, prioritizes computational efficiency by using max-pooling indices to guide upsampling in the decoder (Badrinarayanan et al., 2017). Its encoder mirrors VGG16, with multiple convolutional layers and 2×2 max-pooling. The decoder reconstructs dense feature maps using pooling indices, reducing memory requirements. SegNet has 13 convolutional layers in its encoder and a matching 13 in its decoder, with ReLU as the activation function.
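The index-guided upsampling described above can be illustrated on a single feature map. The sketch below is a NumPy toy, assuming a 2×2 window with stride 2 and even input dimensions; the function names are hypothetical and a real implementation would operate on batched, multi-channel tensors.

```python
import numpy as np

def max_pool_with_indices(x):
    """2x2, stride-2 max-pooling on an (H, W) map; also returns argmax positions (0..3)."""
    h, w = x.shape
    # Group each 2x2 window into a length-4 vector ordered row-major within the window.
    blocks = x.reshape(h // 2, 2, w // 2, 2).transpose(0, 2, 1, 3).reshape(h // 2, w // 2, 4)
    return blocks.max(axis=-1), blocks.argmax(axis=-1)

def max_unpool(pooled, idx):
    """Place each pooled value back at its recorded position; other entries are zero."""
    h2, w2 = pooled.shape
    blocks = np.zeros((h2, w2, 4), dtype=pooled.dtype)
    rows, cols = np.indices((h2, w2))
    blocks[rows, cols, idx] = pooled
    return blocks.reshape(h2, w2, 2, 2).transpose(0, 2, 1, 3).reshape(h2 * 2, w2 * 2)

x = np.array([[1, 2, 0, 0],
              [3, 4, 0, 5],
              [9, 0, 1, 1],
              [0, 0, 1, 7]])
pooled, idx = max_pool_with_indices(x)
restored = max_unpool(pooled, idx)
```

Only the small integer index map needs to be stored per pooling stage, rather than the full encoder feature map that a skip connection would carry. The restored map is sparse, which is why the decoder follows unpooling with convolutions to densify it.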

3.3 Loss Functions

The segmentation models were trained using four different loss functions to handle challenges such as class imbalance and to refine the focus on difficult regions. The loss functions used are as follows:

• Dice Loss: This is a commonly used metric for medical image segmentation, particularly effective for handling class imbalance by focusing on maximizing the overlap between the predicted and ground truth regions. The Dice loss is defined as:

$$\text{Dice Loss} = 1 - \frac{2\sum_i p_i g_i}{\sum_i p_i^2 + \sum_i g_i^2} \tag{1}$$

where $p_i$ and $g_i$ represent the predicted and ground truth values for voxel $i$.

• Focal Loss: Focal Loss is used to emphasize difficult-to-classify examples by reducing the influence of easy-to-classify regions, thereby helping to address the issue of class imbalance effectively. Focal Loss is formulated as:

$$\text{Focal Loss}(p_t) = -(1 - p_t)^{\gamma} \cdot \log(p_t) \tag{2}$$

where $p_t$ represents the model’s estimated probability for the target class, and $\gamma$ is a tunable parameter that controls the rate at which easy examples are down-weighted.

• Robust Dice Loss: To improve segmentation accuracy, particularly for error-prone regions, we introduced a novel Robust Dice Loss that adapts the emphasis on higher-error regions through a tunable parameter $\lambda$. This loss is defined as:

$$\text{Robust Dice Loss} = 1 - \frac{2\sum_i p_i^{\lambda} g_i}{\sum_i p_i^{2\lambda} + \sum_i g_i^2} \tag{3}$$

The parameter $\lambda$ allows adaptive prioritization of regions with high errors, which is particularly useful for ensuring focus on the most challenging parts of the segmentation task. In this work, $\lambda$ was fixed at 2.

• Adaptive Robust Loss: Adaptive Robust Loss combines properties of several existing robust loss functions and adjusts the degree of robustness dynamically during training to enhance segmentation performance in complex scenarios. Following Barron (2017), the loss function is defined as:

$$\text{AR Loss}(x, \alpha, c) = \frac{|\alpha - 2|}{\alpha}\left(\left(\frac{(x/c)^2}{|\alpha - 2|} + 1\right)^{\alpha/2} - 1\right) \tag{4}$$

Here, $x$ represents the input, $\alpha$ is a parameter controlling the robustness level, and $c$ is a scaling constant. This formulation allows the loss to adapt based on the characteristics of the data during training, effectively managing the model’s robustness.
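The four losses listed above can be sketched in NumPy as follows. This is an illustrative sketch, not the training code used in the study. Several choices are assumptions: the small constant `EPS` for numerical stability, the default γ = 2 for Focal Loss (the value used in this study is not stated), averaging over voxels, and treating α and c as fixed inputs rather than learned parameters. The adaptive robust loss follows the general form of Barron (2017), valid for α not equal to 0 or 2, and is applied to a residual x such as the difference between prediction and ground truth.

```python
import numpy as np

EPS = 1e-7  # assumed stabilizer to avoid division by zero and log(0)

def dice_loss(p, g):
    """Equation (1): 1 - 2*sum(p*g) / (sum(p^2) + sum(g^2))."""
    return robust_dice_loss(p, g, lam=1.0)

def robust_dice_loss(p, g, lam=2.0):
    """Equation (3): predictions are raised to the power lam before the Dice ratio."""
    p_lam = np.power(p, lam)
    return 1.0 - 2.0 * np.sum(p_lam * g) / (np.sum(p_lam ** 2) + np.sum(g ** 2) + EPS)

def focal_loss(p, g, gamma=2.0):
    """Equation (2), averaged over voxels; p_t is the probability of the true class."""
    p_t = np.where(g == 1, p, 1.0 - p)
    p_t = np.clip(p_t, EPS, 1.0)
    return float(np.mean(-((1.0 - p_t) ** gamma) * np.log(p_t)))

def adaptive_robust_loss(x, alpha=1.0, c=1.0):
    """General robust loss for alpha not in {0, 2}, averaged over residuals x."""
    b = abs(alpha - 2.0)
    return float(np.mean((b / alpha) * (((x / c) ** 2 / b + 1.0) ** (alpha / 2.0) - 1.0)))

# A single tumor voxel predicted with probability 0.5.
p = np.array([0.0, 0.5, 0.0, 0.0])
g = np.array([0.0, 1.0, 0.0, 0.0])
plain, robust = dice_loss(p, g), robust_dice_loss(p, g, lam=2.0)
```

For this toy input, Equation (1) gives a loss of about 0.2 while Equation (3) with λ = 2 gives about 0.529, so a half-confident prediction on a tumor voxel is penalized more heavily by the robust variant. For binary predictions the two losses coincide, since raising 0 or 1 to any positive power leaves it unchanged.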

3.4 Training and Evaluation

The dataset was split into training, validation, and testing sets using a 70:15:15 ratio. Training was conducted using the Adam optimizer with an initial learning rate of 0.001, which was reduced by a factor of 0.5 if the validation loss plateaued. Early stopping was employed to prevent overfitting, with a patience of 15 epochs. The models were trained for up to 100 epochs. To assess segmentation performance, we used the Dice coefficient as the evaluation metric, which measures the overlap between the predicted segmentation and the ground truth. We also performed a qualitative analysis by visually inspecting the segmentation results to interpret the impact of the different loss functions, particularly in capturing fine boundaries and complex tumor regions.
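The interaction between learning-rate reduction and early stopping can be sketched as a small framework-independent routine that replays a sequence of validation losses. The initial learning rate (0.001), reduction factor (0.5), early-stopping patience (15), and epoch cap (100) come from the text; the plateau patience `lr_patience=5` is an assumption, since the text does not say how many stagnant epochs trigger a reduction, and the function name is hypothetical.

```python
def run_schedule(val_losses, lr=1e-3, factor=0.5, lr_patience=5,
                 stop_patience=15, max_epochs=100):
    """Replay validation losses; return (epoch at which training ends, final learning rate)."""
    best = float("inf")
    since_best = 0        # epochs since the best validation loss (early stopping)
    since_lr_change = 0   # stagnant epochs since the last improvement or LR cut
    epoch = 0
    for epoch, loss in enumerate(val_losses[:max_epochs], start=1):
        if loss < best:
            best, since_best, since_lr_change = loss, 0, 0
            continue
        since_best += 1
        since_lr_change += 1
        if since_lr_change >= lr_patience:
            lr *= factor              # plateau detected: halve the learning rate
            since_lr_change = 0
        if since_best >= stop_patience:
            break                     # no improvement for stop_patience epochs
    return epoch, lr

# Three improving epochs followed by a long plateau.
stopped_at, final_lr = run_schedule([1.0, 0.9, 0.8] + [0.8] * 30)
```

With these settings, a plateau allows three learning-rate reductions before early stopping ends training, so the optimizer gets several chances at a smaller step size before the run is abandoned.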


Figure 1: Visual comparison of U-Net and SegNet architectures: (a) U-Net architecture (Ronneberger et al., 2015); (b) SegNet architecture (Badrinarayanan et al., 2017). Figures are adapted with permission from the cited sources.

4 RESULTS

The results of our experiments demonstrate the effectiveness of U-Net and SegNet for brain tumor segmentation using different MRI modalities and robust loss functions. Below, we present the findings for each model, modality, and loss function.

Table 1 presents the segmentation accuracy achieved by the U-Net and SegNet models for T2-weighted (T2) and Fluid Attenuated Inversion Recovery (FLAIR) MRI modalities using four different loss functions: Dice Loss, Focal Loss, Robust Dice Loss, and Adaptive Robust Loss. The results indicate that both U-Net and SegNet achieve their best performance when trained with advanced loss functions: Adaptive Robust Loss for U-Net and Robust Dice Loss for SegNet.

The choice of loss function significantly influences the segmentation performance of both U-Net and SegNet models. Specifically, Robust Dice Loss demonstrated substantial improvements for SegNet, achieving the highest accuracy with the FLAIR modality, yielding a Dice coefficient of 0.801. This result suggests that the adaptive nature of the Robust Dice Loss enables SegNet to effectively overcome some of its architectural limitations, such as the absence of skip connections, by focusing more efficiently on challenging regions.

Adaptive Robust Loss outperformed the other loss functions when used with U-Net, yielding Dice scores of 0.775 and 0.847 for the T2 and FLAIR modalities, respectively. This result suggests that the flexibility of Adaptive Robust Loss aligns well with U-Net’s architecture, which relies heavily on its skip connections to retain spatial information throughout the segmentation process. The dynamic adjustment of the loss function’s robustness during training may help U-Net more accurately segment complex tumor regions while reducing the impact of noise.

Table 1: Performance comparison (Dice coefficient) of models across different modalities and loss functions. Bold values indicate the best performance for each row.

| Model | Modality | Dice Loss | Focal Loss | Robust Dice Loss | Adaptive Robust Loss |
|---|---|---|---|---|---|
| U-Net | T2 | 0.705 | 0.725 | 0.443 | **0.775** |
| U-Net | FLAIR | 0.448 | 0.453 | 0.475 | **0.847** |
| SegNet | T2 | 0.563 | 0.581 | **0.619** | 0.562 |
| SegNet | FLAIR | 0.598 | 0.537 | **0.801** | 0.547 |

In comparison, Dice Loss and Focal Loss showed mixed outcomes. Although they produced reasonably competitive results with U-Net on the T2 modality (0.705 and 0.725), they were less effective with SegNet, scoring between 0.537 and 0.598 across both modalities. This suggests that SegNet’s reliance on pooled feature indices may hinder its capacity to effectively leverage global context, especially without the adaptive emphasis provided by the advanced loss functions. The advanced loss functions, such as Adaptive Robust Loss and Robust Dice Loss, therefore prove to be more suitable for addressing the challenges of brain tumor segmentation, such as class imbalance and intricate tumor boundaries.

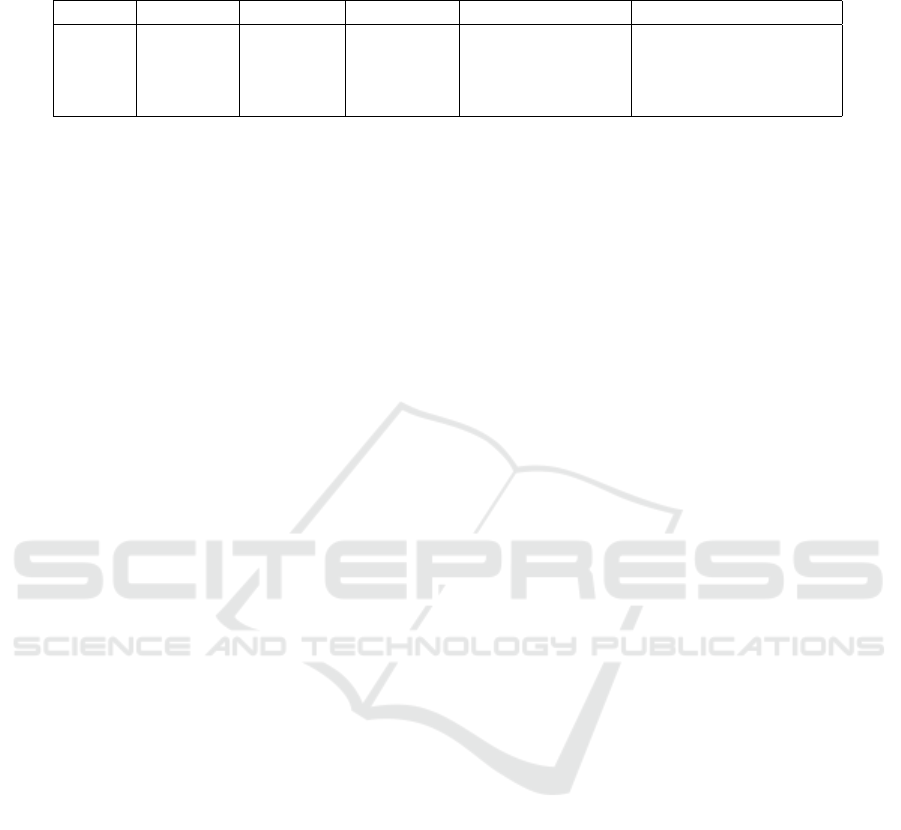

A visual analysis of segmentation performance is provided in Figures 2, 3, and 4, showing the predicted segmentation masks generated by U-Net and SegNet for T2 and FLAIR modalities using various loss functions. Figure 2 demonstrates the segmentation outputs from the U-Net model for the T2 modality. Notably, the use of Adaptive Robust Loss yields the most well-defined tumor boundaries, demonstrating the highest segmentation quality among the evaluated loss functions, with fewer errors around the boundaries. This observation aligns with the quantitative results where Adaptive Robust Loss resulted in the highest Dice scores for U-Net across both modalities.

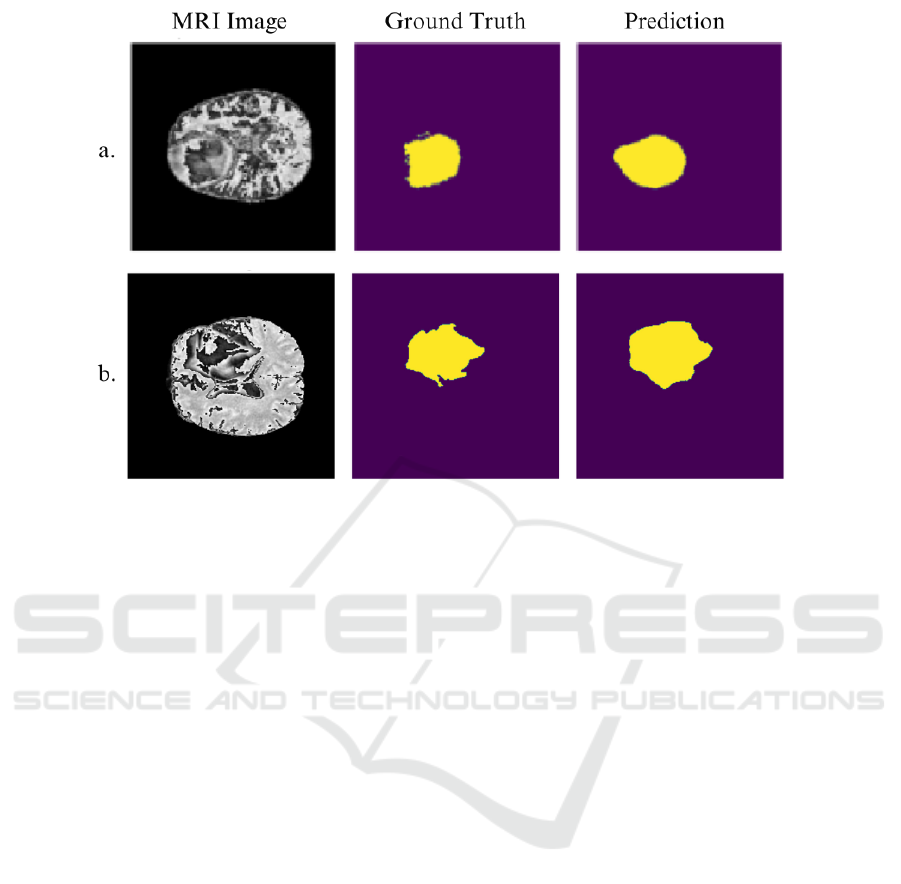

Figure 4 illustrates the impact of Robust Dice Loss on SegNet’s performance with the FLAIR modality. Compared to Dice Loss, Robust Dice Loss facilitated more precise delineation of tumor regions, particularly along the boundary of the tumor. The adaptive feature of the Robust Dice Loss appears to be crucial for improving SegNet’s segmentation performance, allowing it to handle the fine-grained details that Dice Loss struggles to capture.

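The results also indicate that the highest score for each model was obtained on the FLAIR modality: 0.847 for U-Net with Adaptive Robust Loss and 0.801 for SegNet with Robust Dice Loss, compared with best T2 scores of 0.775 and 0.619. This outcome can be attributed to the improved contrast provided by FLAIR images, which enhances the visibility of the tumor and surrounding tissues. The advantage was not uniform across loss functions, however: U-Net trained with Dice Loss or Focal Loss scored considerably lower on FLAIR (0.448 and 0.453) than on T2 (0.705 and 0.725), and SegNet trained with Focal Loss or Adaptive Robust Loss was also slightly lower on FLAIR. The gains on FLAIR therefore depended on pairing each architecture with a suitable loss function (Adaptive Robust Loss for U-Net and Robust Dice Loss for SegNet), which focused effectively on regions of high variability and complexity.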
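The modality and loss-function comparisons in this section can be read directly off Table 1. The short sketch below, with hypothetical helper names, transcribes the reported Dice coefficients and computes the best-performing loss for each model and modality as well as the signed FLAIR-minus-T2 difference for each loss function.

```python
LOSSES = ("Dice", "Focal", "Robust Dice", "Adaptive Robust")

# Dice coefficients transcribed from Table 1, in the order given by LOSSES.
TABLE_1 = {
    ("U-Net", "T2"): (0.705, 0.725, 0.443, 0.775),
    ("U-Net", "FLAIR"): (0.448, 0.453, 0.475, 0.847),
    ("SegNet", "T2"): (0.563, 0.581, 0.619, 0.562),
    ("SegNet", "FLAIR"): (0.598, 0.537, 0.801, 0.547),
}

def best_loss_per_row(table):
    """Map each (model, modality) pair to the loss with the highest Dice coefficient."""
    return {
        key: LOSSES[max(range(len(LOSSES)), key=lambda i: scores[i])]
        for key, scores in table.items()
    }

def flair_minus_t2(table, model):
    """Signed difference FLAIR - T2 per loss function; positive means FLAIR scored higher."""
    t2, flair = table[(model, "T2")], table[(model, "FLAIR")]
    return {loss: round(f - t, 3) for loss, t, f in zip(LOSSES, t2, flair)}

best = best_loss_per_row(TABLE_1)
unet_diff = flair_minus_t2(TABLE_1, "U-Net")
segnet_diff = flair_minus_t2(TABLE_1, "SegNet")
```

Tabulating the signed differences per loss function makes it easy to see for which combinations of architecture and loss the FLAIR modality helped, rather than relying on the best score alone.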

In summary, the results from both quantitative and qualitative analyses highlight the critical role of loss function selection in enhancing the performance of segmentation models for brain tumor imaging. Adaptive Robust Loss significantly boosts U-Net’s performance by dynamically adjusting to segmentation complexities, while Robust Dice Loss provides SegNet with an adaptive focus that compensates for its inherent architectural limitations. These advanced loss functions prove especially effective in addressing the class imbalance issues inherent in medical image segmentation and in achieving superior delineation of complex tumor boundaries.

Furthermore, the differences in performance between the T2 and FLAIR modalities emphasize the importance of selecting the appropriate imaging modality to facilitate tumor visibility. The FLAIR modality, in particular, yielded the best segmentation accuracy for both models, which we attribute to its enhanced contrast, but only when combined with adaptive and robust loss functions.

The experimental outcomes suggest that the integration of sophisticated loss functions, tailored to both the network architecture and the characteristics of the imaging modality, is essential for advancing the performance of automatic segmentation in neuro-oncology. Future research could extend these experiments to larger datasets that include a wider variety of MRI modalities and employ hybrid approaches that leverage multiple architectures and loss functions. Additionally, the integration of attention mechanisms or multi-modal inputs could potentially enhance segmentation capabilities by allowing the model to exploit complementary features across different MRI modalities.


Figure 2: U-Net predictions for the T2 modality using different loss functions (a. Focal, b. Dice, c. Robust Dice, d. Adaptive

Robust Loss). The Adaptive Robust Loss results in superior boundary delineation and segmentation completeness.

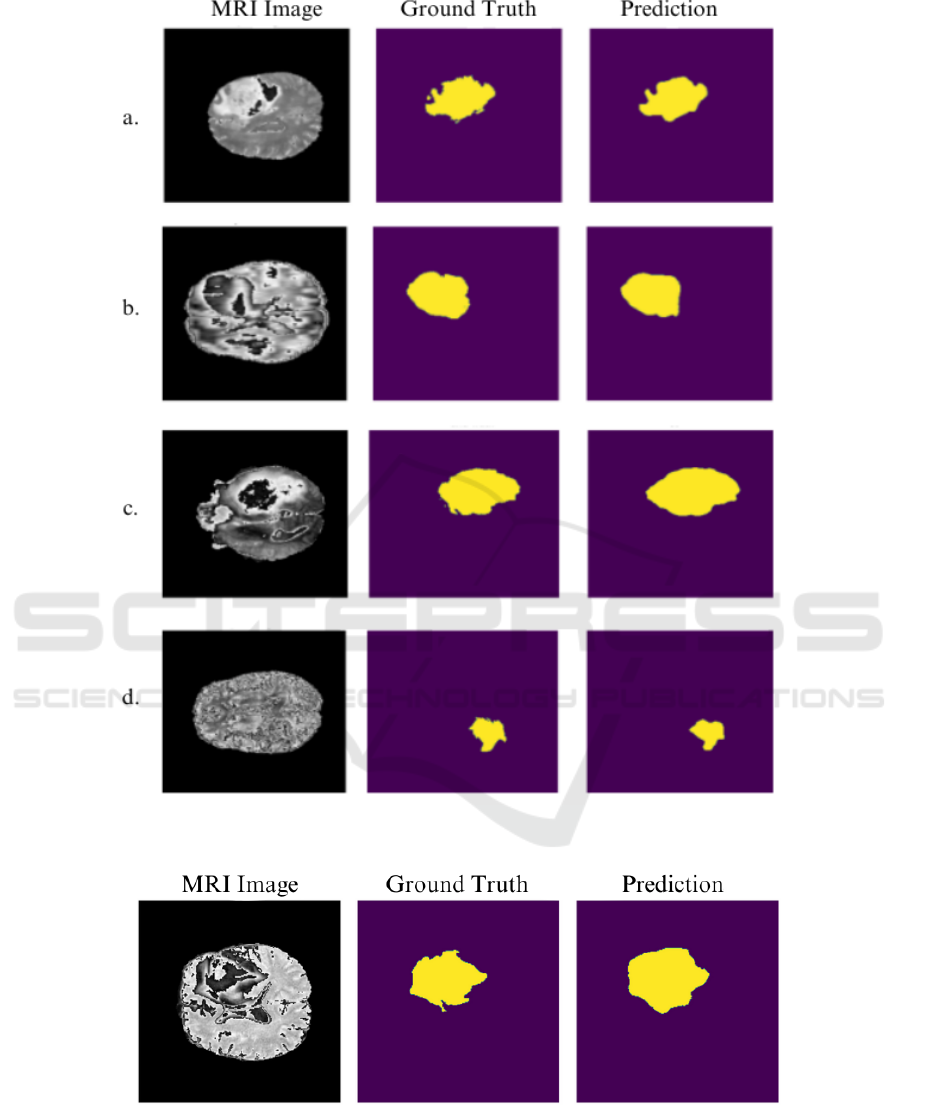

Figure 3: SegNet predictions for the T2 modality using Dice Loss. The segmentation output exhibits incomplete boundary

delineation, indicating challenges in handling subtle boundaries with this loss function.

4.1 Discussion

The experiments demonstrate that U-Net generally outperformed SegNet, particularly when using Adaptive Robust Loss. This superior performance is attributed to the synergy between U-Net’s skip connections and the adaptive capabilities of the loss function, which together enhance spatial accuracy and effectively handle complex tumor boundaries. The results also emphasize the significance of the FLAIR modality, which yielded the best segmentation outcome for each model when paired with a suitable loss function, plausibly due to enhanced contrast allowing more precise differentiation of tumor regions.

Figure 4: SegNet predictions for the FLAIR modality using a. Dice Loss and b. Robust Dice Loss. The use of Robust Dice Loss produces more precise and contiguous segmentation, particularly around tumor boundaries, compared to Dice Loss.

SegNet’s performance improved significantly with the novel Robust Dice Loss, particularly for the FLAIR modality. This suggests that Robust Dice Loss is well-suited to SegNet, compensating for its architectural limitations by focusing more adaptively on challenging tumor regions. The advanced loss functions (Adaptive Robust for U-Net and Robust Dice for SegNet) demonstrated effectiveness in addressing class imbalance, ensuring that both models focus appropriately on underrepresented regions during training.

The findings highlight that a tailored combination of architecture, modality, and loss function is essential for optimal brain tumor segmentation. The choice of loss function plays a crucial role in handling the inherent challenges of medical image segmentation, such as class imbalance and intricate boundary delineation.

5 CONCLUSIONS

This study presented a comparative analysis of U-Net and SegNet for brain tumor segmentation using the BraTS 2020 dataset. We evaluated their performance on T2 and FLAIR MRI modalities using four loss functions: Dice, Focal, Robust Dice, and Adaptive Robust Loss. U-Net, particularly with Adaptive Robust Loss, demonstrated superior accuracy, benefiting from the adaptability of this loss function. Conversely, the newly introduced Robust Dice Loss significantly enhanced SegNet’s performance, especially on the FLAIR modality, by adaptively prioritizing challenging areas.

The findings indicate that advanced loss functions are key to enhancing segmentation performance, particularly for models like SegNet that lack mechanisms to preserve spatial detail. The results also emphasize the role of modality-specific characteristics, with FLAIR providing the best segmentation outcome for each model when paired with a suitable loss function, which we attribute to its better contrast.

Future work could focus on evaluating additional CNN architectures, incorporating attention mechanisms, and exploring multi-modal training to leverage the complementary features of MRI scans. Further validation of these models in a clinical setting would provide critical insights into their real-world applicability, enhancing their potential impact in neuro-oncology.


ACKNOWLEDGMENTS

We thank the creators of the BraTS 2020 dataset for providing an invaluable resource that has enabled this research. We also acknowledge the open-source community for making numerous tools and resources freely available, empowering researchers worldwide. Additionally, we acknowledge ChatGPT for its assistance in refining and improving the manuscript.

REFERENCES

Badrinarayanan, V., Kendall, A., and Cipolla, R. (2017). SegNet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Transactions on Pattern Analysis and Machine Intelligence, 39(12):2481–2495.

Barron, J. T. (2017). A more general robust loss function. CoRR, abs/1701.03077.

Khotanlou, H. et al. (2008). Brain tumor segmentation using symmetry analysis and template deformation in MRI. International Journal of Imaging Systems and Technology, 18(1):42–52.

Lin, T.-Y. et al. (2017). Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), pages 2980–2988.

Menze, B. H. et al. (2015). The multimodal brain tumor image segmentation benchmark (BraTS). IEEE Transactions on Medical Imaging, 34(10):1993–2024.

Milletari, F., Navab, N., and Ahmadi, S.-A. (2016). V-Net: Fully convolutional neural networks for volumetric medical image segmentation. In 2016 Fourth International Conference on 3D Vision (3DV), pages 565–571.

Popuri, K. et al. (2012). Symmetry-based brain tumor segmentation using probabilistic features and confidence levels. In Medical Image Computing and Computer-Assisted Intervention (MICCAI), pages 123–130. Springer.

Prastawa, M. et al. (2004). A brain tumor segmentation framework based on outlier detection. Medical Image Analysis, 8(3):275–283.

Rao, A. et al. (2020). A hybrid CNN and ensemble learning approach for brain tumor segmentation. arXiv preprint arXiv:2002.01092.

Ronneberger, O., Fischer, P., and Brox, T. (2015). U-Net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention (MICCAI), pages 234–241. Springer.

Saouli, R. et al. (2020). An ensemble-based deep learning framework for brain tumor segmentation. Computer Methods and Programs in Biomedicine, 189:105753.
