Environment Setup and Model Benchmark of the MuFoRa Dataset

Islam Fadl¹, Torsten Schön³, Valentino Behret², Thomas Brandmeier¹, Frank Palme² and Thomas Helmer¹

¹ CARISSMA Institute of Safety in Future Mobility (C-ISAFE), Technische Hochschule Ingolstadt, Esplanade 10, Ingolstadt, Germany

² Laboratory for 3D Measuring Systems and Computer Vision, Department of Mechanical, Automotive and Aeronautical Engineering, Munich University of Applied Sciences (MUAS), Dachauer Straße 98b, Munich, Germany

³ AIMotion Bavaria, Technische Hochschule Ingolstadt, Ingolstadt, Germany

Islam.Fadl@carissma.eu, {Valentino.Behret, Frank.Palme}@hm.edu,

Keywords:

Dataset, Multimodal, Benchmark, Adverse Weather, Computer Vision, Perception.

Abstract:

Adverse meteorological conditions, particularly fog and rain, present significant challenges to computer vision algorithms and autonomous systems. This work presents MuFoRaᵃ, a novel, controllable, and measured multimodal dataset recorded at CARISSMA's indoor test facility, specifically designed to assess perceptual difficulties in foggy and rainy environments. The dataset bridges a research gap in public benchmarking datasets, which lack quantifiable weather parameters. The proposed dataset comprises synchronized data from two sensor modalities, RGB stereo cameras and LiDAR sensors, captured under varying intensities of fog and rain. The dataset incorporates synchronized meteorological annotations, such as visibility through fog and precipitation levels of rain, and the methods section explains in detail the diverse weather effects observed during data collection. The dataset's utility is demonstrated through a baseline evaluation example, assessing the performance degradation of the state-of-the-art YOLO11 and DETR 2D object detection algorithms under controlled and quantifiable adverse weather conditions. The public release of the datasetᵇ facilitates benchmarking and quantitative assessment of advanced multimodal computer vision and deep learning models under the challenging conditions of fog and rain.

ᵃ MuFoRa – A Multimodal Dataset of Traffic Elements Under Controllable and Measured Conditions of Fog and Rain

ᵇ https://doi.org/10.5281/zenodo.14175611

1 INTRODUCTION

Autonomous vehicles rely on perception systems, including radars, cameras, and LiDARs, for critical driving decisions. However, real-world driving scenarios present unmeasured and uncontrolled amounts of rain and fog, creating ambiguity in algorithm performance under adverse weather conditions. In this context, the BARCS project aims to develop a safe operator-free shuttle bus for last-mile transport in rural areas. To assess perception system limitations in adverse weather, the project utilizes the CARISSMA-THI indoor test facility in Ingolstadt, Germany (Vriesman et al., 2020; Sezgin et al., 2023), where cameras and LiDARs are tested under measurable, controllable, reproducible, and realistic rain and fog conditions (Graf et al., 2023). This well-defined environment allows for the creation of a benchmark dataset, which is crucial for evaluating object detection models and other perception algorithms and for clearly defining their limitations in adverse weather conditions.

Fadl, I., Schön, T., Behret, V., Brandmeier, T., Palme, F. and Helmer, T.

Environment Setup and Model Benchmark of the MuFoRa Dataset.

DOI: 10.5220/0013307900003912

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 20th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2025) - Volume 3: VISAPP, pages 729-737

ISBN: 978-989-758-728-3; ISSN: 2184-4321

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

This study evaluates the performance of state-of-the-art object detection models across dry, rainy, and foggy conditions. To facilitate this research, a multimodal dataset is publicly released comprising synchronized, rectified, and calibrated RGB images and point clouds (Behret et al., 2025). The data were captured at incremental distances (5 to 50 meters) between traffic elements and vehicle-mounted sensors, allowing for comprehensive analysis. The dataset enables researchers to assess the specific distortions and hindrances affecting individual sensor modalities, particularly cameras and LiDARs, in adverse weather. The work focuses on two main objectives:

(1) Creating and releasing a novel multimodal benchmarking dataset, recorded in controllable and measurable environments and annotated at the sequence level, for researching the implications of adverse weather conditions for autonomous driving perception algorithms.

(2) Evaluating state-of-the-art object detection models on 2D images in various fog and rain conditions, i.e., at visibility levels of approximately 10, 30, and 100 meters in fog and at rain precipitation rates of 20, 40, 60, 80, and 100 mm/h.

By addressing these aspects, this research con-

tributes to developing a more robust evaluation

methodology of perception systems in autonomous

vehicles, enhancing their performance across various

weather conditions.

2 RELATED WORK

The development of robust autonomous driving sys-

tems that can operate effectively in adverse weather

conditions remains a significant challenge in the field

(Jokela et al., 2019). Although numerous datasets

have been released in recent years to tackle this is-

sue, a comprehensive dataset with measured weather

parameters is still lacking. This literature review

explores the current landscape of datasets used for

benchmarking and advancing autonomous driving

systems, with a specific focus on those designed to

address challenging weather conditions such as fog

and rain.

Several large-scale datasets have been instrumen-

tal in advancing autonomous driving research. The

Waymo Open Dataset (Sun et al., 2020) provides

a comprehensive collection of sensor data from ur-

ban and suburban environments, including some ad-

verse weather scenarios. Similarly, the KITTI dataset

(Geiger et al., 2013) offers a diverse range of urban

and rural scenes, while the more recent KITTI-360

(Liao et al., 2022) expands on this with 360-degree

views. The nuScenes dataset (Caesar et al., 2020)

provides multimodal sensor data from urban envi-

ronments in Boston and Singapore, including various

weather conditions.

However, these datasets, while valuable, do not

offer controllable and measurable adverse weather

conditions, limiting their utility for systematic eval-

uation of autonomous driving systems in challenging

environments. This gap has led to the development of

specialized datasets focusing on adverse weather.

DAWN (Detection in Adverse Weather Nature)

(Kenk and Hassaballah, 2020) and SID (Stereo Im-

age Dataset) (El-Shair et al., 2024) datasets specifi-

cally address vehicle detection in diverse traffic envi-

ronments (urban, highway and freeway) with adverse

weather, including fog, snow, rain, and sandstorms.

Similarly, datasets like ONCE (Mao et al., 2021) and BDD100K (Yu et al., 2020) offer valuable real-world scenarios, but their lack of control over weather conditions limits their effectiveness for measurable evaluation in adverse weather.

Synthetic datasets have emerged as an alternative way to obtain diverse and adverse weather conditions. Datasets like SYNTHIA (Ros et al., 2016) offer various weather scenarios with synthetic data. RainyCityscapes (Hu et al., 2019) stands out for adding synthetic rain to the original Cityscapes images (Cordts et al., 2016) to support rain removal research. The WEDGE dataset (Marathe et al., 2023) uses generative vision-language models to create a multi-weather autonomous driving dataset, offering 16 extreme weather conditions. This approach allows for more control over weather conditions but may not provide quantifiable weather effects, and it adds the challenge of bridging the simulation-reality gap that results from synthetic dataset usage (Hu et al., 2019).

Recent research has highlighted the importance of multimodal approaches in adverse weather. Bijelic et al. (2020) demonstrated the effectiveness of deep multimodal sensor fusion in fog conditions, emphasizing the need for datasets that include multiple sensor modalities. The SMART-Rain dataset (Zhang et al., 2023) provides a degradation evaluation dataset specifically for autonomous driving in rain.

The impact of adverse weather on different sensor types has been a subject of significant research. Sezgin et al. (2023) discussed the challenges in object detection under rainy and low-light conditions, while Heinzler et al. (2019) examined weather influence on automotive LiDAR sensors. Jokela et al. (2019) conducted testing and validation focusing on automotive LiDAR sensors in fog and snow.

Recent trends in the field include the exploration of radar as a potential replacement for LiDAR in all-weather mapping and localization (Burnett et al., 2023), and the development of voxel-based 3D object detection methods (Deng et al., 2021; Zhou and Tuzel, 2017), which may be more robust to adverse weather conditions.

While datasets such as ONCE (Mao et al., 2021),

BDD100K (Yu et al., 2020), and ACDC (Sakaridis

et al., 2021) offer valuable real-world scenarios, their

lack of control over weather conditions limits their ef-

fectiveness for specific, measurable evaluations.

Despite advancements, there is still a lack of datasets with controllable and measurable adverse weather conditions, especially for fog and rain. The development of a novel public dataset with controllable fog and rain addresses this gap, offering a valuable tool for benchmarking and improving deep learning models, such as depth estimation and object detection, in measurable adverse weather. This could significantly enhance the safety and reliability of autonomous systems across diverse conditions. To our knowledge, this is the first public multimodal dataset featuring both measured rain precipitation and measurable visibility in foggy conditions, all created in a controlled environment at the CARISSMA-THI indoor test facility. Table 1 presents an overview of datasets focusing on different weather conditions.

VISAPP 2025 - 20th International Conference on Computer Vision Theory and Applications

Table 1: Comparison of datasets focusing on weather effects using different attributes.

| Attribute | MuFoRa | WEDGE | DAWN | SID |
|---|---|---|---|---|
| Modalities | Images and Point Clouds | Images | Images | Images |
| Place | Indoors | Synthetic (DALL-E) | Urban, Highway | University campus, urban and residential areas |
| Weather Effects | Fog, Rain, Dry, Dim, Dark | 16 Weather Effects | Fog, Snow, Rain, Sandstorms | Clear, Snow, Cloudy, Rain, Overcast |
| Measured Weather Effects | Yes | No | No | No |
| Sequences / Frames | 90 Sequences | 3660 Images | 1000 Images | 27 Sequences |

3 METHODS

3.1 Experiment Setup

Data collection is conducted under three weather sce-

narios to establish various thresholds for computer vi-

sion algorithms in adverse weather conditions. Ad-

ditionally, during each weather condition, three tar-

get objects are placed at varying distances from the

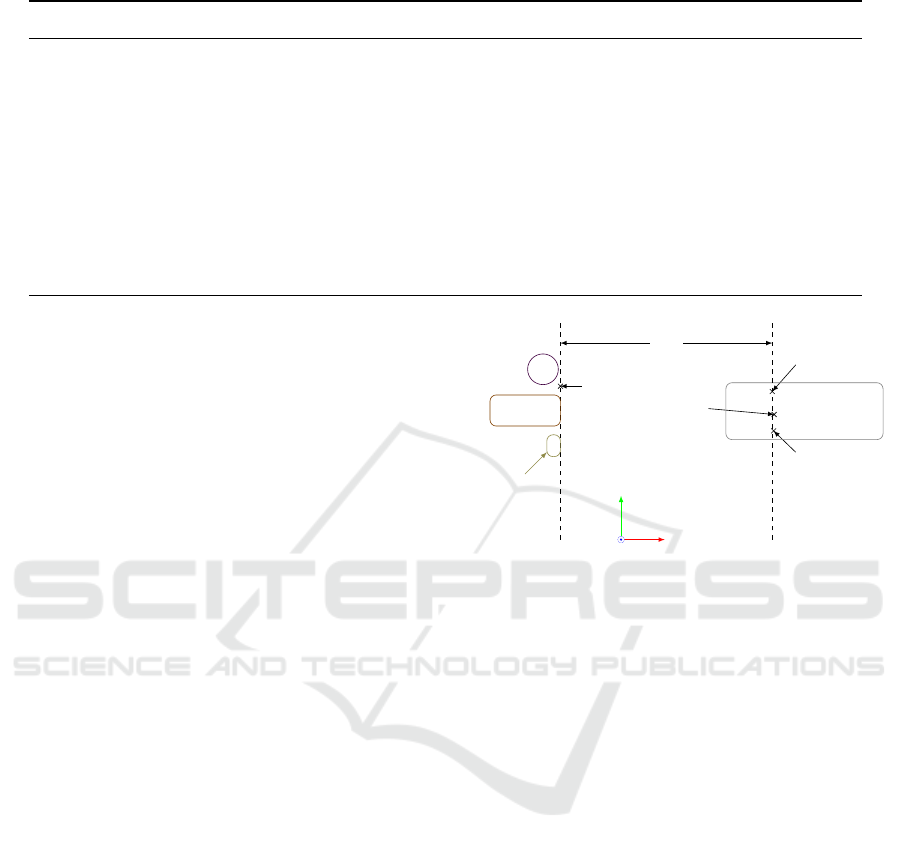

stereo cameras and LiDARS Figure 1. The scenarios

include

(1) Dry conditions with normal, dim light and

darkness.

(2) A ramp of fog resulting in a continuous visi-

bility gradient from 8 to 100 meters.

(3) Five precipitation levels of rain.

To generate ground truth data for object detection, the relative positions of the sensors to the objects have to be determined. This can be achieved by calibrating marker positions using a total station. As Figure 1 shows, a marker was placed on the ground for each distance $d_{O,i}$. These markers are used to determine the distance of the three objects, (1) ball, used for calibration, (2) vehicle, and (3) pedestrian, in the x-direction from the sensors. In total, ten markers were placed on the ground to cover the distances from 5 m to 50 m with a step size of 5 m.

[Figure 1 diagram labels: x and y axes; vehicle with sensors (Qb2 left, ZED2i, Qb2 right); marker at distance $d_{O,i}$; ball, vehicle, and pedestrian targets]

Figure 1: Sensor setup during the calibration process using the total station.

The sensor and marker positions were calibrated using the total station before starting the data recording. The calibration had to be repeated on each recording day since the vehicle with the sensor setup was moved between recording days. As Table 4 (see Appendix) shows, the sensor positions could be reproduced from one day to another within a tolerance (3σ) of about 0.05 m in the x-direction, about 0.08 m in the y-direction, and less than 0.01 m in the z-direction.

3.2 Dataset Description

Firstly, the images and point clouds are collected for

the three target objects in dry conditions with three

light settings. The target objects are static at 10 dif-

ferent distances with increments of 5 meters, starting

from 5 to 50 meters away from the sensors mounted

on the vehicle. Secondly, The same data collec-

tion procedure is repeated under rain. At each dis-

tance, data is collected under five different precipita-

tion rates of 20, 40, 60, 80, and 100 mm/h. Therefore,

50 unique sequences of rain are generated. Lastly, the

Environment Setup and Model Benchmark of the MuFoRa Dataset

731

Table 2: MuFoRa dataset summary under different weather conditions.

Weather Condition Light Setting / Details Sequences Images Point Clouds

Dry

Light 10 813 827

Dim (headlights) 10 900 900

Dark 10 900 870

Total (All Dry Settings) 30 2,613 2,597

Fog Visibility ramp increases as fog diffuses 10 29,404 17,629

Rain Five precipitation intensities (20–100 mm/h) 50 3,810 4,518

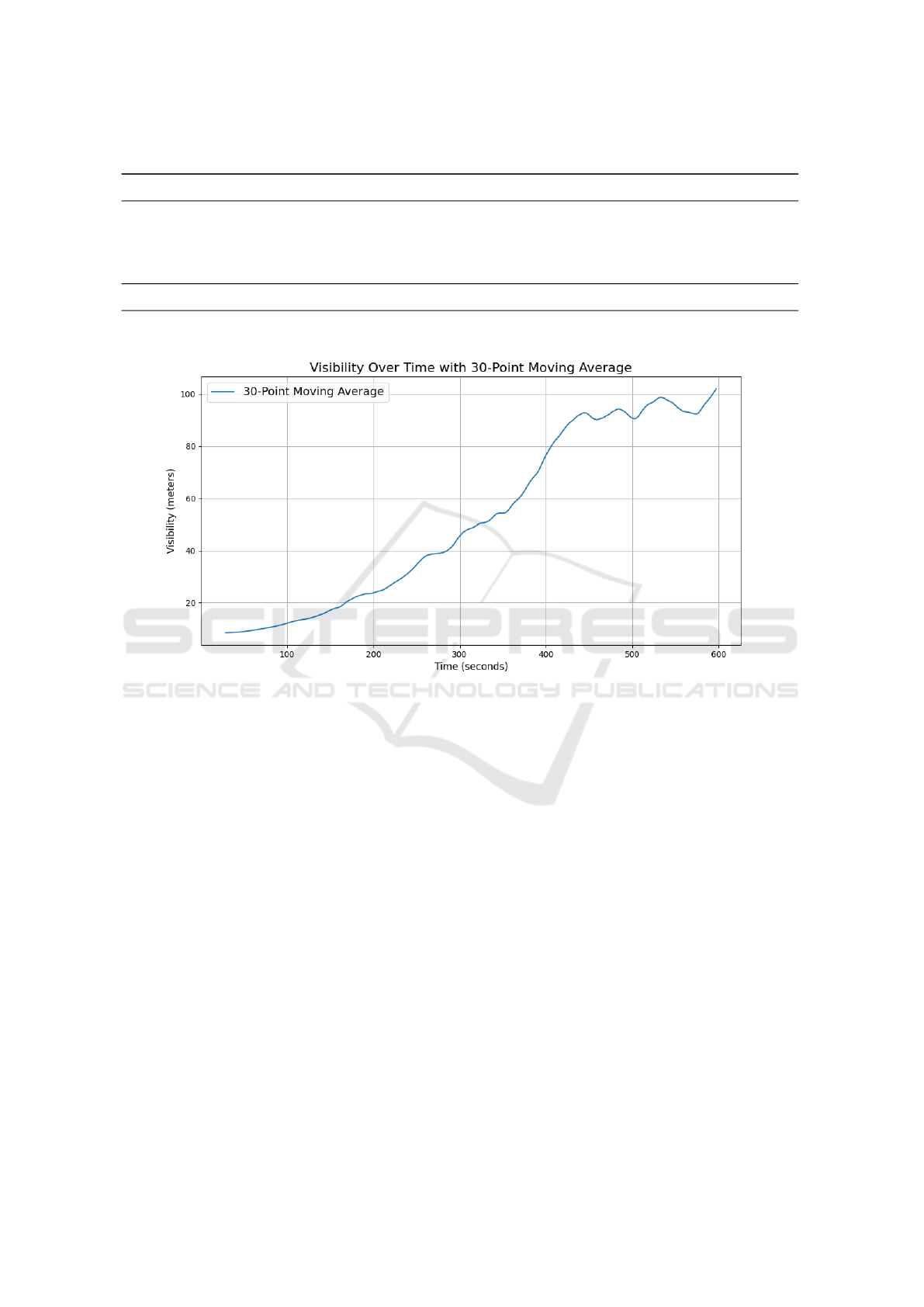

Figure 2: Average visibility across 10 measurements.

hall is saturated with fog until the measured visibil-

ity level drops to approximately 8 meters in the hall

as shown in Figure 2. The fog gradually dissipates

until the measured visibility reaches 100 meters. In

this case, the fog data is collected continuously for 10

minutes on average and synchronized with the visi-

bility level allowing for weather-level annotation for

the fog-visibility variables, resulting in 10 unique se-

quences of fog data. Table 2 is an overview of the col-

lected images and point clouds, moreover, Figure 7,

Figure 8, Figure 9 and (see Appendix) illustrate the

coupled effect on the images collected of distance on

one hand, and adverse weather such as rain and fog

on the other hand.
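The sequence counts reported for the dataset follow directly from the recording grid described above. The following is a minimal sketch, assuming that each of the ten fog sequences corresponds to one of the ten target distances (the text gives the number of fog sequences but does not list their distances explicitly). The dictionary layout and the setting labels are illustrative and are not part of the dataset's file format.

```python
from itertools import product

# Recording grid taken from the text; the record layout below is hypothetical.
DISTANCES_M = list(range(5, 55, 5))            # 5, 10, ..., 50 m
DRY_LIGHT_SETTINGS = ["light", "dim", "dark"]
RAIN_RATES_MM_H = [20, 40, 60, 80, 100]


def enumerate_sequences():
    """Return one record per recorded sequence."""
    sequences = []
    for light, dist in product(DRY_LIGHT_SETTINGS, DISTANCES_M):
        sequences.append({"weather": "dry", "setting": light, "distance_m": dist})
    for rate, dist in product(RAIN_RATES_MM_H, DISTANCES_M):
        sequences.append({"weather": "rain", "setting": f"{rate} mm/h", "distance_m": dist})
    # Assumption: one fog visibility ramp per target distance.
    for dist in DISTANCES_M:
        sequences.append({"weather": "fog", "setting": "visibility ramp", "distance_m": dist})
    return sequences


sequences = enumerate_sequences()
counts = {}
for seq in sequences:
    counts[seq["weather"]] = counts.get(seq["weather"], 0) + 1

print(counts, len(sequences))
```

Under these assumptions the grid yields 30 dry, 50 rain, and 10 fog sequences, which matches the 90 sequences reported for the dataset.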

The inherent characteristics of fog pose significant challenges in measuring visibility using state-of-the-art devices (Gultepe et al., 2007). Instruments based on obstruction and contamination measurements rely on comparing the scattering and diffusion of emitted and received laser light to determine visibility in foggy environments (Miclea et al., 2020; Lakra and Avishek, 2022; World Meteorological Organization, 2008).

At CARISSMA’s indoor test hall facility,

VISIC620 is used to measure localized visibility

starting from maximum fog density, corresponding

to a minimum visibility of approximately 8 meters

shown in Figure 8. The fog gradually dissipates, in-

creasing measured visibility as illustrated in Figure 2

until the traffic elements or target objects become

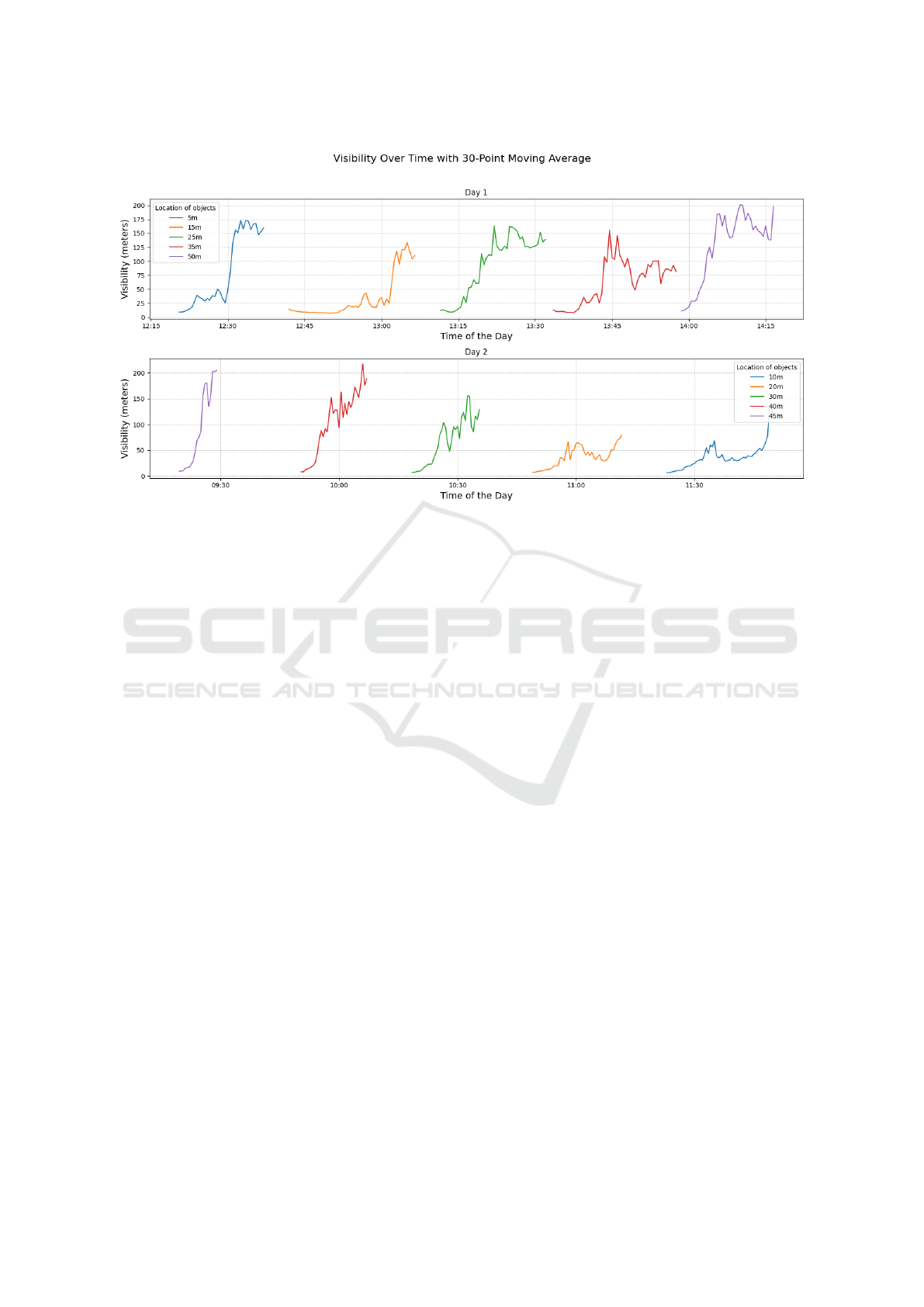

humanly visible. Figure 3 shows the visibility curves

for each experiment with time stamps, in which

the target objects are positioned at a fixed distance

from the sensors, during which the fog dissipates

gradually.

Figure 3: Measured visibility inside CARISSMA's test hall during two days of data collection under fog.

Visibility measurements fluctuate due to heat dissipation and convection as fog contacts cold surfaces, such as the hall's ground and side walls. This interaction causes movement of fog layers and variations in fog density, which affect the laser light received by the visibility measurement devices (World Meteorological Organization, 2008; SICK AG, 2018). Therefore, to mitigate these fluctuations, a representative test sample of images or point clouds should span at least 30 consecutive seconds to match the average visibility recorded around the same period, as demonstrated in the following evaluation section.
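The 30-second rule can be sketched as a sliding-window search over the visibility log. This is a minimal illustration, not the authors' tooling: the function names, the `(timestamp, visibility)` tuple layout, the tolerance value, and the synthetic linear ramp are all assumptions made for the example.

```python
from bisect import bisect_left, bisect_right

WINDOW_S = 30.0  # minimum span recommended in the text


def window_mean_visibility(vis_samples, t_start, window_s=WINDOW_S):
    """Mean visibility over [t_start, t_start + window_s].

    vis_samples: list of (timestamp_s, visibility_m), sorted by timestamp.
    """
    times = [t for t, _ in vis_samples]
    lo = bisect_left(times, t_start)
    hi = bisect_right(times, t_start + window_s)
    values = [v for _, v in vis_samples[lo:hi]]
    if not values:
        raise ValueError("no visibility samples inside the window")
    return sum(values) / len(values)


def find_window_start(vis_samples, target_m, tol_m, window_s=WINDOW_S):
    """First start time whose window-averaged visibility is within tol_m of target_m."""
    last_t = vis_samples[-1][0]
    for t, _ in vis_samples:
        if t + window_s > last_t:
            break  # the window would run past the end of the log
        if abs(window_mean_visibility(vis_samples, t, window_s) - target_m) <= tol_m:
            return t
    return None


def frames_in_window(frame_times, t_start, window_s=WINDOW_S):
    """Indices of frames whose timestamps fall inside the window."""
    return [i for i, t in enumerate(frame_times) if t_start <= t <= t_start + window_s]


# Synthetic data for illustration only: a linear ramp sampled at 1 Hz
# and camera frames at 10 Hz. Real visibility curves fluctuate.
vis = [(float(t), 8.0 + t / 5.0) for t in range(460)]
frames = [i / 10 for i in range(4600)]

start = find_window_start(vis, target_m=30.0, tol_m=0.5)
selected = frames_in_window(frames, start)
print(start, len(selected))
```

Averaging over the whole window, rather than matching single visibility readings, is what absorbs the short-term fluctuations described above.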

4 EVALUATION

The utility of the MuFoRa multimodal dataset, which captures target objects under adverse weather conditions and is designed for diverse applications in perception, deep learning, and computer vision research, is demonstrated through an example application. The performance of the pre-trained YOLO11x (Jocher et al., 2023) and DETR (R50 backbone) (Carion et al., 2020) object detection models is evaluated on sampled images of the dataset using a single metric, the confidence score.

The evaluation methodology involves two key steps:

Evaluation Dataset. The evaluation sample consists of representative images from dry, rain, and fog conditions with corresponding weather condition and distance annotations. Since the variations among frames within a given sequence under dry and rain conditions are minimal, only 20 images are selected from each sequence for evaluation. To conduct a standardized and robust assessment of the models under fog, images must be selected from 30 consecutive seconds or more to overcome fluctuations in the visibility measurements, as explained in the methods section. The starting distance for fog evaluation is 10 meters, and only five distances were used (10, 20, 30, 40, and 50 meters), under visibility levels of approximately 10, 30, and 100 meters.

Model Inference. Using the COCO pre-trained YOLO11x and DETR models, inference is run on the defined test sample of images to generate predictions. The models output predicted bounding boxes, class IDs, and confidence scores. The confidence threshold is set to 0.5. In detection mode, the confidence score for each detected object combines the objectness and class probability scores. The formula is as follows:

$$C = P_{\text{object}} \cdot P_{\text{class}} \tag{1}$$

where $C$ represents the confidence score for the detected object, $P_{\text{object}}$ denotes the objectness score, i.e., the probability that a bounding box contains any object, and $P_{\text{class}}$ is the probability that the object within the bounding box belongs to a specific class.

This score evaluates the model’s certainty that a

detected object is present and correctly classified. In

this case, a threshold is applied to C to filter out low-

confidence detections, which helps reduce false posi-

tives.
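The confidence score and the thresholding step can be sketched in a few lines. This is a minimal illustration of Equation (1) and the 0.5 threshold, not the internal code of either detector: the `Detection` class, the `filter_detections` helper, the example probabilities, and the choice of a greater-or-equal comparison are all assumptions.

```python
from dataclasses import dataclass

CONF_THRESHOLD = 0.5  # threshold stated in the text


@dataclass
class Detection:
    """Hypothetical container for one candidate detection."""
    label: str
    p_object: float  # objectness score
    p_class: float   # class probability

    @property
    def confidence(self) -> float:
        # Equation (1): C = P_object * P_class
        return self.p_object * self.p_class


def filter_detections(detections, threshold=CONF_THRESHOLD):
    """Keep detections whose confidence reaches the threshold."""
    return [d for d in detections if d.confidence >= threshold]


# Made-up scores for illustration only.
candidates = [
    Detection("person", p_object=0.9, p_class=0.8),
    Detection("person", p_object=0.6, p_class=0.7),
    Detection("car", p_object=0.95, p_class=0.5),
]
kept = filter_detections(candidates)
for det in kept:
    print(det.label, round(det.confidence, 2))
```

Because the two factors are multiplied, a detection can fall below the threshold even when one of them is high, as the third candidate shows.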

Figure 4 and Figure 5 show the confidence scores of both models for the Person class under dry, three fog, and five rain conditions at several distances. The confidence scores reflect the detection scores of one target object, i.e., the 4Active male pedestrian. With both models, the degradation of the confidence score is noticeable beyond 30 meters. While YOLO yields detections under dry weather conditions at all distances, its confidence score degrades more steeply. Moreover, YOLO fails to make detections under heavy rain and low visibility at greater distances, whereas DETR is more capable, especially under heavy rain at distances above 40 meters. This could be due to the global attention mechanism of DETR. A summary of the confidence scores of both models under severe conditions is shown in Table 3.

Figure 4: YOLO11x confidence score for the Person class under variations of fog, rain, and dry weather.

Figure 5: DETR(R50) confidence score for the Person class under variations of fog, rain, and dry weather.

Table 3: Detection confidence scores of YOLO11x and DETR(R50) for the Person class under various conditions.

| Weather Conditions | Distance (m) | YOLO11x Confidence Score | DETR Confidence Score |
|---|---|---|---|
| Dry | 50 | 0.72 | 0.97 |
| Rain (100 mm/h) | 40 | - | 0.87 |
| Rain (80 mm/h) | 50 | - | 0.83 |
| Rain (80 mm/h) | 40 | 0.51 | 0.87 |
| Fog-Visibility (100 m) | 20 | 0.84 | 0.99 |
| Fog-Visibility (30 m) | 10 | 0.89 | 0.99 |
| Fog-Visibility (10 m) | 10 | 0.83 | 0.98 |
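The Table 3 values can be compared programmatically. The sketch below copies the reported scores and assumes that a dash in the YOLO11x column means no detection above the 0.5 threshold, which is how the surrounding text describes those cases. The variable names and the "gap" summary are illustrative and are not a metric used in the paper.

```python
# (condition, distance_m, yolo11x_conf, detr_conf); None marks a "-" entry,
# read here as "no detection above the confidence threshold" (assumption).
TABLE_3 = [
    ("Dry", 50, 0.72, 0.97),
    ("Rain (100 mm/h)", 40, None, 0.87),
    ("Rain (80 mm/h)", 50, None, 0.83),
    ("Rain (80 mm/h)", 40, 0.51, 0.87),
    ("Fog-Visibility (100 m)", 20, 0.84, 0.99),
    ("Fog-Visibility (30 m)", 10, 0.89, 0.99),
    ("Fog-Visibility (10 m)", 10, 0.83, 0.98),
]

# Conditions where YOLO11x produced no detection but DETR did.
missed_by_yolo = [(cond, dist) for cond, dist, yolo, _ in TABLE_3 if yolo is None]

# Confidence gap (DETR minus YOLO11x) where both models detected the pedestrian.
gaps = [round(detr - yolo, 2) for _, _, yolo, detr in TABLE_3 if yolo is not None]
mean_gap = sum(gaps) / len(gaps)

print(missed_by_yolo)
print(gaps, round(mean_gap, 3))
```

A difference in confidence scores is only a rough indicator; it does not account for localization quality or false positives.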

The degradation of the confidence score is gradual under dry conditions, where the only variable changing is the location of the objects. In fog and rain, by contrast, the challenge is coupled with the degradation of image quality. Under the rain intensities used, the detection difficulty becomes visible at 30 meters, where the effect of distance coupled with rain noise starts to hinder the model's confidence significantly. Furthermore, reflections on the ground surface due to rain, as shown, for instance, in Figure 6, could reduce the confidence scores for other classes, e.g., Car. Under fog, the score depends strongly on the visibility level.

Figure 6: Reflection of the ground surface due to rain.

5 CONCLUSIONS

Ninety novel sequences of controllable and measurable weather scenarios are collected and published, including fog, rain, dry, dark, and dim light conditions. Furthermore, state-of-the-art object detection models are evaluated and critically compared on this unique public dataset in various adverse weather conditions. Each object detection model's limitation under adverse weather is illustrated accordingly; for a more comprehensive evaluation of each model, more metrics should be used.

Future research could focus on collecting dynamic datasets with finer increments of rain, fog, and sensor-object distance, in addition to expanding the datasets to include cyclists, groups of people, and strollers. Another approach includes collecting data in challenging constellations where the sensor input would be used for decision-making in autonomous driving in outdoor infrastructure. Furthermore, identifying real-world gaps, such as the absence of wind and dust in the test hall, and addressing these limitations while evaluating indoor versus real-world data could enhance reliability. Further work could explore challenging driving conditions like headlight reflections, diffusion, non-uniform lighting, and feature fusion from multiple sensor modalities to optimize performance in real-world autonomous driving scenarios.

ACKNOWLEDGEMENTS

The authors would like to express their gratitude to

the test engineers of CARISSMA, Christoph Trost

and Michael Graf, for their support in enabling the

successful execution of the tests, and Dr. Dagmar

Steinhauser for reviewing the dataset. The authors

thank the Bayerisches Verbundforschungsprogramm

(BayVFP) of the Freistaat Bavaria for funding the re-

search project BARCS (DIK0351) in the funding line

Digitization.

REFERENCES

Behret, V., Kushtanova, R., Fadl, I., Weber, S., Helmer,

T., and Palme, F. (2025). Sensor Calibration and Data

Analysis of the MuFoRa Dataset. Accepted at VIS-

APP 2025.

Bijelic, M., Gruber, T., Mannan, F., Kraus, F., Ritter,

W., Dietmayer, K., and Heide, F. (2020). Seeing

Through Fog Without Seeing Fog: Deep Multimodal

Sensor Fusion in Unseen Adverse Weather. In 2020

IEEE/CVF Conference on Computer Vision and Pat-

tern Recognition (CVPR), pages 11679–11689, Seat-

tle, WA, USA. IEEE.

Burnett, K., Wu, Y., Yoon, D. J., Schoellig, A. P., and

Barfoot, T. D. (2023). Are We Ready for Radar to

Replace Lidar in All-Weather Mapping and Localiza-

tion? arXiv:2203.10174 [cs].

Caesar, H., Bankiti, V., Lang, A. H., Vora, S., Liong, V. E.,

Xu, Q., Krishnan, A., Pan, Y., Baldan, G., and Bei-

jbom, O. (2020). nuScenes: A multimodal dataset for

autonomous driving. arXiv:1903.11027 [cs, stat].

Carion, N., Massa, F., Synnaeve, G., Usunier, N., Kirillov,

A., and Zagoruyko, S. (2020). End-to-end object de-

tection with transformers.

Cordts, M., Omran, M., Ramos, S., Rehfeld, T., Enzweiler,

M., Benenson, R., Franke, U., Roth, S., and Schiele,

B. (2016). The cityscapes dataset for semantic urban

scene understanding.

Deng, J., Shi, S., Li, P., Zhou, W., Zhang, Y., and Li, H.

(2021). Voxel R-CNN: Towards High Performance

Voxel-based 3D Object Detection. arXiv:2012.15712

[cs].

El-Shair, Z. A., Abu-raddaha, A., Cofield, A., Alawneh,

H., Aladem, M., Hamzeh, Y., and Rawashdeh, S. A.

(2024). SID: Stereo image dataset for autonomous

driving in adverse conditions.

Geiger, A., Lenz, P., Stiller, C., and Urtasun, R. (2013).

Vision meets robotics: The KITTI dataset. The Inter-

national Journal of Robotics Research, 32(11):1231–

1237.

Graf, M., Vriesman, D., and Brandmeier, T. (2023). Testmethodik zur untersuchung, validierung und absicherung von störeinflüssen auf umfeldsensoren durch witterung unter reproduzierbaren bedingungen. VDI Verlag, abs/1405.0312.

Gultepe, I., Tardif, R., Michaelides, S. C., Cermak, J., Bott, A., Bendix, J., Müller, M. D., Pagowski, M., Hansen, B., Ellrod, G., Jacobs, W., Toth, G., and Cober, S. G. (2007). Fog Research: A Review of Past Achievements and Future Perspectives. Pure and Applied Geophysics, 164(6-7):1121–1159.

Heinzler, R., Schindler, P., Seekircher, J., Ritter, W., and

Stork, W. (2019). Weather Influence and Classifica-

tion with Automotive Lidar Sensors.

Hu, X., Fu, C.-W., Zhu, L., and Heng, P.-A. (2019). Depth-

attentional features for single-image rain removal.

Jocher, G., Qiu, J., and Chaurasia, A. (2023). Ultralytics

YOLO.

Jokela, M., Kutila, M., and Pyykönen, P. (2019). Testing and Validation of Automotive Point-Cloud Sensors in Adverse Weather Conditions. Applied Sciences, 9:2341.

Kenk, M. A. and Hassaballah, M. (2020). DAWN: Ve-

hicle Detection in Adverse Weather Nature Dataset.

arXiv:2008.05402 [cs].

Lakra, K. and Avishek, K. (2022). A review on factors

influencing fog formation, classification, forecasting,

detection and impacts. Rendiconti Lincei. Scienze

Fisiche e Naturali, 33(2):319–353.

Liao, Y., Xie, J., and Geiger, A. (2022). KITTI-360: A

Novel Dataset and Benchmarks for Urban Scene Un-

derstanding in 2D and 3D. arXiv:2109.13410 [cs].

Mao, J., Niu, M., Jiang, C., Liang, H., Chen, J., Liang, X.,

Li, Y., Ye, C., Zhang, W., Li, Z., Yu, J., Xu, H., and

Xu, C. (2021). One Million Scenes for Autonomous

Driving: ONCE Dataset. arXiv:2106.11037 [cs].

Marathe, A., Ramanan, D., Walambe, R., and Kotecha,

K. (2023). WEDGE: A multi-weather autonomous

driving dataset built from generative vision-language

models. arXiv:2305.07528 [cs].

Miclea, R.-C., Dughir, C., Alexa, F., Sandru, F., and Silea,

I. (2020). Laser and LIDAR in a System for Visibil-

ity Distance Estimation in Fog Conditions. Sensors,

20(21):6322.

Ros, G., Sellart, L., Materzynska, J., Vazquez, D., and

Lopez, A. M. (2016). The SYNTHIA dataset: A

large collection of synthetic images for semantic seg-

mentation of urban scenes. In 2016 IEEE Conference

on Computer Vision and Pattern Recognition (CVPR),

pages 3234–3243. IEEE.

Sakaridis, C., Dai, D., and Van Gool, L. (2021). ACDC:

The adverse conditions dataset with correspondences

for semantic driving scene understanding. In 2021

IEEE/CVF International Conference on Computer Vi-

sion (ICCV), pages 10745–10755. IEEE.

Sezgin, F., Vriesman, D., Steinhauser, D., Lugner, R., and Brandmeier, T. (2023). Safe Autonomous Driving in Adverse Weather: Sensor Evaluation and Performance Monitoring. arXiv:2305.01336 [cs].

SICK AG (2018). Operating Instructions: VISIC620 Visi-

bility Measuring Device. SICK AG, 201.

Sun, P., Kretzschmar, H., Dotiwalla, X., Chouard, A., Pat-

naik, V., Tsui, P., Guo, J., Zhou, Y., Chai, Y., Caine,

B., Vasudevan, V., Han, W., Ngiam, J., Zhao, H., Tim-

ofeev, A., Ettinger, S., Krivokon, M., Gao, A., Joshi,

A., Zhang, Y., Shlens, J., Chen, Z., and Anguelov,

D. (2020). Scalability in Perception for Autonomous

Driving: Waymo Open Dataset. In 2020 IEEE/CVF

Conference on Computer Vision and Pattern Recog-

nition (CVPR), pages 2443–2451, Seattle, WA, USA.

IEEE.

Vriesman, D., Thoresz, B., Steinhauser, D., Zimmer, A.,

Britto, A., and Brandmeier, T. (2020). An experimen-

tal analysis of rain interference on detection and rang-

ing sensors. In 2020 IEEE 23rd International Con-

ference on Intelligent Transportation Systems (ITSC),

pages 1–5.

World Meteorological Organization (2008). Guide to Me-

teorological Instruments and Methods of Observa-

tion. WMO-No. 8. World Meteorological Organiza-

tion, Geneva, seventh edition.

Yu, F., Chen, H., Wang, X., Xian, W., Chen, Y., Liu, F.,

Madhavan, V., and Darrell, T. (2020). BDD100k:

A diverse driving dataset for heterogeneous multitask

learning.

Zhang, C., Huang, Z., Guo, H., Qin, L., Ang, M. H., and Rus, D. (2023). SMART-Rain: A Degradation Evaluation Dataset for Autonomous Driving in Rain. In 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pages 9691–9698, Detroit, MI, USA. IEEE.

Zhou, Y. and Tuzel, O. (2017). VoxelNet: End-to-End

Learning for Point Cloud Based 3D Object Detection.

arXiv:1711.06396 [cs].

APPENDIX

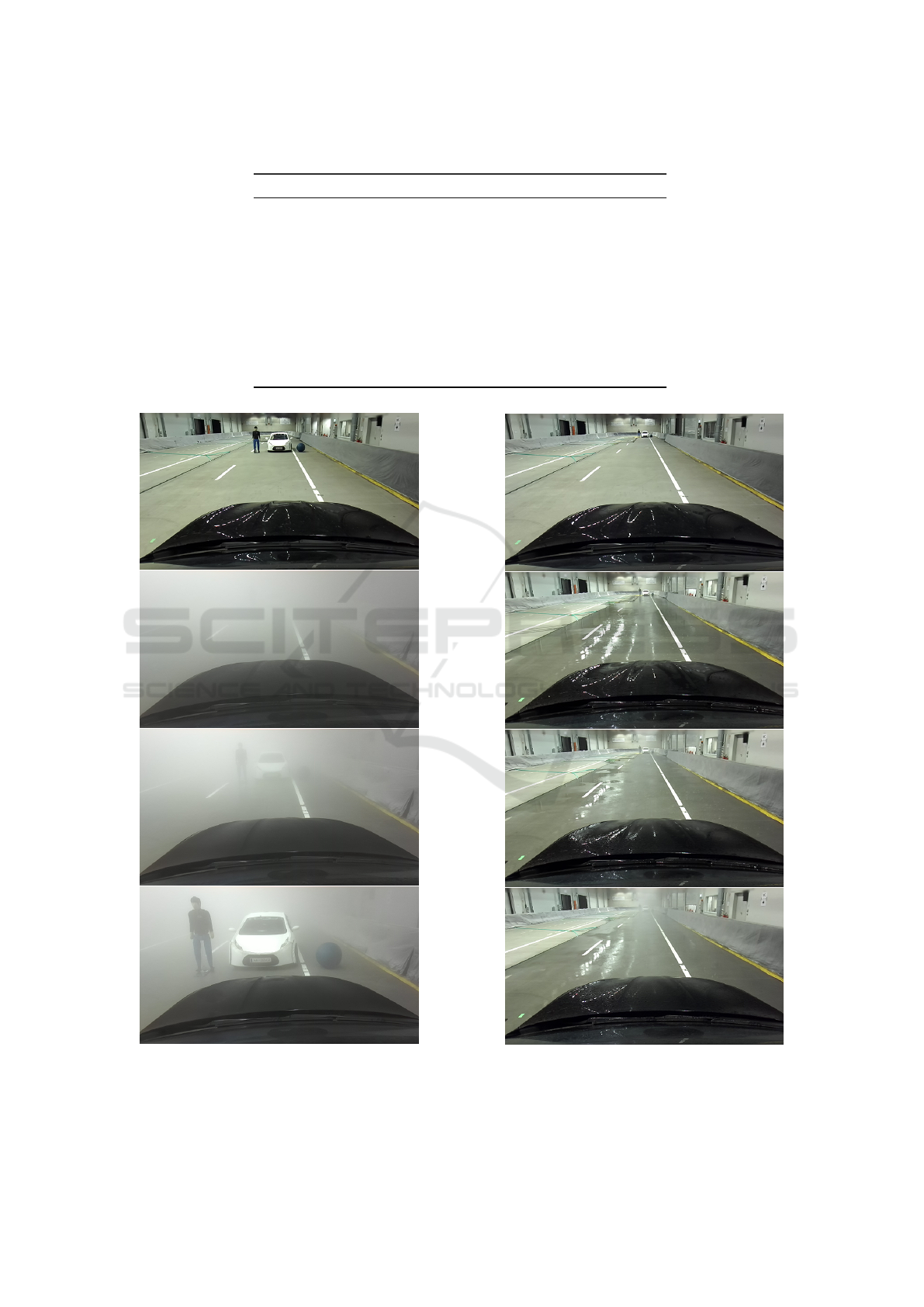

Figure 7: From top to bottom: Target objects positioned 45

meters from the sensors in dry conditions, and positioned

at 45, 35, 25, 15, and 5 meters respectively during the fog-

visibility level of approx. 35 meters.


Table 4: Sensor positions (x, y, z), in meters, relative to the total station origin over the five test days.

| Sensor | Day 1 | Day 2 | Day 3 | Day 4 | Day 5 |
|---|---|---|---|---|---|
| Qb2 left | (37.84, 3.70, 1.66) | (37.84, 3.72, 1.66) | (37.78, 3.40, 1.66) | (37.79, 3.38, 1.66) | (37.76, 3.39, 1.66) |
| Qb2 right | (37.80, 4.61, 1.66) | (37.81, 4.62, 1.66) | (37.79, 4.32, 1.66) | (37.79, 4.29, 1.66) | (37.77, 4.28, 1.66) |
| ZED2i | (37.86, 4.08, 1.62) | (37.85, 4.10, 1.62) | (37.82, 3.79, 1.62) | (37.82, 3.75, 1.62) | (37.79, 3.75, 1.62) |

Figure 8: From top to bottom: Target objects positioned 15

meters from the sensors in dry conditions, and positioned at

15, 10, and 5 meters respectively during the fog-visibility

level of approx. 8 meters.

Figure 9: From top to bottom: Target objects positioned 50

meters from the sensors in dry conditions, and during rain

precipitation of 20, 60, and 100 mm/h.
