One-Shot Polarization-Based Material Classification with Optimal Illumination

Miho Kurachi¹, Ryo Kawahara²ᵃ and Takahiro Okabe³ᵇ

¹ Department of Artificial Intelligence, Kyushu Institute of Technology, Iizuka, Fukuoka 820-8502, Japan

² Graduate School of Informatics, Kyoto University, Sakyo-ku, Kyoto 606-8501, Japan

³ Information Technology Track, Okayama University, Kita-ku, Okayama 700-8530, Japan

Keywords: Material Classification, Polarization, Illumination, Margin Maximization.

Abstract: Image-based classification of surface materials is important for machine vision applications such as visual inspection. In this paper, we propose a novel method for one-shot per-pixel classification of raw materials on the basis of polarimetric features such as the degree of linear polarization (DoLP) and the angle of linear polarization (AoLP). It is known that polarimetric features depend not only on the intrinsic properties of surface materials but also on the directions and wavelengths of the light sources. Accordingly, our proposed method jointly optimizes the non-negative light source intensities for feature extraction and the discriminant hyperplane in the feature space via margin maximization, so that different materials appear distinguishable. We conducted a number of experiments using real images captured with a light stage, and show that our method using a single input image works better than the existing methods using a single input image and comparably to those using multiple input images.

1 INTRODUCTION

Classifying material categories such as metals and plastics, materials themselves such as iron and aluminum, and their surface states such as rust, cracks, and scratches is important for computer vision applications such as visual inspection of metallic surfaces (Zheng et al., 2002; Pernkopf and O’Leary, 2003) and printed circuit boards (Tominaga and Okamoto, 2003; Ibrahim et al., 2010). In this study, we focus on planar and unpainted raw materials, and achieve appearance-based per-pixel material classification that works in a non-contact and non-destructive manner.

The polarimetric properties of reflected light, such as the degree of linear polarization (DoLP) and the angle of linear polarization (AoLP), play important roles in material classification (Wolff, 1990; Chen and Wolff, 1998). It is known that the DoLP of the reflected light depends on the refractive index of a surface material and behaves differently for specular and diffuse materials, and that the AoLPs of specular and diffuse materials differ by π/2 (Shurcliff, 1962). The latter is because, according to the Fresnel equations, specular reflectance (reflectivity) is maximal when the polarization direction is perpendicular to the outgoing plane, whereas diffuse reflectance (transmissivity) is maximal when it is parallel to the outgoing plane.

In general, the reflected light observed on an object surface consists of a diffuse reflection component and a specular reflection component, and the mixture ratio of those components also depends on the illumination condition. Specifically, the mixture ratio depends on both the direction and the wavelength of a light source through the roughness and the spectral reflectance of the surface. Therefore, the apparent DoLP and AoLP depend not only on the intrinsic properties of the object surface, i.e. its refractive index, roughness, and spectral reflectance, but also on the directions and wavelengths of the light sources (Kondo et al., 2020; Ichikawa et al., 2023).

Accordingly, we propose a novel method for polarization-based per-pixel material classification with optimal illumination. We consider a 7D polarimetric feature space based on the polarimetric image captured by a polarization camera with four-directional linear polarization filters. We show the relationship between the illumination condition, i.e. the intensities of multi-spectral and multi-directional light sources, and the polarimetric feature, and jointly optimize both the illumination condition for feature extraction and the discriminant hyperplane in the feature space via margin maximization. Moreover, we impose non-negativity constraints on the light source intensities, which achieves one-shot material classification that is applicable to dynamic objects as well.

ᵃ https://orcid.org/0000-0002-9819-3634

ᵇ https://orcid.org/0000-0002-2183-7112

Kurachi, M., Kawahara, R. and Okabe, T.

One-Shot Polarization-Based Material Classification with Optimal Illumination.

DOI: 10.5220/0013312700003912

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 20th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2025) - Volume 3: VISAPP, pages 738-745

ISBN: 978-989-758-728-3; ISSN: 2184-4321

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

The existing techniques for polarization-based material classification (Chen et al., 2009; Liang et al., 2022) often assume passive illumination, and therefore do not optimize the illumination condition under which the input images are taken. In the context of material classification based on grayscale or color images (Gu and Liu, 2012; Liu and Gu, 2014; Wang and Okabe, 2017), the illumination condition is optimized so that different materials appear distinguishable, but polarimetric cues are not exploited. In contrast to those existing methods, our proposed method takes both the polarimetric cues and the illumination condition into account.

To confirm the effectiveness of our proposed method, we conducted a number of experiments using images captured with a polarization camera and an LED-based light stage, i.e. multi-spectral and multi-directional light sources. We show that our method using a single input image performs better than the existing methods using a single input image and comparably to those using multiple input images.

The main contributions of this study are twofold. First, we propose a novel method for one-shot per-pixel material classification based on polarimetric cues under optimal illumination. Specifically, we show the relationship between the intensities of multi-spectral and multi-directional light sources and the polarimetric feature, and jointly optimize both the non-negative illumination condition for feature extraction and the discriminant hyperplane in the feature space via margin maximization. Second, we experimentally show that our method using a single input image works better than the existing methods using a single input image and comparably to those using multiple input images.

2 RELATED WORK

2.1 Polarization-Based Classification

In general, the reflected light observed on an object surface consists of a diffuse reflection component and a specular reflection component. It is known that the DoLP of the reflected light depends on the refractive index of a surface material and behaves differently for the specular and diffuse components, and that the AoLPs of those components differ by π/2 (Shurcliff, 1962). The seminal works by Wolff (Wolff, 1990) and Chen and Wolff (Chen and Wolff, 1998) study those properties of the specular and diffuse reflection components, and propose polarization-based methods for classifying metals and dielectrics. Unfortunately, however, their methods require a number of images taken under varying polarization states, and therefore have difficulty classifying the materials of objects in motion.

Polarimetric properties are often combined with other modalities for material classification. Chen et al. (Chen et al., 2009) show the effectiveness of multi-spectral and polarimetric imaging for classifying material categories. More recently, Liang et al. (Liang et al., 2022) show the effectiveness of multi-spectral and polarimetric images for material segmentation. These methods assume passive illumination, and therefore do not optimize the illumination condition under which the input images are taken. In contrast, our proposed method optimizes active illumination so that different materials appear distinguishable, since polarimetric properties such as DoLP and AoLP depend not only on the intrinsic properties of the object surface but also on the directions and wavelengths of the light sources.

2.2 Illumination Optimization

In general, coded illumination using multiple light sources is efficient in terms of SNR (Signal-to-Noise Ratio) and the number of required images. Coded illumination has been shown to be effective for image acquisition (Schechner et al., 2003), BRDF (Bidirectional Reflectance Distribution Function) measurement (Ghosh et al., 2007), shape recovery (Ma et al., 2007), and spectral reflectance recovery (Park et al., 2007). Whereas those methods optimize the coded illumination for reconstructing signals with high SNRs, our proposed method optimizes the illumination condition in terms of discriminative ability.

Coded illumination is also utilized for material classification. Gu and Liu (Gu and Liu, 2012) and Liu and Gu (Liu and Gu, 2014) propose an approach to per-pixel classification of raw materials based on spectral BRDFs. Specifically, they optimize the intensities of multi-spectral and multi-directional light sources for two-class and multi-class classification via linear SVMs and Fisher LDA, respectively. Unfortunately, however, because their optimal intensities are allowed to be negative, their methods require two images for two-class classification and multiple images for multi-class classification, taken under actual light sources with non-negative intensities; a light pattern with negative intensities cannot be realized physically in a single shot.

Wang and Okabe (Wang and Okabe, 2017) achieve per-pixel material classification from a single color image by jointly optimizing the light source intensities and the discriminant hyperplane under non-negativity constraints on the light source intensities. In contrast to those methods, our method takes the polarimetric cues as well as the illumination condition into account.

3 PROPOSED METHOD

3.1 Setup

Similar to the existing techniques (Gu and Liu, 2012; Liu and Gu, 2014; Wang and Okabe, 2017), we assume that objects of interest are planar and unpainted raw materials. Our proposed method illuminates those objects by using a light stage with L light sources, and then captures their images by using a polarization camera with four-directional linear polarization filters. We denote the four pixel values observed at a certain surface point through the four linear polarization filters (0°, 45°, 90°, and 135°) by $i_1$, $i_2$, $i_3$, and $i_4$.¹ Unless otherwise noted, we omit the pixel index since we address per-pixel classification.

For training, our proposed method uses L images, each of which is captured under one of the L light sources. For testing, our method uses a single image captured under the L light sources with the optimal intensities.

3.2 Two-Class Classification

Feature Space and Discriminant Hyperplane:

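We consider the 7D polarimetric feature $(i_1, i_2, i_3, i_4, \rho, c, s)^\top$ computed from the polarimetric image. Here, $\rho$ is the DoLP, and $c = \cos(2\phi)$ and $s = \sin(2\phi)$, where $\phi$ ($-\pi/2 \le \phi \le \pi/2$) is the AoLP. Note that, unlike $\phi$ itself, $c$ and $s$ are continuous at $\phi = \pm\pi/2$, because $\phi = \pi/2$ and $\phi = -\pi/2$ describe the same polarization direction.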
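As a minimal sketch of how the 7D feature could be computed at one pixel, the Python below assumes the standard linear Stokes relations $s_0 = (i_1+i_2+i_3+i_4)/2$, $s_1 = i_1 - i_3$, $s_2 = i_2 - i_4$, $\rho = \sqrt{s_1^2+s_2^2}/s_0$, and $\phi = \tfrac{1}{2}\operatorname{atan2}(s_2, s_1)$. The text above does not state how DoLP and AoLP are computed, so these relations and the function name `polarimetric_feature` are illustrative assumptions.

```python
import math


def polarimetric_feature(i1, i2, i3, i4):
    """Return the 7D feature (i1, i2, i3, i4, rho, c, s) of one pixel.

    i1..i4 are the values behind the 0, 45, 90, and 135 degree linear
    polarizers. The linear Stokes relations below are an assumption.
    """
    s0 = 0.5 * (i1 + i2 + i3 + i4)  # total intensity
    s1 = i1 - i3                    # 0 deg minus 90 deg
    s2 = i2 - i4                    # 45 deg minus 135 deg
    if s0 <= 0.0:
        raise ValueError("total intensity must be positive")
    rho = math.hypot(s1, s2) / s0   # DoLP
    phi = 0.5 * math.atan2(s2, s1)  # AoLP in [-pi/2, pi/2]
    # (cos 2phi, sin 2phi) maps phi = +pi/2 and phi = -pi/2, which describe
    # the same polarization direction, to the same point.
    return (i1, i2, i3, i4, rho, math.cos(2.0 * phi), math.sin(2.0 * phi))


# Horizontally polarized light: DoLP = 1, AoLP = 0, so (c, s) = (1, 0).
print(polarimetric_feature(1.0, 0.5, 0.0, 0.5))
# Nearly vertical light just above and below phi = +/-pi/2 gives almost the
# same (c, s) although phi itself jumps by about pi.
print(polarimetric_feature(0.0, 0.5 + 1e-9, 1.0, 0.5)[4:])
print(polarimetric_feature(0.0, 0.5, 1.0, 0.5 + 1e-9)[4:])
```

Under these relations, a polarimetric vector of $(1/4, 1/4, 1/4, 1/4, 0, 0, 0)^\top$ yields $(i_1+i_2+i_3+i_4)/4 = s_0/2$, i.e. the $s_0$ component up to a constant factor.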

Our proposed method finds the linear discriminant hyperplane defined by

$$\sum_{d=1}^{4} w'_d\, i_d + \|\mathbf{w}\|_2 \left( w'_5\, \rho + w'_6\, c + w'_7\, s \right) + b = 0 \tag{1}$$

in the 7D feature space. Here, we call the set of light source intensities $\mathbf{w} = (w_1, w_2, w_3, \ldots, w_L)^\top$ the light source vector, the set of weights of the polarimetric features $\mathbf{w}' = (w'_1, w'_2, w'_3, \ldots, w'_7)^\top$ the polarimetric vector, and $b$ the bias. We explain later why the 2-norm of the light source vector $\|\mathbf{w}\|_2$ is required.

¹ We assume that the four pixel values are obtained per pixel via demosaicing.

Polarimetric Feature and Illumination:

We denote the four sets of pixel values observed under each of the L light sources with unit intensity by $\mathbf{x}_d = (x_{d1}, x_{d2}, x_{d3}, \ldots, x_{dL})^\top$ ($d = 1, 2, 3, 4$). According to the superposition principle of illumination, the pixel value $i_d$ captured under the L light sources is represented as

$$i_d = \mathbf{w}^\top \mathbf{x}_d \tag{2}$$

by using the light source vector $\mathbf{w}$. Note that eq. (2) is a projection from the 4L-dimensional space to the 4D space, and it acts as feature extraction.
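Eq. (2) can be illustrated with a small sketch. Assuming the per-pixel training data are stored as four length-L lists $\mathbf{x}_1, \ldots, \mathbf{x}_4$ (a hypothetical layout, with the hypothetical helper names `pixel_values` and `dolp`), the code synthesizes the four pixel values for a given light source vector. It also shows that $i_1, \ldots, i_4$ scale linearly with $\mathbf{w}$, whereas the DoLP (computed here with the standard linear Stokes relations, an assumption) does not change when $\mathbf{w}$ is scaled.

```python
import math


def pixel_values(w, x):
    """Superposition, eq. (2): i_d = w^T x_d for d = 1..4.

    w: L non-negative light source intensities.
    x: four lists, x[d][l] = value behind polarizer d under light l
       at unit intensity (a hypothetical per-pixel layout).
    """
    return [sum(wl * xdl for wl, xdl in zip(w, xd)) for xd in x]


def dolp(i):
    # Standard linear Stokes relations (an assumption, not from the text).
    s0 = 0.5 * sum(i)
    return math.hypot(i[0] - i[2], i[1] - i[3]) / s0


# Toy pixel observed under L = 3 lights (made-up numbers).
x = [[0.9, 0.2, 0.5],   # 0 deg
     [0.5, 0.4, 0.5],   # 45 deg
     [0.1, 0.6, 0.5],   # 90 deg
     [0.5, 0.4, 0.5]]   # 135 deg
w = [1.0, 0.5, 0.0]
i = pixel_values(w, x)
i2 = pixel_values([2.0 * wl for wl in w], x)
print(i, dolp(i))
print(i2, dolp(i2))  # intensities double, DoLP is unchanged
```

This scale invariance of $\rho$ (and likewise of $c$ and $s$) is what the factor $\|\mathbf{w}\|_2$ in eq. (1) compensates for.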

Substituting eq. (2) into eq. (1), we can rewrite the linear discriminant hyperplane as

$$\sum_{d=1}^{4} w'_d\, \mathbf{w}^\top \mathbf{x}_d + \|\mathbf{w}\|_2 \left( w'_5\, \rho(\mathbf{w}) + w'_6\, c(\mathbf{w}) + w'_7\, s(\mathbf{w}) \right) + b = 0. \tag{3}$$

Here, $\rho(\mathbf{w})$, $c(\mathbf{w})$, and $s(\mathbf{w})$ explicitly indicate that those features depend on the light source vector $\mathbf{w}$, but we omit $(\mathbf{w})$ unless otherwise noted to keep the notation simple.

Joint Optimization:

Our proposed method jointly optimizes both the illumination condition, i.e. the light source vector $\mathbf{w}$, and the discriminant hyperplane, i.e. the pair of the polarimetric vector $\mathbf{w}'$ and the bias $b$, via margin maximization. In the 7D feature space, the distance between a point $(i_1, i_2, i_3, i_4, \rho, c, s)^\top$ and the discriminant hyperplane in eq. (3) is given by

$$\frac{\left| \sum_{d=1}^{4} w'_d\, \mathbf{w}^\top \mathbf{x}_d + \|\mathbf{w}\|_2 \left( w'_5\, \rho + w'_6\, c + w'_7\, s \right) + b \right|}{\sqrt{\|\mathbf{w}\|_2^2 \, \|\mathbf{w}'\|_2^2}}. \tag{4}$$

Note that, in the same manner as the formulation of SVMs (Vapnik, 1998), we can set the numerator to 1 for the set of points nearest to the discriminant hyperplane, i.e. the support vectors, without loss of generality. This is because both the first and second terms of the numerator are proportional to the overall scales of $\mathbf{w}$ and $\mathbf{w}'$: $\rho$, $c$, and $s$ do not change when $\mathbf{w}$ is scaled, so the factor $\|\mathbf{w}\|_2$ makes the second term scale with $\mathbf{w}$ in the same way as the first term. This is why $\|\mathbf{w}\|_2$ is required in eq. (1).
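The scaling argument can be checked numerically. The sketch below evaluates the numerator and the distance of eq. (4) for a toy two-light pixel, then rescales $\mathbf{w}$ by $t$, $\mathbf{w}'$ by $u$, and $b$ by $tu$: the numerator is multiplied by $tu$ while the distance is unchanged, which is what allows the numerator of the support vectors to be fixed to 1. The function names, the data, and the standard linear Stokes relations used for $\rho$, $c$, and $s$ are illustrative assumptions.

```python
import math


def features(w, x):
    """Pixel values (eq. (2)) and (rho, c, s) under light source vector w."""
    i = [sum(wl * v for wl, v in zip(w, xd)) for xd in x]
    s0 = 0.5 * sum(i)
    s1, s2 = i[0] - i[2], i[1] - i[3]
    rho = math.hypot(s1, s2) / s0
    phi = 0.5 * math.atan2(s2, s1)
    return i, rho, math.cos(2 * phi), math.sin(2 * phi)


def numerator(w, wp, b, x):
    """Signed left-hand side of eq. (3)."""
    i, rho, c, s = features(w, x)
    norm_w = math.sqrt(sum(v * v for v in w))
    linear = sum(wp[d] * i[d] for d in range(4))
    return linear + norm_w * (wp[4] * rho + wp[5] * c + wp[6] * s) + b


def distance(w, wp, b, x):
    """Eq. (4): |numerator| / sqrt(||w||^2 ||w'||^2)."""
    nw2 = sum(v * v for v in w)
    nwp2 = sum(v * v for v in wp)
    return abs(numerator(w, wp, b, x)) / math.sqrt(nw2 * nwp2)


x = [[0.8, 0.3], [0.5, 0.6], [0.2, 0.5], [0.5, 0.2]]  # toy data, L = 2
w, wp, b = [1.0, 0.4], [0.3, -0.2, 0.1, 0.5, -1.0, 0.7, 0.2], -0.1
t, u = 3.0, 0.5
w2 = [t * v for v in w]
wp2 = [u * v for v in wp]
print(numerator(w2, wp2, t * u * b, x) / numerator(w, wp, b, x))  # approx. t * u
print(distance(w, wp, b, x), distance(w2, wp2, t * u * b, x))     # equal
```

If `norm_w` were dropped from `numerator`, the second term would scale only with $u$, so the ratio would no longer equal $tu$ whenever $w'_5$, $w'_6$, or $w'_7$ is nonzero.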

Hence, the optimization results in

$$\min_{\mathbf{w}, \mathbf{w}', b, \{\xi_n\}} \; \frac{1}{2} \|\mathbf{w}\|_2^2 \, \|\mathbf{w}'\|_2^2 + \frac{\alpha}{N} \sum_{n=1}^{N} \xi_n \tag{5}$$

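subject to

$$y_n \left[ \sum_{d=1}^{4} w'_d\, \mathbf{w}^\top \mathbf{x}_{nd} + \|\mathbf{w}\|_2 \left( w'_5\, \rho_n + w'_6\, c_n + w'_7\, s_n \right) + b \right] \ge 1 - \xi_n \quad (n = 1, 2, 3, \ldots, N), \tag{6}$$

$$\xi_n \ge 0 \quad (n = 1, 2, 3, \ldots, N), \tag{7}$$

$$w_l \ge 0 \quad (l = 1, 2, 3, \ldots, L). \tag{8}$$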

VISAPP 2025 - 20th International Conference on Computer Vision Theory and Applications


Here, $y_n$ and $\xi_n$ are the label (+1 or −1) and the slack variable of the n-th sample, respectively. We denote the number of training samples by N and the weight of the penalty term by α/N. In order to achieve one-shot classification, we impose the non-negativity constraints on the light source intensities in eq. (8), so that the optimized illumination can be realized by the actual light sources in a single capture.
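For fixed parameters, the smallest slack satisfying eqs. (6) and (7) is the hinge loss $\xi_n = \max(0,\, 1 - y_n f_n)$, where $f_n$ denotes the bracketed term of eq. (6). The minimal sketch below, assuming the $f_n$ values have already been computed (for example with eq. (3)), evaluates the objective of eq. (5) and checks the non-negativity constraint of eq. (8). The function name `objective` and the numbers are hypothetical.

```python
def objective(w, wp, f, y, alpha):
    """Value of eq. (5) with each slack set to its smallest feasible value.

    w: light source vector, wp: polarimetric vector (length 7),
    f: decision values f_n (bracketed term of eq. (6)), y: labels +1/-1.
    Returns (objective value, list of slacks).
    """
    if any(wl < 0.0 for wl in w):
        raise ValueError("eq. (8) violated: negative light intensity")
    n = len(f)
    xi = [max(0.0, 1.0 - yn * fn) for fn, yn in zip(f, y)]  # eqs. (6)-(7)
    margin_term = 0.5 * sum(v * v for v in w) * sum(v * v for v in wp)
    return margin_term + alpha / n * sum(xi), xi


w = [1.0, 0.0, 2.0]
wp = [0.5, 0.5, 0.0, 0.0, 1.0, 0.0, 0.0]
f = [1.5, 0.2, -2.0, 0.5]   # toy decision values
y = [1, 1, -1, 1]
value, xi = objective(w, wp, f, y, alpha=10.0)
print(value, xi)
```

Only the second and fourth samples lie inside the margin here, so only their slacks are positive.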

We use an alternating optimization technique to solve the above optimization, because when either the light source vector $\mathbf{w}$ or the pair of the polarimetric vector and the bias $\{\mathbf{w}', b\}$ is fixed, the problem approximately reduces to quadratic programming with respect to the other. Specifically, we iteratively update one of them: we fix $\mathbf{w}$ and update $\{\mathbf{w}', b\}$ via quadratic programming, and then we fix $\{\mathbf{w}', b\}$ together with $\{\rho(\mathbf{w}), c(\mathbf{w}), s(\mathbf{w})\}$, evaluated at the $\mathbf{w}$ computed in the previous iteration, and update $\mathbf{w}$ via quadratic programming. In our current implementation, we set the initial light source vector $\mathbf{w}$ to $(1, 1, 1, \ldots, 1)^\top$.

3.3 Multi-Class Classification

Combination of Two-Class Classification:

We combine two-class classifiers for multi-class classification on the basis of a binary tree. Consider 4-class classification as an example. First, we find the two-class classifier that separates the four classes (A, B, C, and D) into {A, B} and {C, D}. Second, we find the two-class classifier that discriminates A from B, and the two-class classifier that discriminates C from D. In general, since each two-class classifier is an internal node of a binary tree whose K leaves are the classes, we require M = K − 1 two-class classifiers for K-class classification.
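The binary-tree combination can be sketched as follows. Each internal node holds one two-class classifier that sends a sample to its left or right subtree according to the sign of its decision value, and a leaf is simply a class label, so a tree with K leaves contains exactly K − 1 classifiers. In this hypothetical sketch, the node classifiers are toy placeholders; in the proposed method they would be the hyperplanes of eq. (9), all sharing one light source vector.

```python
class Node:
    """Internal node holding one two-class classifier.

    classifier(feature) >= 0 sends the sample to `left`, otherwise to
    `right`; each child is another Node or a class label (a leaf).
    """

    def __init__(self, classifier, left, right):
        self.classifier = classifier
        self.left = left
        self.right = right


def predict(tree, feature):
    """Walk from the root to a leaf using the signs of the classifiers."""
    node = tree
    while isinstance(node, Node):
        node = node.left if node.classifier(feature) >= 0 else node.right
    return node


def count_classifiers(tree):
    """Number of two-class classifiers (internal nodes) in the tree."""
    if not isinstance(tree, Node):
        return 0
    return 1 + count_classifiers(tree.left) + count_classifiers(tree.right)


# Four classes as in the text: {A, B} vs. {C, D}, then A vs. B and C vs. D.
# The lambdas are toy stand-ins for learned hyperplanes (made-up rules).
tree = Node(lambda z: z[0],                  # {A, B} vs. {C, D}
            Node(lambda z: z[1], "A", "B"),  # A vs. B
            Node(lambda z: z[1], "C", "D"))  # C vs. D
print(predict(tree, [0.3, -0.2]))  # -> B
print(count_classifiers(tree))     # -> 3 = K - 1
```

A balanced split like the one above keeps the test-time path short (two decisions for four classes), but any binary tree over the K classes needs the same K − 1 classifiers.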

Discriminant Hyperplanes:

In a similar manner to the two-class classifier formulated by eq. (3), we represent the m-th ($m = 1, 2, 3, \ldots, M$) linear discriminant hyperplane as

$$\sum_{d=1}^{4} w'_{md}\, \mathbf{w}^\top \mathbf{x}_d + \|\mathbf{w}\|_2 \left( w'_{m5}\, \rho(\mathbf{w}) + w'_{m6}\, c(\mathbf{w}) + w'_{m7}\, s(\mathbf{w}) \right) + b_m = 0. \tag{9}$$

Here, $\mathbf{w}'_m$ and $b_m$ are the m-th polarimetric vector and bias. Note that we achieve one-shot multi-class classification by sharing a single light source vector $\mathbf{w}$ among the M classifiers.

Joint Optimization:

We jointly optimize the light source vector w

w

w and the

set of the polarimetric vector and bias {w

w

w

′

m

,b

m

} (m =

1,2,3,...,M) in a similar manner to the two-class clas-

sification. Specifically, the joint optimization is for-

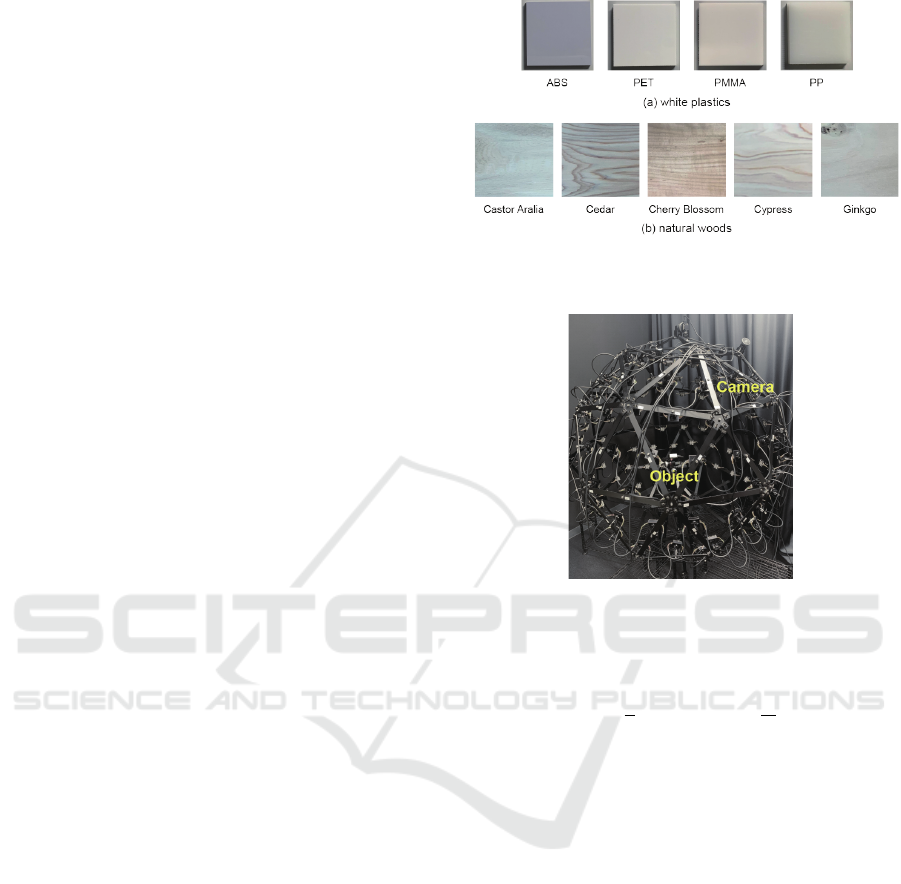

Figure 1: Two sets of raw materials with similar appear-

ances: (a) white plastics and (b) natural woods.

Figure 2: Our Kyutech-OU light stage II for illuminating an

object of interest located at the center.

mulated as

$$\min_{\mathbf{w}, \{\mathbf{w}'_m, b_m\}, \{\xi_{mn}\}} \; \sum_{m=1}^{M} \left( \frac{1}{2} \|\mathbf{w}\|_2^2 \, \|\mathbf{w}'_m\|_2^2 + \frac{\alpha}{N} \sum_{n=1}^{N} \xi_{mn} \right) \tag{10}$$

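subject to

$$y_{mn} \left[ \sum_{d=1}^{4} w'_{md}\, \mathbf{w}^\top \mathbf{x}_{mnd} + \|\mathbf{w}\|_2 \left( w'_{m5}\, \rho_{mn} + w'_{m6}\, c_{mn} + w'_{m7}\, s_{mn} \right) + b_m \right] \ge 1 - \xi_{mn} \quad (m = 1, 2, 3, \ldots, M;\ n = 1, 2, 3, \ldots, N), \tag{11}$$

$$\xi_{mn} \ge 0 \quad (m = 1, 2, 3, \ldots, M;\ n = 1, 2, 3, \ldots, N), \tag{12}$$

$$w_l \ge 0 \quad (l = 1, 2, 3, \ldots, L). \tag{13}$$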

In a similar manner to the two-class classification, we solve the above optimization via alternating optimization. Specifically, we set the initial light source vector $\mathbf{w}$ to $(1, 1, 1, \ldots, 1)^\top$, and then iteratively update the set $\{\mathbf{w}'_m, b_m\}$ ($m = 1, 2, 3, \ldots, M$) with $\mathbf{w}$ fixed, and vice versa.


Table 1: The classification accuracies (%) of the two-class classification for the white plastics: ours, ours with fixed $\mathbf{w}'$, ours with fixed $\mathbf{w}$, Gu and Liu, SegFormer with $s_0$, and SegFormer with $(s_0, s_1, s_2)$ from left to right.

| Pair | Ours | Ours with fixed $\mathbf{w}'$ | Ours with fixed $\mathbf{w}$ | Gu and Liu | SegFormer with $s_0$ | SegFormer with $(s_0, s_1, s_2)$ |
|---|---|---|---|---|---|---|
| Number of images | single | single | single | two | single | single |
| ABS vs. PET | 100.00 | 100.00 | 100.00 | 100.00 | 87.99 | 99.94 |
| ABS vs. PMMA | 100.00 | 100.00 | 100.00 | 100.00 | 94.75 | 83.15 |
| ABS vs. PP | 99.94 | 100.00 | 99.88 | 100.00 | 99.61 | 90.38 |
| PET vs. PMMA | 100.00 | 99.94 | 86.22 | 96.27 | 53.28 | 50.67 |
| PET vs. PP | 100.00 | 100.00 | 100.00 | 100.00 | 99.87 | 83.96 |
| PMMA vs. PP | 100.00 | 100.00 | 100.00 | 100.00 | 99.66 | 99.98 |

Table 2: The classification accuracies (%) of the two-class classification for the natural woods: ours, ours with fixed $\mathbf{w}'$, ours with fixed $\mathbf{w}$, Gu and Liu, SegFormer with $s_0$, and SegFormer with $(s_0, s_1, s_2)$ from left to right.

| Pair | Ours | Ours with fixed $\mathbf{w}'$ | Ours with fixed $\mathbf{w}$ | Gu and Liu | SegFormer with $s_0$ | SegFormer with $(s_0, s_1, s_2)$ |
|---|---|---|---|---|---|---|
| Number of images | single | single | single | two | single | single |
| Castor Aralia vs. Ginkgo | 100.00 | 99.96 | 98.36 | 99.60 | 99.28 | 99.97 |
| Cedar vs. Cypress | 99.16 | 99.10 | 75.36 | 98.90 | 51.45 | 53.65 |
| Cedar vs. Cherry Blossom | 99.74 | 99.44 | 98.00 | 99.26 | 97.52 | 93.76 |
| Cypress vs. Ginkgo | 97.30 | 94.12 | 80.74 | 92.82 | 91.18 | 83.04 |

4 EXPERIMENTS

In this section, we explain our experimental setup, and then report our experimental results for two-class and multi-class classification. We show that our proposed method is particularly effective in the more challenging scenario, i.e. one-shot multi-class classification.

4.1 Experimental Setup

We tested two sets of raw materials with similar appearances. One is the set of 4 white plastics: ABS, PET, PMMA, and PP in Figure 1 (a). The other is the set of 5 natural woods: Castor Aralia, Cedar, Cherry Blossom, Cypress, and Ginkgo in Figure 1 (b). The textures of Cedar, Cherry Blossom, and Cypress are more prominent than those of Castor Aralia and Ginkgo.

We captured images of those objects by using a FLIR BFS-U3-51S5P-C polarization camera and our Kyutech-OU light stage II in Figure 2. Similar to the existing light stages (Gu and Liu, 2012; Liu and Gu, 2014; Wang and Okabe, 2017), the light stage consists of LED clusters at different directions, and each cluster has narrow-band LEDs with different spectral intensities. In our experiments, we used the images taken under 92 (= L) light sources: 23 directions × 4 spectral intensities.

To confirm the effectiveness of our proposed method, we compared the performance of the following six methods:

• Ours: Our method jointly optimizes the light source vector $\mathbf{w}$ for feature extraction and the polarimetric vector $\mathbf{w}'$ (and the bias $b$) for the discriminant hyperplane in the feature space. Ours achieves one-shot classification by imposing the non-negativity constraints on the light source intensities.

• Ours with fixed $\mathbf{w}'$: This method is used for the ablation study of the polarimetric feature. Specifically, we fix the polarimetric vector as $\mathbf{w}' = (1/4, 1/4, 1/4, 1/4, 0, 0, 0)^\top$ in our method. In other words, it uses the $s_0$ component of the Stokes vector (up to a constant factor) under the optimized illumination.

• Ours with fixed $\mathbf{w}$: This method is used for the ablation study of the illumination optimization. Specifically, we fix the light source vector as $\mathbf{w} = (1, 1, 1, \ldots, 1)^\top$ in our method. In other words, it uses the polarimetric feature under unoptimized illumination.

• Gu and Liu (Gu and Liu, 2012): This method optimizes the light source intensities for material classification on the basis of SVMs. Because the non-negativity constraints are not imposed on the light source intensities, it requires two images for two-class classification, and (K + 1) images for K-class classification when it combines two-class classifiers in a one-vs-the-rest manner. In other words, it requires an additional image to compensate for virtual light sources with negative intensities.

• SegFormer (Xie et al., 2021) with $s_0$: SegFormer is one of the state-of-the-art transformer-based methods. To investigate the performance of a deep learning-based method with intensity images, the input to SegFormer is the $s_0$ component of the Stokes vector when $\mathbf{w} = (1, 1, 1, \ldots, 1)^\top$.

• SegFormer (Xie et al., 2021) with $(s_0, s_1, s_2)$: To investigate the performance of a deep learning-based method with polarimetric images, the input to SegFormer is the $s_0$, $s_1$, and $s_2$ components of the Stokes vector when $\mathbf{w} = (1, 1, 1, \ldots, 1)^\top$.

Table 3: The classification accuracies (%) of the four-class classification for (a) the white plastics and (b) the natural woods: ours, ours with fixed $\mathbf{w}'$, ours with fixed $\mathbf{w}$, Gu and Liu, SegFormer with $s_0$, and SegFormer with $(s_0, s_1, s_2)$ from left to right.

| Dataset | Ours | Ours with fixed $\mathbf{w}'$ | Ours with fixed $\mathbf{w}$ | Gu and Liu | SegFormer with $s_0$ | SegFormer with $(s_0, s_1, s_2)$ |
|---|---|---|---|---|---|---|
| Number of images | single | single | single | five | single | single |
| (a) White plastics | 99.88 | 91.13 | 94.41 | 90.72 | 71.93 | 68.24 |
| (b) Natural woods | 87.91 | 81.02 | 72.06 | 96.49 | 68.48 | 68.02 |

We used the MATLAB implementation (quadprog) of the interior-point-convex algorithm for the first iteration, followed by the active-set algorithm (Gill et al., 1981), for the alternating optimization of eq. (5) and eq. (10). We empirically set the parameter α in those equations to $10^6$ and $10^5$ for the datasets of white plastics and natural woods, respectively. For all of the above methods, we used 900/2,500 pixels per plastic/wood material in the test phase. In the training phase, we used another 400 pixels per material for all of the above methods other than SegFormer.

For training SegFormer, we used 48 images with 900 pixels per plastic material and 88 images with 2,500 pixels per wood material. We found that the performance of SegFormer depends on the initial conditions to some extent. Therefore, we report the average of five trials for SegFormer in Section 4.2 and Section 4.3.

4.2 Two-Class Classification

First, in Table 1, we summarize the accuracies of the two-class classification for the white plastics: ours, ours with fixed $\mathbf{w}'$, ours with fixed $\mathbf{w}$, Gu and Liu (Gu and Liu, 2012), SegFormer (Xie et al., 2021) with $s_0$, and SegFormer with $(s_0, s_1, s_2)$ from left to right. Note that Gu and Liu requires two input images taken under different illumination conditions, whereas the other methods use only a single image per two-class classifier in the test phase. We tested the six pairs of the four plastics: ABS vs. PET, ABS vs. PMMA, ABS vs. PP, PET vs. PMMA, PET vs. PP, and PMMA vs. PP from top to bottom.

Basically, we can see that the classification of the

white plastics is relatively easy. Although the accu-

racies are often saturated, we can see that ours works

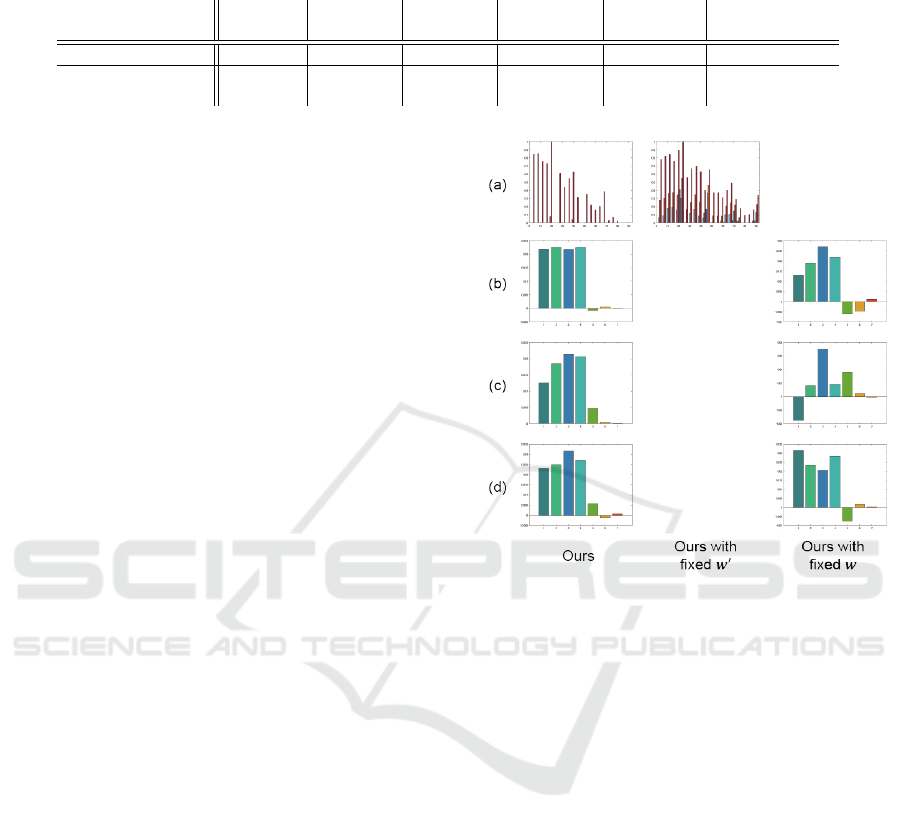

Figure 3: The optimized parameters for the four-class clas-

sification of the white plastics: Ours, Ours with fixed w

w

w

′

,

and Ours with fixed w

w

w from left to right, and (a) the light

source vector w

w

w and the polarimetric vectors (b) w

w

w

′

1

, (c) w

w

w

′

2

,

and (c) w

w

w

′

3

.

better than ours with fixed w

w

w and Gu and Liu with

two input images. In addition, Table1 also shows that

ours outperforms SegFormer with s

0

and (s

0

,s

1

,s

2

);

it supports the effectiveness of the illumination opti-

mization.

Second, we summarize the accuracies of the two-class classification for the natural woods in Table 2. We tested four pairs of the five natural woods: Castor Aralia vs. Ginkgo, Cedar vs. Cypress, Cedar vs. Cherry Blossom, and Cypress vs. Ginkgo from top to bottom. Our proposed method works better than the other methods in all four pairs. Since the classification of the natural woods is more difficult than that of the white plastics due to spatially-varying reflectance properties, the advantage of our method is more significant.

4.3 Multi-Class Classification

First, in Table 3 (a), we summarize the accuracies of the four-class classification for the white plastics: ours, ours with fixed $\mathbf{w}'$, ours with fixed $\mathbf{w}$, Gu and Liu (Gu and Liu, 2012), SegFormer (Xie et al., 2021) with $s_0$, and SegFormer with $(s_0, s_1, s_2)$ from left to right. Note that Gu and Liu requires five input images taken under different illumination conditions, whereas the other methods use only a single image in the test phase.

Figure 4: The optimized parameters for the four-class classification of the natural woods: Ours, Ours with fixed $\mathbf{w}'$, and Ours with fixed $\mathbf{w}$ from left to right, and (a) the light source vector $\mathbf{w}$ and the polarimetric vectors (b) $\mathbf{w}'_1$, (c) $\mathbf{w}'_2$, and (d) $\mathbf{w}'_3$.

Figure 5: The $s_0$ image and the labels of the white plastics predicted by using the 6 methods: (a) PET, (b) PMMA, (c) PP, and (d) ABS. Red, green, yellow, and blue stand for PET, PMMA, PP, and ABS, respectively.

Comparing the performance of ours with those of ours with fixed $\mathbf{w}'$ and ours with fixed $\mathbf{w}$, we can see the effectiveness of the joint optimization of the light source vector $\mathbf{w}$ for feature extraction and the polarimetric vector $\mathbf{w}'$ (and the bias $b$) for the discriminant hyperplane in the feature space. In addition, ours with a single input image works better than Gu and Liu with five input images. Table 3 (a) also shows that ours outperforms SegFormer with $s_0$ and $(s_0, s_1, s_2)$.

Figure 6: The $s_0$ image and the labels of the natural woods predicted by using the 6 methods: (a) Cypress, (b) Ginkgo, (c) Cedar, and (d) Castor Aralia. Red, green, yellow, and blue stand for Cypress, Ginkgo, Cedar, and Castor Aralia, respectively.

Figure 3 shows the light source vectors and the polarimetric vectors of ours, ours with fixed $\mathbf{w}'$, and ours with fixed $\mathbf{w}$ from left to right. Our light source vector is significantly different from $(1, 1, 1, \ldots, 1)^\top$, but our polarimetric vectors are not very different from $(1/4, 1/4, 1/4, 1/4, 0, 0, 0)^\top$. Therefore, for the white plastics, the optimization of the illumination condition is more important than the use of the polarimetric feature.

Second, we summarize the accuracies of the four-class classification for the natural woods in Table 3 (b). The four materials are Castor Aralia, Cedar, Cypress, and Ginkgo. Similar to the case of the white plastics, we can see the effectiveness of the joint optimization of the light source vector $\mathbf{w}$ and the polarimetric vector $\mathbf{w}'$ (and the bias $b$), because ours works better than both ours with fixed $\mathbf{w}'$ and ours with fixed $\mathbf{w}$. We can also see that ours outperforms SegFormer with $s_0$ and $(s_0, s_1, s_2)$. Since the classification of the natural woods is more difficult than that of the white plastics due to spatially-varying reflectance properties, as shown in Figure 5 and Figure 6, the advantage of our method over the other single-image methods is more significant. Note that Gu and Liu performs best but requires five input images.

Figure 4 shows the light source vectors and the polarimetric vectors of ours, ours with fixed $\mathbf{w}'$, and ours with fixed $\mathbf{w}$ from left to right. Our light source vector and polarimetric vectors are significantly different from $(1, 1, 1, \ldots, 1)^\top$ and $(1/4, 1/4, 1/4, 1/4, 0, 0, 0)^\top$, respectively. Therefore, both the illumination optimization and the polarimetric feature are important for classifying the natural woods.


5 CONCLUSION

In this paper, we proposed a novel method for one-shot per-pixel material classification based on polarimetric cues under optimal illumination. Specifically, we showed the relationship between the intensities of multi-spectral and multi-directional light sources and the polarimetric feature, and jointly optimized both the non-negative illumination condition for feature extraction and the discriminant hyperplane in the feature space via margin maximization. We then experimentally showed that our method using a single input image works better than the existing methods using a single input image and comparably to those using multiple input images.

Future work includes the extension to more complex objects, such as non-planar surfaces and translucent materials with significant subsurface scattering. The use of other modalities, such as polarimetric light sources, is another direction of our future work.

ACKNOWLEDGEMENTS

This work was supported by JSPS KAKENHI Grant Numbers JP20H00612, JP23H04357, and JP22K17914.

REFERENCES

Chen, C., Zhao, Y., Luo, L., Liu, D., and Pan, Q. (2009). Robust materials classification based on multispectral polarimetric BRDF imagery. In SPIE Proceedings, volume 7384, pages 220–227.

Chen, H. and Wolff, L. (1998). Polarization phase-based method for material classification in computer vision. IJCV, 28(1):73–83.

Ghosh, A., Achutha, S., Heidrich, W., and O’Toole, M. (2007). BRDF acquisition with basis illumination. In Proc. IEEE ICCV2007, pages 1–8.

Gill, P., Murray, W., and Wright, M. (1981). Practical Optimization. Academic Press.

Gu, J. and Liu, C. (2012). Discriminative illumination: per-pixel classification of raw materials based on optimal projections of spectral BRDF. In Proc. IEEE CVPR2012, pages 797–804.

Ibrahim, A., Tominaga, S., and Horiuchi, T. (2010). Spectral imaging method for material classification and inspection of printed circuit boards. Optical Engineering, 49(5):057201.

Ichikawa, T., Fukao, Y., Nobuhara, S., and Nishino, K. (2023). Fresnel microfacet BRDF: Unification of polari-radiometric surface-body reflection. In Proc. IEEE/CVF CVPR2023, pages 16489–16497.

Kondo, Y., Ono, T., Sun, L., Hirasawa, Y., and Murayama, J. (2020). Accurate polarimetric BRDF for real polarization scene rendering. In Proc. ECCV2020, pages 220–236.

Liang, Y., Wakaki, R., Nobuhara, S., and Nishino, K. (2022). Multimodal material segmentation. In Proc. IEEE/CVF CVPR2022, pages 19800–19808.

Liu, C. and Gu, J. (2014). Discriminative illumination: per-pixel classification of raw materials based on optimal projections of spectral BRDF. IEEE Trans. PAMI, 36(1):86–98.

Ma, W.-C., Hawkins, T., Peers, P., Chabert, C.-F., Weiss, M., and Debevec, P. (2007). Rapid acquisition of specular and diffuse normal maps from polarized spherical gradient illumination. In Proc. EGSR2007, pages 183–194.

Park, J.-I., Lee, M.-H., Grossberg, M., and Nayar, S. (2007). Multispectral imaging using multiplexed illumination. In Proc. IEEE ICCV2007, pages 1–8.

Pernkopf, F. and O’Leary, P. (2003). Image acquisition techniques for automatic visual inspection of metallic surfaces. NDT&E International, 36:609–617.

Schechner, Y., Nayar, S., and Belhumeur, P. (2003). A theory of multiplexed illumination. In Proc. IEEE ICCV2003, pages 808–815.

Shurcliff, W. (1962). Polarized Light: Production and Use. Harvard University Press.

Tominaga, S. and Okamoto, S. (2003). Reflectance-based material classification for printed circuit boards. In Proc. IEEE ICIAP2003, pages 238–244.

Vapnik, V. (1998). Statistical Learning Theory. Wiley-Interscience.

Wang, C. and Okabe, T. (2017). Joint optimization of coded illumination and grayscale conversion for one-shot raw material classification. In Proc. BMVC2017.

Wolff, L. (1990). Polarization-based material classification from specular reflection. IEEE Trans. PAMI, 12(11):1059–1071.

Xie, E., Wang, W., Yu, Z., Anandkumar, A., Alvarez, J., and Luo, P. (2021). SegFormer: Simple and efficient design for semantic segmentation with transformers. In Proc. NeurIPS2021, pages 12077–12090.

Zheng, H., Kong, L., and Nahavandi, S. (2002). Automatic inspection of metallic surface defects using genetic algorithms. Journal of Materials Processing Technology, 125–126:427–433.
