Towards Developing Ethical Reasoners: Integrating Probabilistic Reasoning and Decision-Making for Complex AI Systems

Nijesh Upreti, Jessica Ciupa and Vaishak Belle

The University of Edinburgh, 10 Crichton Street, Edinburgh EH8 9AB, U.K.

Keywords: Knowledge Representation and Reasoning (KRR), Ethical Reasoners, Probabilistic Decision-Making, Agent-Based Models, Ethical AI Systems, Contextual Reasoning, Moral Principles in AI, Complex AI Architectures.

Abstract:

A computational ethics framework is essential for AI and autonomous systems operating in complex, real-

world environments. Existing approaches often lack the adaptability needed to integrate ethical principles into

dynamic and ambiguous contexts, limiting their effectiveness across diverse scenarios. To address these chal-

lenges, we outline the necessary ingredients for building a holistic, meta-level framework that combines in-

termediate representations, probabilistic reasoning, and knowledge representation. The specifications therein

emphasize scalability, supporting ethical reasoning at both individual decision-making levels and within the

collective dynamics of multi-agent systems. By integrating theoretical principles with contextual factors, the framework facilitates structured and context-aware decision-making that remains aligned with overarching ethical standards.

We further explore proposed theorems outlining how ethical reasoners should operate, offering a foundation

for practical implementation. These constructs aim to support the development of robust and ethically reliable

AI systems capable of navigating the complexities of real-world moral decision-making scenarios.

1 INTRODUCTION

Computational Ethics seeks to create full ethical

agents—autonomous systems capable of translating

moral principles and reasoning into actionable, com-

putable frameworks (Moor, 2006). These agents aim

to optimize justifiable decisions and achieve ethical

competency comparable to or surpassing that of hu-

mans (Moor, 1995; Ganascia, 2007; Anderson et al.,

2006; Awad et al., 2022). Significant progress has

been made in fairness-focused learning frameworks

(Rahman et al., 2024; Zhang et al., 2023; Islam

et al., 2023) and ad hoc approaches to moral rea-

soning (Kleiman-Weiner et al., 2017; Krarup et al.,

2022; Dennis et al., 2016a), with efforts like Kleiman-

Weiner et al.’s common-sense moral model (Kleiman-

Weiner et al., 2017) demonstrating the utility of prob-

abilistic reasoning for ethical judgments. These ad-

vances provide valuable insights into specific aspects

of ethical reasoning.

However, a unified architecture that integrates so-

phisticated ethical theories with adaptive learning sys-

tems remains an open challenge. Autonomous agents

must move beyond task-specific reasoning to navigate

complex moral scenarios involving beliefs, intentions,

and the propositional attitudes of others (Belle, 2023).

The challenge lies in combining structured reason-

ing with adaptive learning in the face of uncertainty

and variability in ethical decisions. This underscores

the urgent need for a robust, theoretically grounded

framework capable of addressing these complexities

systematically.

Specific ethical frameworks, such as Bentham’s

theory of Hedonistic Act Utilitarianism (Bentham,

2003; Anderson et al., 2005) and Ross’s prima facie

duties (Ross, 2002), have historically informed moral

reasoning models. However, these approaches often

lack the flexibility needed to navigate ethical ambigu-

ities and uncertainties in real-world contexts. More

recent works (Lockhart, 2000; Gilpin et al., 2018)

have sought to adapt ethical principles dynamically

to situational ambiguities, yet broader integration is

required to address the diversity and evolving nature

of decision-making environments.

Ethical decision-making depends not only on ab-

stract principles but also on contextual facts, which

are often shaped by subjective interpretations. Ex-

isting frameworks, such as Kleiman-Weiner et al.’s

probabilistic model, provide useful tools for handling

moral judgments. However, a comprehensive archi-

tecture that accommodates meta-level specifications

is still lacking. To address this, it is essential to de-

fine the specifications needed for developing ethical

reasoning systems. These specifications should combine robust knowledge representation and reasoning (KRR) techniques, logic, and computational embodiment (Levesque, 1986; Segun, 2020). The goal is to develop adaptive, context-aware systems capable of managing the nuanced complexities of real-world ethical dilemmas.

Upreti, N., Ciupa, J. and Belle, V.
Towards Developing Ethical Reasoners: Integrating Probabilistic Reasoning and Decision-Making for Complex AI Systems.
DOI: 10.5220/0013314200003890
In Proceedings of the 17th International Conference on Agents and Artificial Intelligence (ICAART 2025) - Volume 1, pages 588-599
ISBN: 978-989-758-737-5; ISSN: 2184-433X
Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)

A critical specification is the use of intermediate

representations that integrate contextual factors into

ethical decision-making processes. These represen-

tations break down complex ethical dilemmas into

manageable sub-goals, allowing for a structured and

layered approach to decision-making. This structure

ensures adaptability to dynamic scenarios while pre-

serving alignment with overarching ethical principles.

By incorporating intermediate representations, ethical

agents can gain the flexibility and precision required

to respond effectively to evolving real-world condi-

tions.

In this paper, we explore the challenges and com-

plexities in developing ethical reasoning systems and

identify limitations in existing approaches. We out-

line the necessary ingredients for building a holistic

meta-level framework that integrates reasoning, learn-

ing, and uncertainty management, supported by a set

of proposed theorems defining foundational proper-

ties for ethical reasoning systems. These theorems

provide a conceptual foundation for designing agents

capable of navigating complex decision-making sce-

narios. By synthesizing existing knowledge and in-

corporating causal understanding and probabilistic

reasoning, we aim to establish the groundwork for

constructing ethical agents equipped to address real-

world dilemmas in AI systems.

2 INTERMEDIATE REPRESENTATIONS FOR KRR

Intermediate representations within KRR frameworks

can take various forms, each contributing distinc-

tively to modeling complex decision-making environ-

ments. These representations may be propositional,

capturing straightforward truth-functional statements;

they may involve first-order logic for detailed object-

property relationships (Sanner and Kersting, 2010)

or include action operators and time sequences to

represent dynamic processes and temporal reasoning

(Beckers et al., 2022). Different logics are required

depending on the structure of the scenario. Some rep-

resentations account for multi-agent beliefs (Ghaderi

et al., 2007), probabilistic uncertainty (Belle, 2020),

and even the belief-desire-intention (BDI) models

standard in multi-agent systems (Belle and Levesque,

2015). These logics help represent agent beliefs,

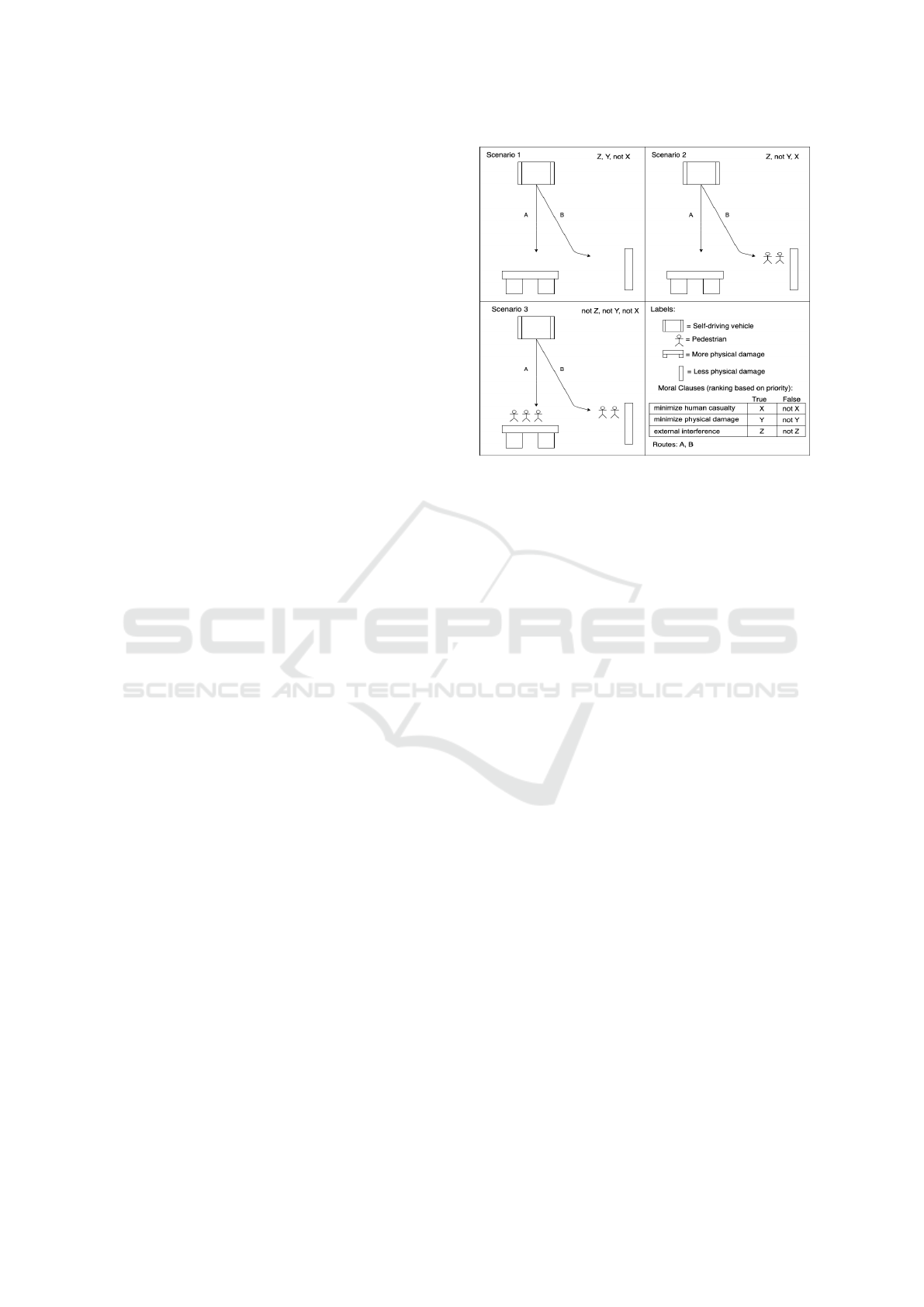

Figure 1: Three scenarios (Scenario 1, Scenario 2, and Sce-

nario 3) each with a choice between two possible routes (A

and B) for a self-driving vehicle are depicted in the figure

above.

preferences, and temporal factors for more adaptable

decision-making. However, this complexity is also

necessary to ensure that every significant aspect of

a situation can be accurately captured and reasoned

about within the KRR framework.

Consider a self-driving car faced with an ethi-

cal decision resembling the classic trolley problem

(Foot, 1967). The car in the current state of opera-

tion must choose between two routes, Route A and

Route B, each presenting different ethical consider-

ations based on potential human casualties, physical

damage, and the degree of interference required to

change its course. Suppose the car's decision-making framework is governed by three principles, in decreasing order of priority: minimize human casualties (X), minimize physical damage (Y), and minimize external interference (Z).

These principles create a structured, yet complex,

moral framework for the car’s next move as it calcu-

lates the best course of action based on each scenario.

The complexity of this ethical calculus is illustrated

across four distinct scenarios:

• Scenario 1 (No Pedestrians): With no human

lives at risk, the vehicle chooses Route B to min-

imize physical damage (Y) without requiring ma-

jor interference (Z), as human safety is not a fac-

tor.

• Scenario 2 (One Pedestrian on Route A): The

presence of a pedestrian on Route A makes mini-

mizing casualties (X) the top priority, leading the

vehicle to choose Route B to avoid harm, even if

it means some interference.


• Scenario 3 (Multiple Pedestrians on Both Routes): Route A (the default path) has three pedestrians and Route B (requiring intervention) has two. The ethical tension lies between staying on the initial path, potentially sacrificing three lives, and intervening to switch to Route B, saving three lives at the expense of two. This dilemma raises deeper ethical questions: Is it justifiable for the car to depart from its initial course to save a greater number of lives, even if this requires sacrificing others on Route B who were not in its original path?

• Scenario 4 (Specific Information about Individuals): Adding a new layer of complexity, suppose the car has specific information about the individuals on each route. For example, the two individuals on Route B may be children, while the three on Route A are adults. Alternatively, one of the individuals on Route B may hold a position of societal importance, such as a medical professional with exceptional skill or a highly important public official. These contextual factors (age, health, and social role) complicate the ethical calculus by introducing nuanced considerations rooted in cultural, ethical, and social norms (Andrade, 2019).

In some ethical frameworks, for example, pri-

oritizing children may be seen as more ethical due

to their vulnerability or potential future contribu-

tions. In other contexts, societal roles might influence

decision-making, with a preference given to individ-

uals who can benefit the broader community. This

introduces new questions: Should the vehicle’s ethi-

cal framework consider these personal attributes, po-

tentially valuing certain lives differently? Is it ethi-

cally justifiable for the system to incorporate societal

or cultural values when evaluating lives at risk?

The complexities highlighted by scenarios 3 and

4 reveal the profound challenges in ethical decision-

making. Key questions arise, such as: Is it ethical for

the vehicle to actively alter its path to save more lives,

knowing it risks others in the process? Should char-

acteristics like age or social significance affect the

prioritization of lives? These considerations under-

score the limitations of a purely casualty-based ethical

framework, as individual characteristics and contex-

tual elements often shape ethical perceptions in real-

world settings.
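The purely priority-ordered calculus discussed above can be sketched as a lexicographic comparison over the three principles X, Y, and Z. This is a minimal illustration, not the paper's method: the `RouteOutcome` type, the `choose_route` helper, and all numeric values are hypothetical, and the sketch deliberately ignores the individual characteristics raised in Scenario 4.

```python
from typing import NamedTuple


class RouteOutcome(NamedTuple):
    """Predicted outcome of taking a route (all values are illustrative)."""
    casualties: int      # principle X: minimize human casualties
    damage: float        # principle Y: minimize physical damage
    interference: float  # principle Z: minimize external interference


def choose_route(outcomes: dict[str, RouteOutcome]) -> str:
    """Return the route whose (X, Y, Z) tuple is lexicographically smallest."""
    # Tuples compare element by element, so casualties dominate damage,
    # and damage dominates interference.
    return min(outcomes, key=lambda route: outcomes[route])


# Hypothetical encodings of Scenarios 1 to 3.
scenario_1 = {"A": RouteOutcome(0, 5.0, 0.0), "B": RouteOutcome(0, 1.0, 1.0)}
scenario_2 = {"A": RouteOutcome(1, 0.0, 0.0), "B": RouteOutcome(0, 2.0, 1.0)}
scenario_3 = {"A": RouteOutcome(3, 0.0, 0.0), "B": RouteOutcome(2, 0.0, 1.0)}

for name, scenario in [("1", scenario_1), ("2", scenario_2), ("3", scenario_3)]:
    print(f"Scenario {name}: choose Route {choose_route(scenario)}")
```

A strict ordering of this kind always resolves Scenario 3 in favor of the smaller casualty count, which is exactly the behavior the questions above call into doubt.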

Addressing these multifaceted dilemmas requires

a structured framework that can balance both contex-

tual factors and ethical objectives. In this context,

we introduce “circumstantial dicta” to represent situ-

ational details—such as the vehicle’s initial direction

and specific characteristics of individuals—and “eth-

ical prescripts” to embody moral objectives, such as

prioritizing human life or minimizing physical harm.

By clearly defining these components, the frame-

work can systematically navigate ethical complexi-

ties, adapting its decisions to reflect both individual

principles and situational context.

These scenarios illustrate the challenges in cre-

ating ethical AI systems capable of handling com-

plex, real-world situations. Ethical decision-making

often reflects collective social values and shared un-

derstandings of responsibility and vulnerability, not

merely individual ethical principles (Ruvinsky, 2007;

Kleiman-Weiner et al., 2017; Belle, 2023). For com-

putational ethics, this necessitates frameworks that

not only follow programmed rules but also adaptively

respond to nuanced human values across varied cul-

tural and ethical landscapes. By integrating detailed

contextual information and flexible ethical objectives,

AI systems may be better equipped to make informed,

ethically sound decisions in diverse and unpredictable

environments.

2.1 Circumstantial Dicta and Ethical Prescripts

This subsection introduces two key concepts: circum-

stantial dicta and ethical prescripts. Circumstantial

dicta refer to contextual factors influencing an agent’s

ethical decisions, such as environmental conditions,

temporal aspects, societal norms, or other situational

details. Circumstantial dicta vary depending on the application, e.g., road conditions in autonomous driving or patient conditions in healthcare. Formally, we define $C$ as the set of circumstantial dicta, with each $c_i \in C$ representing a distinct contextual factor. These factors shape the ethical landscape, enabling agents to adapt their decision-making based on situational inputs, ensuring a more comprehensive and pragmatic ethical reasoning framework.

Ethical prescripts encode prioritized objectives

such as minimizing harm or promoting fairness.

Harm is context-specific, ranging from physical in-

jury to loss of autonomy. Quantifying harm involves

utility functions weighted by empirical data or stake-

holder priorities, ensuring decisions align with ethi-

cal standards across diverse scenarios (Krarup et al.,

2022; Beckers et al., 2023). For instance, a prescript

in healthcare may prioritize “do no harm,” encour-

aging low-risk treatment recommendations (Goodall,

2014). In autonomous driving, prescripts might em-

phasize minimizing harm to pedestrians over vehicle

efficiency (Bonnefon et al., 2016). Formally, $E$ is the set of ethical prescripts, with each $e_j \in E$ representing a moral directive guiding decisions. Together, circumstantial dicta and ethical prescripts form a framework balancing contextual awareness with ethical principles, enabling consistent, contextually appropriate decision-making.

2.2 Probabilistic Representation of Ethical and Contextual Factors

In real-world scenarios, ethical decision-making often

involves navigating uncertainties in situational con-

texts and prioritizing ethical objectives (Krarup et al.,

2022; Dennis et al., 2016a; Dennis et al., 2016b). This

complexity necessitates a probabilistic approach rep-

resenting the likelihood and relevance of circumstan-

tial dicta and ethical prescripts (Pearl, 2014). By as-

signing probabilistic weights to these elements, the

framework enables an ethical agent to prioritize sit-

uationally appropriate and ethically aligned actions,

even amid uncertainty.

2.2.1 Probability Distribution over Circumstantial Dicta

The first step in constructing a probabilistic represen-

tation is to define a probability distribution over cir-

cumstantial dicta. This distribution reflects the like-

lihood of various contextual factors being relevant to

the decision-making process (Kleiman-Weiner et al.,

2017). For instance, in an autonomous driving con-

text, circumstantial dicta, such as road conditions or

pedestrian presence, often interact (e.g., bad weather

increasing pedestrian risk). These interdependencies can be represented using probabilistic dependency models such as Bayesian networks, whose directed acyclic structure rules out feedback loops and helps keep inference computationally feasible in complex scenarios.

Let $C = \{c_1, c_2, \ldots, c_n\}$ represent the set of all possible circumstantial dicta relevant to a particular decision context. We define the probability distribution over $C$ as:

$$P(C) = \{P(c_i) \mid c_i \in C\}$$

where $P(c_i)$ denotes the probability that circumstantial factor $c_i$ is relevant in a given scenario. Each $P(c_i)$ quantifies the likelihood of encountering a specific contextual condition, such that the distribution sums to 1:

$$\sum_{i=1}^{n} P(c_i) = 1$$

This distribution enables the agent to interpret the situational elements, assigning higher probabilities to more probable factors. For example, in a healthcare setting, $c_1$ might denote "patient in critical condition" with a specific probability based on historical data, while $c_2$ might represent "patient has a history of heart disease," both contributing to the agent's contextual awareness and action prioritization (Pearl, 2014).

2.2.2 Conditional Probability of Ethical Prescripts Given Circumstantial Dicta

Ethical prescripts are prioritized in response to circumstantial dicta. The framework uses a conditional probability distribution to assign weights to ethical prescripts based on their relevance to specific circumstantial factors, allowing for context-sensitive ethical reasoning.

Let $E = \{e_1, e_2, \ldots, e_m\}$ denote the set of prioritized ethical prescripts. The conditional probability distribution of ethical prescripts given circumstantial dicta is defined as:

$$P(E \mid C) = \{P(e_j \mid c_i) \mid e_j \in E,\ c_i \in C\}$$

where $P(e_j \mid c_i)$ represents the probability of prioritizing ethical prescript $e_j$ given the presence of circumstantial factor $c_i$. This conditional probability captures the degree to which certain ethical priorities should be emphasized in light of particular situational factors.

For instance, in an autonomous driving context, if $c_i$ represents a high likelihood of pedestrian presence, the ethical prescript $e_j$ corresponding to "prioritize pedestrian safety" would carry a higher conditional probability, influencing the system toward decisions that emphasize this ethical objective. To ensure a complete probabilistic model, the conditional probabilities over ethical prescripts for each circumstantial factor sum to 1:

$$\sum_{j=1}^{m} P(e_j \mid c_i) = 1 \quad \forall\, c_i \in C$$

This probabilistic structure ensures that the ethi-

cal agent can adjust its moral priorities dynamically

based on changing situational indicators, enabling

a nuanced and responsive ethical reasoning process

(Dennis et al., 2016b; Dennis et al., 2016a; Pearl,

2014).

2.2.3 Joint Probability Distribution of Circumstantial Dicta and Ethical Prescripts

To unify the representation of circumstantial dicta and

ethical prescripts within the decision-making frame-

work, a joint probability distribution P(C, E) is de-

fined. This distribution allows the ethical agent to

compute the combined likelihood of encountering

specific contextual factors and corresponding ethi-

cal prescripts, providing a holistic view of scenario-

specific ethical considerations.


The joint probability distribution $P(C, E)$ is given by:

$$P(C, E) = P(C) \cdot P(E \mid C)$$

where $P(C)$ represents the marginal probability distribution over circumstantial factors, and $P(E \mid C)$ denotes the conditional probability distribution over ethical prescripts given circumstantial factors.

This joint distribution forms the basis for calculating expected values associated with various ethical actions, facilitating coherent and contextually relevant decision-making in uncertain environments. For example, in a healthcare setting where $P(c_1)$ is the probability of a critical patient status and $P(e_2 \mid c_1)$ indicates a high priority for life-saving actions, the joint probability $P(c_1, e_2)$ will strongly influence the agent's decision to pursue life-saving interventions.
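The factorization $P(c_i, e_j) = P(c_i) \cdot P(e_j \mid c_i)$ can be illustrated with a small sketch. The healthcare labels, the probability values, and the `joint_distribution` helper are all assumptions made for illustration; the paper does not specify concrete numbers or an implementation.

```python
import math

# Hypothetical healthcare example; every number below is an assumption.
p_c = {"critical": 0.3, "stable": 0.7}  # P(c_i)
p_e_given_c = {                         # P(e_j | c_i)
    "critical": {"save_life": 0.8, "respect_autonomy": 0.2},
    "stable": {"save_life": 0.4, "respect_autonomy": 0.6},
}


def joint_distribution(p_c, p_e_given_c):
    """Return P(c_i, e_j) = P(c_i) * P(e_j | c_i) as a dict keyed by (c, e)."""
    if not math.isclose(sum(p_c.values()), 1.0):
        raise ValueError("P(C) must sum to 1")
    joint = {}
    for c, pc in p_c.items():
        row = p_e_given_c[c]
        # Each conditional distribution must itself be normalized.
        if not math.isclose(sum(row.values()), 1.0):
            raise ValueError(f"P(E | {c}) must sum to 1")
        for e, pe in row.items():
            joint[(c, e)] = pc * pe
    return joint


joint = joint_distribution(p_c, p_e_given_c)
for (c, e), p in joint.items():
    print(f"P({c}, {e}) = {p:.2f}")
```

Because both input distributions are normalized, the resulting joint table also sums to 1, which the validation steps make explicit.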

The joint probability distribution also supports

the calculation of expected utility for each potential

action, a process that quantifies the expected value

of decisions by factoring in both ethical priorities

and contextual conditions (Russell and Norvig, 2016;

Von Neumann and Morgenstern, 2007). Formally, the

expected utility for an action $a$ can be defined as:

$$U(a \mid C, E) = \sum_{i=1}^{n} \sum_{j=1}^{m} P(c_i) \cdot P(e_j \mid c_i) \cdot u(a \mid c_i, e_j)$$

where $u(a \mid c_i, e_j)$ represents the utility of action $a$ under circumstantial factor $c_i$ and ethical prescript $e_j$. The action that maximizes expected utility is selected as the optimal decision:

$$a^{*} = \arg\max_{a \in A} U(a \mid C, E)$$

This calculation enables the agent to prioritize actions

that align with both ethical imperatives and situa-

tional demands, ensuring principled, context-sensitive

decision-making.
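The expected-utility rule above can be sketched directly as a double sum followed by an argmax. The driving labels, probabilities, and utility table below are hypothetical values chosen only to make the computation concrete; `expected_utility` and `best_action` are illustrative names, not part of the paper.

```python
# Hypothetical autonomous-driving example; all numbers are assumptions.
p_c = {"pedestrian_present": 0.6, "road_clear": 0.4}  # P(c_i)
p_e_given_c = {                                       # P(e_j | c_i)
    "pedestrian_present": {"safety": 0.9, "efficiency": 0.1},
    "road_clear": {"safety": 0.3, "efficiency": 0.7},
}
# u(a | c_i, e_j), keyed by (action, circumstance, prescript).
u_table = {
    ("brake", "pedestrian_present", "safety"): 1.0,
    ("brake", "pedestrian_present", "efficiency"): 0.2,
    ("brake", "road_clear", "safety"): 0.5,
    ("brake", "road_clear", "efficiency"): 0.1,
    ("continue", "pedestrian_present", "safety"): 0.0,
    ("continue", "pedestrian_present", "efficiency"): 0.8,
    ("continue", "road_clear", "safety"): 0.5,
    ("continue", "road_clear", "efficiency"): 1.0,
}


def expected_utility(action, p_c, p_e_given_c, u_table):
    """U(a | C, E) = sum_i sum_j P(c_i) * P(e_j | c_i) * u(a | c_i, e_j)."""
    return sum(
        p_c[c] * p_e_given_c[c][e] * u_table[(action, c, e)]
        for c in p_c
        for e in p_e_given_c[c]
    )


def best_action(actions, p_c, p_e_given_c, u_table):
    """Return the action a* that maximizes expected utility."""
    return max(actions, key=lambda a: expected_utility(a, p_c, p_e_given_c, u_table))


actions = ["brake", "continue"]
for a in actions:
    print(a, round(expected_utility(a, p_c, p_e_given_c, u_table), 3))
print("selected:", best_action(actions, p_c, p_e_given_c, u_table))
```

With these assumed values, braking scores higher because the likely presence of a pedestrian shifts most of the probability mass onto the safety prescript.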

2.3 Normalized Collection with Matrix Representation

A standardized method for structuring ethical prior-

ities and contextual factors supports consistent yet

adaptable decision-making across diverse scenarios.

Normalized collections, which organize ethical pre-

scripts and circumstantial dicta into reusable matri-

ces, help address shifting contexts and interdependen-

cies, ensuring scalable ethical reasoning (Strehl and

Ghosh, 2002).

Each ethical scenario is encapsulated in a matrix $M = [m_{ij}]$, where each entry $m_{ij}$ denotes the weighted influence of ethical prescript $e_i$ in the context of circumstantial factor $c_j$. Here:

$$m_{ij} = w(e_i, c_j) \cdot P(e_i \mid c_j)$$

where $w(e_i, c_j)$ represents a baseline weight or relevance of ethical prescript $e_i$ under the circumstantial factor $c_j$, and $P(e_i \mid c_j)$ is the conditional probability derived from the previous probabilistic model, indicating the likelihood of prioritizing $e_i$ given $c_j$.

This matrix structure $M$ captures a unique ethical profile for each scenario, reflecting the contextualized relevance of ethical prescripts in structured form. Mathematically, this matrix is defined as:

$$M = \begin{pmatrix}
m_{11} & m_{12} & \cdots & m_{1n} \\
m_{21} & m_{22} & \cdots & m_{2n} \\
\vdots & \vdots & \ddots & \vdots \\
m_{m1} & m_{m2} & \cdots & m_{mn}
\end{pmatrix}$$
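As a rough sketch of how such a profile matrix might be assembled, the following builds $M$ entry by entry from a baseline weight table and a conditional probability table. The labels, weights, and probabilities are assumptions for illustration, and `build_profile_matrix` is a hypothetical helper rather than an interface defined by the paper.

```python
# Rows index ethical prescripts e_i; columns index circumstantial factors c_j.
prescripts = ["safety", "damage"]
dicta = ["pedestrian_present", "wet_road"]

# Hypothetical conditional probabilities P(e_i | c_j), keyed by c_j then e_i.
p_e_given_c = {
    "pedestrian_present": {"safety": 0.9, "damage": 0.1},
    "wet_road": {"safety": 0.5, "damage": 0.5},
}
# Hypothetical baseline weights w(e_i, c_j).
weight = {
    ("safety", "pedestrian_present"): 1.0,
    ("safety", "wet_road"): 0.8,
    ("damage", "pedestrian_present"): 0.5,
    ("damage", "wet_road"): 1.0,
}


def build_profile_matrix(prescripts, dicta, weight, p_e_given_c):
    """Return M with m_ij = w(e_i, c_j) * P(e_i | c_j) as a list of rows."""
    return [
        [weight[(e, c)] * p_e_given_c[c][e] for c in dicta]
        for e in prescripts
    ]


M = build_profile_matrix(prescripts, dicta, weight, p_e_given_c)
for label, row in zip(prescripts, M):
    print(label, [round(x, 3) for x in row])
```

The result has one row per prescript and one column per circumstantial factor, matching the $m \times n$ layout of the matrix above.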

2.3.1 Construction and Normalization of the Collection

The normalized collection, denoted $N = \{M_1, M_2, \ldots, M_k\}$, is a standardized set of matrices, each representing an ethical profile drawn from various contexts. Normalization is a crucial process here to allow comparisons and coherence across these profiles, ensuring consistency in the application of ethical prescripts across diverse scenarios.

To achieve normalization, each matrix entry $m_{ij}$ is scaled to a defined range, such as $[0, 1]$, so that ethical prescripts retain consistent relative significance across contexts. Formally, the normalization process for any matrix $M_i \in N$ is expressed as:

$$M_i^{\text{norm}} = \frac{M_i - \min(M_i)}{\max(M_i) - \min(M_i)}$$

where $\max(M_i)$ and $\min(M_i)$ are the maximum and minimum values in $M_i$, respectively. This normalized form $M_i^{\text{norm}}$ ensures that ethical profiles across different contexts can be applied and interpreted uniformly, allowing for adaptability (Pearl, 2014; Koller, 2009).
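The min-max normalization above can be sketched in a few lines. The formula is undefined when all entries of a matrix are equal, so the sketch maps that case to all zeros; this convention is an assumption of the example, not something the text specifies.

```python
def normalize(matrix):
    """Min-max scale every entry of a matrix (list of rows) into [0, 1]."""
    flat = [x for row in matrix for x in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        # Assumed convention: a constant matrix carries no relative
        # information, so every entry maps to 0.0 to avoid dividing by zero.
        return [[0.0 for _ in row] for row in matrix]
    span = hi - lo
    return [[(x - lo) / span for x in row] for row in matrix]


# Illustrative profile matrix with arbitrary values.
M_i = [[2.0, 4.0], [6.0, 10.0]]
M_norm = normalize(M_i)
print(M_norm)
```

After scaling, the smallest entry becomes 0 and the largest becomes 1, so profiles built on different weight scales become directly comparable.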

2.3.2 Ensemble Coding and Clustering of Ethical Profiles for Efficient Contextual Retrieval

To further enhance adaptability, identifying shared

ethical priorities and contextual influences enables the

agent to generalize ethical decision-making from one

scenario to another. The process includes grouping

profiles with similar ethical prescript priorities and

contextual factors, allowing the agent to identify and

apply ethical principles across scenarios with related

patterns. For instance, scenarios in autonomous driv-

ing that share similar road conditions, pedestrian pres-

ence, and weather may be grouped to ensure ethi-

cal priorities are maintained consistently across these

conditions (Ariely, 2001).


To organize the normalized collection for effec-

tive retrieval and application, Cluster Ensemble tech-

niques (Strehl and Ghosh, 2002) are employed to

arrange matrices by similarity, facilitating efficient

access to relevant ethical profiles. This clustering

method groups ethical profiles based on shared at-

tributes, simplifying retrieval for contexts that align

closely with previously encountered scenarios.

Given clusters $\{C_1, C_2, \ldots, C_k\}$, a new scenario represented by matrix $M_x$ is assigned to the most relevant cluster $C_\ell$ (with $1 \le \ell \le k$) by calculating the distance metric $d(M_x, M_y)$ between $M_x$ and each matrix $M_y$ in the collection:

$$d(M_x, M_y) = \sum_{i=1}^{m} \sum_{j=1}^{n} \left| m^{(x)}_{ij} - m^{(y)}_{ij} \right|$$

where $m^{(x)}_{ij}$ and $m^{(y)}_{ij}$ are the entries in matrices $M_x$ and $M_y$, respectively. This clustering ensures the ethical reasoner can retrieve and apply ethical principles effectively across varying but related contexts.
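One simple way to read the assignment step is as a nearest-neighbor lookup: compute the entrywise absolute-difference distance to every stored matrix and adopt the cluster of the closest one. The sketch below assumes that reading; it is not the cluster ensemble method of Strehl and Ghosh, and the cluster labels and matrix values are hypothetical.

```python
def l1_distance(mx, my):
    """d(M_x, M_y): sum of absolute differences over all matrix entries."""
    if len(mx) != len(my) or any(len(rx) != len(ry) for rx, ry in zip(mx, my)):
        raise ValueError("matrices must have the same shape")
    return sum(abs(a - b) for rx, ry in zip(mx, my) for a, b in zip(rx, ry))


def assign_cluster(mx, clusters):
    """Return the label of the cluster containing the matrix nearest to mx."""
    return min(
        clusters,
        key=lambda label: min(l1_distance(mx, my) for my in clusters[label]),
    )


# Hypothetical normalized profiles grouped into two clusters.
clusters = {
    "urban": [
        [[1.0, 0.0], [0.2, 0.8]],
        [[0.9, 0.1], [0.3, 0.7]],
    ],
    "highway": [
        [[0.1, 0.9], [0.8, 0.2]],
    ],
}
M_x = [[0.8, 0.2], [0.3, 0.6]]
print("assigned to:", assign_cluster(M_x, clusters))
```

Other assignment rules, such as comparing against a per-cluster average profile, would fit the same distance function.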

By integrating normalization, ensemble coding,

and clustering, the normalized collection supports

consistent and contextually adaptive ethical reason-

ing. This organized repository enables the agent to

handle a wide variety of real-world scenarios, apply-

ing ethical principles in a principled yet flexible man-

ner.

2.3.3 Non-Deterministic Models for Action Selection

In ethical reasoning systems, certain scenarios may

allow for multiple ethically acceptable actions, each

aligned with relevant ethical prescripts but varying

in their potential outcomes. To address this com-

plexity, non-deterministic modeling provides a mech-

anism for probabilistically weighing and selecting ac-

tions based on their ethical impact. This model al-

lows the ethical reasoner to account for ethical flexi-

bility while maintaining adherence to prioritized eth-

ical principles.

We define $A = \{a_1, a_2, \ldots, a_k\}$ as the set of all potential actions available to the agent in a given scenario. Each action $a \in A$ is associated with a selection probability $P(a \mid C, E)$, which represents the likelihood of choosing $a$ given a combination of circumstantial factors $C$ and ethical prescripts $E$.

The action probability distribution $P(A \mid C, E)$ is formally expressed as:

$$P(A \mid C, E) = \{P(a \mid C, E) \mid a \in A\}$$

where each P(a|C, E) is calculated by factoring in

both the ethical prescripts relevant to the specific con-

text and the probabilities derived from the normal-

ized collection. This distribution ensures that each

action’s likelihood is proportionate to its ethical rel-

evance, making ethical decision-making probabilisti-

cally flexible across contexts.
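The text does not fix a formula for $P(a \mid C, E)$. One possible instantiation, taken here purely as an assumption, makes each action's probability proportional to a non-negative ethical relevance score and then samples from the result. The scores and both helper names are hypothetical.

```python
import random


def action_distribution(scores):
    """Turn non-negative relevance scores into a distribution over actions."""
    if any(s < 0 for s in scores.values()):
        raise ValueError("scores must be non-negative")
    total = sum(scores.values())
    if total == 0:
        # Assumed fallback: with no relevance information, choose uniformly.
        return {a: 1.0 / len(scores) for a in scores}
    return {a: s / total for a, s in scores.items()}


def sample_action(dist, rng):
    """Draw one action according to its selection probability."""
    actions = list(dist)
    weights = [dist[a] for a in actions]
    return rng.choices(actions, weights=weights, k=1)[0]


# Hypothetical relevance scores for three ethically acceptable actions.
scores = {"brake": 0.64, "swerve": 0.48, "continue": 0.16}
dist = action_distribution(scores)
print(dist)
print("sampled:", sample_action(dist, random.Random(0)))
```

Proportional normalization is only one choice; a softmax over expected utilities would give a similar distribution with a tunable degree of determinism.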

2.3.4 Expected Utility for Action Selection

To determine the action that best aligns with ethical

objectives, an expected utility function U(a|C, E) is

defined for each action a in the context of circumstan-

tial factors C and ethical prescripts E (Von Neumann

and Morgenstern, 2007). The expected utility repre-

sents the ethical value or desirability of each action,

allowing the reasoner to make decisions that maxi-

mize ethical alignment.

The optimal action $a^{*}$ is selected based on the expected utility, expressed as (refer to Section 2.2.3):

$$a^{*} = \arg\max_{a \in A} \sum_{c \in C} \sum_{e \in E} P(c) \cdot P(e \mid c) \cdot u(a \mid c, e)$$

where $u(a \mid c, e)$ represents the utility associated with action $a$ under a specific circumstantial factor $c$ and ethical prescript $e$, $P(c)$ is the probability of each circumstantial factor, and $P(e \mid c)$ is the conditional probability of each ethical prescript given the context.

By maximizing expected utility, this model en-

ables the ethical agent to select an action that aligns

with prioritized ethical objectives while factoring in

context-driven variability. For example, in an au-

tonomous vehicle scenario, if ethical prescripts em-

phasize both minimizing harm and respecting pedes-

trian safety, the expected utility function can weigh

these prescripts against circumstantial factors to iden-

tify the most ethically appropriate action among avail-

able options.

2.3.5 Multi-Objective Optimization

In complex ethical decision-making, multiple ob-

jectives may need to be optimized simultaneously

(Keeney, 1993; Coello, 2006). Multi-objective opti-

mization enables the agent to balance competing ethi-

cal prescripts, ensuring that no single ethical directive

disproportionately influences the decision at the ex-

pense of others.

Formally, this approach introduces a weighted utility function:

$$U(a \mid C, E) = \sum_{j=1}^{m} \alpha_j \cdot U_j(a \mid C, e_j)$$

where $U_j(a \mid C, e_j)$ is the utility associated with action $a$ in relation to ethical prescript $e_j$ and $\alpha_j$ represents the weight assigned to each ethical prescript $e_j$, reflecting its relative importance in the context of circumstantial factors.


Through this multi-objective framework, the ethical reasoner is equipped to make balanced decisions that satisfy multiple ethical objectives simultaneously. The weight $\alpha_j$ can be dynamically adjusted based on contextual elements, allowing the system to adaptively balance ethical prescripts as situational demands evolve.
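The weighted-sum formulation can be sketched as follows, with the weights looked up per context to mimic dynamic adjustment. The prescript names, per-prescript utilities, and context-specific weight tables are all hypothetical, and a weighted sum is only one of several multi-objective techniques.

```python
# Hypothetical per-prescript utilities U_j(a | C, e_j) for two actions.
utilities = {
    "minimize_harm": {"brake": 0.9, "swerve": 0.6},
    "minimize_interference": {"brake": 0.8, "swerve": 0.2},
}
# Hypothetical context-dependent weights alpha_j (each set sums to 1).
alphas_by_context = {
    "pedestrian_present": {"minimize_harm": 0.7, "minimize_interference": 0.3},
    "road_clear": {"minimize_harm": 0.4, "minimize_interference": 0.6},
}


def weighted_utility(action, alphas, utilities):
    """U(a | C, E) = sum_j alpha_j * U_j(a | C, e_j)."""
    return sum(alphas[e] * utilities[e][action] for e in alphas)


def best_action(actions, context, alphas_by_context, utilities):
    """Pick the action with the highest weighted utility in this context."""
    alphas = alphas_by_context[context]
    return max(actions, key=lambda a: weighted_utility(a, alphas, utilities))


actions = ["brake", "swerve"]
for context, alphas in alphas_by_context.items():
    scores = {a: round(weighted_utility(a, alphas, utilities), 3) for a in actions}
    print(context, scores)
```

Changing the weight table for a context changes the ranking without touching the per-prescript utilities, which is the separation the text relies on for adaptive balancing.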

2.3.6 Integrating Non-Deterministic Models with Normalized Collection

The non-deterministic model is inherently connected to the normalized collection in that the probabilistic weights assigned to each action reflect the ethical priorities derived from the collection's matrix representation. Each matrix $M_i$ in the normalized collection serves as a reference for determining the relative importance of ethical prescripts, and by extension, the probability distribution over actions.

When a scenario is encountered, the correspond-

ing matrix from the normalized collection provides

context-specific weights for ethical prescripts, influ-

encing the probability distribution P(A|C, E) over

possible actions. This integration ensures that the

ethical reasoner’s decision-making is not only prob-

abilistically flexible but also rooted in consistent ethi-

cal principles across diverse contexts.

By combining non-deterministic modeling, ex-

pected utility, and multi-objective optimization, this

approach enables the ethical agent to navigate com-

plex decision scenarios, balancing ethical princi-

ples with situational sensitivity. This layered model

serves as the basis for robust, adaptable, and ethically

grounded action selection in uncertain environments.

3 THEORETICAL FOUNDATIONS AND DESIRED THEOREMS

In this section, we aim to set foundational condi-

tions that provide clarity on how an ethical system

behaves under varied circumstances. These prin-

ciples—consistency, optimality, robustness, conver-

gence, and alignment with human judgment—are es-

sential pillars for ensuring correctness in ethical com-

putations. By defining these properties, we not only

articulate the expected behaviors of ethical agents but

also create a basis for proving correctness, stabil-

ity, and effectiveness. We provide sample theorems

and strategies for proving their validity, offering a

roadmap for substantiating each core attribute of the

system’s ethical reasoning with appropriate analytical

techniques.

Theorem 1 (Ethical Consistency): Ethical consis-

tency ensures stable prioritization using metrics like

cosine similarity or KL divergence, so small changes

in context result in proportionate changes in ethical

decisions. Specifically, for any two sets of circumstantial dicta, $C_1$ and $C_2$, where $C_1 \approx C_2$, the probability distributions over ethical prescripts should be approximately the same:

$$\forall C_1, C_2 \in \mathcal{C}, \quad \text{if } C_1 \approx C_2, \text{ then } P(E \mid C_1) \approx P(E \mid C_2).$$

This statement ensures that if two situational contexts, $C_1$ and $C_2$, are nearly identical, then the system’s ethical priorities, represented by the distribution $P(E \mid C)$ over ethical prescripts, should not vary

disproportionately. Such stability prevents minor con-

textual differences from causing erratic or unpre-

dictable shifts in ethical decisions, a foundational re-

quirement for reliable ethical decision-making in real-

world applications.

To formalize the concept of “similar” contexts,

we introduce a distance metric $d(C_1, C_2)$ over the space of circumstantial dicta. This metric quantifies the “distance” between two sets of circumstantial factors, where contexts $C_1$ and $C_2$ are considered similar if $d(C_1, C_2) \le \delta$ for a small threshold $\delta$. This threshold $\delta$ represents the maximum allowable contextual

variation for two scenarios to be treated as similar in

the system’s ethical reasoning process.

To support the claim that $P(E \mid C)$ remains stable across similar contexts, a Lipschitz continuity condition (Belle, 2023; Rudin et al., 1964; Boyd and Vandenberghe, 2004) can be imposed on $P(E \mid C)$. This condition requires that there exist a constant $L > 0$ such that:

$$\| P(E \mid C_1) - P(E \mid C_2) \| \le L \cdot d(C_1, C_2),$$

where $\| \cdot \|$ represents an appropriate norm (such as the $\ell_1$ norm or total variation distance) that measures the difference between the distributions $P(E \mid C_1)$ and $P(E \mid C_2)$. This condition guarantees that

small variations in circumstantial contexts C produce

only proportionally small variations in P(E|C). The

Lipschitz constant L thus provides a bound on the

degree of ethical priority change as the context shifts,

establishing a formalized level of stability. The

theorem’s significance lies in its ability to confirm

that the system’s ethical responses will remain robust

under minor contextual changes. By ensuring that

similar contexts yield consistent ethical prioritization,

we underpin the reliability of the system’s ethical

decision-making, thus contributing to predictable,

stable, and ethically sound behavior across varying

but comparable scenarios.
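As a minimal sketch of how the consistency condition could be checked for a pair of contexts, the Python snippet below compares the total variation distance between two prescript distributions with L · d(C1, C2). The softmax model of P(E|C), the weight matrix `W`, the Euclidean context metric, and the value of the Lipschitz constant are all illustrative assumptions; the framework itself does not prescribe them.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def prescript_distribution(context, weights):
    """Hypothetical model of P(E|C): softmax of linear scores, one weight row per prescript."""
    scores = [sum(w * c for w, c in zip(row, context)) for row in weights]
    return softmax(scores)

def context_distance(c1, c2):
    """Assumed metric d(C1, C2): Euclidean distance between context vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(c1, c2)))

def total_variation(p, q):
    """Total variation distance: half the L1 distance between two distributions."""
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))

def is_consistent(c1, c2, weights, lipschitz_constant):
    """Check ||P(E|C1) - P(E|C2)|| <= L * d(C1, C2) for one pair of contexts."""
    p1 = prescript_distribution(c1, weights)
    p2 = prescript_distribution(c2, weights)
    return total_variation(p1, p2) <= lipschitz_constant * context_distance(c1, c2)

# Illustrative weights for three prescripts over two contextual features.
W = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
C1 = [0.20, 0.70]
C2 = [0.21, 0.69]  # a nearly identical context

print(is_consistent(C1, C2, W, lipschitz_constant=1.0))
```

A pairwise check of this kind can only falsify a proposed constant L on sampled contexts; establishing the bound for all contexts still requires an analytical argument about the chosen model.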

ICAART 2025 - 17th International Conference on Agents and Artificial Intelligence


Theorem 2 (Decision Optimality): Optimal deci-

sions maximize expected utility through weighted

multi-objective optimization, where utility values are

derived from stakeholder-defined priorities or empirical data. This can be mathematically expressed as:

$$\text{Optimal Decision} = \arg\max_{D} \sum_{i=1}^{n} P(C_i) \cdot U(D, E_i),$$

where $U(D, E_i)$ denotes the utility of decision $D$ under ethical prescript $E_i$, and $P(C_i)$ represents the probability of circumstantial dictum $C_i$.

Here, we want the formulation to capture the prin-

ciple that the system’s decisions should reflect a ra-

tional prioritization of ethical objectives, with each

possible decision evaluated based on its alignment

with ethical prescripts under specific contextual con-

ditions. By calculating the expected utility of each

decision as a weighted sum of the utilities under var-

ious circumstantial factors, the system can select the

decision that provides the greatest alignment with eth-

ical principles in the given scenario. To frame this

mathematically, the expected utility of a decision $D$, denoted $\mathbb{E}[U(D)]$, is given by:

$$\mathbb{E}[U(D)] = \sum_{i=1}^{n} P(C_i) \cdot U(D, E_i).$$

Here, the term $P(C_i) \cdot U(D, E_i)$ represents the contribution of each circumstantial factor $C_i$ and its associated ethical prescript $E_i$ to the overall utility of

decision D. The optimization problem is thus to iden-

tify the decision D that maximizes this expected util-

ity, selecting the action with the highest ethical align-

ment.

To ensure an optimal solution for expected utility maximization, certain conditions are required. The utility function $U(D, E_i)$ must be continuous to avoid abrupt changes in utility due to small variations in decisions or context. Additionally, if the utility function is bounded, the optimization process remains well-defined, preventing infinite utility values. When the set of candidate decisions is finite, or compact with a continuous utility function, a maximizer is guaranteed to exist.

Achieving decision optimality involves several steps. First, the expected utility $\mathbb{E}[U(D)]$ of each candidate decision $D$ is formulated as a weighted sum of the utilities $U(D, E_i)$, with each weight $P(C_i)$ reflecting the relevance of the corresponding contextual element. Maximizing $\mathbb{E}[U(D)]$ is then posed as an optimization problem, which makes decision selection computationally tractable. Finally, verifying that conditions such as the continuity and boundedness of the utility function are met ensures that the solution is both practically feasible and theoretically sound, resulting in an optimal, ethically aligned decision. By maximizing expected utility, the system ensures that decisions reflect a balanced consideration of ethical priorities and context.
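The steps above can be sketched in a few lines of Python for the simplest case of a finite set of candidate decisions. The decision names, the probabilities P(C_i), and the utility values U(D, E_i) below are hypothetical numbers chosen only to illustrate the computation.

```python
def expected_utility(decision, context_probs, utilities):
    """E[U(D)] = sum_i P(C_i) * U(D, E_i) for one candidate decision."""
    return sum(p * u for p, u in zip(context_probs, utilities[decision]))

def optimal_decision(context_probs, utilities):
    """Return the decision with the highest expected utility (arg max over D)."""
    return max(utilities, key=lambda d: expected_utility(d, context_probs, utilities))

# Hypothetical probabilities P(C_1), P(C_2), P(C_3) of three circumstantial dicta.
context_probs = [0.6, 0.3, 0.1]

# Hypothetical utilities U(D, E_i), one row per decision, one column per prescript E_i.
utilities = {
    "brake": [0.9, 0.4, 0.2],
    "swerve": [0.5, 0.8, 0.6],
    "continue": [0.1, 0.3, 0.9],
}

for d in utilities:
    print(d, round(expected_utility(d, context_probs, utilities), 3))
print("optimal:", optimal_decision(context_probs, utilities))
```

With a finite decision set the arg max is found by enumeration, so existence of an optimum is immediate; continuous decision spaces would need a numerical optimizer and the continuity and boundedness conditions discussed above.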

Theorem 3 (Robustness Under Uncertainty): An

ethical decision-making system is robust if small vari-

ations in the probability distributions of circumstan-

tial dicta P(C) result in proportionately small changes

in the decision outcome F(D|C). This robustness can

be formally expressed as:

$$\forall \varepsilon > 0,\ \exists \delta > 0 \text{ such that if } |P(C) - P(C')| < \delta, \text{ then } |F(D \mid C) - F(D \mid C')| < \varepsilon,$$

where $P(C)$ and $P(C')$ denote the probability distributions over circumstantial dicta for two similar contexts, and $F(D \mid C)$ represents the decision function for a given decision $D$ given the context $C$.

This expression asserts that for any desired degree

of stability ε in the decision outcome, there exists a

threshold δ for changes in the probability distribution

of circumstantial factors. As long as changes in P(C)

remain within this δ threshold, the corresponding de-

cision F(D|C) will change by no more than ε.

To establish robustness under uncertainty, the ap-

proach includes several key steps. First, a sensitivity

analysis of the decision function F(D|C) is conducted

by introducing a small perturbation in the probability

distribution of circumstantial dicta P(C) and observ-

ing its effect on F(D|C). This step involves deriv-

ing mathematical bounds on the impact of changes

in P(C) on the decision outcome, which can often be

achieved through Lipschitz continuity (Rudin et al.,

1964; Boyd and Vandenberghe, 2004). A Lipschitz

condition would imply that there exists a constant K

such that:

$$|F(D \mid C) - F(D \mid C')| \le K \, |P(C) - P(C')|,$$

where K is a bound on the sensitivity of the decision

function with respect to changes in P(C). This con-

dition ensures that the decision function’s response to

contextual changes is proportional and does not ex-

ceed the threshold of stability.

In simpler terms, this theorem offers a formal

way to assess the system’s ability to remain stable

despite small changes in input data. By ensuring this

robustness, the system can handle minor variations

in context without affecting the reliability or con-

sistency of its ethical decision-making, maintaining

trustworthiness across similar but slightly different

situations.
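To make the sensitivity analysis concrete, the sketch below assumes that the decision function F(D|C) is the expected utility of a fixed decision D, so that F is linear in P(C). Under that assumption, the Lipschitz bound holds with K equal to the largest absolute utility when |P(C) − P(C′)| is measured in the L1 norm. The probability vectors and utilities are hypothetical.

```python
def decision_value(context_probs, utilities):
    """Assumed decision function F(D|C): expected utility of one fixed decision D."""
    return sum(p * u for p, u in zip(context_probs, utilities))

def l1_distance(p, q):
    """L1 distance between two probability vectors over circumstantial dicta."""
    return sum(abs(a - b) for a, b in zip(p, q))

def within_lipschitz_bound(p, p_perturbed, utilities, tolerance=1e-12):
    """Check |F(D|C) - F(D|C')| <= K * |P(C) - P(C')| with K = max_i |U(D, E_i)|."""
    k = max(abs(u) for u in utilities)
    change = abs(decision_value(p, utilities) - decision_value(p_perturbed, utilities))
    return change <= k * l1_distance(p, p_perturbed) + tolerance

# Hypothetical distribution P(C) and a slightly perturbed version P(C').
p = [0.60, 0.30, 0.10]
p_perturbed = [0.58, 0.31, 0.11]
utilities_for_d = [0.9, 0.4, 0.2]  # hypothetical U(D, E_i) for one decision D

print(within_lipschitz_bound(p, p_perturbed, utilities_for_d))
```

The bound follows from the inequality |Σ (p_i − p′_i) u_i| ≤ max_i |u_i| · Σ |p_i − p′_i|; decision functions that are not linear in P(C) would need their own sensitivity argument.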

Theorem 4 (Convergence of Ethical Decision-

Making): In a learning-based ethical decision-

making system, the system’s policy is said to con-

verge if, over time and repeated exposure to similar


contexts, the probability of selecting a particular de-

cision given certain circumstantial dicta becomes sta-

ble. This convergence is essential for systems that

learn from experience, as it ensures that with increas-

ing data, the system’s decisions reach a predictable

and consistent pattern, ultimately leading to stable,

ethically sound behavior.

The convergence property can be formally stated

as:

$$\lim_{t \to \infty} P(D_{t+1} = D \mid C_t = C) = 1,$$

where $D_t$ is the decision made at time $t$, $C_t$ represents the set of circumstantial dicta at time $t$, and $P(D_{t+1} = D \mid C_t = C)$ is the probability that the system

will continue to select decision D under the circum-

stances represented by C as time progresses.

This statement asserts that, as the system gains more experience with context C over time,

the probability of making a specific decision D given

C approaches certainty. In practical terms, this means

that the system “learns” from its experiences, even-

tually developing a stable and repeatable pattern of

ethical decisions in familiar contexts.

The concept of convergence in decision-making is

particularly relevant for systems that adapt based on

data, such as those employing reinforcement learning

or iterative improvement. Convergence implies that

the ethical decision-making policy becomes stable

and predictable over time, resulting in a system that

behaves reliably and in accordance with predefined

ethical standards. For instance, in an autonomous

vehicle system, this would mean that as the vehicle

encounters similar road scenarios repeatedly, its re-

sponses to those scenarios converge, leading to a con-

sistent pattern of decision-making aligned with ethi-

cal priorities like pedestrian safety. To demonstrate

convergence, the strategy generally involves model-

ing the learning dynamics as a stochastic process or

Markov decision process (MDP), where the probabil-

ity distribution over decisions given a set of circum-

stantial factors evolves over time. Proving conver-

gence often requires showing that the policy updates

diminish over time, for instance by using a decreas-

ing learning rate in reinforcement learning, ensuring

that the updates become smaller as more data accu-

mulates. Additionally, fixed-point theory (Hadzic and

Pap, 2013) may be applied to identify stable points in

the learning process—decisions that do not change as

the system iterates through similar scenarios.

Establishing convergence involves several key

steps. First, the system’s learning dynamics are

modeled as a dynamical system, where decision

policy updates are influenced by past outcomes and

accumulated experiences. This allows the system’s

behavior to be analyzed as it adapts. Next, stability

is demonstrated using tools from dynamical systems

and probability theory, showing that policy adjust-

ments decrease over time, signaling convergence to

a stable state. Finally, limit behavior is proven by

showing that, in a given context, the probability of

selecting a particular decision stabilizes, ensuring

consistent adherence to ethical principles in recurring

scenarios.
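A toy illustration of this convergence argument is sketched below. It tracks the probability of selecting decision D in a fixed context C under the expected (mean-field) update of a two-action linear reward-inaction learner, with an assumed step size of 1/√t. The reward values, the step-size schedule, and the two-action setting are illustrative assumptions rather than part of the framework.

```python
import math

def simulate_policy(reward_d, reward_alt, steps, p_initial=0.5):
    """Track P(D_t = D | C) under an expected reward-driven update.

    reward_d and reward_alt are assumed success probabilities in [0, 1]
    for decision D and for the alternative decision, respectively.
    """
    p = p_initial
    history = [p]
    for t in range(1, steps + 1):
        learning_rate = 1.0 / math.sqrt(t)  # decreasing learning rate (assumed schedule)
        # Expected change of a two-action linear reward-inaction learner.
        p += learning_rate * p * (1.0 - p) * (reward_d - reward_alt)
        history.append(p)
    return history

history = simulate_policy(reward_d=0.9, reward_alt=0.4, steps=400)
updates = [b - a for a, b in zip(history, history[1:])]

print("final probability of D:", history[-1])
print("first update:", updates[0], "last update:", updates[-1])
```

In this sketch the policy updates shrink as the probability of D approaches one, which is the qualitative behavior the limit statement describes; a stochastic learner would additionally require a probabilistic convergence argument.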

Theorem 5 (Alignment with Human Ethical Judg-

ments): An ethical decision-making model achieves

alignment with human ethical judgments when the

system’s decisions correspond closely to human de-

cisions in similar contexts. Formally, this alignment

is measured by the correlation between the model’s

decisions, $D_{\text{model}}$, and human decisions, $D_{\text{human}}$, expressed as:

$$\mathrm{Corr}(D_{\text{model}}, D_{\text{human}}) > \theta,$$

where $\mathrm{Corr}$ represents a correlation function that measures the degree of similarity between the decisions made by the model and those made by humans, and $\theta$ is a predefined threshold indicating acceptable alignment.

Here, we suggest that an ethical AI system’s de-

cisions should mirror human ethical reasoning to a

significant degree, particularly in complex or morally

ambiguous situations. Achieving this alignment en-

sures that the system’s ethical reasoning aligns with

commonly accepted moral standards, enhancing its

social acceptability and trustworthiness.

Alignment with human judgments in an ethical AI

system is achieved through a blend of empirical data

collection and statistical evaluation. First, data on hu-

man decisions is gathered across a variety of scenarios

and contexts that the model is likely to encounter, al-

lowing for a direct comparison between the model’s

choices and human decisions. This comparison in-

volves evaluating the model’s responses in each sce-

nario relative to those made by human subjects, estab-

lishing a basis for assessing alignment. Next, statisti-

cal methods are employed to calculate the correlation

between the model’s decisions and human decisions,

providing a quantitative measure of alignment. The

strength of this correlation serves as an indicator of

how closely the model’s ethical reasoning mirrors that

of humans. Finally, the correlation is assessed against

a predefined threshold θ, with high correlation values

indicating strong alignment. If the correlation falls

below this threshold, it suggests that the model may

require adjustments to better align with human ethi-

cal standards. By aligning its decisions with human

judgments, the model gains a measure of validation


against human moral reasoning, which is crucial for

building ethical AI systems that are consistent with

societal expectations and norms.
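The evaluation procedure described above can be sketched as follows, assuming that decisions are encoded as numeric acceptability scores and that Corr is the Pearson correlation coefficient. Both choices are assumptions made for illustration, as are the scores and the threshold value; rank-based or agreement-based measures may suit categorical decisions better.

```python
import math

def pearson_correlation(xs, ys):
    """Pearson correlation between two equally long sequences of scores."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant sequences")
    return cov / math.sqrt(var_x * var_y)

def is_aligned(model_decisions, human_decisions, theta):
    """Check Corr(D_model, D_human) > theta for a predefined threshold theta."""
    return pearson_correlation(model_decisions, human_decisions) > theta

# Hypothetical acceptability scores (0 to 1) for the same eight scenarios.
model_scores = [0.9, 0.2, 0.7, 0.4, 0.8, 0.1, 0.6, 0.3]
human_scores = [0.8, 0.3, 0.6, 0.5, 0.9, 0.2, 0.7, 0.2]

print(round(pearson_correlation(model_scores, human_scores), 3))
print(is_aligned(model_scores, human_scores, theta=0.8))
```

A single correlation value summarizes agreement across all scenarios, so it can hide systematic disagreement on a small subset of cases; inspecting per-scenario differences alongside the threshold test is a reasonable complement.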

4 DISCUSSION

The central theme of our work so far has been the

use of circumstantial dicta, ethical prescripts, and in-

termediate representations (IRs) to deconstruct com-

plex ethical dilemmas into manageable subgoals, al-

lowing for adaptability in changing contexts while

maintaining alignment with core ethical principles.

However, practical implementation introduces chal-

lenges, particularly in quantifying ethical priorities

in real-time, stabilizing decision-making in variable

contexts, and addressing diverse real-world scenarios.

Ethical dilemmas, such as those embodied in the clas-

sic trolley problem, illustrate the difficulty of translat-

ing philosophical reasoning into computational pro-

cesses. These dilemmas are shaped by cultural, soci-

etal, and historical constructs, often involving mul-

tiple potential equilibria. Computational systems

must navigate these moral ambiguities while address-

ing the trade-offs inherent in real-world decision-

making. The challenge lies in ensuring that the un-

derlying ethical reasoning framework remains consis-

tent across various contexts while acknowledging that

moral judgments often hinge on subjective, culturally

influenced factors.

Advancing ethical reasoning systems necessitates

drawing from interdisciplinary insights, particularly

from the social sciences. Trust, agent relationships,

and affective dimensions play pivotal roles in shaping

ethical cognition, especially in multi-agent systems

(Etzioni and Etzioni, 2017). Misalignments in ethi-

cal reasoning between agents can erode trust and hin-

der collective decision-making, a particularly com-

plex issue in scenarios with conflicting ethical goals

or shared ethical objectives. Understanding the dy-

namics of individual agent behavior within collective

decision-making processes is essential for building

systems that can navigate such complexities. Game

theory offers tools for analyzing collective action and

ethical decision-making at the group level (Ostrom,

2000; Coleman, 2017). Concepts like correlated

equilibria and coordination games provide valuable

frameworks for understanding how individual ethical

judgments aggregate into collective moral behavior,

especially in multi-agent systems where cooperation

or competition is critical.

Incorporating human affective dimensions re-

mains a challenge in computational models but is

crucial for aligning AI systems with human values.

Insights from neuroeconomics, social sciences, and

affective neuroscience provide avenues for integrat-

ing emotion-driven, normative aspects into AI sys-

tems (Guzak, 2014). Embedding these affective el-

ements enhances the alignment of AI systems with

human values, fostering adaptability in complex, real-

world scenarios. Additionally, meta-ethical consid-

erations, such as the nature of moral principles and

their justification, play an essential role in guiding AI

design (Hagendorff, 2020). Should AI systems fol-

low universal ethical principles, or should they adapt

to culturally specific moral frameworks? Balancing

these approaches ensures that AI systems respect di-

verse moral values while maintaining consistency in

decision-making.

Intermediate representations are pivotal for en-

abling hierarchical reasoning, which allows ethical

decision-making to span multiple levels of abstrac-

tion. By decomposing overarching objectives into ac-

tionable subgoals, this framework offers a modular

approach to managing ethical complexity. However,

applying hierarchical reasoning within probabilistic

and non-deterministic systems remains an underex-

plored area that demands rigorous empirical testing to

ensure practical applicability. The challenge of align-

ing system outputs with human ethical judgments per-

sists, especially in probabilistic settings where the

uncertainty of outcomes needs to be accounted for.

Although probabilistic reasoning formalizes ethical

principles, aligning these with human moral stan-

dards remains an ongoing challenge. Defining bench-

marks, gathering diverse datasets, and setting align-

ment thresholds are necessary steps for progress. In

particular, reinforcement learning, while valuable for

optimizing decision-making, carries risks of ethical

drift if not carefully monitored. Ensuring that reward

functions remain aligned with ethical goals and that

updates to policies reflect human ethical intuitions is

a critical concern.

Bridging the gap between theoretical frameworks

and practical applications requires substantial empir-

ical testing. Validation through simulations, such

as autonomous driving scenarios, will benchmark

the system’s ethical decision-making against human

judgments, helping to identify discrepancies and re-

fine the framework. Adherence to current stan-

dards like IEEE P7001 ensures that the system aligns

with ethical protocols recognized in the field of au-

tonomous systems (Winfield et al., 2021), which con-

tributes to the robustness and practical applicability

of the proposed framework. Observing emergent be-

haviors through simulations, such as those inspired

by the Ethics microworld simulator (Kavathatzopou-

los et al., 2007), provides critical insights into soci-


etal implications. Social simulation frameworks, like

those used for studying multi-agent ethical systems

(Ghorbani et al., 2013; López-Paredes et al., 2012;

Mercuur et al., 2019), offer valuable methodologies

for exploring how individual moral decisions aggre-

gate into collective behavior. These frameworks are

instrumental in studying complex multi-agent interac-

tions and the ethical dilemmas that emerge from them.

By integrating interdisciplinary insights, struc-

tured reasoning, and probabilistic modeling, this work

lays out a foundational framework for the develop-

ment of adaptive, ethically aligned AI systems. How-

ever, realizing this vision will require iterative re-

finement and extensive empirical validation. Ad-

ditionally, continued engagement with philosophical

and social dimensions is necessary to address the

inherent complexities of real-world moral decision-

making. The challenges associated with context-

dependent values, the quantification of utility, and

the management of interrelated ethical prescriptions

all point to the need for more comprehensive mecha-

nisms to handle dynamic and uncertain ethical scenar-

ios. Only through such comprehensive efforts can we

build AI systems capable of navigating the complex-

ities of moral decision-making in diverse, real-world

settings.

5 CONCLUSION

This paper introduces the necessary components

for developing computational ethics frameworks,

focusing on the integration of probabilistic, non-

deterministic, and context-sensitive models. While

the approach lays a strong foundation, challenges re-

main in building adaptive systems capable of navigat-

ing the complexities of real-world ethical dilemmas.

Moving forward, further refinement, empirical test-

ing, and cross-disciplinary collaboration are needed

to ensure these systems can be practically deployed

in diverse, unpredictable environments.

REFERENCES

Anderson, M., Anderson, S., and Armen, C. (2005). To-

wards machine ethics : Implementing two action-

based ethical theories. In AAAI Fall Symposium.

Anderson, M., Anderson, S., and Armen, C. (2006). An ap-

proach to computing ethics. IEEE Intelligent Systems,

21(4):56–63.

Andrade, G. (2019). Medical ethics and the trolley problem.

Journal of Medical Ethics and History of Medicine,

12.

Ariely, D. (2001). Seeing sets: Representation by statistical

properties. Psychological Science, 12(2):157–162.

Awad, E., Levine, S., Anderson, M., Anderson, S. L.,

Conitzer, V., Crockett, M., Everett, J. A., Evgeniou,

T., Gopnik, A., Jamison, J. C., Kim, T. W., Liao,

S. M., Meyer, M. N., Mikhail, J., Opoku-Agyemang,

K., Borg, J. S., Schroeder, J., Sinnott-Armstrong,

W., Slavkovik, M., and Tenenbaum, J. B. (2022).

Computational ethics. Trends in Cognitive Sciences,

26(5):388–405.

Beckers, S., Chockler, H., and Halpern, J. (2022). A causal

analysis of harm. Advances in Neural Information

Processing Systems, 35:2365–2376.

Beckers, S., Chockler, H., and Halpern, J. (2023). Quan-

tifying harm. In Proceedings of the 32nd Interna-

tional Joint Conference on Artificial Intelligence (IJ-

CAI 2023).

Belle, V. (2020). Symbolic Logic Meets Machine Learning: A Brief Survey in Infinite Domains. In Davis, J. and Tabia, K., editors, Scalable Uncertainty Management, volume 12322 of Lecture Notes in Computer Science, pages 3–16. Springer International Publishing, Cham.

Belle, V. (2023). Knowledge representation and acquisition

for ethical AI: challenges and opportunities. Ethics

and Information Technology, 25(1):22.

Belle, V. and Levesque, H. (2015). ALLEGRO: Belief-

Based Programming in Stochastic Dynamical Do-

mains. In Proceedings of the Twenty-Fourth Interna-

tional Joint Conference on Artificial Intelligence (IJ-

CAI 2015).

Bentham, J. (2003). An introduction to the principles of

morals and legislation (chapters i–v). In Utilitarian-

ism and on Liberty, pages 17–51. Blackwell Publish-

ing Ltd.

Bonnefon, J.-F., Shariff, A., and Rahwan, I. (2016). The

social dilemma of autonomous vehicles. Science,

352(6293):1573–1576.

Boyd, S. and Vandenberghe, L. (2004). Convex optimiza-

tion. Cambridge university press.

Coello, C. C. (2006). Evolutionary multi-objective opti-

mization: a historical view of the field. IEEE com-

putational intelligence magazine, 1(1):28–36.

Coleman, J. (2017). The mathematics of collective action.

Routledge.

Dennis, L., Fisher, M., Slavkovik, M., and Webster, M.

(2016a). Formal verification of ethical choices in au-

tonomous systems. Robotics and Autonomous Sys-

tems, 77:1–14.

Dennis, L. A., Fisher, M., Lincoln, N. K., Lisitsa, A.,

and Veres, S. M. (2016b). Practical verification of

decision-making in agent-based autonomous systems.

Automated Software Engineering, 23(3):305–359.

Etzioni, A. and Etzioni, O. (2017). Incorporating Ethics

into Artificial Intelligence. The Journal of Ethics,

21(4):403–418.

Foot, P. (1967). The problem of abortion and the doctrine

of double effect. Oxford, 5:5–15.


Ganascia, J.-G. (2007). Modelling ethical rules of lying

with Answer Set Programming. Ethics and Informa-

tion Technology, 9(1):39–47.

Ghaderi, H., Levesque, H., and Lespérance, Y. (2007). Towards a logical theory of coordination and joint ability. In Proceedings of the 6th international joint conference on Autonomous agents and multiagent systems, AAMAS ’07, pages 1–3, New York, NY, USA. Association for Computing Machinery.

Ghorbani, A., Dignum, V., Bots, P., and Dijkema, G. (2013).

Maia: a framework for developing agent-based social

simulations. JASSS-The Journal of Artificial Societies

and Social Simulation, 16(2):9.

Gilpin, L. H., Bau, D., Yuan, B. Z., Bajwa, A., Specter,

M. A., and Kagal, L. (2018). Explaining explanations:

An overview of interpretability of machine learning.

In 2018 IEEE 5th International Conference on Data

Science and Advanced Analytics (DSAA), pages 80–

89.

Goodall, N. J. (2014). Machine ethics and automated vehi-

cles. Road vehicle automation, pages 93–102.

Guzak, J. R. (2014). Affect in ethical decision making:

Mood matters. Ethics & Behavior, 25(5):386–399.

Hadzic, O. and Pap, E. (2013). Fixed point theory in prob-

abilistic metric spaces, volume 536. Springer Science

& Business Media.

Hagendorff, T. (2020). The ethics of ai ethics: An evalu-

ation of guidelines. Minds and machines, 30(1):99–

120.

Islam, R., Keya, K. N., Pan, S., Sarwate, A. D., and Foulds, J. R. (2023). Differential Fairness: An Intersectional Framework for Fair AI. Entropy, 25(4):660.

Kavathatzopoulos, I., Laaksoharju, M., and Rick, C. (2007).

Simulation and support in ethical decision making.

Globalisation: Bridging the global nature of Infor-

mation and Communication Technology and the local

nature of human beings, pages 278–287.

Keeney, R. L. (1993). Decisions with multiple objectives:

Preferences and value tradeoffs. Cambridge univer-

sity press.

Kleiman-Weiner, M., Saxe, R., and Tenenbaum, J. B.

(2017). Learning a commonsense moral theory. Cog-

nition, 167:107–123.

Koller, D. (2009). Probabilistic Graphical Models: Princi-

ples and Techniques. The MIT Press.

Krarup, B., Lindner, F., Krivic, S., and Long, D. (2022).

Understanding a robot’s guiding ethical principles via

automatically generated explanations. In 2022 IEEE

18th International Conference on Automation Science

and Engineering (CASE), pages 627–632, Mexico

City. IEEE Xplore.

Levesque, H. J. (1986). Knowledge representation and

reasoning. Annual Review of Computer Science,

1(1):255–287.

Lockhart, T. (2000). Moral uncertainty and its conse-

quences. Oxford University Press, New York.

López-Paredes, A., Edmonds, B., and Klugl, F. (2012). Agent based simulation of complex social systems.

Mercuur, R., Dignum, V., and Jonker, C. (2019). The value

of values and norms in social simulation. Journal of

Artificial Societies and Social Simulation, 22(1):1–9.

Moor, J. H. (1995). Is ethics computable? Metaphilosophy,

26(1/2):1–21.

Moor, J. H. (2006). The nature, importance, and diffi-

culty of machine ethics. IEEE Intelligent Systems,

21(4):18–21.

Ostrom, E. (2000). Collective action and the evolution

of social norms. Journal of economic perspectives,

14(3):137–158.

Pearl, J. (2014). Probabilistic reasoning in intelligent sys-

tems: networks of plausible inference. Elsevier.

Rahman, M. M., Pan, S., and Foulds, J. R. (2024). Towards

A Unifying Human-Centered AI Fairness Framework.

In Proceedings of the 2024 International Conference

on Information Technology for Social Good, GoodIT

’24, pages 88–92, New York, NY, USA. Association

for Computing Machinery.

Ross, D. (2002). The Right and the Good. Oxford Univer-

sity Press.

Rudin, W. et al. (1964). Principles of mathematical analy-

sis, volume 3. McGraw-hill New York.

Russell, S. J. and Norvig, P. (2016). Artificial intelligence:

a modern approach. Pearson.

Ruvinsky, A. I. (2007). Computational ethics. In Encyclo-

pedia of Information Ethics and Security, pages 76–

82. IGI Global.

Sanner, S. and Kersting, K. (2010). Symbolic Dynamic Programming for First-order POMDPs. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 24, pages 1140–1146.

Segun, S. T. (2020). From machine ethics to computational

ethics. AI & SOCIETY.

Strehl, A. and Ghosh, J. (2002). Cluster ensembles: A knowledge reuse framework for combining partitionings. In Dechter, R., Kearns, M. J., and Sutton, R. S.,

editors, Proceedings of the Eighteenth National Con-

ference on Artificial Intelligence and Fourteenth Con-

ference on Innovative Applications of Artificial Intel-

ligence, July 28 - August 1, 2002, Edmonton, Alberta,

Canada, pages 93–99. AAAI Press / The MIT Press.

Von Neumann, J. and Morgenstern, O. (2007). Theory of

games and economic behavior: 60th anniversary com-

memorative edition. In Theory of games and economic

behavior. Princeton university press.

Winfield, A. F., Booth, S., Dennis, L. A., Egawa, T., Hastie, H., Jacobs, N., Muttram, R. I., Olszewska, J. I., Rajabiyazdi, F., Theodorou, A., et al. (2021). IEEE P7001: A proposed standard on transparency. Frontiers in Robotics and AI, 8:665729.

Zhang, J., Shu, Y., and Yu, H. (2023). Fairness in Design: A Framework for Facilitating Ethical Artificial Intelligence Designs. International Journal of Crowd Science, 7(1):32–39.
