Hierarchical Decomposition Framework

for Steiner Tree Packing Problem

Hanbum Ko

1,∗ a

, Minu Kim

2,∗ b

, Han-Seul Jeong

3 c

, Sunghoon Hong

3 d

, Deunsol Yoon

3 e

,

Youngjoon Park

3 f

, Woohyung Lim

3 g

, Honglak Lee

3 h

, Moontae Lee

3 i

, Kanghoon Lee

3 j

,

Sungbin Lim

4 k

and Sungryull Sohn

3 l

1

Department of Artificial Intelligence, Korea University, Korea

2

Kim Jaechul Graduate School of AI, KAIST, Korea

3

LG AI Research, U.S.A.

4

Department of Statistics, Korea University, Korea

Keywords:

Hierarchical Reinforcement Learning, Neural Combinatorial Optimization, Steiner Tree Packing Problem.

Abstract:

In this paper, we address the complex combinatorial optimization (CO) challenge of efficiently connecting

objects at minimal cost, specifically within the context of the Steiner Tree Packing Problem (STPP). Tradi-

tional methods often involve subdividing the problem into multiple Steiner Tree Problems (STP) and solving

them in parallel. However, this approach can fail to provide feasible solutions. To overcome this limitation,

we introduce a novel hierarchical combinatorial optimizer (HCO) that applies an iterative process of dividing

and solving sub-problems. HCO reduces the search space and boosts the chances of getting feasible solutions.

This paper proposes for the first time a learning-based approaches to address STPP, introducing an iterative

decomposition method, HCO. Our experiments demonstrate that HCO outperforms existing learning-based

methods in terms of feasibility and the quality of solutions, and showing better training efficiency and gener-

alization performance than previous learning-based methods.

1 INTRODUCTION

The challenge of optimizing connections at minimal

cost is crucial across various domains. In logistics and

transportation networks, efficient route design is cru-

cial for connecting warehouses, markets, and facto-

a

https://orcid.org/0000-0002-2601-0974

b

https://orcid.org/0000-0002-8126-4387

c

https://orcid.org/0000-0002-3525-8699

d

https://orcid.org/0000-0002-6745-5005

e

https://orcid.org/0000-0002-1069-112X

f

https://orcid.org/0000-0002-3950-3065

g

https://orcid.org/0000-0003-0525-9065

h

https://orcid.org/0000-0002-4109-327X

i

https://orcid.org/0000-0001-5542-3463

j

https://orcid.org/0000-0002-2077-7146

k

https://orcid.org/0000-0003-2684-2022

l

https://orcid.org/0000-0001-7733-4293

∗

This work was conducted during an internship at LG

AI Research.

ries. Urban planning requires the cost-effective link-

age of infrastructure, while chip design demands the

minimal-cost connection of electronic components to

enhance data movement and signal stability. De-

termining the optimal configuration from numerous

possibilities is a complex combinatorial optimization

(CO) problem, often referred to as the Steiner Tree

Packing Problem (STPP). STPP aims to identify a set

of minimum-cost edges that connect specific terminal

nodes without overlap, and it can be decomposed into

simpler Steiner Tree Problems (STP) (Karp, 1972),

where the objective is to connect all given terminal

nodes with the least number of edges.

The approach of dividing the CO problem into

multiple sub-problems and solving the divided sub-

problems in parallel—before even solving the first

sub-problem—has seen numerous attempts in various

problems such as the vehicle routing problem (VRP)

and the traveling salesman problem (TSP) (Nowak-

Vila et al.; Hou et al.; Fu et al.; Ye et al.). This

Ko, H., Kim, M., Jeong, H.-S., Hong, S., Yoon, D., Park, Y., Lim, W., Lee, H., Lee, M., Lee, K., Lim, S. and Sohn, S.

Hierarchical Decomposition Framework for Steiner Tree Packing Problem.

DOI: 10.5220/0013317800003893

In Proceedings of the 14th International Conference on Operations Research and Enterprise Systems (ICORES 2025), pages 165-176

ISBN: 978-989-758-732-0; ISSN: 2184-4372

Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)

165

separation of concerns, which involves splitting the

model into one that only divides the problem and

another that solves the divided problems, enhances

time efficiency by solving the problems in parallel.

The STPP is another CO problem that can be divided

into multiple sub-problems (i.e., STP). However, this

method significantly increases the risk of not obtain-

ing a feasible solution. To address this disadvan-

tage, we propose a hierarchical combinatorial opti-

mizer (HCO) that iteratively processes the dividing

and solving stages. An example of this is shown in

Figure 1. The Two-stage Divide Method (TAM) (Hou

et al., 2022) involves dividing all sub-problems before

solving any of them, whereas the HCO represents a

case where the process of dividing and solving sub-

problems is done iteratively. In the leftmost graph,

which is an STPP instance, circular nodes represent

normal nodes, while square nodes represent terminal

nodes. The different colors of the square nodes in-

dicate different types of terminal nodes, and the ob-

jective of STPP is to connect terminal nodes of the

same type with minimal cost. The main issue with ap-

plying the TAM to solve the STPP is that it assumes

all nodes of a specific color (apricot, in this case) are

selected before choosing the next sub-problem to di-

vide. This leads to a scenario where green nodes may

not have the opportunity to connect with the only fea-

sible node. Conversely, the HCO, by re-evaluating the

solution of the divided STP problem, creates the pos-

sibility to connect the green terminal nodes.

We refer to problems where a feasible solution

may not emerge as feasibility-hard problems. In this

study, we propose the HCO method to more effec-

tively address these challenges. Additionally, we

mathematically demonstrate that HCO can reduce the

search space through latent mapping to a smaller so-

lution space. We argue that HCO has the following

advantages: First, as observed in the examples in Fig-

ure 1, it can more effectively find feasible solutions.

Second, by reducing the search space, it alleviates

the burden on models that must consider both the ob-

jective and feasibility of the CO problem simultane-

ously. Third, it benefits from time efficiency by utiliz-

ing accurate and fast models that solve sub-problems

well. Lastly, the model that divides a large prob-

lem into smaller ones reduces the distribution shift

issue by partitioning the graph. This ensures that sub-

problems only see problems of similar size STP, thus

offering advantages in generalization.

In this paper, we detail the Markov decision prob-

lem (MDP) formulation for finding solutions to CO

problems and introduce the application of this formu-

lation to the STPP. We then describe how we apply

this formulation to solve problems using a hierarchi-

cal policy involving high-level and low-level policies.

Our experiments demonstrate that the HCO, defined

in this manner, more successfully finds feasible so-

lutions and produces higher quality solutions com-

pared to the method of dividing and solving the prob-

lem at once (TAM) and the method of solving STPP

at once without hierarchical decomposition (Khalil

et al.; Kool et al.).

Our contributions are summarized as follows:

• To our knowledge, this is the first work to solve

Steiner tree packing problem using an end-to-end

learning framework.

• We propose a novel decomposition approach for

general CO problems that results in sub-problems

with smaller search spaces.

• We demonstrate that HCO is advantageous over

other methods in terms of finding feasible solu-

tions and the optimal gap of the found solutions.

Additionally, HCO exhibits the ability to general-

ize to larger problem sizes.

2 RELATED WORKS

Combinatorial Optimization with Feasibility-

Hard Constraints. There have been few attempts

to directly tackle CO problems with feasibility-hard

constraints using RL. Ma et al. (2021) proposed

learning two separate RL models, with each model

respectively solving the constraint satisfaction and

objective optimization problems. Cappart et al.

(2021) manually shaped the reward to bias the RL

process toward predicting feasible solutions and

combined this approach with constraint programming

methods to guarantee the feasibility of the solutions.

Our work indirectly tackles the feasibility-hard

constraint by decomposing the given constraint

satisfaction problem into two easier sub-problems

with smaller problem size and search space, allowing

the learning algorithm to efficiently solve each

sub-problem.

Decomposition of Combinatorial Optimization

Problem. Several decomposition methodologies

have been introduced to address a variety of CO chal-

lenges. Nowak-Vila et al. (2018) introduced a Divide-

and-Conquer (DnC) framework focusing on scale-

invariant problems, which assumes the problem can

be split into sub-problems, solved independently, and

subsequently merged to form a full solution. Hou

et al., Fu et al. and Ye et al. proposed the domain-

specific approaches to divide problems and merge

partial solutions (via heatmap and MCTS) for VRP

ICORES 2025 - 14th International Conference on Operations Research and Enterprise Systems

166

TAM

Infeasible solution

HCO!

(Ours)

1

1

2

2

1

1

2

2

1

1

2

2

1

1

2

2

1

1

2

2

Feasible solution

Solve subproblem

: Subproblem

,

: Solution

,

: Normal node

,

: Terminal node

: Subproblem overlap

(1) (2)

(3)

(1) (2)

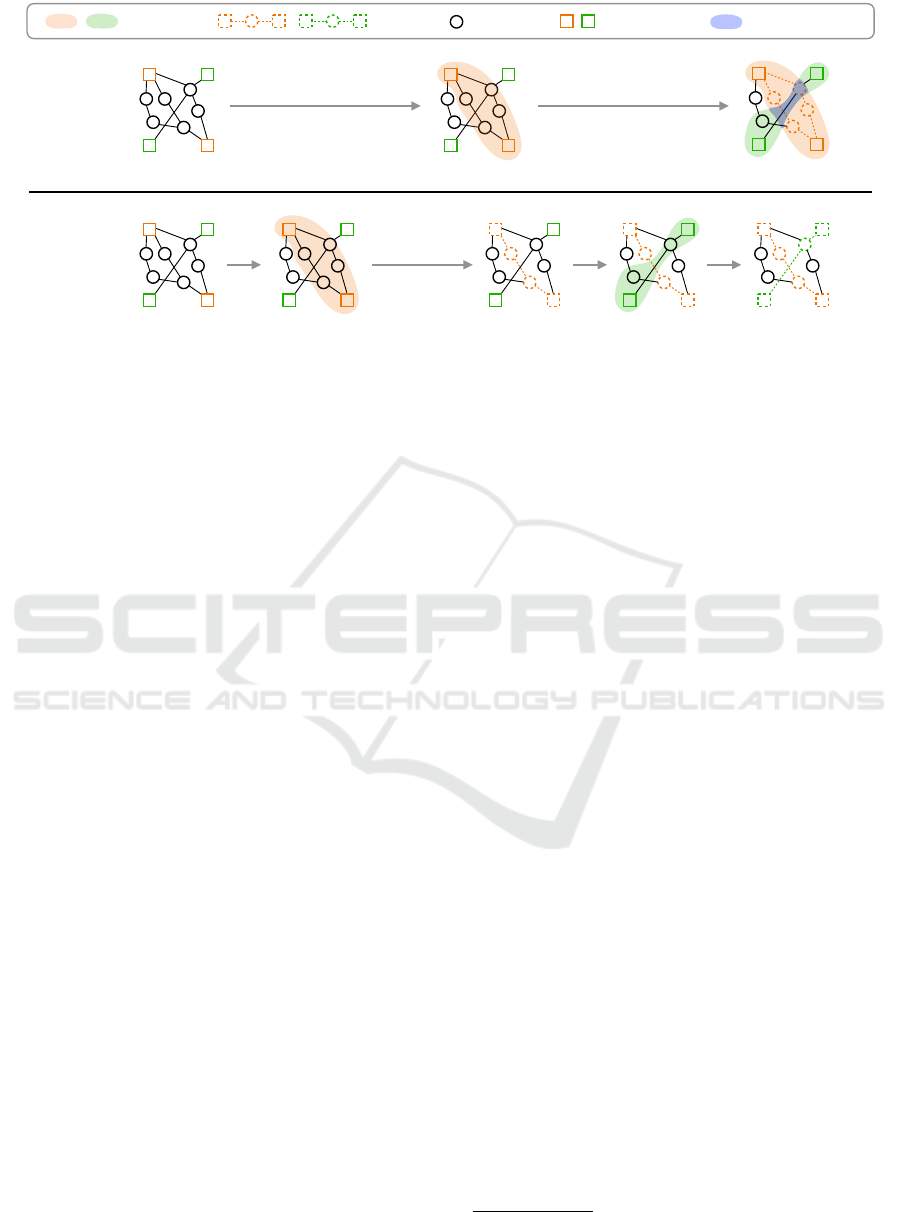

Figure 1: Comparison of two decomposition methods in the Steiner tree packing problem (STPP). (Top) TAM divides the

problem into subproblems all at once, allowing overlapping vertices between them. The entire solution may be infeasible if

the solutions of subproblems overlap. (Bottom) HCO, on the other hand, solves the problem iteratively by: 1) selecting a

sub-graph to define a subproblem, 2) solving the identified subproblem, 3) reflecting its solution, and repeating this process

until the entire problem is solved. Since HCO defines each subproblem by considering all previous solutions, it can more

effectively find a feasible solution compared to TAM.

and TSP, respectively. Despite their utility, the ap-

plicability of such DnC-based methods is limited by

their reliance on scale invariance, often leading to in-

feasible solutions when this assumption is violated.

In a different vein, local search-based methods by

Song et al. and Li et al. require an initial solution

to iteratively refine decision variables, which is a no-

table constraint. Wang et al. (2021) further inves-

tigated a bi-level formulation, where the high-level

problem modifies the given problem instance and the

low-level problem solves the modified instance, to

ease problem solving. However, this occasionally re-

sulted in more complex instances (e.g., a feasible so-

lution may not exist) and suboptimal solutions. Our

algorithm HCO also adopts the bi-level formulation

but sidesteps these issues by preserving the original

problem structure.

3 PRELIMINARIES

3.1 Combinatorial Optimization as

Markov Decision Process

Combinatorial optimization (CO) is a mathematical

optimization over a finite set, with a discrete feasible

solution space. Formally, a combinatorial optimiza-

tion problem can be written as follows.

argmin

x

x

x∈X

{f (x

x

x) : x

x

x ∈F } (1)

where X is a finite support for the variable x

x

x, F ⊂ X

is a set of feasible solutions

1

, and f : X → R is an

objective function of the CO problem. For instance,

a mixed integer linear programming (MILP) problem

with n variables and m constraints can be written in

the form

argmin

x

x

x∈Z

p

×R

n−p

{c

c

c

⊤

x

x

x : A

A

Ax

x

x ≤b

b

b, x

x

x ≥0

0

0} (2)

where A

A

A ∈ R

n×m

, b

b

b ∈ R

m

, and c

c

c ∈ R

n

.

Most of the CO problems can be formulated as a

Markov Decision Process (MDP) Khalil et al.; Gasse

et al.. Formally, it makes two assumptions to the CO

problem: 1) the solution space X of the original prob-

lem (1) is a finite vector space and 2) the objective f

is linear on X , so that for any given decomposition

of X into direct sum of subspaces X = X

1

⊕···⊕X

n

,

we have f (x

x

x) =

∑

n

i=1

f (x

x

x

i

) for each x

x

x

i

∈X

i

. Then, the

original problem (1) can be written as the following

sequential decision making problem:

argmin

x

x

x

t

∈X

t

, ∀t=1,···,H

X=X

1

⊕···⊕X

H

{

H

∑

t=1

f (x

x

x

t

) :

H

∑

t=1

x

x

x

t

∈ F }. (3)

The sequential decision can be thought of choos-

ing for each timestep t an action x

x

x

t

∈ X

t

, to receive

a reward R (s

t

,a

t

) = −f (a

t

) and a large negative

penalty c ≤ −sup

x∈X

f (x) if and only if any future

choice of action inevitably leads to an infeasible so-

lution at the end of the horizon. The optimal policy

π

∗

∈ Π for the original problem can be found upon

maximizing the expected return.

1

F is either discrete itself or can be reduced to a discrete

set.

Hierarchical Decomposition Framework for Steiner Tree Packing Problem

167

π

∗

= argmax

π∈Π

E

π

h

∑

H

t=1

R (s

t

,a

t

) | s

0

i

. (4)

We defer the rest of the details to Appendix. Note

that for some CO problems (e.g., TSP, MVC, Max-

Cut), carefully designing the action space can make

the constraint trivially satisfied (Khalil et al., 2017),

where in this case, reinforcement learning algorithm

can efficiently solve the problem. However, when

the constraint satisfaction is not guaranteed, the re-

inforcement learning methods often suffer from the

sparse reward problem, and does not learn efficiently.

In this work, we focus on the challenging CO prob-

lems, where designing action space cannot guarantee

the constraint satisfaction (i.e., feasibility-hard con-

straint): the Steiner tree packing problem.

3.2 Steiner Tree Packing Problem

A Steiner Tree Problem (STP) can be thought of as a

generalization of a minimum spanning tree problem,

where given a weighted graph and a subset of its ver-

tices (called terminals), one aims to find a tree (called

a Steiner tree) that spans all terminals (but not nec-

essarily all nodes) with minimum weights. Although

minimum spanning tree problem can be solved within

polynomial time, the Steiner tree problem itself is

a NP-complete combinatorial problem (Karp, 1972).

Formally, let G = (V,E) be an undirected weighted

graph, w

e

for e ∈ E its edge weights, and T ⊂ V be

the terminals. Then, a Steiner tree S is a tree that

spans T such that its edge weight is minimal. Hence,

the optimization problem for STP can be written as

follows.

argmin

x

x

x∈2

E

{

∑

e∈x

x

x

w

e

: x

x

x ∈Σ

T

} (5)

where 2

E

is a power set of E, and Σ

T

is a set of all

Steiner trees that span T . A more generalized version

of the above Steiner tree problem is called the Steiner

Tree Packing Problem, (STPP) where one has a col-

lection T of N disjoint non-empty sets T

1

,··· ,T

N

of

terminals called nets, that has to be packed with dis-

joint Steiner trees S

1

,··· ,S

N

spanning each of the nets

T

1

,··· ,T

N

. The optimization problem for STPP can

be written similarly, with N variables.

argmin

x

x

x

1

,···,x

x

x

N

∈2

E

{

∑

n≤N

e∈x

x

x

n

w

e

: x

x

x

n

∈Σ

T

n

, G[x

x

x

n

] ∩G[x

x

x

m

]=

/

0

∀n,m

} (6)

where G[x

x

x] is a subgraph of G generated by x

x

x ⊂E.

4 BI-LEVEL DECOMPOSITION

FOR STEINER TREE PACKING

PROBLEM

The goal of this section is to formulate our bi-level

framework for STPP and the corresponding MDP,

which allows us to use a hierarchical reinforcement

learning policy that efficiently learns to solve CO

problems.

Let us introduce a continuous surjective latent

mapping φ : X → Y onto a vector space Y such that

|Y | ≪ |X|. Then, the problem (1) admits a hierarchi-

cal solution concept:

y

y

y

∗

= argmin

y

y

y∈Y

{f (L(y

y

y)) : φ

−1

(y

y

y) ∩F ̸=

/

0} (7)

where, L(y

y

y) := argmin

x

x

x∈φ

−1

(y

y

y)

{f (x

x

x) : x

x

x ∈F }. (8)

We refer to problem (7) as a high-level problem, and

(8) as a low-level sub-problem induced by the high-

level action y

y

y in (7). Note that the hierarchical so-

lution concept still attains an optimality guarantee of

the original problem, since φ is a surjection and X is

finite.

The advantages of such hierarchical formulation

are: (i) searching for feasible solutions over Y rather

than X reduces the size of search space; (ii) learn-

ing to obtain an optimal solution can be done by

two different learnable agents, (namely the high-level

agent and the low-level agent for (7) and (8), re-

spectively) where the task for each agent is reduced

to be easier than the original problem, and (iii) the

generalization capability (with respect to the problem

size) increases when using learnable agents, since the

high-level agent can be made to always provide sub-

problems (for the low-level agent) with the same size,

regardless of the size of the original problem.

4.1 Hierarchical Decomposition for

Steiner Tree Packing Problem

For CO problems defined on a weighted graph G =

(V,E) with edge weights w

e

for each e ∈ E, it is

straight-forward and beneficial to choose a latent

mapping φ : E → V from the set of edges to the set

of nodes.

2

Specifically, we consider a version of φ

such that for any input x

x

x ⊂ E, u ∈ φ(x

x

x) if and only

if (u,v) ∈ x

x

x for some v ∈ V (G). Such a mapping φ

satisfies φ

−1

(y

y

y) = G[y

y

y] for any set of vertices y

y

y ⊂V ,

where we slightly overload the notation for the gener-

2

Since |V | ≈ O(

p

|E|), so that |Y | << |X| for large

graphs.

ICORES 2025 - 14th International Conference on Operations Research and Enterprise Systems

168

Initial Instance

Solution

ϕ

−1

(y) ⊂ X

y ∈ Y

⋮

Y

Latent

Mapping

ϕ

Bi-level Decomposition for STPP

Possible solutions in original problem

Possible solutions in

high-level problem

|

X

|

>>

|

Y

|

⋮

⋮

X

MDP Formulation for STPP

High-Level Policy

Low-Level Policy

Final Solution

Steiner Tree Packing Problem

Terminals

Non-terminal nodes

Low-level action

High-level action (shaded region)

Low-level

search space

Partial solution

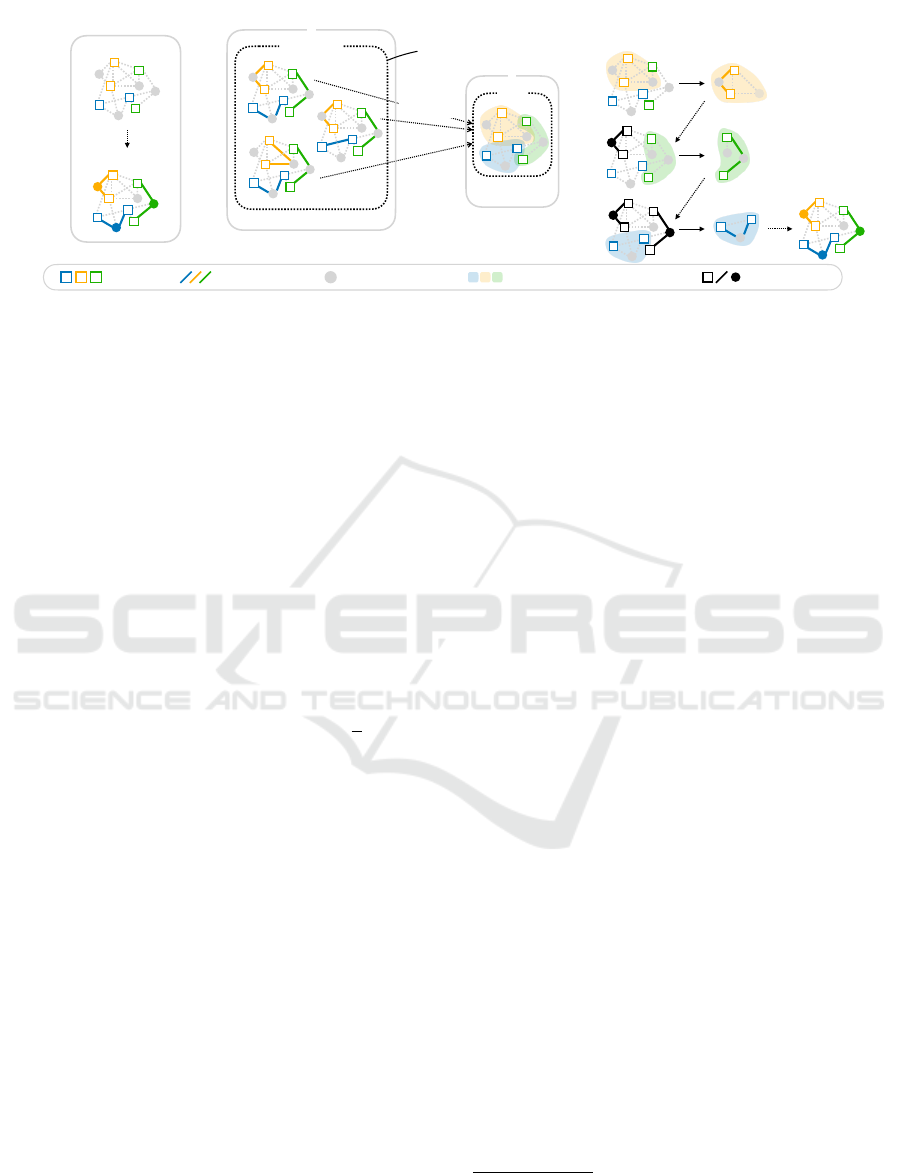

Figure 2: (Left) We tackle the Steiner tree packing problem, which aims to find a minimum-weight tree spanning all the

terminal nodes (square boxes) for each type (color) without overlap. (Middle) We propose to decompose the given problem

into high-level and low-level sub-problems via mapping φ : X 7→ Y , which are solved separately. This facilitates learning

since the search spaces are much smaller for high-level problem |Y | ≪ |X| and low-level problem |φ

−1

(y)| ≪ |X| compared

to the original problem. (Right) In MDP formulation, the high-level agent chooses a set of nodes (shaded region) to define a

sub-graph for each terminal type (color), and low-level agent finds a tree spanning all the terminal nodes within the sub-graph.

ated subgraph G[y

y

y]. For example, the high-level prob-

lem of a STPP (6) can be written as follows.

argmin

y

y

y

1

,···,y

y

y

N

∈2

V

{

∑

n≤N

e∈L(y

y

y

n

)

w

e

: L(y

y

y

n

)∈Σ

T

n

, y

y

y

n

∩y

y

y

m

=

/

0

∀n,m

} (9)

which is now a node-selection problem, (instead of

the original edge selection problem) where L(y

y

y

n

) is a

solution of the low-level subproblem:

L(y

y

y

n

) := arg min

x

x

x∈E(G[y

y

y

n

])

{

∑

e∈x

x

x

w

e

: x

x

x ∈Σ

T

n

} (10)

Notice that the high-level problem (9) has a reduced

size search space (from 2

E

to 2

V

≈ O(2

√

E

)) , and

the low-level subproblem corresponds to a single STP

of a smaller subgraph G[y

y

y

n

]. Therefore, the original

NP-hard problem (6) is decomposed into two smaller

NP-hard problems. The overview of our hierarchical

decomposition method for STPP is illustrated in Fig-

ure 2.

4.2 MDP Formulation and Hierarchical

Policy for Steiner Tree Packing

Problem

The high-level MDP M

hi

for STPP is based on

a sequential decision making y

y

y

1

,··· ,y

y

y

N

in equa-

tion 9. Formally, a state in the MDP is a tuple

s

t

= (G, T ,S

t

,t), where G is a weighted graph of the

problem, T the collection of set of terminals, and

S

t

⊂ V (G) is a partial solution constructed until the

current timestep t via previous actions. An action a

t

is

to select a set of vertices y

y

y

t

⊂V (G)\S which includes

a tree that spans the terminals T

t

∈T as a subgraph of

G[y

y

y

t

]. In turn, the subgraph G[y

y

y

t

] is forwarded to the

low-level agent which solves STP on the given sub-

graph by choosing the edges from E(G[y

y

y

t

]). Then,

the high-level agent receives negative of the sum of

the edge weights of the low-level solution L(y

y

y

t

) as

a reward, and appends the solution L(y

y

y

t

) to the pre-

vious partial solution S

t

.

3

If the low-level solution

L(a

t

) does not exist, or when any future choice of ac-

tions a

t+1

,··· ,a

N

leads to an infeasible solution of the

given STPP, the high-level agent receives a large neg-

ative penalty C < 0.

In the prior decomposition method (i.e., TAM),

V (L(y

y

y

t

)) and V (G[y

y

y

t

]) are the same. In other words,

once nodes are selected as a high-level action, they

are all used in the low-level solution, which prevents

the next high-level policy from selecting any of the

V (L(y

y

y

t

)) from the previous step. However, in the

STPP, as shown in Figure 1, using all nodes that con-

stitute the low-level problem, V (G[y

y

y

t

]), often leads to

a low probability of finding a feasible solution. HCO

reduces the likelihood of this occurring by removing

only V (L(y

y

y

t

)) from V (G) after getting the low-level

solution. We defer detailed settings of our MDP for-

mulation to the Appendix.

Model Architecture. Since STPP is a CO prob-

lem defined over a weighted graph, we use a graph

neural network (GNN) to encode the state repre-

sentation with the policy network π

θ

and the value

function V

π

θ

that serves as a baseline for actor-

critic methods (Konda and Tsitsiklis, 1999). Let

G be the weighted graph with edge weights w

e

as

3

Here, the low-level sub-problem can again be defined

by a MDP M

lo

, which is equivalent to the original deci-

sion making process (3) but on a smaller problem instance

span(φ

−1

(a

t

)).

Hierarchical Decomposition Framework for Steiner Tree Packing Problem

169

an edge feature for each e ∈ E(G). First, a D-

dimensional node feature µ

µ

µ

v

is computed for each

node v ∈ V . Please refer to Appendix for our de-

tailed choice of node and edge features. Then, the

extracted features are encoded with graph attention

network (GAT) (Veli

ˇ

ckovi

´

c et al., 2018) and atten-

tion network (AT) (Vaswani et al., 2017). GAT ag-

gregates the information across the neighbors in the

graph to capture the local connectivity, but it is lim-

ited in modeling the long-range dependency (Vaswani

et al., 2017). We overcome the limitation by using

the attention network. The attention network captures

the long-range dependency by encoding relation be-

tween all (i.e., ignores the graph structure) pairs of

nodes. Global structures are further encoded via a

graph embedding layer, which embeds particular sub-

sets of node features into groups based on their char-

acteristics.

We use behavioral cloning and reinforcement

learning to train high-level policy and use a mathe-

matical solver (i.e., MILP solver) for solving the low-

level problem (10) in our implementation

4

.

5 EXPERIMENTS

5.1 Setting

Dataset. To evaluate the learning efficiency and

generalization capacity of each algorithm, we cre-

ated feasibility-hard STPP instances at various scales.

Our instances included graphs of 40, 60, 80, and 100

nodes. For each graph category, we generated 50,000

instances for training, 1,000 for testing, and 100 for

validation. Graphs were created using the Watts-

Strogatz (WS) model (Watts and Strogatz, 1998)

with mean node degree k ∼ Uniform(3,4,5,6) and

rewiring probability β ∼Uniform(0,1). Edge weights

were uniformly random within [0,1]. A subset of ver-

tices was selected as terminals (see Section 3.2) to

construct the STPP instances. To avoid unsolvable

or trivially solvable instances, we designed a termi-

nal selection algorithm ensuring instances were both

solvable and non-trivial. We partitioned the random

graph into T = N

type

subgraphs using Lukes algo-

rithm (Lukes, 1974), then chose N

terminal

terminals per

subgraph. Each subgraph ensured a spanning tree, but

we filtered out trivial or unsolvable instances.

4

Since our decomposition keeps the size of low-level

subproblem small, we can run the MILP solver in a short

time.

Figure 3: Training performance of the HCO for problem

size n = 40. We pre-trained the agents via behavioral

cloning until 5 millions episodes (i.e., vertical dotted line

in the figure), and then finetuned via reinforcement learning

afterwards. We report the performance averaged over 4 ran-

dom seeds.

Baselines. We compare our model with following

baselines. MILP-t utilizes the mixed integer linear

programming (MILP) solver to identify the best so-

lution within a specified time limit, denoted by t.

Our implementation employs OR-Tools (Perron and

Furnon, 2022) to achieve this. For a fair compari-

son, we set t to 1 second for MILP-t roughly matching

the execution time of the compared methods. MILP-

∞ on the other hand, is the MILP solver operating

without any time constraints, enabling it to find the

optimal solution. PathFinder (McMurchie and Ebel-

ing, 1995) is a heuristic algorithm designed to solve

the STPP, as detailed in Section 2. We used a pub-

licly available implementation of Lee et al. (2022),

which includes two variations of low-level solvers:

the shortest-path finding variant (PathFinder-SP) and

the two-approximation variant (PathFinder-TA). Flat

represents a non-hierarchical RL agent that constructs

a solution by sequentially selecting nodes one by one,

following the methodology outlined in studies Khalil

et al.; Kool et al.. The Two-stage Divide Method

(TAM) (Hou et al., 2022) addresses the STPP by de-

composing it entirely in a single step, after which each

sub-problem is addressed individually. This method

differs from the HCO approach, which iteratively se-

lects and solves one sub-problem at a time.

Training. We discovered that starting training with

a random policy made it difficult to obtain feasible so-

lutions, resulting in minimal learning signals. There-

fore, for HCO training, we perform pre-training us-

ing behavioral cloning, followed by fine-tuning with

IMPALA (Espeholt et al., 2018). The behavioral

cloning data was generated using a solver OR-Tools,

and training was conducted using cross-entropy loss.

The training was conducted for 500K episodes to en-

sure convergence to some extent in all methods. We

used the reinforcement learning framework RLlib for

training with IMPALA. Hyperparameters were deter-

mined based on performance on the validation set,

and the detailed process is described in Appendix.

ICORES 2025 - 14th International Conference on Operations Research and Enterprise Systems

170

Table 1: Result table for Steiner tree packing problem. Gap and FSR denote the average optimality gap and feasible solution

ratio, respectively, while ET represents the average time taken to process a test instance. We report the performance averaged

over 4 random seeds.

n = 40 n = 60

Method Gap↓ FSR↑ ET (ms)↓ Gap↓ FSR↑ ET (ms)↓

Learning-based

HCO (Ours) 0.039 0.969 38 0.031 0.953 99

TAM 0.105 0.885 17 0.128 0.914 46

Flat 0.045 0.957 106 0.087 0.935 252

Heuristic

MILP-1s 0.000 1.000 127 0.000 0.982 501

PathFinder-SP 0.112 0.974 5 0.165 0.990 8

PathFinder-TA 0.116 0.966 19 0.147 0.974 48

Exhaustive MILP-∞ 0.000 1.000 125 0.000 1.000 532

n = 80 n = 100

Method Gap↓ FSR↑ ET (ms)↓ Gap↓ FSR↑ ET (ms)↓

Learning-based

HCO (Ours) 0.054 0.932 124 0.056 0.892 246

TAM 0.246 0.620 47 0.291 0.520 47

Flat 0.087 0.902 529 0.062 0.905 679

Heuristic

MILP-1s 0.001 0.832 975 0.000 0.035 1007

PathFinder-SP 0.150 0.976 20 0.155 0.970 35

PathFinder-TA 0.149 0.965 115 0.150 0.954 170

Exhaustive MILP-∞ 0.000 1.000 1648 0.000 1.000 4685

Table 2: Generalization performance in terms of Common

Instance Gap (CIG). The CIG measures the Gap averaged

over the instances where all methods (HCO, TAM, Flat)

found feasible solutions. Models are trained with n = 40

and are tested with n = {40, 60,80,100}. We report perfor-

mance averaged over 4 random seeds.

n = 40 n = 60

HCO (Ours) 0.033 (±0.001) 0.033 (±0.002)

TAM 0.090 (±0.007) 0.332 (±0.026)

Flat 0.065 (±0.012) 0.086 (±0.014)

n = 80 n = 100

HCO (Ours) 0.041 (±0.003) 0.059 (±0.006)

TAM 1.017 (±0.113) 2.910 (±0.126)

Flat 0.120 (±0.014) 0.260 (±0.056)

Table 3: The average likelihood of decomposition policy

selecting trap nodes, optimal nodes, and redundant nodes

for test instances with n = 40 and |T | = 2.

Trap↓ Optimal↑ Redundant↓

HCO (Ours) 0.422 0.887 0.608

TAM 0.494 0.898 0.747

Evaluation. We use three metrics to evaluate the

algorithm’s capability to minimize the cost and sat-

isfy the constraint. Feasible solution ratio (FSR)

is the ratio of instances where a feasible (i.e., con-

straint is satisfied) solution was found by the method.

Since the solution cost can be computed only for

a feasible solution, we also introduce the metric

optimality gap (Gap), measuring the average of

the cost suboptimality in feasible solutions found:

Gap=

algorithm cost

optimal cost

−1

. Finally, elapsed time (ET)

measures the average wall clock time taken to solve

each instance in the test set. We report the perfor-

mance averaged over four random seeds.

5.2 Result

Generalization to Unseen Instances with the Same

Graph Size. Table 1 summarizes the performance

of each method when the training and test sets con-

sist of graphs of the same size but are mutually ex-

clusive. The top three rows compare the learning-

based algorithms: HCO, TAM, Flat. Overall, HCO

is the most performant algorithm in terms of Gap

and FSR. We observe that HCO consistently outper-

forms Flat with much less computation required (i.e.,

smaller ET). Our hierarchical framework improves

the sample efficiency of reinforcement learning by re-

ducing the search space in high-level and low-level

problems, leading to HCO’s superior performance in

Hierarchical Decomposition Framework for Steiner Tree Packing Problem

171

Gap and FSR. Also, Flat sequentially solves prob-

lem by choosing the node one by one without de-

composition, which requires more number of feed-

forwards (i.e., higher ET) per problem compared to

HCO. As shown in Figure 1, TAM has a high proba-

bility of failing to obtain a feasible solution, result-

ing in a relatively low FSR. Additionally, we ob-

served that learning signals from infeasible solutions

significantly destabilize the training process. Rest

of the table summarizes the performance of heuristic

and exhaustive search-based methods. MILP-1s and

MILP-∞ performs search over solution space, where

MILP-1s constrains the search based on computation

time and MILP-∞ performs search exhaustively. We

note that MILP-based approaches excels in minimiz-

ing the cost once feasible solution is found, but suffers

from finding any feasible solution especially when the

problem size grows. This results in drastic degra-

dation in FSR for MILP-1s and ET for MILP-∞ for

larger-sized problems (n = 80 and 100). When we

compare PathFinder-based approaches to HCO, HCO

is significantly advantageous in terms of Gap. We at-

tribute the high FSR of PathFinder to their iterative

algorithm, negotiated-congestion avoidance, which is

tailored for finding feasible solutions in STPP.

Generalization to Unseen and Larger Instances.

To facilitate a clearer comparison, we introduce Com-

mon Instance Gap (CIG) metric, which evaluates the

Gap exclusively on instances where every compared

method identified a feasible solution. This approach

ensures a fair comparison ground. We present the CIG

performance, averaged across four distinct random

seeds in the Table 2 (values in parentheses represent

standard error). The results demonstrate HCO’s su-

perior performance over baseline methods across all

tested instance sizes (n = 40,60,80, 100), with a no-

tably wider performance margin against Flat in larger

or more novel instances (n = 80 and 100). This un-

derscores the bi-level formulation’s effectiveness in

enhancing generalization capabilities, even in more

challenging scenarios.

Analysis of Decomposition-Based Models. We

further evaluate the decomposition performance of

the decomposition-based models, HCO and TAM.

When the model decomposes the given problem into

sub-problems for each net, it must consider three

types of nodes. First, the trap node is a critical part of

another net’s solution and must not be used for solv-

ing the current net. Second, the optimal node is in-

cluded in the optimal solution. Third, the redundant

node refers to nodes that do not fall into either of the

previous categories. Generally, including redundant

nodes in the solution may increase the cost (i.e., sub-

optimal). Effective decomposition should avoid trap

and redundant nodes while incorporating as many op-

timal nodes as possible. To assess this, we measure

the average likelihood of each decomposition algo-

rithm (i.e., high-level policy) selecting trap, optimal,

and redundant nodes. Our analysis was conducted on

all instances with two nets (i.e., |T | = 2) from the

n = 40 test instances. The results are presented in Ta-

ble 3. Overall, TAM shows a higher likelihood of se-

lecting any type of node compared to HCO. Notably,

the likelihood of selecting optimal nodes is similar for

both HCO and TAM. This indicates that while both

methods are capable of effectively identifying nodes

that contribute to the optimal solution, TAM is less

successful at avoiding negative nodes (i.e., trap and

redundant nodes), which may lead to infeasible solu-

tions or suboptimality. This is further supported by

the results in Tables 1 and 2, where TAM shows a

larger Gap and lower FSR due to its higher tendency

to select redundant and trap nodes. This difference

in performance can be attributed to the methods’ ap-

proaches. Unlike TAM, which decomposes all prob-

lems at once, HCO re-evaluates and adjusts its decom-

position after reviewing the results of the low-level

problem, leading to more cautious decision-making.

Learning Curves. The learning curve for n = 40 is

shown in Figure 3. The supervised pre-training im-

proves both FSR and Gap of all the methods but the

performance improvement plateaus around 4M steps.

During RL finetuning, the FSR rapidly improves for

all the methods while Gap worsens. The observed

rise in FSR, indicating more feasible solutions, corre-

lates with a growing gap. This suggests that instances

solved later in training often need more training to

reach optimality. Consequently, as FSR rises sharply,

the model encounters many new instances for which

it initially only finds suboptimal (i.e., higher Gap) so-

lutions, leading to an increase in Gap.

6 CONCLUSIONS

In this work, we proposed a novel hierarchical ap-

proach to address challenges of finding feasible so-

lution for STPP. It uses latent mapping to decompose

the solution search space, resulting in more sample-

efficient learning due to separation of concerns and

a smaller search space, and improved generalization

from a homogeneous problem size for the low-level

policy. We showed that the proposed decomposition

framework is generally applicable to broader scope of

combinatorial optimization problems. The effective-

ICORES 2025 - 14th International Conference on Operations Research and Enterprise Systems

172

ness of our method was evaluated on various sizes of

STPP instances, demonstrating improved sample ef-

ficiency and generalization capability, outperforming

heuristic, mathematical optimization, and learning-

based algorithms designed for STPP.

ACKNOWLEDGEMENTS

This work was supported by the National Research

Foundation of Korea(NRF) grant funded by the Korea

government(MSIT) (RS-2024-00410082).

REFERENCES

Cappart, Q., Moisan, T., Rousseau, L.-M., Pr

´

emont-

Schwarz, I., and Cire, A. A. (2021). Combining

reinforcement learning and constraint programming

for combinatorial optimization. In Proceedings of

the AAAI Conference on Artificial Intelligence, vol-

ume 35, pages 3677–3687.

Espeholt, L., Soyer, H., Munos, R., Simonyan, K., Mnih,

V., Ward, T., Doron, Y., Firoiu, V., Harley, T., Dun-

ning, I., Legg, S., and Kavukcuoglu, K. (2018). IM-

PALA: Scalable distributed deep-RL with importance

weighted actor-learner architectures. In Dy, J. and

Krause, A., editors, Proceedings of the 35th Interna-

tional Conference on Machine Learning, volume 80

of Proceedings of Machine Learning Research, pages

1407–1416. PMLR.

Fu, Z.-H., Qiu, K.-B., and Zha, H. (2021). Generalize

a small pre-trained model to arbitrarily large tsp in-

stances. In Proceedings of the AAAI Conference on

Artificial Intelligence, volume 35, pages 7474–7482.

Gasse, M., Ch

´

etelat, D., Ferroni, N., Charlin, L., and Lodi,

A. (2019). Exact combinatorial optimization with

graph convolutional neural networks. Advances in

Neural Information Processing Systems, 32.

Hou, Q., Yang, J., Su, Y., Wang, X., and Deng, Y. (2022).

Generalize learned heuristics to solve large-scale vehi-

cle routing problems in real-time. In The Eleventh In-

ternational Conference on Learning Representations.

Karp, R. M. (1972). Reducibility among combinatorial

problems. In Complexity of computer computations,

pages 85–103. Springer.

Khalil, E., Dai, H., Zhang, Y., Dilkina, B., and Song, L.

(2017). Learning combinatorial optimization algo-

rithms over graphs. In Guyon, I., Luxburg, U. V.,

Bengio, S., Wallach, H., Fergus, R., Vishwanathan,

S., and Garnett, R., editors, Advances in Neural Infor-

mation Processing Systems, volume 30. Curran Asso-

ciates, Inc.

Konda, V. and Tsitsiklis, J. (1999). Actor-critic algorithms.

Advances in neural information processing systems,

12.

Kool, W., van Hoof, H., and Welling, M. (2019). Atten-

tion, learn to solve routing problems! In International

Conference on Learning Representations.

Lee, K., Park, Y., Jeong, H.-S., Yoon, D., Hong, S., Sohn,

S., Kim, M., Ko, H., Lee, M., Lee, H., Kim, K., Kim,

E., Cho, S., Min, J., and Lim, W. (2022). ReSPack: A

large-scale rectilinear steiner tree packing data gener-

ator and benchmark. In NeurIPS 2022 Workshop on

Synthetic Data for Empowering ML Research.

Li, S., Yan, Z., and Wu, C. (2021). Learning to delegate for

large-scale vehicle routing.

Lukes, J. A. (1974). Efficient algorithm for the partitioning

of trees. IBM Journal of Research and Development,

18(3):217–224.

Ma, Y., Hao, X., Hao, J., Lu, J., Liu, X., Xialiang, T., Yuan,

M., Li, Z., Tang, J., and Meng, Z. (2021). A hi-

erarchical reinforcement learning based optimization

framework for large-scale dynamic pickup and deliv-

ery problems. Advances in Neural Information Pro-

cessing Systems, 34:23609–23620.

McMurchie, L. and Ebeling, C. (1995). Pathfinder: A

negotiation-based performance-driven router for fp-

gas. In Proceedings of the 1995 ACM third inter-

national symposium on Field-programmable gate ar-

rays, pages 111–117.

Nowak-Vila, A., Folqu

´

e, D., and Bruna, J. (2018). Divide

and conquer networks.

Perron, L. and Furnon, V. (2022). Or-tools.

Song, J., Lanka, R., Yue, Y., and Dilkina, B. (2020). A gen-

eral large neighborhood search framework for solving

integer linear programs.

Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones,

L., Gomez, A. N., Kaiser, L. u., and Polosukhin,

I. (2017). Attention is all you need. In Guyon,

I., Luxburg, U. V., Bengio, S., Wallach, H., Fer-

gus, R., Vishwanathan, S., and Garnett, R., editors,

Advances in Neural Information Processing Systems,

volume 30. Curran Associates, Inc.

Veli

ˇ

ckovi

´

c, P., Cucurull, G., Casanova, A., Romero, A., Li

`

o,

P., and Bengio, Y. (2018). Graph attention networks.

In International Conference on Learning Representa-

tions.

Wang, R., Hua, Z., Liu, G., Zhang, J., Yan, J., Qi, F., Yang,

S., Zhou, J., and Yang, X. (2021). A bi-level frame-

work for learning to solve combinatorial optimization

on graphs. Advances in Neural Information Process-

ing Systems, 34:21453–21466.

Watts, D. J. and Strogatz, S. H. (1998). Collective dynamics

of ”small-world” networks.

Ye, H., Wang, J., Liang, H., Cao, Z., Li, Y., and Li, F.

(2024). Glop: Learning global partition and local

construction for solving large-scale routing problems

in real-time. In Proceedings of the AAAI Conference

on Artificial Intelligence, volume 38, pages 20284–

20292.

Hierarchical Decomposition Framework for Steiner Tree Packing Problem

173

APPENDIX

Combinatorial Optimization as Markov

Decision Process

In this section, we provide a full illustration of a se-

quential optimization process for a general CO prob-

lem. Our goal is to design a corresponding MDP for

equation 3. Intuitively, the sequential decision mak-

ing process in equation 3 can be thought of choosing

for each timestep t an action x

x

x

t

∈ X

t

, until we have

a full solution x

x

x =

∑

t

x

x

x

t

. However, note that we are

constructing X

1

,··· ,X

H

sequentially, i.e., we do not

have the subspace decomposition X = X

1

⊕···⊕X

H

beforehand. Hence, assume we have constructed

X

1

,··· ,X

t

until the current timestep t. Let us define

W

t

the remaining subspace that are yet to be decom-

posed, i.e., X = X

1

⊕···⊕X

t

⊕W

t

. Then, the sequen-

tial decision making is equivalent to choosing for each

timestep t a subspace X

t

≤W

t

and consequently an ac-

tion x

x

x

t

∈X

t

, until we have a trivial subspace W

t

= {0

0

0}.

Sequential decision making process for the bi-

level decomposition in equation 7 and 8 can be formu-

lated similarly. The key is to construct the subspace

decomposition Y = Y

1

⊕···⊕Y

N

sequentially, while

obtaining the partial solution for the original problem

from the low-level sub-problem (8) simultaneously.

MDP Formulation

Below we provide a full description of our MDP for-

mulation for STPP in section 4.2.

State. The state s

t

of the high-level MDP M

hi

con-

sists of a graph G, collection of sets of terminals T , a

partial solution S

t

⊂V (G) constructed until timestep

t, (i.e., the nodes of the disjoint trees that span ter-

minals T

1

,··· ,T

t−1

) and the current timestep t. The

graph G provides general information of the prob-

lem instance, i.e., the connectivity of the graph via

edges. In practice, the graph G can be represented by

the adjacency matrix A ∈ R

|V |×|V|

, where a

i j

takes the

edge weight w

i j

. The information can be further en-

coded via message passing layers of GAT and AT in

GNN. T , S

t

and t provide node features for the cur-

rent timestep, and are essential for generating a graph

embedding. From the state information s

t

, we extract

the node features of the graph for further encoding via

the GNN model. For a node v ∈V , we denote the node

features of the vertex v as x

v

:= (x

o

,x

τ

,x

d

) ∈ Z

3

. The

first node feature x

o

∈ {0, 1} denotes whether a node

v is included in the current partial solution or not. If

v is selected as part of the partial solution, we define

x

o

= 1, otherwise, it is 0. The second node feature

x

τ

∈ {0,1, ··· ,N

type

} indicates the terminal type (i.e.,

x

τ

= k if and only if v ∈T

k

), where the indices are la-

beled in the order that the high-level MDP solves for.

Non-terminal nodes are assigned a value of 0. The

last feature, x

d

, denotes the degree of a node v ∈ V .

The edges of the graph are also assigned with edge

features. We only use the edge weights as the edge

features in this paper. Our choice of node and edge

features is summarized in Table 4, 5.

Action. The action a

t

of a high-level MDP M

hi

at

timestep t is to select a set of vertices y

y

y

t

⊂V (G) \S

t

,

where S

t

is the partial solution constructed until the

previous timesteps. Intuitively, the action a

t

is to se-

lect a node set that includes all terminals of the current

type at timestep t. The selected set of nodes should

create a subgraph G[a

t

] of G generated by the nodes

a

t

, and should correspond to a STP instance (with sin-

gle type of terminals). The low-level agent then com-

putes a minimum weight tree that spans all terminals

of current type from the subgraph G[a

t

]. In practice,

we further assist the agent by designing the MDP en-

vironment for M

hi

in a way such that the terminal

nodes of the current type are automatically selected

by the environment internally. Hence, the action a

t

will result in a subgraph G[a

t

∪V (T

t

)] instead of G[a

t

].

Reward. Designing a reward with an optimality

guarantee is a non-trivial task. In particular, we wish

to construct a reward where all feasible solutions re-

sult in higher reward than those of any infeasible so-

lutions. Also, the feasible solutions with better so-

lution quality (i.e.the objective being closer to op-

timal solution) should be assigned a higher reward.

Hence, given a final solution x

x

x of a CO problem (1),

a reward with an optimality guarantee can be com-

pactly formulated as r(x

x

x) = c ·1

1

1

F

(x

x

x) − f (x

x

x), where

c ≥ sup

x

x

x∈X

f (x

x

x), f is the objective function of prob-

lem (1), and 1

1

1

F

(·) is an indicator function. Note that

this form of the reward is equivalent to what is de-

scribed in Section 3.1. Achieving the maximal return

will result in the same optimal policy

5

. Finally, recall

that our objective f is linear in X . For each partial

solution x

x

x

t

constructed at timestep t, we are able to

decompose our reward function as follows.

r(x

x

x

t

) = c ·1

1

1

F

t

∑

k=1

x

x

x

k

!

− f (x

x

x

t

) (11)

Transition. Our transition in MDP is deterministic;

the change in the partial solution alters a node feature

5

Providing incentives for x

x

x ∈ F instead of a penalty

when x

x

x /∈ F scales all rewards to be non-negative.

ICORES 2025 - 14th International Conference on Operations Research and Enterprise Systems

174

Table 4: Node features.

Notation Value Description

x

o

∈ {0,1} Is partial solution

x

τ

∈ {0,1, 2,...,N

type

} Terminal type

x

d

∈ Z Node degree

Table 5: Edge feature.

Notation Value Description

w

e

∈ R Edge weight(=cost)

x

o

from 0 into 1, which results in different node and

graph embeddings from GNN.

Termination. Our STPP environment is terminated

when it is not able to generate a feasible STPP solu-

tion or when a feasible solution is found. The cases

where generating a feasible STPP solution includes

(1) no possible actions remaining, (2) any choice of

actions in future timestep inevitably results in an in-

feasible solution, or (3) the choice of action a

t

in a

high-level MDP M

hi

results in an unsolvable STP in-

stance G[a

t

].

GNN Architecture

Encoder. Given a graph G, we first extract a D-

dimensional node embedding µ

µ

µ

v

for each node v ∈

V , where D denotes the number of features, as pro-

vided in Section 6. Note that we use D = 3, with

x

v

= (x

o

,x

τ

,x

d

) representing the features as described

in Table 4. Let ρ : R

D

→ R

D·p

be a fixed vector-

ization mapping of a given node feature x

v

, and let

θ

0

: R

D·p

→ R

p

be a linear mapping. We obtain the

initial node embedding µ

µ

µ

v

as follows.

µ

µ

µ

v

= ReLU(θ

0

(ρ(x

v

))). (12)

Next, we encode the node embeddings M :=

(µ

µ

µ

1

,··· ,µ

µ

µ

|V |

) ∈ R

|V |×p

via graph attention network

(GAT) and attention network (AT). Formally, let Θ

i

:

R

n

h

×p

→R

2p

and θ

i

: R

2p

→R

p

for i = 1, ···, l be lin-

ear mappings, where n

h

denotes the number of heads

of GAT. For convenience, we slightly overload the no-

tation, writing Θ

i

(M) := (Θ

i

(µ

µ

µ

1

),··· ,Θ

i

(µ

µ

µ

|V |

)), and

use a similar notation for θ

i

. We recursively encode

the node embedding as follows

M

(i−1)′

= AT(Θ

i

(GAT(M

(i−1)

;G)); G) (13)

M

(i)

= θ

i

(ReLU(M

(i−1)

||M

(i−1)′

)) (14)

for each i = 1,··· , l. Here, we define M

(0)

≡ M,

and write (·||·) for CONCATENATE(·,·). We denote

our graph encoder function Enc succinctly as follows,

omitting some details for brevity.

Enc(·;G) :=

l times

z }| {

(AT ◦GAT) ◦···◦(AT ◦GAT)(·;G)

(15)

Graph Embedding, Logit, and Probability.

Given the node embedding of the last layer M

(l)

, we

obtain the embedding for the entire graph µ

µ

µ

G

. Instead

of simply averaging over all the nodes, we enrich the

graph embedding by grouping the nodes into three

subsets based on their characteristics: current termi-

nal T

t

, partial solution S

t

, and non-terminal nodes

V := V \

S

T ∈T

T . Then, the embeddings are averaged

within each subset, concatenated, and projected to

obtain the graph embedding µ

µ

µ

G

. Formally, the graph

embedding layer Emb is written as follows.

Emb(·;t) := Ψ(·,T

t

)||Ψ(·,S

t

)||Ψ(·,V ) (16)

where Ψ(M

(l)

,A) performs the average pooling

over the set of node embeddings that belong to

A. The graph embedding µ

µ

µ

G

is obtained as µ

µ

µ

G

=

Emb(M

(l)

;t). Finally, the logit value, which is used

to compute the probability of choosing the node for

each node v ∈V is computed as follows.

logit

v

= w

4

(w

3

(ReLU(w

1

(µ

µ

µ

G

)||w

2

(µ

µ

µ

(l)

v

))) + µ

µ

µ

(0)

v

) ∀v ∈V

(17)

p

v

= softmax(logit

v

) ∀v ∈V (18)

where w

1

: R

3p

→R

p

, w

2

: R

p

→R

p

, w

3

: R

2p

→R

p

,

and w

4

: R

p

→ R

2

are linear functions.

Value Function. The value function V

π

θ

uses a

model that has a similar GNN architecture with a

simple multi layer perceptron MLP that sequentially

projects (R

3p

→R

3p

→R

p

→R

1

), and does not share

weights with the policy network.

V

π

θ

(s

t

) = MLP(Emb(Enc(M;G);t)). (19)

Hyperparameters

We set the hyperparameters based on the performance

of Flat and considered the following hyperparameter

ranges for the 40-node experiment:

• p = {32, 64, 128, 256}

• l = {3, 4, 5}

• number of heads = {4, 8}

• IL learning rate = {1e-3, 1e-4, 1e-5, 1e-6}

• weight decay = {1e-6, 5e-7}

Hierarchical Decomposition Framework for Steiner Tree Packing Problem

175

• RL learning rate = {1e-3, 1e-4, 1e-5, 1e-6}

• entropy coefficient = {1, 1e-1, 1e-2}

• value loss coefficient = {1e-1, 1, 3, 5}

Thirty hyperparameter combinations were randomly

selected from the given ranges, and the combination

that performed best on the validation set was used.

This parameter combination was consistently used for

all methods. The GNN model uses a hidden dimen-

sion of p = 128 and l = 5 for both GAT and AT en-

coder layers. Both GAT and AT use 8 heads, with

a dropout rate of 0.5 in IL, but dropout is not used

in RL training. A batch size of 64 is used, and the

learning rate is initialized to 10

−4

, decreasing by 0.99

per epoch. A fine-tuned value of 5 ×10

−7

is used for

weight decay. To prevent divergence in learning, the

gradient norm is clipped to 1. HCO trains for 100

epochs, using 1 epoch to update the model with BC

data at every step and in every episode. In the RL

phase, a batch size of 30, a learning rate of 10

−6

, a

discount factor of 0.99, an entropy coefficient of 0.01,

and a value function loss coefficient of 5 are used. The

number of workers used in IMPALA is set to 30.

Training and Evaluation

For imitation learning, we first collect the demon-

stration data using the optimal solver, MILP, as ex-

pert policy. The expert policy π

expert

is defined as

π

expert

(a

t

|·) = 1 if a

t

∈ A

∗

t

and π

expert

(a

t

|·) = 0 other-

wise. The nodes that are not selected by the expert are

excluded from the loss calculation. HCO uses cross-

entropy loss for BC training, and one epoch is defined

as updating HCO for every step of every instance.

During the evaluation phase, the softmax function is

applied to the logit values of each node to obtain prob-

abilities, and nodes with probability values exceed-

ing 0.5 are selected as high-level actions. The train-

ing and evaluation are carried out on a single GPU,

comprising an AMD EPYC 7R32 CPU and NVIDIA

A10G GPU.

ICORES 2025 - 14th International Conference on Operations Research and Enterprise Systems

176