PBL Meets AI: Innovating Assessment in Higher Education

Blaženka Divjak, Barbi Svetec and Katarina Pažur Aničić

University of Zagreb, Faculty of Organization and Informatics, Varaždin, Croatia

Keywords: Artificial Intelligence, Chatbot, Problem-Based Learning, Project-Based Learning, Learning Assessment.

Abstract: Problem-based and project-based learning (PBL) is a creative, inquiry-based process, enriched by teamwork, that is implemented over a longer period and involves a considerable student workload. As the process is normally conducted with a high level of student autonomy, there is often no way of monitoring which resources students rely on. In this context, the rapidly growing availability of generative AI can support not only innovation but also unfair practices. In this article, we present case study research on the use of AI in PBL summative assessment in higher education. We analyzed and compared six courses at different levels of study (undergraduate, graduate, postgraduate) and in different subject areas (mathematics, IT, project management, education). In some courses, the use of AI was obligatory, while in others it was either optional or not foreseen. Using mixed-methods research, we analyzed assessment results, student surveys and teacher testimonials. Based on the research cases, we identified three possible models of integrating AI in PBL and provided recommendations.

1 INTRODUCTION

The emergence of generative AI (GenAI), and particularly the release of OpenAI’s ChatGPT chatbot in November 2022, has undoubtedly had a transformative impact on numerous aspects of society. It has transformed the way we interact with technology, work, look for information and learn. As such, it has the potential to profoundly transform the way we teach as well.

In educational research, as well as in practice, both the opportunities and the challenges of such a powerful tool have already been recognized. On the one hand, GenAI has been found useful for enabling easier access to knowledge, facilitating personalized learning and assisting with writing, research and analysis. On the other hand, it has also been linked with concerns related primarily to ethics, including the accuracy, quality and impartiality of educational content, as well as, notably, plagiarism (Chan & Hu, 2023; Ray, 2023). On the latter, Noam Chomsky expressed the view that the use of GenAI chatbots is “basically high-tech plagiarism” and “a way of avoiding learning” (Marshall, 2023).

Understandably, then, the rise of GenAI is making educators question their assessment practices. Can we prevent the misuse of GenAI? How can we maintain the fairness of the assessment process? Yet whatever the concerns, it seems that the use of GenAI chatbots in education, however controversial, cannot be banned. This may be especially true of learning approaches in which students have more independence and autonomy in their work, such as problem-based and project-based learning (Spikol et al., 2018). So, instead of trying to prevent students from (mis)using new technology, we should embrace it (Rudolph et al., 2023). Therefore, the right questions to ask might be: How can we use GenAI to support, rather than undermine, learning and assessment? Instead of seeing it as a disadvantage, how can we use it to our advantage?

In light of these questions, we conducted case study research on six university courses in which students used GenAI, either following the teacher’s instructions or autonomously, in the process of problem-based and project-based learning (PBL) as part of summative assessment.

While a number of studies have been conducted at the intersection of PBL and AI, there seems to be more focus on teaching and learning about AI than on teaching and learning with AI. The aim of this study is to contribute to the developing body of knowledge on teaching and learning with AI from the specific angle of problem-based and project-based learning, approaches that offer both more room for creativity and less room for teachers’ control.


Divjak, B., Svetec, B. and Aničić, K. P.

PBL Meets AI: Innovating Assessment in Higher Education.

DOI: 10.5220/0013331700003932

In Proceedings of the 17th International Conference on Computer Supported Education (CSEDU 2025) - Volume 2, pages 120-130

ISBN: 978-989-758-746-7; ISSN: 2184-5026

Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)

2 THEORETICAL BACKGROUND

2.1 Problem-Based and Project-Based Learning

Problem-based learning (PrBL) and project-based learning (PjBL) are student-centered, creative, inquiry-based processes rooted in constructivist learning theory (Dole et al., 2015). Both involve learning activities directed towards a shared goal, usually carried out by students in collaboration and with a high level of independence and autonomy (Brassler & Dettmers, 2017; Savery, 2006). As such, they are implemented over a longer period and require a substantial student workload. Despite many similarities, the two approaches also have distinguishing features (Dole et al., 2015).

Problem-based learning (PrBL) starts with a problem that students are to learn about or solve (Dole et al., 2015). In solving this problem, students have the opportunity to investigate, apply their knowledge and skills, combine theory with practice (Savery, 2006) and develop critical thinking (Kek & Huijser, 2011; Thorndahl & Stentoft, 2020). Problems are ill-structured and often interdisciplinary, reflect real-life complexity and represent the “driving force” of the learning process (Dole et al., 2015; Savery, 2006). These realistic and complex problems are usually solved in collaborative groups (Allen et al., 2011). An important element is guidance: the learning process is guided by a tutor acting as a facilitator of learning (Allen et al., 2011; Savery, 2006). The tutor’s role encompasses choosing a problem, providing assistance and motivation to students, articulating the problem-solving process (Doumanis et al., 2021) and, at the end, carrying out a detailed debriefing (Savery, 2006). While students do present the conclusions they have reached, the process does not necessarily result in a concrete product (Dole et al., 2015). PrBL supports students in both acquiring and applying knowledge and skills (Dochy et al., 2003), fostering deep understanding and, in particular, the development of process-related skills such as research, teamwork, negotiation, writing and verbal communication (Allen et al., 2011).

However, PrBL is not without challenges for teachers, and it calls for institutional support and training (Savery, 2006). The challenges often relate to large classes and students resisting group work, but also to assessing metacognition and procedural knowledge. Finally, there is the essential shift from teachers providing knowledge to tutors facilitating learning (Savery, 2006).

Project-based learning (PjBL) similarly uses real-world problems and fosters collaboration, critical thinking and interdisciplinary knowledge. Unlike PrBL, however, it starts with the vision of an artifact, which represents the “driving force”, and is based on problems that reflect the real world. The production process leads to the acquisition of the knowledge and skills needed to successfully finalize the artifact (Dole et al., 2015). While PrBL focuses on knowledge application, PjBL emphasizes knowledge construction.

In contrast to PrBL, in PjBL students are provided with clear instructions and guidelines for the final artifact, and they receive continuous feedback and guidance from their teachers, who act as instructors or coaches. Teachers are more flexible in giving direct instruction and support to students, but they still need to strike a balance between enabling students to achieve the intended outcomes and fostering self-directed learning (Savery, 2006). Students’ problem-solving in PjBL takes more time. While aware of the differences between the two approaches, for the purpose of this paper we use a single abbreviation, PBL, as the aspects important for this study are relevant to both approaches.

2.2 AI and Chatbots in Education

Previous research has shown that chatbots can be useful in supporting students in learning basic content in an interactive, responsive and confidential way (Chen et al., 2023). Some of the identified benefits of using chatbots include integration of content, quick access, motivation, engagement, access for multiple users, immediate assistance and support, and the encouragement of personalized learning (Clark, 2023; Okonkwo & Ade-Ibijola, 2021). Nevertheless, there are also challenges regarding ethics, assessment, user attitudes, supervision and maintenance, as well as the constraints of natural language processing and the limited capacity for thorough, customized conversations (Clark, 2023).

Regardless of these limitations, chatbots have been used in education in various ways (Clark, 2023), for example, as interactive knowledge bases (Chang et al., 2022), virtual students (Lee & Yeo, 2022), learning partners (Fryer et al., 2019) or aids in exam preparation (Korsakova et al., 2022).

Since its release, OpenAI’s ChatGPT has attracted much of the attention in this area, as it generates “more natural-sounding and context-specific responses” (Dai et al., 2023). It has been noted that GenAI chatbots like ChatGPT can be used, on the one hand, as learning partners or tutors that support self-regulated learning (Dwivedi et al., 2023), but, on the other hand, also as a means of passing exams with little or no learning. Therefore, educators have been identified as responsible for supporting the development of students’ critical thinking, while remaining receptive to experimentation and navigating transformation (Dwivedi et al., 2023). Furthermore, potential applications of ChatGPT have been identified in creating personalized learning materials, lesson plans, engaging educational content and adaptive learning environments, providing immediate and constructive feedback to students, and helping teachers with grading (Ray, 2023).

In light of these developments, the use of ChatGPT in education, including its use in assessment, has been the focus of recent research. Importantly, it has been found (Clark, 2023) that ChatGPT is less successful at problem-solving and open-ended questions than at answering closed-ended questions, and that it could be useful in assignments in which students analyze the chatbot’s output in order to improve it.

Meaningful integration of AI in education has been perceived as a lengthy process (Rudolph et al., 2023), especially if it is based on a top-down policy development approach. Therefore, our aim is to use a bottom-up approach to identify best practices, speed up the process and enhance the quality of AI integration in education.

3 METHODOLOGY

Our research focused on the following research questions:

RQ1. How do students use AI chatbots in PBL?

RQ2. What are the benefits and risks of the possible approaches teachers can take to integrating AI in PBL?

RQ3. What are the generic models of students’ use of generative AI in PBL with respect to study level, experience with AI and type of assessment?

To answer these questions, we used mixed-methods research, specifically a multi-case study methodology (Yin, 2017). The study followed an action research approach directed towards introducing changes in practice (Clarke, 2023), with course teachers studying their own classrooms (Mertler, 2020). In this sense, it involved course teachers both as researchers and as research participants, with a focus on reflective practice (Cohen et al., 2011). As action research is a less intrusive approach, the study drew on several types of data and analyses, depending on their availability.

3.1 Study Setting

The study was conducted in the academic years 2022/2023 and 2023/2024 at a higher education institution (HEI) offering undergraduate, graduate and postgraduate study programs in IT and related fields such as e-learning. Specifically, the study encompassed six courses:

• Undergraduate level: Mathematics 2 (MAT2), Introduction to IT Projects (IITP), Informatics Services Management (ISM)
• Graduate level: Project Cycle Management (PCM), Project Cycle Management in IT (PCM IT)
• Postgraduate level: E-Learning Strategy and Management (ELSM)

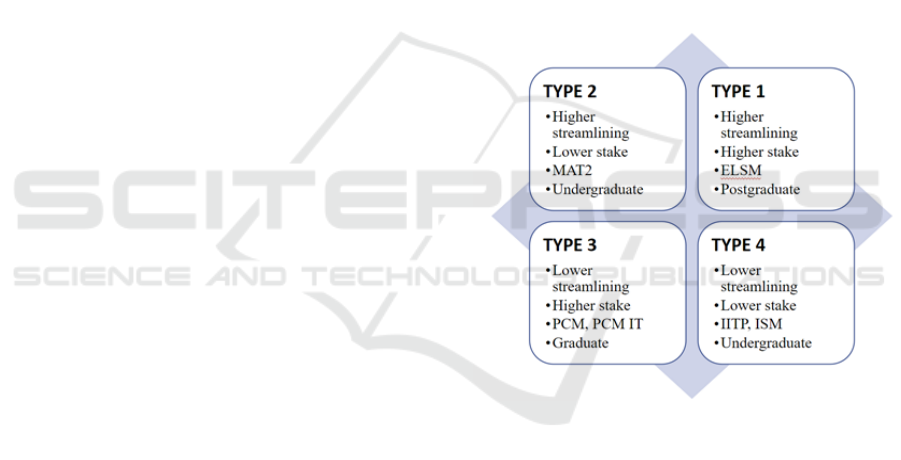

Figure 1: Research cases (courses) in a multi-case study matrix.

We described four types of research cases by placing the chosen courses (Table 1) in a matrix (Figure 1) (Yin, 2017) along two dimensions: (1) the extent to which teachers streamlined students’ use of AI in PBL and (2) the influence of the PBL task on the final grade. Additionally, we chose research cases at different levels of study: undergraduate (bachelor), graduate (master) and postgraduate.

TYPE 1. E-Learning Strategy and Management (ELSM). This is a first-year postgraduate course (study program: E-learning in Education and Business) with a student workload of 5 ECTS credits (around 150 hours). The assessment program includes regular quizzes and discussions (formative assessment) and assignments done by the students either individually or in teams (summative assessment). One of these assignments is an essay on a chosen topic related to strategic decision-making based on relevant data sources, and another is an essay on scenarios for the future of education. Each of these assignments contributes 10% to the total grade. In the academic year 2023/2024, the students were instructed to use a GenAI chatbot such as ChatGPT in preparing the two essays and to include a critical reflection on the results in their essays. Both assignments are assessed based on criteria and a rubric that includes the assessment of the use of AI. The students were asked to fill in a short questionnaire about their experience with using an AI chatbot.

Table 1: Research case descriptions.

| Course | Subject area | Type of activity | Phase of PBL | Individual/team | Use of AI |
|---|---|---|---|---|---|
| E-Learning Strategy and Management | Education | Preparing a strategic plan for e-learning | Initiating and planning | Individual | Students were instructed to use AI |
| Mathematics 2 | Mathematics | Solving mathematical problems | Problem-solving | Individual | Students were instructed how to use a chatbot |
| Project Cycle Management | Project Management | Preparing a project proposal for EU funding | Artifact production | Team | Students were not recommended to use AI |
| Introduction to IT Projects | Project Management | Preparing an initial IT project description | Initiating and planning | Team | Students were not recommended to use AI |
| Informatics Services Management | IT | Preparing a needs analysis for IT services | Initiating and planning | Team | Students were allowed (but not instructed) to use AI |
| Project Cycle Management in IT | Project Management, IT | Preparing a project proposal for EU funding | Artifact production | Team | Students were not recommended to use AI |

TYPE 2. Mathematics 2 (MAT2). This is a first-year undergraduate course (study program: Informatics) with a student workload of 6 ECTS credits (about 180 hours). The assessment program includes quizzes and homework assignments (formative assessment), three periodical exams and a problem-solving essay (summative assessment). The essay contributes 10% to the total grade. In the academic years 2022/2023 and 2023/2024, the essay exercise was upgraded to include GenAI as an assistant to students in problem-solving, as well as students’ critical reflection on GenAI as a partner in PBL. Students were given individualized problem-solving tasks with instructions on how to use GenAI in PBL. They had to report on the results of their work with GenAI and then further research a given topic to solve a mathematical problem; they were asked to analyze the solutions and provide a critical evaluation of their work with GenAI. Students also provided feedback on the problem-solving task via a questionnaire.

TYPE 3. Project Cycle Management (PCM).

This is a second-year graduate level course (study

program: Economics), with a workload of 4 ECTS

credits (about 120 hours). The assessment program

includes homework assignments (formative

assessment), two periodical exams and preparation of

a project application using PBL (summative

assessment). PBL contributes 40% to the final grade.

The project application is prepared in a team, and in

line with a relevant financing program. In the

academic year 2023/2024, students were given a

simplified Erasmus+ project application template,

and worked on its parts in classes, where they

received formative feedback, but had to complete the

project application autonomously as a team. In doing

so, they were not advised to use the support of GenAI.

Project applications were assessed based on a rubric.

The students were asked to fill in a short

PBL Meets AI: Innovating Assessment in Higher Education

123

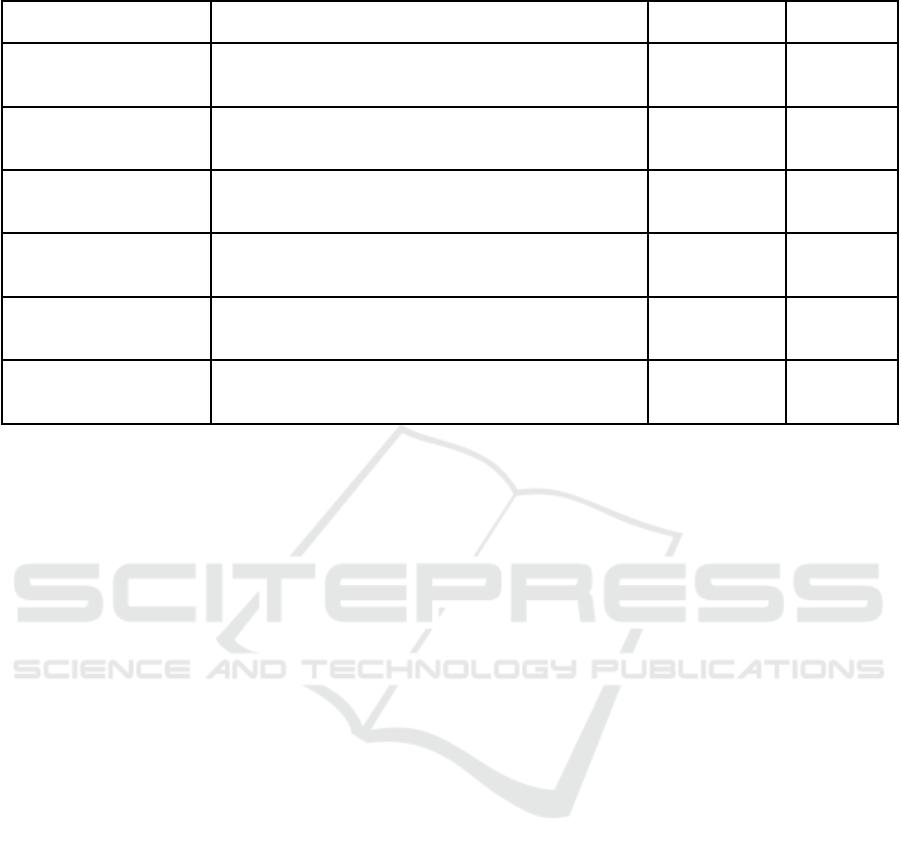

Table 2. Data collection and analysis.

Course Source of information Anal

y

sis Sam

p

le

E-Learning Strategy

and Mana

g

ement

Reported by students in assignments; teachers’ insights

from students’ assi

g

nments; a follow-u

p

q

uestionnaire

Quantitative

and

q

ualitative

32 students

3 teachers

Mathematics 2

Reported by students in the essay and the follow-up

questionnaire

Quantitative

and qualitative

229 students

6 teachers

Project Cycle

Mana

g

ement

Teachers’ insights from students’ assignments; a follow-

u

p

q

uestionnaire

Quantitative

and

q

ualitative

21 students

3 teachers

Introduction to IT

Pro

j

ects

Teachers’ insights from students’ assignments;

discussion in classes

Qualitative 91 students

3 teachers

Informatics Services

Management

Teachers’ insights from students’ assignments;

discussion in classes

Qualitative 210 students

6 teachers

Project Cycle

Management in IT

Teachers’ insights from students’ assignments; a follow-

up questionnaire

Qualitative 9 students

3 teachers

questionnaire which questions about their (possible)

use of AI in the preparation of the project application.

TYPE 3. Project Cycle Management in IT (PCM IT). This is a first-year graduate course (study program: Informatics) with a workload of 4 ECTS credits (about 120 hours). The assessment program includes quizzes and homework (formative assessment), two periodical exams and two PBL-type project assignments (summative assessment). PBL is done in teams and contributes 60% to the final grade. In the first project assignment, students plan an IT project using standard IT project management methods. In the second project assignment, students prepare a project application in line with a relevant financing program. In the academic year 2023/2024, students worked on a simplified template for an EU-financed, IT-related project application, with parts of the application discussed in classes, where students received formative feedback. Each team finalized the project application autonomously, and the teams were not advised to use GenAI support. Project applications were assessed based on a rubric. The students filled in a short questionnaire that included questions about their (possible) use of AI while working on the project application.

TYPE 4. Introduction to IT Projects (IITP). This is a second-year undergraduate course (study program: Applied IT) with a workload of 3 ECTS credits (about 90 hours). The assessment program includes weekly assignments during seminars (formative assessment), two periodical exams and a PBL-type IT project (summative assessment). PBL contributes 40% to the final grade. In the academic year 2023/2024, students were given the task of preparing a proposal for an IT project that describes its main elements. Student proposals were submitted in the LMS and discussed with teachers and peers in classes. Students were not recommended to use GenAI support for this task.

TYPE 4. Informatics Services Management (ISM). This is a second-year undergraduate course (study program: Informatics) with a student workload of 4 ECTS credits (about 120 hours). The assessment program includes assignments during laboratory exercises (formative assessment), two periodical exams and a prototype developed using the PBL approach (summative assessment). PBL contributes 50% to the final grade. In the academic year 2023/2024, each student group was given a project task by the teacher. Students worked on the assignments every week in laboratory exercises and continued at home. Students were allowed to use AI during laboratory exercises and discussed the obtained solutions with the teacher as part of formative assessment.
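Each course description pairs an ECTS value with an approximate workload in hours. The following is a minimal sketch of that arithmetic, assuming a conversion factor of 30 hours of student work per ECTS credit; this factor is inferred from the figures above and lies at the upper end of the 25 to 30 hours per credit used in the ECTS framework. The names `HOURS_PER_ECTS`, `COURSES`, `workload_hours` and `check_courses` are hypothetical and serve only as illustration.

```python
from typing import Dict, Tuple

# Assumption: 30 hours of student work per ECTS credit, inferred from the
# course descriptions (the ECTS framework allows 25 to 30 hours per credit).
HOURS_PER_ECTS = 30

# Hypothetical table: course -> (ECTS credits, stated approximate workload in hours),
# with values taken from the course descriptions in Section 3.1.
COURSES: Dict[str, Tuple[int, int]] = {
    "ELSM": (5, 150),
    "MAT2": (6, 180),
    "PCM": (4, 120),
    "PCM IT": (4, 120),
    "IITP": (3, 90),
    "ISM": (4, 120),
}


def workload_hours(ects: int, hours_per_ects: int = HOURS_PER_ECTS) -> int:
    """Return the approximate student workload in hours for a number of ECTS credits."""
    return ects * hours_per_ects


def check_courses(courses: Dict[str, Tuple[int, int]]) -> Dict[str, bool]:
    """Map each course to True if its stated hours equal the computed workload."""
    return {name: workload_hours(ects) == hours for name, (ects, hours) in courses.items()}


if __name__ == "__main__":
    for name, (ects, hours) in COURSES.items():
        computed = workload_hours(ects)
        print(f"{name}: {ects} ECTS x {HOURS_PER_ECTS} h = {computed} h (stated: about {hours} h)")
```

Under this assumption, every stated workload matches the computed value, so a single factor is enough to move between credits and hours for all six courses.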

3.2 Data Collection and Analysis

The data were collected in the academic years 2022/2023 and 2023/2024. Depending on the course, they included data collected directly from students (assessment grades, reports in assignments, and questions about the use of AI integrated into the student questionnaires at the end of the course) and teacher testimonials (based on insights from students’ assignments and discussions in class). Since, in line with the action research approach, the teachers/researchers studied their own classrooms and provided testimonials immediately after the teaching and learning activities were completed, convenience sampling was used, based on the availability of data from the respective courses (Cohen et al., 2011). Importantly, however, the sampling of courses was targeted so as to include several levels of study and several scientific disciplines. More details on the data collection and analysis are presented in Table 2.

4 RESULTS

4.1 E-Learning Strategy and Management

Within the two assignments of this course, students were instructed to use AI and then to report and critically reflect on their work with AI. It should be noted that the students of this course are adults, primarily in-service teachers.

The students were interested in the assignments, as reflected in the fact that 30 out of 32 students filled in the questionnaire to share their experience and that 67% reported that using AI in the assignments was interesting to them. The vast majority of the students were in-service K-12 teachers, whose interest in the use of AI was further triggered by these assignments, as the assignments motivated them to use AI in their own teaching practice. All the students replied that, after the assignments, they were going to use AI in a supervised way in their classrooms, even if they had not done so before. While 37% had already used AI before, 63% had not. A majority of the students (67%) reported that they would have used AI even if the course teachers had not instructed them to, while only one third of the students reported that they would not have mentioned it in the references.

When asked about the positive sides of using AI chatbots, a majority of students found them useful for providing the overall structure of the topic, new hints and ideas, and direction for further research. A third of the students pointed out that AI chatbots were useful for providing fast access to basic information. Several students also identified advantages in text formulation, the creation of tables, graphs and other graphic representations, and summarizing sources.

As for the negative sides, a third of the students thought that AI chatbots were not reliable or trustworthy, as they provided incorrect and invented information. In this regard, some stressed the need for a critical approach and for checking the accuracy of the answers, which means extra work for the students.

While assessing the students’ assignments, the teachers observed that the students did use AI and referred to it in their assignments, but the reported AI output was often generic and not substantive. This may be related to AI’s non-specific responses, but also to the students’ poorly targeted prompts. Moreover, the students often provided no critical reflection on the AI’s responses, or their reflection was rather superficial, lacking critical analysis and fact-checking. Finally, the teachers reported that giving constructive feedback addressing both the content and the critical use of AI was time-consuming.

4.2 Mathematics 2

In the PBL within this course, the use of an AI chatbot was also highly structured, with students receiving clear instructions on how to use AI and report on their work with it. Student assessment results achieved without and with chatbots (in two consecutive years, 2021/2022 and 2022/2023, respectively) were comparable, though the results were slightly better without chatbots. Furthermore, students who performed better in the course as a whole were also more successful in PBL. Importantly, the great majority of students reported that they already had experience using chatbots for learning.

Students’ responses to the questionnaire indicated that they found AI chatbots useful for finding and verifying information, but were not worried about their accuracy, particularly when it comes to calculations in mathematics, and were not very satisfied with AI’s capabilities in recommending literature or its effectiveness in problem-solving. Comprehensive results on the use of AI in PBL in this course in the academic year 2022/2023 are described in the article “Generative AI in mathematics education: analysing student performance and perceptions over three academic years” (Divjak et al., 2025).

Additionally, according to the course teachers’ testimonials, the AI-supported PBL exercise was successful, as the students learned about the benefits and downsides of using AI. The teachers are less worried about AI’s ability to calculate correctly than about the misinterpretation of mathematical concepts, since understanding mathematical concepts is essential for developing mathematical reasoning. Finally, the teachers reported that designing meaningful assignments that enable a critical approach to the use of AI for a large group of students was highly demanding.


4.3 Project Cycle Management and Project Cycle Management in IT

In the PBL tasks within these two courses, the use of AI was not recommended. While assessing students’ projects, the course teachers told some student teams in their feedback that they had noticed the use of AI in the descriptive parts. The teachers recognized the use of AI chatbots primarily from the way the text was formed: general in content and complex in expression. At the same time, the students did not list AI chatbots in their references.

In the questionnaire, almost all the students (21/22) reported using the support of AI tools at least to some extent, but none reported using them to a great extent. More than half of the students (55%) reported using them moderately or quite a lot, while fewer than half (41%) reported using them a little. Students reported that they predominantly used AI for work-intensive tasks such as preparing the project budget, risk analysis, the project management description and horizontal topics (e.g., green practices, inclusiveness).

4.4 Introduction to IT Projects

In PBL, the use of AI was not recommended. When analyzing the students’ assignments, the course teacher noticed the use of AI in the following elements:
• The vocabulary was unusual for students, as it included complex and professional terms.
• Students were not able to explain the meaning of certain parts of the text.
• The structure used to elaborate the project idea (i.e., the phases of IT project development) was repeated almost identically in several teams, although the students had not received instructions for a specific structure; moreover, students could not explain whether they had learned such a structure in other courses.
• In presenting the IT project budget, some teams made obvious mistakes as a result of uncritical copying of text (e.g., using dollars instead of euros).

During the discussion with the teacher and peers, the students admitted that they had used an AI chatbot to prepare the elements of the project proposals that the teacher had identified, but they were not aware that they should reflect on this.

4.5 Informatics Services Management

In PBL, students were allowed, but not instructed, to use AI. Although the teachers allowed students to use AI chatbots during exercises, the students rarely used them. However, when discussing homework assignments, the teachers noted (and the students confirmed) that the students had used AI. These are creative tasks that require thinking about the given problem and the end users of the solution, yet the teachers noticed that in certain segments the students’ solutions were very generic and insufficiently concrete. For example, students asked AI to propose the steps of a customer journey map and a biography for a persona, but they received very generic solutions because they did not include more detailed information about their specific project task in the query posed to AI. The teachers noted that, in most cases, the students should have further refined the solutions obtained from AI.

5 DISCUSSION

In PBL, students develop critical thinking and use different resources, simulating the real world (Savery, 2006), and AI can be used to support this. The six research cases presented describe situations of using generative AI in PBL, which generally gives students a certain degree of autonomy and flexibility in organizing their own learning and problem-solving. But this “hands-on and open-ended nature” of PBL also means less control for teachers and more complex tracking of the integrity of students’ work (Spikol et al., 2018).

It should be noted that PBL cannot be integrated in the same way in every subject, and that the integration of AI into teaching, learning and assignments depends on the specificities of the course content and learning outcomes. Our aim was to illustrate different approaches and practices and to generalize some aspects in order to provide a framework that can accommodate different courses.

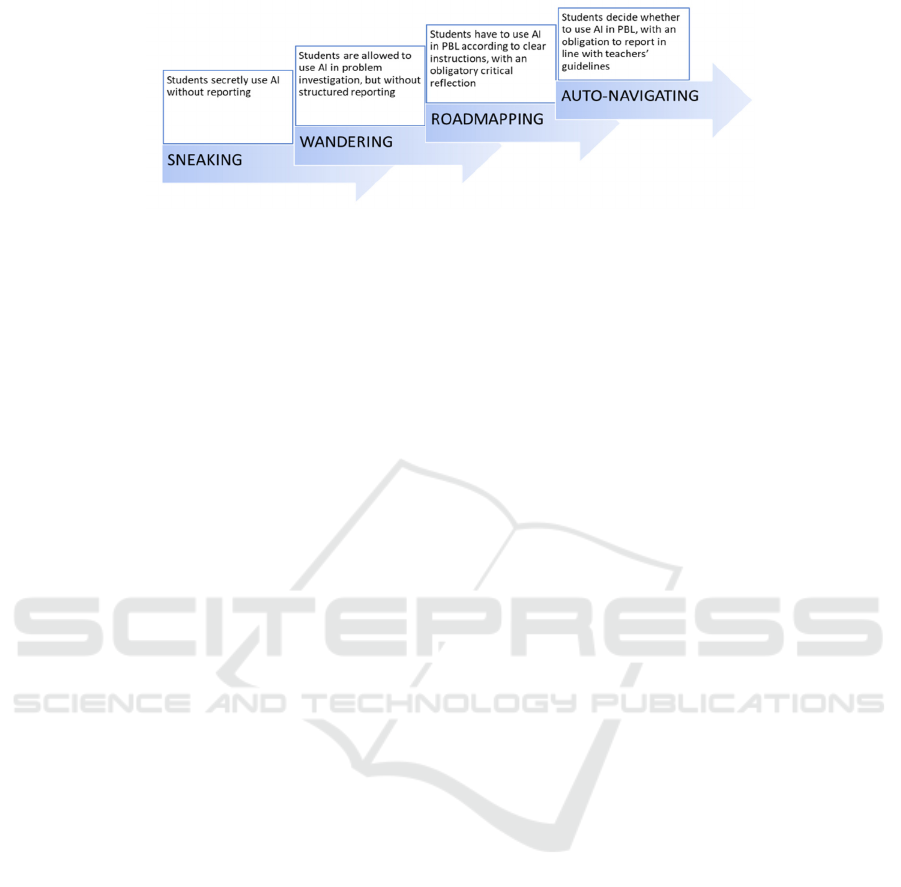

5.1 How Students Use AI in PBL

Considering the presented cases, we identified several possible ways in which students can use AI in PBL tasks, as presented in Figure 2. With the rapid rise of GenAI, especially since the launch of ChatGPT in November 2022, students increasingly started using GenAI chatbots in their assignments and usually did not report on it (Sneaking). Some teachers recognized this and started openly discussing the use of GenAI in assignment preparation when they noticed ideas and patterns possibly produced by GenAI. Teachers tried to integrate GenAI into the initial phases of PBL that are low-stakes in terms of assessment, such as problem investigation (Wandering). In order to streamline the process and help students recognize the benefits and challenges of using AI, teachers integrated AI as an obligatory tool in PBL assignments, but with clear instructions for students on how to use AI and critically reflect on its use. This contributed to the development of the AI literacy of both students and teachers (Roadmapping), in terms of not only using AI applications but also understanding the underlying concepts and related ethical concerns, as a prerequisite for the responsible use of AI (Ng et al., 2021). Finally, the goal is to support the informed use of AI by students, in which case AI can be an optional tool, but students have the responsibility to report on their use of AI and to use it critically, applying their AI literacy skills and being mindful of AI ethics considerations (European Commission, 2019) (Auto-navigating).

Figure 2: How students use AI in PBL.

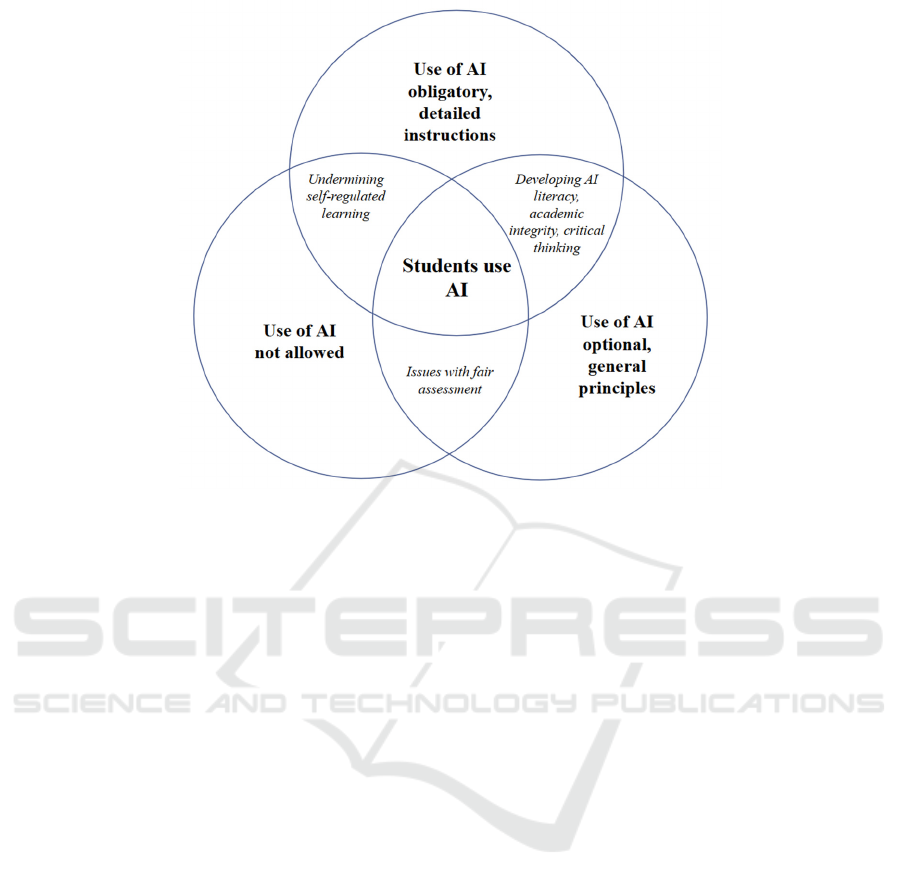

5.2 Benefits and Risks of Teachers’ Approaches to Using AI in PBL

Teachers can take different approaches to integrating (or not integrating) AI into PBL:

A teacher can simply forbid or ignore the use of AI. Our results show that students use AI regardless of whether their teachers explicitly permit it (e.g., in the PCM courses). Students can benefit from additional, instantly available sources and support, but this can also lead to misinformation and misguidance from AI. On the one hand, an experienced teacher can detect the use of AI in students’ assignments even when it is not reported. On the other hand, there is a risk that an inexperienced teacher will not detect the use of AI and will award students credit they do not fairly deserve. In this case, students who secretly use AI may get better grades without achieving the learning outcomes. This “ostrich” approach can be harmful for students’ learning and for the development of academic integrity. Considering these risks, such a “policing” approach is not recommended (Rudolph et al., 2023), and teachers using PBL should avoid it.

A teacher can make the use of AI obligatory. Our results show that students can benefit from a structured use of AI, which is interesting and challenging, since it requires a critical approach to AI outputs (for example, in Mathematics 2 and E-Learning Strategy and Management). Among the benefits, AI can give students hints for orientation within a new topic and directions for further research. Moreover, this approach contributes to the development of students’ and teachers’ AI literacy, students’ critical thinking, and the recognition of the principles of academic integrity. However, there is a risk that some students will not recognize misinformation provided by AI. This “shepherd dog” approach also limits students’ flexibility and undermines self-regulation. For teachers, giving constructive feedback on both the content of the PBL assignment and students’ critical use of AI may be time-consuming. In addition, not every topic lends itself to work with AI in a way that enables substantial critical analysis by students, which calls for a substantial level of AI and assessment literacy on the teachers’ part. Finally, this approach is neither appropriate for every PBL assignment nor beneficial for all students over a longer period of time.

A teacher can make the use of AI optional. This “owl” approach is suitable for AI-literate students and teachers who accept AI as imperfect but immediately available support, and who are skilled in using AI in line with general academic principles. In terms of benefits, this approach supports self-regulated learning and students’ autonomy. On the other hand, teachers have to be able either to trust that their students use AI responsibly or to critically examine their students’ assignments to detect potential unfair practices. This approach can be risky if it is not preceded by adequate preparation of students, for example through the obligatory approach described above. Furthermore, it calls for careful assessment planning and possibly a redesign of assessment criteria, to take into account that some students use AI while others do not, and to ensure fair assessment.


Figure 3: Major benefits and risks of teachers’ approaches

Figure 3 presents the major benefits and risks at the intersections of these approaches.

5.3 Models of Integrating AI in PBL

The assessment tasks described in the research case studies differ in at least three aspects: first, the extent to which the teacher streamlines the process of integrating AI in PBL; second, the weight of the PBL task in the final grade; and third, the level of HE.

The benefits and risks of different teachers’ and students’ approaches were covered in the previous sections. Based on the presented research cases and analyses, we now describe three models of using AI in PBL, applicable in different contexts.

Model 1 (FIRM): The teacher requires the use of AI in PBL and gives instructions on how to use it and how to reflect on the results, since GenAI is not very reliable in problem-solving (Clark, 2023). The aim is to give students experience in learning with AI and a critical understanding of its benefits and downsides. This model is recommended for high-stakes (summative) assessment, at lower levels of HE, and when students are less familiar with academic integrity, including the responsible use of AI.

For example, the MAT2 and ELSM courses describe situations in which students practice how to use AI, creating meaningful prompts and recognizing its limitations. The results show that students are satisfied with this guided way of using AI and find it interesting. On the one hand, students practice critical thinking and AI literacy; on the other hand, teachers know what they are assessing and can assess students’ critical analysis of the AI answers.

This model is important for freshmen and for students just being introduced to a subject, or when (institutional) practices regarding the use of AI are still being established, e.g., how AI is used and referenced, and what constitutes the author’s own contribution. Ideally, the goal is to progress towards the model in which the use of AI is optional, but with clear institutional guidelines.

Model 2 (RELAXED): The teacher allows the use of AI but does not give instructions about reflection and reporting. This is suitable for the initial phases of PBL, when results can still be discussed and AI outputs can later be adequately referenced. This model can also be used once students have gone through the “firm” Model 1 and have acquired critical AI literacy skills, including academic integrity. Otherwise, students may be confused about the use of AI.

For example, in the ISM and IITP courses, students admitted that they had used AI at home but hesitated to use it in class, even though they were allowed to. This might be because they had already come to regard AI as a tool for cheating. In this model, teachers should insist on transparency and present AI as a legitimate tool and the students’ “teammate” (Fryer et al., 2019). Students still need some guidance on the use of AI if they are not skilled in formulating questions for problem-based tasks that require creative solutions.


This model is applicable primarily in formative assessment, when teachers still give feedback during the problem-solving process. It also allows teachers to calibrate the instrument for summative assessment. Summative assessment, however, requires a more structured approach.

Model 3 (FLEXIBLE): Teachers do not give instructions on the use of AI, but students use it anyway. As such situations cannot be controlled in PBL, it is better to think of ways of using AI in a structured way that supports students’ problem-solving skills. This does not imply that the use of AI should be obligatory; rather, students should be left to decide whether to use it, with the responsibility of reporting its use.

For example, the PCM courses show that teachers cannot ban the use of AI, as they do not control the PBL environment. Teachers may or may not find out indirectly that students used AI, which may affect the fairness of assessment.

To successfully implement this “flexible” model, in which students can choose whether to use AI in PBL summative assessment, students and teachers have to be sufficiently mature and literate in using AI. A prior implementation of the “firm” Model 1 can be an asset in achieving this. Alternatively, training and institutional guidelines for teachers and students provided by the higher education institution (HEI) can be beneficial.

5.4 Limitations and Further Research

The main limitation of this study is that it includes a limited number of research cases, namely courses in specific subject areas. Furthermore, the data collected for these research cases differ in volume, type and quality. It would therefore be valuable to conduct further research in other educational contexts, harmonize data collection, and, on that basis, conduct more sophisticated statistical analyses.

6 CONCLUSION

We conducted case study research including six research cases (courses at all three levels of higher education) to analyze approaches to the use of AI in project-based and problem-based learning (PBL). Based on the six cases, we identified three possible models of introducing AI in PBL. The three models differ in several aspects. The first is whether the use of AI in PBL is obligatory, optional or not allowed. The second is whether students learn with AI following detailed teacher instructions or use AI more flexibly, but (presumably) in line with general academic and ethical principles. The third is whether PBL-based assessment is high-stakes or low-stakes. The analysis showed that it is advisable to introduce AI in PBL as early in the studies as possible, and that students benefit from structured and comprehensive instructions. This also contributes to the trustworthiness of the use of AI in PBL, as well as to students’ AI literacy, and is especially relevant for high-stakes PBL assessment. In the second phase of integrating AI in PBL, the use of AI can be optional for students, but, if used, it should be reflected on and reported to ensure ethics and academic integrity, as well as the critical use of AI. We should be aware that the use of AI in PBL is not something that can or should be forbidden. On the one hand, this is due to teachers’ limited control over students’ learning processes. On the other hand, AI is here to stay, and we should learn how to use it meaningfully in problem-solving and in developing creative project-based solutions.

ACKNOWLEDGEMENT

This work has been supported in part by the Trustworthy Learning Analytics and Artificial Intelligence for Sound Learning Design (TRUELA) project, financed by the Croatian Science Foundation (IP-2022-10-2854).

REFERENCES

Allen, D. E., Donham, R. S., & Bernhardt, S. A. (2011). Problem-based learning. New Directions for Teaching and Learning, 2011(128), 21–29. https://doi.org/10.1002/tl.465

Brassler, M., & Dettmers, J. (2017). How to Enhance Interdisciplinary Competence—Interdisciplinary Problem-Based Learning versus Interdisciplinary Project-Based Learning. Interdisciplinary Journal of Problem-Based Learning, 11(2). https://doi.org/10.7771/1541-5015.1686

Chan, C. K. Y., & Hu, W. (2023). Students’ voices on generative AI: Perceptions, benefits, and challenges in higher education. International Journal of Educational Technology in Higher Education, 20(1), 43. https://doi.org/10.1186/s41239-023-00411-8

Chang, C.-Y., Kuo, S.-Y., & Hwang, G.-H. (2022). Chatbot-facilitated Nursing Education: Incorporating a Knowledge-Based Chatbot System into a Nursing Training Program. Educational Technology & Society, 25(1), 15–27.


Chen, Y., Jensen, S., Albert, L. J., Gupta, S., & Lee, T. (2023). Artificial Intelligence (AI) Student Assistants in the Classroom: Designing Chatbots to Support Student Success. Information Systems Frontiers, 25(1), 161–182. https://doi.org/10.1007/s10796-022-10291-4

Clark, T. M. (2023). Investigating the Use of an Artificial Intelligence Chatbot with General Chemistry Exam Questions. Journal of Chemical Education, 100(5), 1905–1916. https://doi.org/10.1021/acs.jchemed.3c00027

Clarke, A. (2023). Teacher inquiry: By any other name. In R. J. Tierney, F. Rizvi, & K. Ercikan (Eds.), International Encyclopedia of Education (4th ed., pp. 232–242). Elsevier.

Cohen, L., Manion, L., & Morrison, K. (2011). Research Methods in Education (7th ed.). Routledge, Taylor and Francis Group.

Dai, W., Lin, J., Jin, H., Li, T., Tsai, Y.-S., Gašević, D., & Chen, G. (2023). Can Large Language Models Provide Feedback to Students? A Case Study on ChatGPT. 2023 IEEE International Conference on Advanced Learning Technologies (ICALT), 323–325. https://doi.org/10.1109/ICALT58122.2023.00100

Divjak, B., Svetec, B., & Horvat, D. (2025). Generative AI in Mathematics Education: Analysing Student Performance and Perceptions over Three Academic Years. International Journal of Technology Enhanced Learning. Accepted for publication.

Dochy, F., Segers, M., Van den Bossche, P., & Gijbels, D. (2003). Effects of problem-based learning: A meta-analysis. Learning and Instruction, 13(5), 533–568. https://doi.org/10.1016/S0959-4752(02)00025-7

Dole, S., Bloom, L., & Kowalske, K. (2015). Transforming Pedagogy: Changing Perspectives from Teacher-Centered to Learner-Centered. Interdisciplinary Journal of Problem-Based Learning, 10(1). https://doi.org/10.7771/1541-5015.1538

Doumanis, I., Sim, G., & Read, J. (2021). Problem-Based Learning and AI. ALIEN Active Learning in Engineering Education. http://projectalien.eu/wp-content/uploads/2021/03/D4.1-PBLand-AI-community-document.pdf

Dwivedi, Y. K., Kshetri, N., Hughes, L., Slade, E. L., Jeyaraj, A., Kar, A. K., Baabdullah, A. M., Koohang, A., Raghavan, V., Ahuja, M., Albanna, H., Albashrawi, M. A., Al-Busaidi, A. S., Balakrishnan, J., Barlette, Y., Basu, S., Bose, I., Brooks, L., Buhalis, D., … Wright, R. (2023). Opinion Paper: “So what if ChatGPT wrote it?” Multidisciplinary perspectives on opportunities, challenges and implications of generative conversational AI for research, practice and policy. International Journal of Information Management, 71, 102642. https://doi.org/10.1016/j.ijinfomgt.2023.102642

European Commission. (2019). Ethics Guidelines for Trustworthy AI. https://digital-strategy.ec.europa.eu/en/library/ethics-guidelines-trustworthy-ai

Fryer, L. K., Nakao, K., & Thompson, A. (2019). Chatbot learning partners: Connecting learning experiences, interest and competence. Computers in Human Behavior, 93, 279–289. https://doi.org/10.1016/j.chb.2018.12.023

Kek, M. Y. C. A., & Huijser, H. (2011). The power of problem-based learning in developing critical thinking skills: Preparing students for tomorrow’s digital futures in today’s classrooms. Higher Education Research & Development, 30(3), 329–341. https://doi.org/10.1080/07294360.2010.501074

Korsakova, E., Sokolovskaya, O., Minakova, D., Gavronskaya, Y., Maksimenko, N., & Kurushkin, M. (2022). Chemist Bot as a Helpful Personal Online Training Tool for the Final Chemistry Examination. Journal of Chemical Education, 99(2), 1110–1117. https://doi.org/10.1021/acs.jchemed.1c00789

Lee, D., & Yeo, S. (2022). Developing an AI-based chatbot for practicing responsive teaching in mathematics. Computers & Education, 191, 104646. https://doi.org/10.1016/j.compedu.2022.104646

Marshall, C. (2023). Noam Chomsky on ChatGPT: It’s “Basically High-Tech Plagiarism” and “a Way of Avoiding Learning.” Open Culture. https://www.openculture.com/2023/02/noam-chomsky-on-chatgpt.html

Mertler, C. A. (2020). Action research: Improving schools and empowering educators (6th ed.). SAGE.

Ng, D. T. K., Leung, J. K. L., Chu, S. K. W., & Qiao, M. S. (2021). Conceptualizing AI literacy: An exploratory review. Computers and Education: Artificial Intelligence, 2, 100041. https://doi.org/10.1016/j.caeai.2021.100041

Okonkwo, C. W., & Ade-Ibijola, A. (2021). Chatbots applications in education: A systematic review. Computers and Education: Artificial Intelligence, 2, 100033. https://doi.org/10.1016/j.caeai.2021.100033

Ray, P. P. (2023). ChatGPT: A comprehensive review on background, applications, key challenges, bias, ethics, limitations and future scope. Internet of Things and Cyber-Physical Systems, 3, 121–154. https://doi.org/10.1016/j.iotcps.2023.04.003

Rudolph, J., Tan, S., & Tan, S. (2023). ChatGPT: Bullshit spewer or the end of traditional assessments in higher education? Journal of Applied Learning & Teaching, 6(1). https://doi.org/10.37074/jalt.2023.6.1.9

Savery, J. R. (2006). Overview of Problem-based Learning: Definitions and Distinctions. Interdisciplinary Journal of Problem-Based Learning, 1(1). https://doi.org/10.7771/1541-5015.1002

Spikol, D., Ruffaldi, E., Dabisias, G., & Cukurova, M. (2018). Supervised machine learning in multimodal learning analytics for estimating success in project-based learning. Journal of Computer Assisted Learning, 34(4), 366–377. https://doi.org/10.1111/jcal.12263

Thorndahl, K. L., & Stentoft, D. (2020). Thinking Critically About Critical Thinking and Problem-Based Learning in Higher Education: A Scoping Review. Interdisciplinary Journal of Problem-Based Learning, 14(1). https://doi.org/10.14434/ijpbl.v14i1.28773

Yin, R. K. (2017). Case Study Research and Applications: Design and Methods (6th ed.). Sage Publications.
