Data Governance Capabilities Model: Empirical Validation for Perceived Usefulness and Perceived Ease of Use in Three Case Studies of Large Organisations

Jan R. Merkusᵃ, Remko W. Helmsᵇ and Rob J. Kustersᶜ

Faculty of Beta Sciences, Open Universiteit, Valkenburgerweg 177, Heerlen, The Netherlands

Keywords: Data Governance, Capabilities Models, Empirical Validation, TAM Evaluation, Metaplan.

Abstract: A Data Governance Capabilities (DGC) model for measuring the status quo of Data Governance (DG) in an

organisation has been validated in practice. After DG experts gained experience with the operationalised DGC

model, we evaluated its perceived usefulness (PU) and perceived ease of use (PEOU) in case studies of three

large organisations in the Netherlands. PU and PEOU are evaluated positively, but a moderator and

knowledgeable participants remain necessary to make a meaningful contribution.

1 INTRODUCTION

Data Governance (DG) is relevant for Corporate

Governance because data is seen as one of the most

valuable assets (Hugo, 2024; Addagada, 2023).

Recent research identifies DG as relevant for

corporate financial reporting, economic performance,

or the facilitation of corporate takeovers, and a

positive influence of DG on corporate governance

(Addagada, 2023). Additionally, DG is promising in

successfully maximising value from data (Schmuck,

2024).

An organisation's ability to govern data can be

determined by its data governance capabilities (DGC)

to execute certain data governance activities (Merkus

et al., 2021). Capabilities are defined as “the

collective abilities of an organisation to carry out

business processes that contribute to its

performance” (Brennan et al., 2018; Merkus et al.,

2020). Governance, and hence DG, can be measured

in terms of capabilities (Rosemann & De Bruin, 2005;

Otto et al., 2022). When doing so, a DGC reference

model can be useful in determining which DGCs are

relevant. A DGC reference model is a model from

which one can, depending on the organisation, select

relevant DGCs, e.g. to determine its DG status quo

for further improvement (Merkus, 2023).

ᵃ https://orcid.org/0000-0003-2216-7816

ᵇ https://orcid.org/0000-0002-3707-4201

ᶜ https://orcid.org/0000-0003-4069-5655

However, we could not find a validated DGC

reference model, so we developed one consisting of

34 DGCs. Recently, we presented an empirical

validation of these 34 different DGCs, forming a

DGC (reference) model (Merkus et al., 2023). Each

of the DGCs in the model was empirically validated

in practice by DG activities occurring in large

organisations. In this study, we design an approach to

use the DGC model in practice to validate its

usefulness and ease of use in its entirety.

Consequently, the resulting research question is as

follows:

To what extent is the DGC model useful and easy

to use in practice when used to determine the status

quo of Data Governance in large organisations?

This research's theoretical relevance is adding an

empirically validated DGC reference model to the

literature. Its practical relevance is providing large

organisations with a set of DGCs to improve their

DG. The remainder of this paper is outlined as

follows. First, an overview of related work is

presented. Next, our research approach is described.

Third, the research results and analysis are presented.

Finally, the results are discussed, followed by the

conclusions, limitations and future research.

Merkus, J. R., Helms, R. W. and Kusters, R. J.

Data Governance Capabilities Model: Empirical Validation for Perceived Usefulness and Perceived Ease of Use in Three Case Studies of Large Organisations.

DOI: 10.5220/0013358100003929

In Proceedings of the 27th International Conference on Enterprise Information Systems (ICEIS 2025) - Volume 1, pages 99-108

ISBN: 978-989-758-749-8; ISSN: 2184-4992

Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)

2 RELATED WORK

This section reviews the current state of the literature

on DGC research and establishes the motivation for

this research. DGCs describe an organisation’s

ability to execute data governance activities (Merkus

et al., 2021). DGCs can be used to develop and

execute DG in an organisation. Furthermore, DGCs

are the basis of DG maturity models. With these

DGCs, an organisation can determine its DG status

quo (Merkus et al., 2023). When this DG status quo

is known, a DG future state can be set, DG

capabilities can be developed accordingly, and

benchmarks with other organisations can be made

(Pöppelbuss et al., 2011).

Previous studies discussed other DGC and DG

maturity models (Rifaie, 2009; Rivera, 2017;

Permana, 2018; Dasgupta, 2019; Heredia, 2019;

Olaitan, 2019; Merkus et al., 2021). These studies

introduced DG models with different sets of DGCs in

specific business sectors, yet only two were

empirically validated in one large organisation.

Recent research on DGC and DG maturity models

focuses on different application areas and other types

of organisations, like government or specific business

sectors, and on ways to implement DG maturity

models within organisations (Abeykoon, 2023; Alsaa,

2023; Mouhib, 2023; Hugo, 2024).

One notable contribution on DGCs presents a

more comprehensive framework for implementing

DG, which consists of 18 DG requirements and a

TOGAF reference architecture describing the

architecture of an Industry 4.0 DG system. This DG

framework has been validated theoretically in a

“fictitious” power grid operating company but not yet

in practice (Zorrilla & Yebenes, 2022). In addition,

the authors recommend further review and empirical

testing, which is currently a gap (Bento, 2022). So,

although some DGC frameworks have been

presented, researchers disagree on the set of DGCs,

and the empirical validation of a comprehensive DGC

model including all known DGCs has not yet taken

place but is recommended.

Recently, we presented our research on DGCs,

revealing 34 DGCs from literature, each of which we

validated in practice by DG experts’ arguments (see

Table 1) (Merkus et al., 2023; Merkus et al., 2021).

We validated these individual DGCs in the practice

of 19 large organisations using DG expert interviews,

resulting in a comprehensive DGC model for generic

application (Merkus et al., 2023). We created this set

of 34 DGCs ourselves because we did not find a

reference set of DGCs for determining the status quo

of DG, let alone one that was empirically validated.

However, our comprehensive DGC model as a whole has

not yet been validated in practice, only its parts, which

leaves a gap in the literature. Consequently, our research aims to

rigorously validate the most comprehensive DGC

model so far as a reference model for its usefulness

and ease of use in determining the status quo of DG.

Table 1: Empirically validated DGCs.

| Generic Capability Groups | Data Governance Capabilities | # DG sub-capabilities | # Experts' arguments |
|---|---|---|---|
| Leadership | Establish Leadership | 1 | 3 |
| Culture | Establish & manage culture | 4 | 15 |
| | Establish & manage awareness | 4 | 15 |
| Communication | Establish & manage Train | 8 | 36 |
| | Establish & manage Communicate | 13 | 44 |
| Strategy | Specify data value | 19 | 53 |
| | Set goals & objectives | 6 | 20 |
| | Make business case | 1 | 3 |
| | Formulate data strategy | 4 | 13 |
| | Align with the business | 14 | 44 |
| Governance & Control | Establish roles & responsibilities | 13 | 53 |
| | Establish policies, principles, procedures | 11 | 51 |
| | Establish performance management | 3 | 15 |
| | Establish Monitoring | 1 | 4 |
| | Establish KPI's | 1 | 2 |
| | Establish decision-making authority | 10 | 35 |
| | Establish data stewardship | 5 | 28 |
| | Establish committees | 1 | 4 |
| | Establish Auditing | 7 | 23 |
| | Establish accountability | 1 | 3 |
| Organisation, Management & Processes | Manage risk | 8 | 36 |
| | Manage processes & lifecycle | 14 | 43 |
| | Manage organisation | 7 | 23 |
| | Manage metadata | 3 | 8 |
| | Manage issues | 1 | 4 |
| | Manage data | 20 | 74 |
| Information Technology | Setup security & privacy | 11 | 45 |
| | Setup IT | 8 | 24 |
| | Setup DG tools | 1 | 1 |
| Human Resrcs | Organize people | 9 | 23 |
| Value Chain | Contract data-sharing agreements | 7 | 34 |
| | Align & integrate data | 5 | 21 |
| Legislation | Comply with regulations | 9 | 36 |
| Environment | Establish environmental response | 1 | 4 |
| Total | | 231 | 840 |

3 RESEARCH METHOD

This study aims to validate the whole DGC model for

its usefulness and ease of use in determining the status

quo of DG in three large organisations that differ in

the type of organisation and business sector.

We select an evaluative strategy (Venable, 2014).

More specifically, we choose the human risk and

effectiveness strategy with formative evaluations

early in the process and a summative evaluation

focusing on evaluating the effectiveness of the

artefact in this research in multiple naturalistic case

studies (Venable, 2014). These case studies explore

the DGC model holistically as it applies to the entire

organisation. The reasons for selecting this evaluation

strategy are that (1) the design risk is user-oriented,

(2) it is cheap to evaluate with real users in their

organisation, (3) the utility of the DGC model should

be long-lasting in practical situations.

Our research method is based on how to refine and

evaluate artefacts in design science research with

groups of experienced participants (Tremblay,

Hevner & Blend, 2010). Therefore, our research is

divided into three distinct phases, elaborated in the

remainder of this section. Phase 1 involves designing

an approach to let participants use the DGC model in

practice to gain experience with it. When people gain

experience with the DGC model, they may be better

able to evaluate it. Phase 2 is about building that

experience with the DGC model in case

organisations to make its evaluation possible. Phase 3

evaluates the DGC model's usefulness and ease of use

to answer the research question. We end this section

by describing our measures for improving the

research validity and reliability.

3.1 Phase 1 Designing an Approach to

Use the DGC Model

Phase 1 aims to design an approach for determining

the DG status quo at an organisation using the DGC

model. We selected seven steps to improve the

research construct validity (see Table 2) (Tremblay,

Hevner & Blend, 2010).

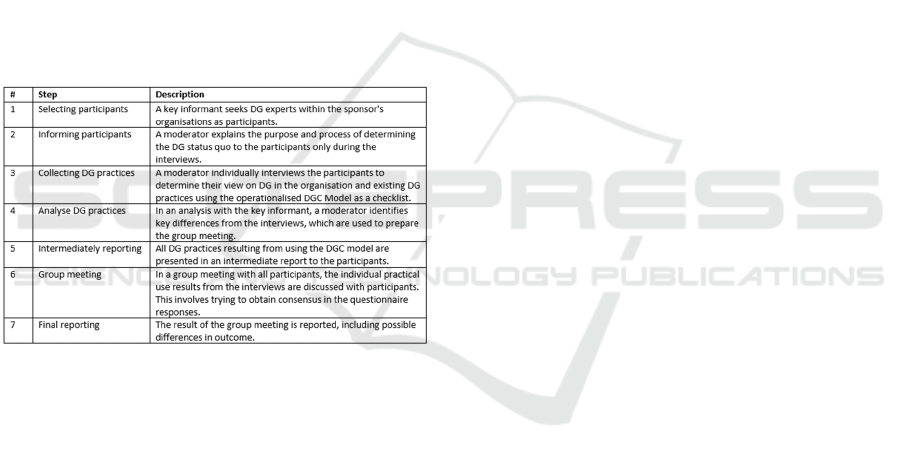

Table 2: Steps to use the DGC model in practice.

We made the following choices in our approach to

obtain proper use of the DGC model in an

organisation. Because the DGC model is unknown to the

organisations, we support them with a moderator

knowledgeable in DG. The moderator gives a proper

introduction at the beginning and guidance during the

interview.

We need a sponsor for each participating

organisation to approve and enable the research.

We require an organisation's key informant to

organise contacts between the moderator and

participants employed by the organisation. This

contact is only needed to arrange the interviews with

suitable candidates and provide the moderator

with context about the organisation despite the key

informant’s organisational bias.

We try to assemble participants with professional

experience in DG or data roles within that

organisation. They hold a position in DG or the data

field and have the ability and willingness to exchange

abstract ideas. Collectively, they have the information

to judge the status quo (Bagheri, 2019). We select a

group of DG experts within an organisation to correct

the filters and biases of individuals in their roles or

positions and to obtain a richer picture and variation

in interpretations (Heemstra et al., 1996). To qualify

as an expert and contribute to how to set up data

management, participants must have more than five

years of experience in data governance or data

management at the B- or C-level. Typical DG or data

positions include Chief Data or Information Officers,

other Data (Governance) Board members, Data

officers, Privacy or Security Officers, Data or

Enterprise architects or Information or BI managers

and experienced data specialists e.g. data stewards.

The DGC model is used as a checklist to arrive at

a consensus for each DGC (Heemstra et al., 1996).

The advantage of this choice is that all DGCs are

presented and discussed with all the participants.

Previous experience with checklists shows that their

advantages outweigh the disadvantages if the

checklist is manageable (Heemstra et al., 1996). We

operationalise the checklist in the form of a maturity

scale based on the results of our previous research

(Merkus, 2023). With the resulting questionnaire, a

user can determine the organisational maturity.

We choose individual online interviews collecting

DG practices so that the moderator can clear up

ambiguities while answering evaluation questions by

immediately providing the necessary explanations.

DG practices are real DG activities happening in the

organisation in practice, demonstrating the ability to

execute these DG activities.

All DG activities, as the results of the interviews,

are presented graphically and on a sheet to all

participants of their own organisation in a group

meeting. The purpose here is to learn from each

other’s contributions, to obtain feedback on the

individual interview results for further refinement,

and to reach a consensus on the status quo of DG. For

each DGC, the group tries to reach mutual

understanding amongst participants on the underlying

DGC activities happening in practice. Consequently,

the group concludes on the status quo for each DGC

or expresses the differences in insights. The results of

the group meetings are presented to the participants,

representing the organisation's DG status quo.
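As an illustration of how individual interview results might be compared before a group meeting, the following minimal sketch flags the DGCs on which participants' scores diverge most. The 1 to 5 maturity scale, the `max_spread` threshold, the function name `key_differences` and the example scores are all assumptions made for this sketch; they are not taken from the study.

```python
def key_differences(scores_by_dgc, max_spread=1):
    """Return the DGCs whose individual scores differ by more than max_spread.

    scores_by_dgc maps a DGC name to the list of maturity scores given by
    the individual participants (assumed here to be integers from 1 to 5).
    """
    flagged = {}
    for dgc, scores in scores_by_dgc.items():
        spread = max(scores) - min(scores)
        if spread > max_spread:
            flagged[dgc] = spread
    return flagged


# Hypothetical scores from three participants, for illustration only.
example_scores = {
    "Establish data stewardship": [2, 2, 3],
    "Manage metadata": [1, 4, 2],
    "Setup DG tools": [3, 3, 3],
}
differences = key_differences(example_scores)
```

DGCs flagged in this way could be put first on the agenda of the group meeting, where the group either reaches a consensus or records the difference in insights.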

With this approach, we aim to obtain access to

knowledgeable participants who have used the DGC

model and can therefore evaluate it properly in practice.

3.2 Phase 2 Building Experience in

Using the DGC Model

In phase 2, the approach developed in phase 1 is

executed within the context of a number of large

organisations. The purpose is to execute phase 1 for

building the necessary experience with the DGC

model among DG experts so that they can be asked to

evaluate it. In our research, one of the authors

executes the moderator role, having knowledge of

DG.

We select three large organisations for the

multiple case study to be able to compare the results

between them. We aim to achieve this by comparing

the results in cross-analysis to ascertain a broad-based

opinion (Yin, 2014). Furthermore, with literal

replication in three organisations, we aim to achieve

saturation in the answers to the interview questions.

Here, saturation refers to the degree of similarity

between the answers. When similar answers are

mentioned across two or all three organisations, a

shared perspective or consensus can be achieved, which

strengthens credibility.
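The notion of saturation used here can be illustrated with a small sketch that lists the answers mentioned in at least two organisations. The function name `shared_arguments`, the `min_orgs` parameter and the example pairs are hypothetical and only show the idea; the sketch is not the procedure followed in the study.

```python
from collections import defaultdict


def shared_arguments(mentions, min_orgs=2):
    """Return the answers mentioned in at least min_orgs distinct organisations.

    mentions is an iterable of (organisation, answer) pairs. The result maps
    each shared answer to the sorted list of organisations that mentioned it.
    """
    orgs_per_answer = defaultdict(set)
    for organisation, answer in mentions:
        orgs_per_answer[answer].add(organisation)
    return {
        answer: sorted(orgs)
        for answer, orgs in orgs_per_answer.items()
        if len(orgs) >= min_orgs
    }


# Hypothetical (organisation, answer) pairs, for illustration only.
example_mentions = [
    ("Organisation 1", "Answer A"),
    ("Organisation 2", "Answer A"),
    ("Organisation 3", "Answer A"),
    ("Organisation 2", "Answer B"),
    ("Organisation 3", "Answer B"),
    ("Organisation 1", "Answer C"),
]
shared = shared_arguments(example_mentions)
```

In this example, Answer A and Answer B count as shared, while Answer C, mentioned in one organisation only, does not.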

We select organisations that are suitable for our

study and meet the following criteria: (1) Sufficient

size (>1,000 employees) and consequential

complexity reflecting the need for governance

awareness in such large organisations (Merkus, 2023).

(2) DG is being implemented in the organisation. (3)

The organisation employs DG experts. (4) Operating

in different business sectors from different types of

organisations so that we find general and broader

arguments that are not business sector specific. By

formulating these requirements, we aim to facilitate

the acquisition of experience in using the DGC

model.

We apply purposive sampling because "studying

information-rich cases yields insights and in-depth

understanding", according to Patton, which occurs

before the data is gathered (Suri, 2011; Yazan, 2015).

Additionally, to ensure the validity of the

participants’ experiences in using the DGC model,

the moderator observes whether the participants make

a meaningful contribution to this study through

thoughtful participation. This is achieved by

recording observations in a log by only recording

factual observations and not the researcher's own

interpretations. Afterwards, the observations are

analysed, and conclusions about the participants’

contributions are drawn. These conclusions may

confirm whether a participant's experience with the

DGC model is meaningful for our research, i.e. whether

the participant is able to reflect meaningfully on

using the DGC model. If a participant does not make a

meaningful contribution, they are excluded from

phase 3 and, therefore, from further analysis.

3.3 Phase 3 Evaluating the DGC Model

In phase 3, the purpose is to evaluate the DGC model

in practice. Having gained experience with the DGC

model within the context of their organisation in

phase 2, the participants are now interviewed using

a questionnaire about the perceived usefulness and

perceived ease of use of the DGC model. More

specifically, we collected the respondent's opinions

based on their experience with the model and their

underlying arguments for their opinion. Here,

perceived usefulness is ‘the degree to which an

individual believes that using a particular system

would enhance his or her job performance’, and

perceived ease of use is ‘the degree to which an

individual believes that using a particular system

would be free of physical and mental effort’ (Davis,

1991).

The questionnaire for evaluating our DGC model

consists of the Technology Acceptance Model (TAM)

questionnaire, initially developed to ascertain a new

technology's perceived usefulness (PU) and

perceived ease of use (PEOU) and later improved

(Davis, 1991; Turner, 2008). The improved TAM

questionnaire was selected because it can be used for

qualitative research on the acceptance and use of new

technologies in semi-structured interviews (e.g.

Singh, 2019). Like Singh, we use it to interview a

small number of respondents to understand the

reasoning behind its usefulness and ease of use

(Davis, 1991; Singh, 2019).

So, we adapted the four questions from Turner's

improved TAM questionnaire to measure

the usefulness and ease of use of the DGC model

(Turner, 2008). We followed Turner’s advice by

replacing “the technology” with “the DGC model to

determine the status quo of DG” and formulating the

statements into questions (Turner, 2008). This

resulted in four questions for usefulness and four for

ease of use. Furthermore, we chose the original five-

point Likert scale because we agree that a five-point

scale is sufficient for our evaluation (Turner, 2008).

The original Likert scale values are Strongly Approve,

Approve, Undecided, Disapprove and Strongly

Disapprove (Likert, 1932). In addition to the standard

series of four TAM questions, we added question

number five to both series to ask respondents about

their underlying arguments (Singh, 2019). Finally, we

added an extra question on whether respondents

would use the DGC model again and why, to

understand their reasoning for reusing it. With the

resulting questionnaire, we can evaluate the

usefulness and ease of use of the DGC model in

semi-structured interviews with the respondents

within the practice of their case organisations (see

Table 3).

Table 3: Operationalised TAM questionnaire for the semi-

structured evaluation interviews.
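To make the scale concrete, the sketch below codes the five Likert values as numbers and summarises hypothetical answers per construct with the median. The numeric coding (5 for Strongly Approve down to 1 for Strongly Disapprove), the use of the median, the function name `summarise` and the example answers are assumptions for illustration and are not part of the questionnaire in Table 3.

```python
from statistics import median

# Assumed numeric coding of the original Likert scale values.
LIKERT = {
    "Strongly Approve": 5,
    "Approve": 4,
    "Undecided": 3,
    "Disapprove": 2,
    "Strongly Disapprove": 1,
}


def summarise(responses):
    """Return the median Likert score per construct (e.g. PU or PEOU).

    responses is an iterable of (construct, likert_label) pairs.
    """
    scores = {}
    for construct, label in responses:
        scores.setdefault(construct, []).append(LIKERT[label])
    return {construct: median(values) for construct, values in scores.items()}


# Hypothetical answers, for illustration only.
example_responses = [
    ("PU", "Approve"),
    ("PU", "Strongly Approve"),
    ("PU", "Approve"),
    ("PEOU", "Approve"),
    ("PEOU", "Undecided"),
    ("PEOU", "Disapprove"),
]
summary = summarise(example_responses)
```

The median is used here because Likert values are ordinal; such a summary would only complement, not replace, the respondents' underlying arguments collected in the interviews.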

We take measures to preserve the resulting research

data for further analysis and verification. We record

the online evaluation interviews, transcribe the

recordings, ask participants for their agreement on the

content of the transcripts, and delete the recordings to

assure the anonymity of the case organisation and the

participants.

Our data analysis aims to answer the research

question by evaluating the usefulness and ease of use

of the DGC model in practice so that we understand

the underlying reasoning why the DGC model is

useful and easy to use. The research data we need to

analyse are the respondents' potential arguments from

the semi-structured interviews resulting from phase

three.

The method to reveal the arguments for evaluation and, consequently, the result of our evaluation is as follows. First, we apply in-vivo coding to the interview transcripts to identify the respondents’ potential arguments and register them on a list (Saldana, 2013, p. 91). Second, we apply axial coding to classify the potential arguments to find the arguments for evaluation (Saunders, 2012, p. 185). This is carried out using Metaplan for the card sorting technique (Howard, 1994; Harboe et al., 2015; Merkus et al., 2020). To achieve a more stable and higher conceptual quality of card sorting, we execute the card sorting in a group of three peers, all DG researchers (Paul, 2008). Third, the researcher reports the identified arguments to conclude the evaluation of phase 3. This additional categorisation of arguments further improves the validity of the research results.

3.4 Research Quality

Below, we assess the quality of our research method

to ensure its validity and reliability by highlighting

the measures we applied (Saunders, 2012).

To improve the validity of the survey data, the

construct validity, we allowed participants to gain

experience with the DGC model with the guidance of

a moderator. For this we operationalised the 34 DGCs

in a questionnaire as a checklist. We also repeated the

survey in three appropriate organisations, conducted

careful interviews with predefined questionnaires and

collected survey data from all three organisations to

compare results. In addition, during the evaluation

phase 3, we used the well-known, improved TAM

questionnaire and the original Likert scale for

collecting research data.

To improve the rigour of our reasoning in

validating the DGC model, the internal validity, we

applied categorisation by applying the referenced

Metaplan technique of card sorting to find arguments

on which to base our conclusion (Howard, 1994). In

addition, we performed the card sorting with the

authors, all three being DGC researchers, in one

physical room simultaneously to eliminate the

researcher’s bias and apply peer scrutiny.

Although the generalisability or external validity

of qualitative research is limited, our research

outcomes are determined by arguments given by

precisely selected respondents in precisely selected

organisations and, therefore, most likely apply

to similar case organisations. We replicate our

research in multiple organisations to obtain

arguments from similar contexts to achieve

congruence in our findings with reality. According to

the concept of purposive sampling, we will achieve

better insights and obtain more precise results with

just a few organisations, and consequently, in less

time. This is because we know the DGCs from our

previous research to select a suitable case

organisation. In addition, the requirements for selecting a

case organisation, as detailed in our approach, are

strict enough to replicate our research (Meriam,

1993; Yin, 2004; Yazan, 2015).

Our qualitative research has a sufficient degree of

reliability because we detail our approach so that

others can easily replicate and check our research.

To ethically protect participants and respondents,

we assure them that their answers are anonymised and

that the survey data are not traced back to them or

their organisation. We do this by asking their explicit

permission for the anonymous processing of their

answers and allowing them to check the answers

afterwards. Furthermore, before each interview, we

ask each participant and respondent for their informed

consent. Given our limited time, resources, and

suitable case organisations willing to participate, our

design is as valid, reliable, and ethical as possible.

4 RESULTS

This section firstly reflects on how we conducted our

research in three large case organisations. Secondly,

we present the results obtained from experiences with

the practical use of the DGC model in Phase 2.

Thirdly, we present the evaluation outcome of Phase

3. Finally, we analyse the arguments obtained in the

evaluation in a cross-case analysis.

4.1 Three Case Studies of Large

Organisations

We conducted this research using the method

described in the previous section. In Phase 1, we

designed the approach we executed in Phase 2 as

follows. For step 1, we selected three case organisations

for our research with more than 5,000

employees from different business sectors in the

Netherlands to obtain a significant contrast between

them (see Table 4). The three key informants

recruited 16 participants from all three organisations.

Each participant is an employed DG expert with a

specific DG role. Participants have roles like chief

data officer, data architect, enterprise architect, data

manager, BI manager, and privacy officer. We also

included a data steward and a data governance

specialist with over seven years of experience with

DG because of their lived-through experience with

DG. The three case organisations have a corporate

data strategy in place, demonstrating the presence of

sufficient DG complexity in these organisations to be

suitable for this research. The interviews were held

online to facilitate on-screen use of the questionnaires

and reduce travel time and expenses. One of the

authors played the role of the moderator.

Table 4: Case organisations.

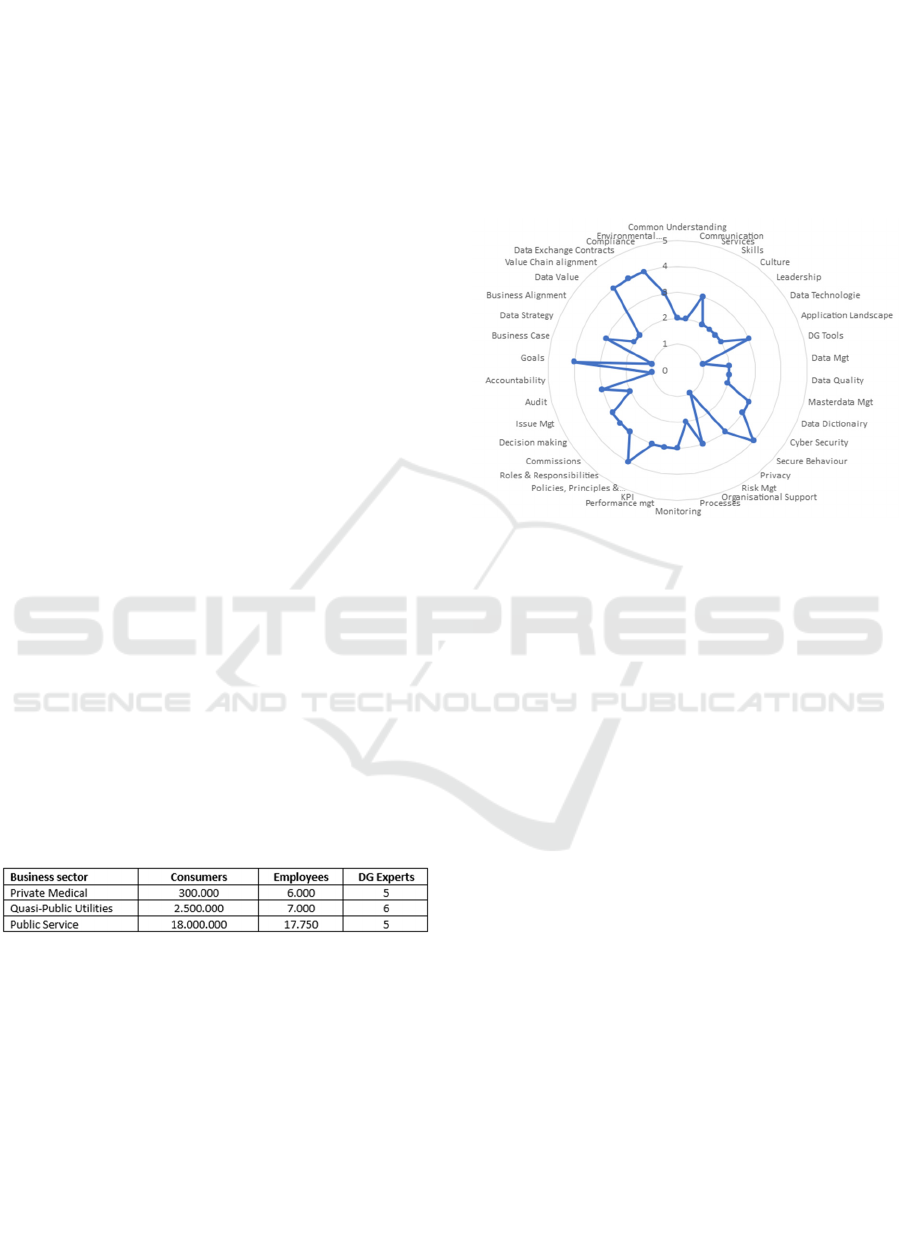

4.2 Practical Use Results

For step 2, the moderator explained the questionnaire

process at the start of the interview and guided the

participants while explaining the questions. Each

participant appeared to recognise all DGCs, and no

new DGCs were suggested. For step 3, the moderator

noted the DG practices mentioned by the participants

during the interviews, keeping meticulous

records of all research results, which are available from the

author. For step 4, the moderator presented the key

differences between the participants and discussed

these with the key informant to prepare the group

meetings. For step 5, all organisational DG practices

were reported in a large table together with the

maturity scores displayed in a radar chart (see Figure

1). For step 6, we held three group meetings, one for

each organisation with its employees. The moderator

had the groups reach a consensus on the status quo of

DG for all DGCs. For step 7, the group meeting

results were reported to the participants and the

sponsor.

Figure 1: Group meeting outcome from the measurement

with the operationalised DGC checklist for organisation 3

plotted in a radar chart.

Additionally, following the extra step in the research method, the researcher observed whether the participants contributed thoughtfully during the interviews and group meetings. The conclusion was that all participants made a meaningful contribution to the research, but three were absent during the group meetings due to other priorities or a change of employer. Consequently, these three participants were excluded from further participation in this research.

4.3 Evaluation Results

In phase 3, we interviewed the remaining 13 DG

experts as respondents to our research with the

applied TAM list as a questionnaire in online

sessions. The answers to the questionnaire were

recorded using automatic transcription. Afterwards,

we collected potential arguments from the transcripts

using in-vivo coding and kept a record of them

(Saunders, 2012). The result of the in-vivo coding is

167 different potential arguments, which we recorded

on a list for further cross-case analysis.

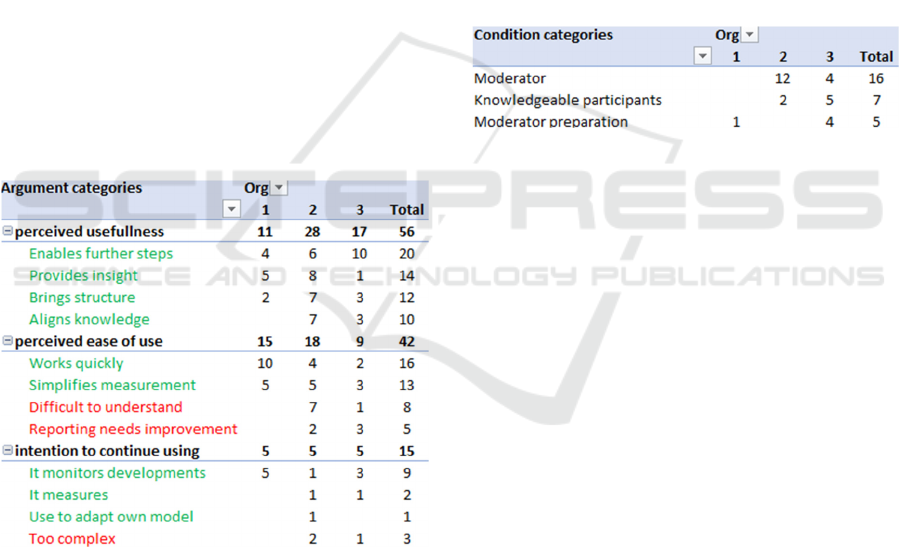

4.4 Evaluation Analysis

The aim of the evaluation analysis is to understand

the potential arguments resulting from phase 3 for the

usefulness and ease of use of the DGC model so that

we understand the underlying reasoning why the

DGC model is useful and easy to use. To arrive at

such an understanding, the 167 potential arguments

were printed on cards and physically categorised in

axial coding by card sorting using the Metaplan

technique with three peers in a physical setting of two

and a half hours.

The card sorting of the 167 potential arguments resulted, as intended, in the following categorisation. Of the 167 potential arguments, 26 are categorised as out of scope and thus seen as irrelevant. Of the remaining 141 potential arguments, 113 are categorised as arguments for PU, PEOU and Intention to continue using (ITCU), while 28 are categorised as conditions.

These 113 potential arguments are categorised as arguments (see Table 5). Although the TAM questionnaire was structured by the classes PU, PEOU and ITCU, respondents sometimes answered a question with potential arguments for another class. Nevertheless, during card sorting, potential arguments and discovered arguments were classified into the intended classes. For example, the argument Reporting needs improvement moved from the PU class to the PEOU class.

Table 5: Arguments.

We found the following arguments.

PU is positively supported by the arguments Enables further steps, Provides insight, Brings structure and Aligns knowledge. This indicates that the DGC model is perceived as useful, with 56 supporting potential arguments and no negative arguments or potential arguments.

PEOU is positively supported by the arguments Works quickly and Simplifies measurement, with 29 potential arguments. For some respondents, however, the DGC model is Difficult to understand and Reporting needs improvement, which is supported by 13 potential arguments. This indicates that most respondents perceived the DGC model as easy to use, but some find it complex.

The ITCU of the DGC model is positively supported by arguments like It monitors developments, It measures and Use to adapt own model, with 12 potential arguments, but it is countered by the argument Too complex, with 3 potential arguments. In addition, all thirteen respondents answered that they would reuse the DGC model, three of them only for some missing DGCs that are not yet part of their own model. This means that we found one argument that does not support the intention to use the DGC model; it is supported in Organisation 2 and Organisation 3 but not in Organisation 1.

Although we did not specifically ask for them, we

categorised no fewer than 28 potential arguments as

conditions (see Table 6).

Table 6: Conditions.

We found the following conditions: Moderator,

Moderator preparation and Knowledgeable

participants.

Although the nine positive arguments are

supported by 97 potential arguments, three negative

arguments supported by 16 potential arguments were

found together with three conditions supported by 28

potential arguments. First, this indicates the necessity

of a moderator and knowledgeable participants.

Second, this confirms our choice to apply a

knowledgeable moderator and search for DG Experts

as participants when designing our research method.

Third, some respondents mentioned potential

arguments supporting the negative arguments and

conditions, each in up to two organisations. So, using

the DGC model can be complicated if DG knowledge

is lacking, and its use requires proper support.
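The counts reported in this section can be cross-checked with a few lines of arithmetic. The sketch below uses only the numbers stated above; the variable names are chosen for illustration.

```python
# Potential arguments per class, as reported in the text above.
positive = {"PU": 56, "PEOU": 29, "ITCU": 12}
negative = {"PU": 0, "PEOU": 13, "ITCU": 3}
conditions = 28
out_of_scope = 26

total_positive = sum(positive.values())        # 56 + 29 + 12 = 97
total_negative = sum(negative.values())        # 0 + 13 + 3 = 16
arguments = total_positive + total_negative    # 97 + 16 = 113
in_scope = arguments + conditions              # 113 + 28 = 141
total = in_scope + out_of_scope                # 141 + 26 = 167
```

The totals agree with the 167 potential arguments obtained from the in-vivo coding and with the split into 113 arguments, 28 conditions and 26 out-of-scope items.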

5 CONCLUSIONS

This section concludes our research and its relevance,

discusses the results and analysis, and elaborates on

limitations and further research of our study.

5.1 Conclusions

Regarding the research question, we conclude the

following concerning the DGC model:

The whole set of 34 DGCs has been validated in

practice as a comprehensive DGC model to measure

the status quo of DG in large organisations. From our

evaluation, we conclude that the DGC model is

perceived as useful and easy to use. However, we also

see some complexity issues in two of the three

organisations. PU is evaluated positively without

negative arguments. PEOU is also evaluated

positively with two positive arguments from all three

organisations, but also with two negative arguments

from two organisations. Similarly, ITCU is supported

by three positive arguments in three organisations,

but a negative argument was also discovered in two

organisations. Moreover, three conditions were found:

a moderator is needed to prepare and guide the

process so that knowledgeable participants can make

meaningful contributions. All negative arguments

and conditions are related to the complexity of the

model, which should be looked at in further research.

Looking at the results of all participating

organisations, we can conclude that each argument

occurs in at least two organisations. Given the large

overlap in arguments between organisations, we

suspect that adding a fourth organisation would not

change the outcomes much. The results have already

been confirmed in more than one organisation.
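The replication check behind this conclusion can be sketched as a simple set operation. The example below covers only the ITCU arguments: that Too complex was found in Organisations 2 and 3 is stated in the text, whereas the organisation sets for the three positive arguments are an assumption made for illustration. The names `SUPPORT`, `replicated` and `all_replicated` are hypothetical.

```python
# Organisations in which each ITCU argument was supported.
# "Too complex" in Organisations 2 and 3 is stated in the text; the sets for
# the three positive arguments are an assumption made for illustration.
SUPPORT = {
    "It monitors developments": {1, 2, 3},
    "It measures": {1, 2, 3},
    "Use to adapt own model": {1, 2, 3},
    "Too complex": {2, 3},
}


def replicated(support, minimum=2):
    """Map each argument to True if it occurs in at least `minimum` organisations."""
    return {arg: len(orgs) >= minimum for arg, orgs in support.items()}


def all_replicated(support, minimum=2):
    """Return True if every argument occurs in at least `minimum` organisations."""
    return all(replicated(support, minimum).values())


print(all_replicated(SUPPORT))     # threshold of two organisations
print(all_replicated(SUPPORT, 3))  # stricter threshold of three organisations
```

Raising the threshold to three organisations makes the check fail for Too complex, which is consistent with that argument not being found in Organisation 1.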

Another result of this research is the approach in

Phase 1 to use the DGC model in practice to measure

DG in case organisations.

During our research, none of the sixteen DG

experts added new DGCs. So, no one was aware of

DGCs other than those that comprise the DGC model,

which supports the comprehensiveness of our DGC

model.

5.2 Relevance

The outcomes of this research are relevant in two

ways. The theoretical relevance is that the

comprehensive DGC model is validated in practice in

multiple organisations, and our qualitative evaluation

method proved useful. The practical relevance is that

organisations obtain a comprehensive measure and

approach to assess their DG status quo and to

benchmark across organisations. This can be useful in

improving the value of data as one of an

organisation's most valuable assets.

5.3 Discussion

The evaluation outcomes of the DGC model raise the

following discussion points.

To date, no such comprehensive and validated

capability model for measuring the DG status quo

exists in the literature.

Looking at the discovered conditions, we see that

a knowledgeable moderator and knowledgeable

participants are required to use the DGC model. In

addition, an argument about complexity that weakens

the intention to continue using the DGC model was

discovered. We conclude from this that knowledge

transfer is a concern that needs attention when using

the DGC model. This points to further research on

improving our approach with more knowledge

transfer.

The average Likert score for PU is 4.1 on a scale

of 5, and for PEOU, it is 3.7. Although this is a

qualitative study, this indicates that respondents

perceive PU as positive. PEOU is also perceived as

positive, albeit slightly less positive than PU. So, both

PU and PEOU are rated positively on average,

indicating that the DGC model is also perceived

positively on average.
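How such an average is obtained and read can be sketched as follows. The individual responses are not reproduced here, so the sample scores in the example are hypothetical; treating an average above the scale midpoint of 3 as positive is likewise an assumption of this sketch, not a rule stated in the study.

```python
def average_likert(scores, scale_max=5):
    """Average a list of Likert scores (1..scale_max), rounded to one decimal."""
    if not scores:
        raise ValueError("at least one score is required")
    if any(not 1 <= s <= scale_max for s in scores):
        raise ValueError(f"scores must lie between 1 and {scale_max}")
    return round(sum(scores) / len(scores), 1)


def is_positive(average, scale_max=5):
    """Assumption: an average above the scale midpoint counts as positive."""
    midpoint = (1 + scale_max) / 2
    return average > midpoint


# Hypothetical responses, for illustration only.
sample_scores = [4, 5, 4, 3]
print(average_likert(sample_scores))

# Averages reported in the text.
print(is_positive(4.1), is_positive(3.7))
```

Both reported averages lie above the midpoint, with PEOU closer to it than PU.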

5.4 Limitations

Our study has several limitations, some of which

may need further research. Concerning data

collection: (1) one of the authors was the moderator,

so researcher bias must be considered; (2)

respondents were recruited by the key informant,

who could influence their selection; (3) only Dutch

organisations were selected for the case studies;

and (4) the organisations operated in only three

different business sectors. Concerning data analysis,

only three DG researchers conducted this research.

Despite these limitations, our approach proved

valuable in evaluating the DGC model. When

replicated rigorously in other organisations using the

meticulously described method, the DG status quo can

be measured in a useful and easy-to-use manner over

time and benchmarked with other organisations.

5.5 Further Research

Further research is recommended for some of the

outcomes of this study.

To improve the use of the DGC model, further

research may address the concern of transferring

knowledge of DG or the DGC model as part of the

approach to improve the PEOU and ITCU.

Given this research’s limitations, and to validate

the results with other data sets, we recommend

replication of our research (1) by other researchers,

(2) in the same organisation with other respondents,

(3) in other countries or cultures, and (4) in other

business sectors. For validating the data analysis, we

recommend replication by different DG researchers.

In addition, certain DGCs seem to be required for

others to develop. A logical order could indicate a

guide on implementing DG. This idea is supported in

other maturity model research (Van Steenbergen et

al., 2007). A critical path for developing DG

capabilities could then be determined as a guide for

organisations to improve DG.
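One way to derive such an order is a topological sort over prerequisite relations, with the critical path taken as the longest prerequisite chain. The sketch below is a minimal illustration of that idea and not a result of this study: the capability names and their dependencies are hypothetical and are not taken from the 34 DGCs. It uses `graphlib.TopologicalSorter` from the Python standard library (Python 3.9 or later).

```python
from graphlib import TopologicalSorter

# Hypothetical prerequisite map: capability -> capabilities it depends on.
# Names and dependencies are illustrative, not taken from the DGC model.
PREREQUISITES = {
    "DG policy": set(),
    "Data ownership": {"DG policy"},
    "Data quality monitoring": {"Data ownership"},
    "Metadata management": {"DG policy"},
    "DG reporting": {"Data quality monitoring", "Metadata management"},
}


def development_order(prereq):
    """Return one order in which every capability follows its prerequisites."""
    return list(TopologicalSorter(prereq).static_order())


def critical_path(prereq):
    """Return the longest prerequisite chain, counting each capability as one step."""
    best = {}  # capability -> longest chain ending at that capability
    for cap in TopologicalSorter(prereq).static_order():
        longest_pred = max(
            (best[p] for p in prereq.get(cap, ())), key=len, default=[]
        )
        best[cap] = longest_pred + [cap]
    return max(best.values(), key=len)


print(development_order(PREREQUISITES))
print(critical_path(PREREQUISITES))
```

In a real application, the prerequisite map would have to be established empirically, and capabilities could be weighted by the effort needed to develop them rather than counted as single steps.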

During the practical use of the DGC model,

many experts suggested a higher-level classification

for more convenient use of the model and

understandable reporting. Some suggested the

categories of the applied generic capability reference

model from the underlying literature, designed for

that purpose and to enable measuring and

benchmarking governance (Merkus et al., 2021).

Finally, our research method could be applied to

evaluate other artefacts in DSR, e.g. new x

governance capability models using the same

reference framework as the DGC model, where x is

any organisational area to govern.

FUNDING

This research received no specific grant from funding

agencies in the public, commercial, or not-for-profit

sectors.

ACKNOWLEDGEMENT

Special thanks go to the anonymous respondents,

without whom this study could not have succeeded.

REFERENCES

Abeykoon, B. B. D. S., & Sirisena, A. B. (2023). A

Bibliometric Analysis of Data Governance Research:

Trends, Collaborations, and Future Directions. In:

South Asian Journal of Business Insights, 3(1), 70–92.

Addagada, T. (2023). Corporate Data Governance, an

evolutionary framework, and its influence on financial

performance. In: Global Journal of Business and

Integral Security.

Alsaad, A. (2023). Governmental Data Governance

Frameworks: A Systematic Literature Review. In: 2023

International Conference on Computing, Electronics &

Communications Engineering (ICCECE), 150–156.

Abraham, R., Schneider, J., & vom Brocke, J. (2023). A

taxonomy of data governance decision domains in data

marketplaces. In: Electronic Markets, 33(1).

Bagheri, S. (2019). Design of a reference model-based

approach to support business-IT alignment

improvement in co-creation value networks. Doctoral

dissertation. Technische Universiteit Eindhoven.

Becker, J., Knackstedt, R., & Pöppelbuß, J. (2009).

Developing Maturity Models for IT Management – A

Procedure Model and its Application. In: Business &

Information Systems Engineering, 1(3), 213–222.

Bento, P., Neto, M., & Corte-Real, N. (2022). How data

governance frameworks can leverage data-driven

decision making: A sustainable approach for data

governance in organisations. In: Iberian Conference on

Information Systems and Technologies, CISTI, 2022-

January.

Brennan, R., Attard, J., & Helfert, M. (2018). Management

of data value chains, a value monitoring capability

maturity model. In: ICEIS 2018 - Proceedings of the

20th International Conference on Enterprise

Information Systems, 2(January), 573–584.

Dasgupta, A., Gill, A., & Hussain, F. (2019). A conceptual

framework for data governance in IoT-enabled digital

IS ecosystems. In: Proceedings of the 8th International

Conference on Data Science, Technology and

Applications (DATA, 2019), Data, 209–216.

Davis, F. D. (1991). User acceptance of information

systems: the technology acceptance model (TAM). In:

International Journal of Man-Machine Studies, 38,

475–487.

Dijkstra, J. (2022). Toward an IoT Analytics capability

framework for business value creation. Master’s thesis,

Open Universiteit, The Netherlands.

Heemstra, F. J., & Kusters, R. J. (1996). Dealing with risk:

A practical approach. In: Journal of Information

Technology, 11(4), 333–346.

Heredia-Vizcaíno, D., & Nieto, W. (2019). A Governing

Framework for Data-Driven Small Organizations in

Colombia. In: New Knowledge in Information Systems

and Technologies, Springer, WorldCIST’19 2019.

Advances in Intelligent Systems and Computing. Vol.

930, Issue 1, pp. 622–629.

Harboe, G., & Huang, E. M. (2015). Real-world affinity

diagramming practices: Bridging the paper-digital gap.

In: Proceedings of the 33rd Annual ACM Conference

on Human Factors in Computing Systems (pp.

95–104).

Howard, M. S. (1994). Quality of Group Decision Support

Systems: A comparison between GDSS and traditional

group approaches for decision tasks. Doctoral

dissertation. Technische Universiteit Eindhoven.

Hugo Ribeiro Machado, V., Barata, J., & Rupino Da Cunha,

P. (2024). A Maturity Model for Data Governance in

Decentralized Business Operations: Architecture and

Assessment Archetypes. In: International Conference

on Information Systems Development 2024.

Likert, R. (1932). A Technique for the Measurement of

Attitudes. In: Archives of Psychology, 22, 5–55.

Merkus, J. R., Helms, R. W., & Kusters, R. J. (2021). Data

Governance Capabilities; Maturity Model design with

Generic Capabilities Reference Model. In: Proceedings

of the 13th International Joint Conference on

Knowledge Discovery, Knowledge Engineering and

Knowledge Management (IC3K), 102–109.

Merkus, J. R., Helms, R. W., & Kusters, R. J. (2023). Data

Governance Capabilities; Empirical Validation In Case

Studies Of Large Real-Life Organisations. In:

Proceedings of the 36th Bled eConference, 35–48.

Merriam, S. B. (1998). Qualitative Research and Case

Study Applications in Education. Revised and

Expanded from “Case Study Research in Education”.

In: Qualitative Research and Case Study Applications in

Education (pp. 26–43). San Francisco.

Mouhib, S., Anoun, H., Ridouani, M., & Hassouni, L.

(2023). Global Big Data Maturity Model and its

Corresponding Assessment Framework Results. In:

International Journal of Applied Mathematics, 53(1).

Olaitan, O., Herselman, M., & Wayi, N. (2019). A Data

Governance Maturity Evaluation Model for

government departments of the Eastern Cape province,

South Africa. In: South African Journal of Information

Management, 21(1), 1–12.

Otto, B., ten Hompel, M., & Wrobel, S. (2022). Designing

Data Spaces; the ecosystem approach to competitive

advantage. Springer.

Paul, C. L. (2008). A modified Delphi approach to a new

card sorting methodology. Journal of Usability Studies,

4(1), 24.

Permana, R. I., & Suroso, J. S. (2018). Data Governance

Maturity Assessment at PT. XYZ. Case Study: Data

Management Division. In Proceedings of 2018

International Conference on Information Management

and Technology (ICIMTech 2018), 15–20.

Pöppelbuß, J., & Röglinger, M. (2011). What makes a

useful maturity model? A framework of general design

principles for maturity models and its demonstration in

business process management. ECIS, Paper 28.

Rifaie, M., Alhajj, R., & Ridley, M. (2009). Data

governance strategy: A key issue in building enterprise

data warehouse. IiWAS2009, 587–591.

Rivera, S., Loarte, N., Raymundo, C., & Dominguez, F.

(2017). Data Governance Maturity Model for Micro

Financial Organizations in Peru. In Proceedings of the

19th International Conference on Enterprise

Information Systems (ICEIS 2017), 203–214.

Rosemann, M., & De Bruin, T. (2005). Towards a Business

Process Management Maturity Model. ECIS 2005

Proceedings of the Thirteenth European Conference on

Information Systems, May, 26–28.

Saldaña, J. (2013). The Coding Manual for Qualitative

Researchers (Second Edition, Vol. 1).

Saunders, M. N. K., Lewis, P., & Thornhill, A. (2012).

Research Methods for Business Students (6th ed.).

Pearson Education Limited.

Schmuck, M., & Georgescu, M. (2024). Enabling Data

Value Creation with Data Governance: A Success

Measurement Model. CS & IT Conference

Proceedings, Vol. 14, Nr 8.

Shenton, A. K. (2004). Strategies for ensuring

trustworthiness in qualitative research projects.

Education for Information, 22(2), 63–75.

Singh, S., & Srivastava, P. (2019). Social media for

outbound leisure travel: a framework based on

technology acceptance model (TAM). Journal of

Tourism Futures, 5(1), 43–61.

Steenbergen, M. van, Berg, M. van den, & Brinkkemper, S.

(2007). A balanced approach to developing the

enterprise architecture practice. ICEIS 2007, Lecture

Notes in Business Information Processing, 12 LNBIP,

240–253.

Stoiber, C., Stöter, M., Englbrecht, L., Schönig, S., &

Häckel, B. (2023). Keeping Your Maturity Assessment

Alive: A Method for the Continuous Tracking and

Assessment of Organizational Capabilities and

Maturity. Business and Information Systems

Engineering.

Suri, H. (2011). Purposeful sampling in qualitative research

synthesis. Qualitative Research Journal, 11(2), 63–75.

Tremblay, M. C., Hevner, A. R., & Berndt, D. J.

(2010). Focus Groups for Artifact

Refinement and Evaluation in Design Research.

Communications of the Association for Information

Systems, 26(27), 327–337.

Turner, M., Kitchenham, B., Budgen, D., & Brereton, P.

(2008). Lessons Learnt Undertaking a Large-scale

Systematic Literature Review.

Venable, J., Pries-Heje, J., & Baskerville, R. (2014). FEDS:

A Framework for Evaluation in Design Science

Research. European Journal of Information Systems,

25(1), 77–89.

IJmker, S. (2022). Verbetermaatregelen opstellen en

prioriteren om IT-project portfolio’s op koers te houden

— Open Universiteit research portal.

Yazan, B. (2015). Three Approaches to Case Study

Methods in Education: Yin, Merriam, and Stake. In The

Qualitative Report (Vol. 20, Issue 2, pp. 134–152).

Yin, R. K. (2014). Case study research: design and methods

(5th ed.). SAGE Publications, Inc.

Zorrilla, M., & Yebenes, J. (2021). A reference framework

for the implementation of Data Governance Systems for

Industry 4.0. Computer Standards & Interfaces, 81,

103595.
