Use and Perception of Generative AI in Higher Education: Insights from the ERASMUS+ Project 'Teaching and Learning with Artificial Intelligence' (TaLAI)

Susmita Rudra¹ᵃ, Peter Weber¹ᵇ, Tanja Tillmanns²ᶜ, Alfredo Salomão Filho²ᵈ, Emma Wiersma³, Julia Dawitz³ᵉ, Dovile Dudenaite⁴ᶠ and Sally Reynolds⁴

¹ Competence Center E-Commerce (CCEC), South Westphalia University of Applied Sciences, Soest, Germany

² The Innovation in Learning Institute (ILI), Friedrich-Alexander-University, Erlangen-Nürnberg, Germany

³ Teaching & Learning Centre Science, University of Amsterdam, Amsterdam, Netherlands

⁴ Media & Learning Association, Roosbeek, Belgium

Keywords: Generative AI, Higher Education, Perception, Survey Results.

Abstract: The integration of Generative Artificial Intelligence (GenAI) in higher education offers transformative opportunities alongside significant challenges for both educators and students. This study, part of the ERASMUS+ project Teaching and Learning with Artificial Intelligence (TaLAI), explores the familiarity, usage patterns, and perceptions of GenAI in academic settings. A survey of 152 students (mainly from Germany, Belgium, and the Netherlands) and 118 educators (81 professors, 37 trainers) reveals widespread GenAI use, with ChatGPT being the most common tool. Findings indicate both enthusiasm for GenAI’s potential benefits and concerns regarding ethical implications, academic integrity, and its impact on learning processes. While students and educators recognize GenAI’s ability to enhance learning and productivity, uncertainties persist regarding assessment practices and its potential short- and long-term effects on aspects such as decision making, creativity, and memory performance. The study also highlights gaps in institutional support and policy, emphasizing the need for clearer communication to ensure responsible AI adoption. This paper contributes to the ongoing discussions on GenAI in higher education and is aimed at educators, policymakers, and researchers concerned with its responsible use. By addressing the perspectives and concerns of both students and educators, institutions and policymakers can develop well-informed strategies and guidelines that promote responsible and effective use of GenAI, ultimately enhancing the overall teaching and learning experience in academic environments.

1 INTRODUCTION

The rapid advancement of Generative Artificial

Intelligence (GenAI) has the potential to

revolutionize higher education by offering tools that

enhance teaching and learning experiences. Unlike

traditional AI models that rely on predefined rules

and numerical predictions, GenAI refers to models

that generate novel, previously unseen content based

ᵃ https://orcid.org/0009-0004-2512-9836
ᵇ https://orcid.org/0000-0003-4192-5555
ᶜ https://orcid.org/0000-0001-5662-5761
ᵈ https://orcid.org/0000-0003-3006-5991
ᵉ https://orcid.org/0009-0008-8143-2580
ᶠ https://orcid.org/0000-0003-1671-668X

on the data they have been trained on. These models

produce human-like material that can be interacted

with and consumed, rather than merely analysing

existing data patterns (García-Peñalvo & Vázquez-

Ingelmo, 2023). GenAI-powered tools have

demonstrated capabilities in generating content,

aiding in problem-solving, summarizing texts and

providing personalized feedback, making them

invaluable in educational contexts (Dale & Viethen,

Rudra, S., Weber, P., Tillmanns, T., Filho, A. S., Wiersma, E., Dawitz, J., Dudenaite, D. and Reynolds, S.

Use and Perception of Generative AI in Higher Education: Insights from the ERASMUS+ Project ’Teaching and Learning with Artificial Intelligence’ (TaLAI).

DOI: 10.5220/0013360200003932

In Proceedings of the 17th International Conference on Computer Supported Education (CSEDU 2025) - Volume 2, pages 319-330

ISBN: 978-989-758-746-7; ISSN: 2184-5026

Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)


2021; Rowland, 2023; Nguyen, 2023). However,

their adoption raises critical questions around ethical

usage, academic integrity, and equitable access. The

integration of GenAI tools into education has the

potential to reshape learning goals, activities, and

assessment practices, emphasizing creativity and

critical thinking over general skills (Zhai, 2022).

Educators and institutions are increasingly tasked

with navigating the challenges of integrating such

transformative technologies responsibly (Ray, 2023).

While some studies highlight the potential of GenAI

to foster critical thinking and creativity (Chan & Hu,

2023), others caution about possible over-reliance,

which could impede foundational skill development

(Bobula, 2023). These complexities underline the

necessity of a structured approach to understanding

and implementing GenAI in higher education.

The Erasmus+ project Teaching and Learning with Artificial Intelligence in Higher Education (TaLAI) addresses these challenges by equipping higher education (HE) faculty staff and students with the knowledge and skills to use GenAI responsibly and ethically. Launched on November 1, 2023, and running until October 31, 2026, the project seeks to foster digital literacy among academic stakeholders and to promote the ethical and effective integration of GenAI into teaching and learning. In addition to studying the implications of GenAI scientifically, TaLAI aims to create a digital platform and a Massive Open Online Course (MOOC) that help academic professionals learn about, discuss, and integrate GenAI into their teaching and learning while adhering to ethical standards. TaLAI will also develop recommendations for assessment in the context of GenAI.

In the first year of the project, two fundamental

research activities were prioritized to create a solid

understanding of the current role and perception of

GenAI in higher education. First, a systematic

literature review, following PRISMA guidelines,

examined the current state of GenAI in higher

education, focusing on its effective and ethical

integration into teaching and learning. The second

research activity was a survey, conducted among both

educators (including professors, lecturers,

researchers, trainers and educational advisors) and

students at higher education institutions. This survey

explored four main dimensions: participants'

familiarity with GenAI; the extent and nature of its

use; perceptions of GenAI; and its acceptance. The

survey was divided into two distinct parts, one

measuring the use and perception of GenAI in

academic contexts, and the other examining factors

influencing GenAI acceptance through the UTAUT2

model (Unified Theory of Acceptance and Use of

Technology) (Venkatesh et al., 2012).

The objective of this study is to focus solely on

the findings from the first component, specifically

addressing participants’ familiarity with GenAI,

current usage patterns, perceived benefits, challenges,

and ethical considerations among educators and

students. The second component, which investigates

acceptance-related factors, will be explored in future

research through inferential statistical analyses. By

separating these two components, this study ensures

a focused analysis while laying the groundwork for a

deeper exploration of GenAI acceptance in

subsequent work.

This paper provides a foundational analysis of the

descriptive survey data, offering insights into how

GenAI is currently perceived and used in academia.

By exploring students’ and educators’ views, the

research further seeks to inform policy

recommendations to support responsible and

effective integration of GenAI in educational settings.

2 METHODOLOGY

To assess the current status of GenAI use and

perception, a combined descriptive and exploratory

survey design was employed, offering a robust

approach for capturing participants' viewpoints. This

approach allows diverse responses to be gathered efficiently

and provides a broad understanding of the

topic (Creswell & Creswell, 2018). The descriptive

survey component facilitates a structured overview of

variable distribution, allowing documentation of

existing patterns and trends without pursuing causal

inference (Aggarwal & Ranganathan, 2019).

Additionally, exploratory elements were integrated

into the survey allowing for in-depth responses,

capturing nuanced participant perspectives. This

combination of question types supports a

comprehensive investigation into participant

perspectives, aligning with the mixed-methods

framework for educational research (Johnson &

Onwuegbuzie, 2004).

The survey questions were developed through a

collaborative brainstorming process, guided by

relevant literature and prior studies on GenAI

perceptions in higher education. The questionnaire

was designed to explore students' and educators'

perspectives on GenAI, with a focus on its

pedagogical integration and their beliefs about its

potential benefits, challenges, and overall acceptance

in the classroom. To enhance validity and clarity, a

pilot test was conducted with 14 researchers

CSEDU 2025 - 17th International Conference on Computer Supported Education


experienced in using GenAI for teaching and

academic purposes. Feedback from this pilot

informed refinements to the questionnaire, ensuring

alignment with the study’s objectives. This iterative

process aligns with best practices in survey research,

which emphasize the value of piloting for improving

reliability and validity (DeVellis, 2016).

The survey was administered online through the

Unipark Survey tool, facilitating broad participation

and data management efficiency. The questionnaire

included three tailored sections for different target

groups - students, professors, and educational

instructors. The survey consisted primarily of

multiple-choice and Likert scale questions (using a 5-

point scale), following established survey guidelines,

as these formats are effective for capturing attitudes

and perceptions (Allen & Seaman, 2007). To enhance

inclusiveness, we followed the comprehensive

questionnaire design recommendations by Jenn

(2006), including an "Other: please specify" option in

several questions, allowing respondents to provide

answers beyond the preset options. Additionally, two

open-ended questions were included to explore

commonly used GenAI tools for academic purposes.

A convenience sampling method was used to

select respondents based on their availability and

willingness to participate. This approach is

appropriate for exploratory studies, as it enables the

collection of diverse perspectives from a broad pool

(Etikan, 2016). While convenience sampling

provides initial insights into trends and perceptions, a

key limitation is its reduced generalizability, as the

sample may not fully represent the larger population,

potentially introducing selection bias (Emerson,

2021). Participation in the survey was voluntary and

anonymous, with informed consent obtained from all

respondents. Participants were assured of

confidentiality, adhering to ethical standards in

educational research (Cohen et al., 2017). A total of

152 students (undergraduate and postgraduate) and

118 educators from various countries and various

disciplines completed the survey, providing a

comprehensive view of GenAI perceptions across

academia.

The data analysis presented in this paper primarily

involved descriptive statistics to summarize and

interpret responses across selected survey questions.

Means, standard deviations, and frequencies were

calculated to identify trends and patterns in

participants’ responses. Demographic and contextual

variables, such as role, country, academic discipline,

and academic experience, were analyzed to provide a

comprehensive understanding of the dataset.
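The descriptive summaries described above (means, standard deviations, and frequencies) can be illustrated with a minimal sketch. The paper reports using Microsoft Excel and Pandas; the version below uses only the Python standard library so that it runs without extra packages, and the response values and the `describe` helper are hypothetical, not survey data or the authors' code.

```python
from collections import Counter
from statistics import mean, stdev

# Hypothetical 5-point Likert responses (1 = lowest, 5 = highest); NOT survey data.
responses = [3, 4, 2, 5, 4, 3, 4, 1, 3, 4]


def describe(values):
    """Return n, mean, sample standard deviation, and the share of each scale point."""
    counts = Counter(values)
    n = len(values)
    # Include scale points nobody chose so the distribution is complete.
    freqs = {point: counts.get(point, 0) / n for point in range(1, 6)}
    return {"n": n, "mean": mean(values), "sd": stdev(values), "freq": freqs}


summary = describe(responses)
print(f"n={summary['n']}  mean={summary['mean']:.2f}  sd={summary['sd']:.2f}")
for point, share in summary["freq"].items():
    print(f"  scale point {point}: {share:.0%}")
```

With Pandas, the same quantities are available through `Series.mean()`, `Series.std()`, and `Series.value_counts(normalize=True)`.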

For the specific survey question regarding the

perceived impact of GenAI tools on the learning

process in the short and long term, a comparison of

means was conducted to examine differences

between student and educator responses. Independent

samples t-tests were used to assess statistical

significance, and p-values were calculated for each

aspect included in the question. This additional layer

of analysis explored variations in perceived impacts

between the two groups, highlighting potential areas

of divergence or alignment in their viewpoints (Field,

2018). Data processing and analysis were conducted

using Microsoft Excel and Python’s Pandas library.

This combination of tools provided robust data

handling and visualization capabilities, supporting

clear interpretation and presentation of results.

3 RESULTS

3.1 Data Cleaning and Preparation

To prepare our primary dataset for analysis, we

undertook an initial restructuring process to ensure

consistency and clarity. Since some responses were

downloaded as variable numbers from the survey

software, these variables required annotation for

interpretability. By annotating each variable with its

corresponding value or meaning, a more

comprehensible dataset was created, facilitating

subsequent analysis.

The data were then cleaned: errors were identified and corrected, with particular attention to handling missing values. This systematic process of restructuring, annotating, and cleaning the dataset established a solid foundation for in-depth analysis.
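As a rough illustration of the annotation and cleaning steps, the sketch below maps exported numeric codes to readable labels and drops rows with missing answers. The concern labels follow the scale shown in Figure 2; the role codes, the `-99` "no answer" code, and the choice to drop incomplete rows are assumptions made for this example, since the paper does not specify how missing values were handled.

```python
# Hypothetical codebook for exported variables (role codes are assumed).
ROLE_LABELS = {1: "Student", 2: "Professor", 3: "Lecturer"}
CONCERN_LABELS = {
    1: "Not at all concerned",
    2: "Slightly concerned",
    3: "Somewhat concerned",
    4: "Moderately concerned",
    5: "Extremely concerned",
}
# Assumed markers for a missing answer in the export.
MISSING_CODES = {None, -99}

# Hypothetical raw export rows; NOT survey data.
raw_rows = [
    {"role": 1, "concern": 4},
    {"role": 2, "concern": None},
    {"role": 3, "concern": 2},
    {"role": 1, "concern": -99},
]


def annotate(rows):
    """Attach labels to coded answers and skip rows whose answer is missing."""
    cleaned = []
    for row in rows:
        if row["concern"] in MISSING_CODES:
            continue  # dropping the row is one possible strategy, assumed here
        cleaned.append({
            "role": ROLE_LABELS[row["role"]],
            "concern": row["concern"],
            "concern_label": CONCERN_LABELS[row["concern"]],
        })
    return cleaned


cleaned = annotate(raw_rows)
for row in cleaned:
    print(row)
```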

3.2 Data Analysis

3.2.1 Demographic Information

The survey targeted a diverse group of participants in

higher education, focusing on both students and

educators. Out of the 270 respondents, 56% were

students, providing insights into the perceptions and

current use of GenAI from a learner’s perspective.

The remaining participants were educators, including

professors (9%), lecturers (14%), researchers (7%),

trainers (3%), and educational instructors (11%),

offering their perspectives on AI's role and impact in

academia. This distribution allowed us to capture a

comprehensive view of GenAI’s perception and use,

exploring whether the perceptions of students and

educators align or diverge within the academic

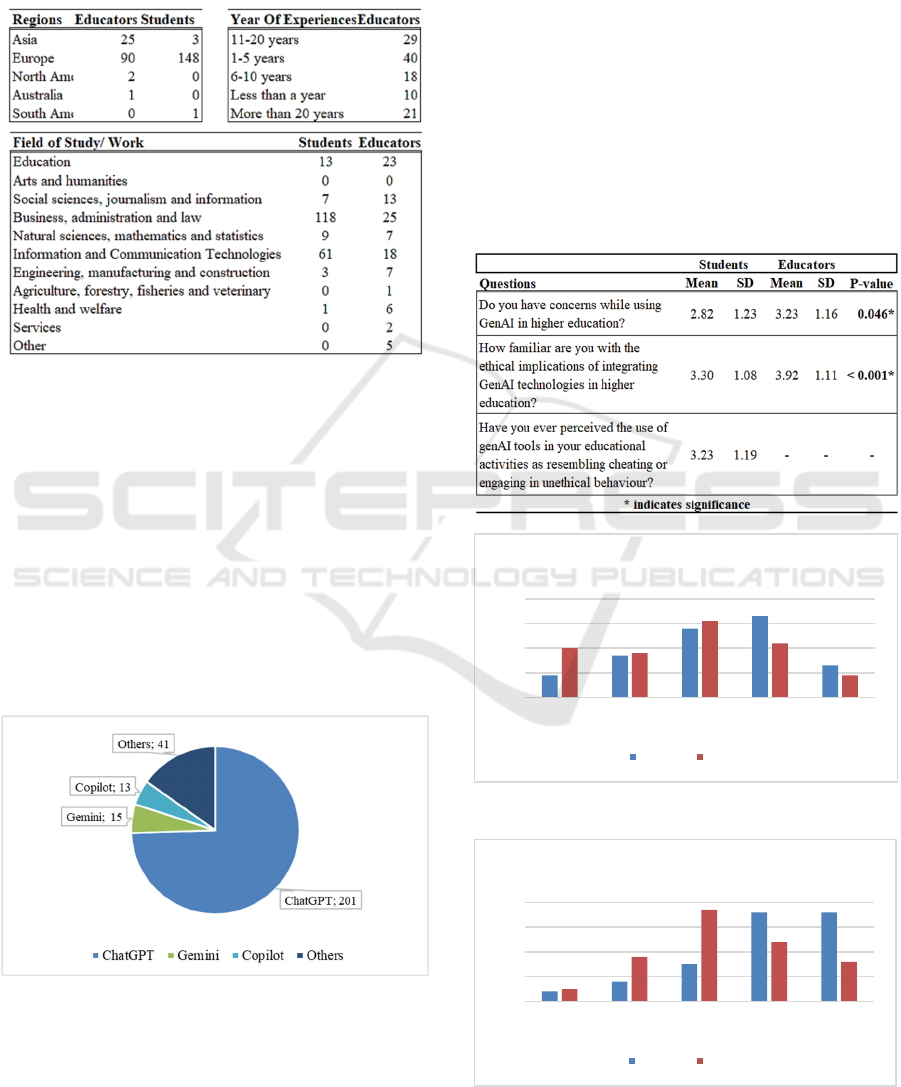

setting. Table 1 presents the demographic information

of the survey participants, including their region of


work or study, years of experience (for educators),

and field of study or work for both students and

educators.

Table 1: Demographic Information.

3.2.2 GenAI Preferences and Ethical Considerations

We explored which GenAI tools are most commonly used by students and educators in higher education, focusing on their top choices and preferred tool. Participants were asked to name the top three generative AI tools they use, as well as their single most preferred tool. The findings, illustrated in Figure 1, reveal that ChatGPT is overwhelmingly popular, with 201 mentions among the top three tools used. Other frequently cited tools included Gemini (15), Copilot (13), and a variety of others such as Midjourney, Firefly, and Perplexity (41). When participants were asked to identify their “number one” tool, both groups favored ChatGPT, with 67 out of 118 educators and 133 out of 152 students selecting it. These results underscore ChatGPT’s currently prominent role in academic settings, as well as its extensive use and perceived value across different roles within the educational ecosystem.

Figure 1: Preferred GenAI Tools.

Table 2 and Figures 2 and 3 compare students' and

educators' perspectives on the ethical considerations

surrounding GenAI in higher education. The results

indicate that educators express greater concerns about

the use of GenAI (Mean = 3.23, SD = 1.16) than

students (Mean = 2.82, SD = 1.23). The difference is

statistically significant (p = 0.046), suggesting that

educators are more cautious about the potential risks

of GenAI in academic settings.

Table 2: Concerns and familiarity with GenAI integration in higher education.

Figure 2: Concerns While Using GenAI. [Chart; x-axis: Degree of Concern, from "Not at all concerned (1)" through "Slightly concerned (2)", "Somewhat concerned (3)" and "Moderately concerned (4)" to "Extremely concerned (5)"; y-axis: share of respondents, 0% to 40%; series: Educators, Students.]

Figure 3: Familiarity with Ethical Implications. [Chart; x-axis: Degree of Familiarity, from "Not at all familiar (1)" through "Slightly familiar (2)", "Somewhat familiar (3)" and "Moderately familiar (4)" to "Extremely familiar (5)"; y-axis: share of respondents, 0% to 40%; series: Educators, Students.]


Additionally, educators report a significantly higher

familiarity with the ethical implications of GenAI

integration (Mean = 3.92, SD = 1.11) compared to

students (Mean = 3.30, SD = 1.08), with a highly

significant difference (p < 0.001). This indicates that

educators may have more exposure to discussions on

AI ethics, policies, or guidelines within academic

institutions. Furthermore, students were asked

whether they perceived the use of GenAI tools in

educational activities as cheating or unethical

behavior – the agreement was moderate (Mean =

3.23, SD = 1.19). This indicates that while some

students may view GenAI as potentially problematic

in terms of academic integrity, overall, there appears

to be uncertainty on the part of students at this time.
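The familiarity comparison reported above can be approximately checked from the summary statistics alone. The sketch below computes a Welch-type independent-samples t statistic for educators (Mean = 3.92, SD = 1.11) and students (Mean = 3.30, SD = 1.08). It rests on several assumptions: that all 118 educators and 152 students answered the item, that the unequal-variance (Welch) variant is acceptable (the paper does not state which variant was used), and that a normal approximation to the p-value is adequate for degrees of freedom in the hundreds. The function name is hypothetical.

```python
import math


def welch_t_from_summary(m1, s1, n1, m2, s2, n2):
    """Welch t statistic, Welch-Satterthwaite df, and an approximate two-sided p-value."""
    v1 = s1 ** 2 / n1  # squared standard error of group 1 mean
    v2 = s2 ** 2 / n2  # squared standard error of group 2 mean
    t = (m1 - m2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    # Normal approximation to the t distribution (assumption: df is large).
    p_approx = math.erfc(abs(t) / math.sqrt(2))
    return t, df, p_approx


# Educators vs. students, familiarity with ethical implications
# (group sizes assumed to equal the full samples).
t, df, p = welch_t_from_summary(3.92, 1.11, 118, 3.30, 1.08, 152)
print(f"t = {t:.2f}, df = {df:.0f}, approximate two-sided p = {p:.1e}")
```

Under these assumptions the approximate p-value falls well below 0.001, in line with the reported result. An exact p-value would require the t distribution, for example from a statistics package.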

3.2.3 Perceptions and Current Practices of GenAI Use in Academic Settings

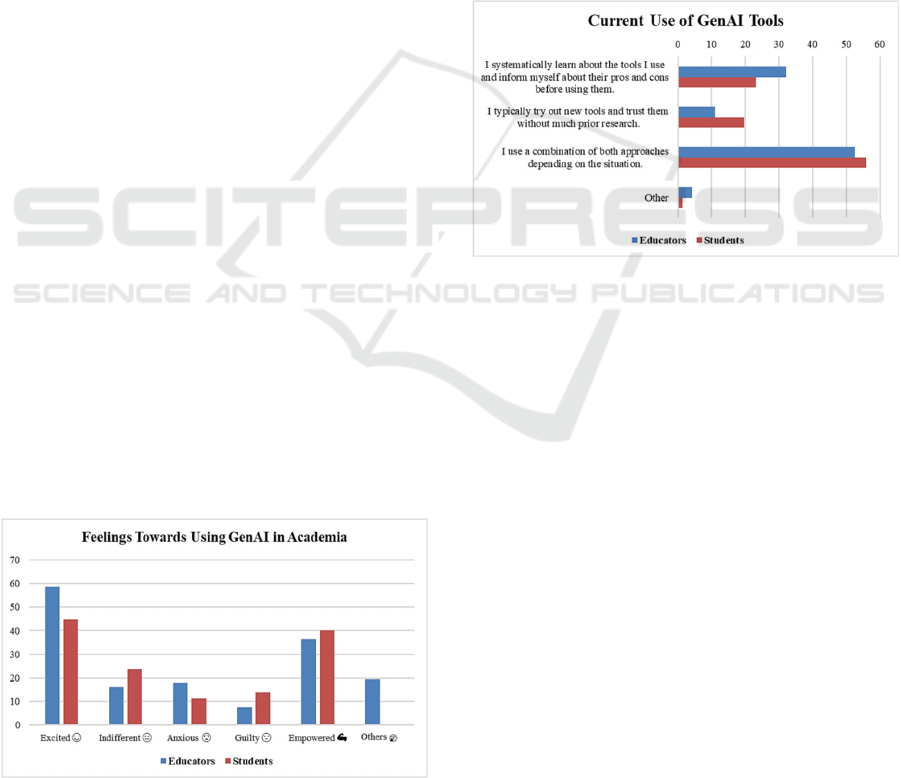

To understand how participants feel when using GenAI in academic work, they were asked to select one or more answers from a list of emotions. The results show that 58.5% of educators and 44.7% of students feel “excited”, which may reflect the shared enthusiasm that often initially surrounds new technologies. Furthermore, 36.4% of educators and 40.1% of students feel “empowered,” suggesting that many view GenAI as an empowering tool. Notably, 13.8% of students feel “guilt” when using GenAI, further indicating uncertainty, especially given that 23.68% of students felt indifferent towards using GenAI in academia. In contrast, only 7.6% of educators share the feeling of guilt. Some educators provided additional answers by selecting the “other” option, expressing a mixture of curiosity, caution, ethical concerns, and frustration about the impact of GenAI on the quality of learning. Overall, the range of emotions reflects a positive outlook that is partially tempered by uncertainty, ethical considerations and a drive towards a responsible approach to the role of GenAI in education.

Figure 4: Feelings Towards Using GenAI.

The survey also examined how participants are

currently using generative AI tools in their academic

work. As illustrated in Figure 5, the majority of both

educators (53%) and students (56%) prefer a flexible

approach, combining systematic learning and trial-and-error. Fewer participants adopt structured

methods, with 32% of educators and 23% of students

systematically researching tools before use, while

11% of educators and 20% of students try new tools with

minimal prior research. A small group (4% of educators

and 1% of students) chose "Other", describing varied

approaches such as starting with ChatGPT,

consulting experts or colleagues, or cautiously testing

tools before official use. These responses reflect

diverse strategies based on individual comfort with

experimentation and trust in GenAI tools.

Figure 5: Current Use of GenAI Tools.

Additionally, teaching staff were asked to clarify

how they incorporate generative AI in their teaching

practices. Respondents were allowed to select

multiple approaches, reflecting the diverse ways in which

GenAI is integrated into education. The most common

approach, chosen by 55 participants, was discussing

the risks and limitations of GenAI in higher

education. Other frequently selected strategies

included promoting critical thinking through essential

and clear instructions (34 responses), exploring

potential future applications of GenAI (33 responses),

discussing the role of prompts with students (29

responses), and requiring students to document,

display, and evaluate GenAI contributions in their

work (29 responses).

Beyond these, educators also emphasized the

importance of guiding students in evaluating the

accuracy of GenAI outputs, reflecting on its influence

in their assignments, and understanding how GenAI

can facilitate feedback and alternative perspectives.

These findings highlight that educators often employ multiple strategies, emphasizing a balanced approach that combines ethical awareness with fostering critical engagement and practical understanding among students.

Figure 6: Incorporation of GenAI in Teaching Practices.

The survey further asked participants whether

their university or study program has a policy on the

ethical use of GenAI tools. The results indicate that

141 out of 270 respondents (educators 54%, students

51%) believe their institution has a clear policy. In

contrast, 50 participants (educators 33%, students

7%) responded "No," indicating either the absence of

such policies or a lack of awareness among

participants. Additionally, 79 participants (educators

13%, students 42%) were "Unsure," suggesting that

nearly one-third of respondents may not have

sufficient information or clarity on this issue.

Notably, a considerably larger share of students

seems to be uninformed or uncertain about the

existence of such policies compared to educators.

This distribution reflects varied levels of awareness regarding GenAI policies, emphasizing the need for clearer communication and implementation to ensure all stakeholders are well-informed about ethical guidelines.

Figure 7: Availability of AI Policy.
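The overall counts for the policy question can be cross-checked against the group-level percentages. The sketch below multiplies each rounded percentage by the group size (118 educators, 152 students) and sums the results. It assumes that every respondent answered this question; the variable and function names are illustrative only.

```python
N_EDUCATORS, N_STUDENTS = 118, 152

# Group-level shares as reported in the text (rounded percentages).
shares = {
    "Yes": {"educators": 0.54, "students": 0.51},
    "No": {"educators": 0.33, "students": 0.07},
    "Unsure": {"educators": 0.13, "students": 0.42},
}


def implied_total(answer):
    """Overall number of respondents implied by the two group-level shares."""
    s = shares[answer]
    return s["educators"] * N_EDUCATORS + s["students"] * N_STUDENTS


totals = {answer: round(implied_total(answer)) for answer in shares}
print(totals)
print("share unsure overall:", round(totals["Unsure"] / (N_EDUCATORS + N_STUDENTS), 2))
```

The implied totals of 141, 50, and 79 match the counts given in the text, and 79 of 270 respondents is about 29%, consistent with "nearly one-third".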

Additionally, because institutional guidelines or policies may be absent, we asked students whether lecturers or professors personally encourage the responsible and ethical use of generative AI tools for academic purposes. The results show that 56% of students responded "Yes," indicating they receive direct encouragement from their instructors. In contrast, 20% responded "No," and 24% were "Not Sure," suggesting that a significant portion of students either do not receive or are uncertain about receiving guidance on ethical GenAI use from their educators. Following up on this question, we also asked educators whether they personally encourage students to use generative AI tools responsibly and ethically for academic purposes. A substantial 77% of educators (91 out of 118) responded "Yes", indicating a high level of support for responsible GenAI use in academic settings. In contrast, 10% said "No", and 13% were "Not Sure". Educators thus report encouraging responsible GenAI use more often than students report receiving such encouragement. While many educators are actively discussing GenAI’s academic integration, some hesitancy remains, with a small portion either uncertain or explicitly not endorsing its use. This difference in perspectives suggests that clear instructions on the use of GenAI might not always be consistently communicated to students, despite overall positive attitudes among educators.

To gain insight into current practices, we asked

participants how they manage the use of GenAI in

graded assignments. This question was directed

specifically at professors, lecturers, and researchers

within the educator group who have direct classroom

involvement. The responses reveal a range of

approaches: 23 out of 81 educators allow GenAI use

broadly in assignments, while a larger portion, 35

educators, permit its use only for specific purposes. In

contrast, the remaining 23 educators do not allow GenAI use in

any graded assignments. This distribution suggests

that most educators are cautious, with many setting

boundaries around GenAI based on assignment goals

and context.

[Figure 6 chart: Incorporation of GenAI in Teaching. Segment shares as extracted: 13%, 10%, 12%, 10%, 9%, 11%, 13%, 22%. Response options as listed in the legend: "I include instructions that are essential, clear, and promote critical metacognitive reflection of the genAI use."; "I include instructions on how genAI tools can help receive feedback and get alternative perspectives on given information."; "I require groups to document, display, and evaluate genAI contributions in their submissions."; "I require students to reflect on how genAI influenced their final submission."; "I require students to evaluate the accuracy of genAI outputs."; "I discuss the role of prompts with the students."; "I discuss potential future uses of genAI."; "I discuss the risks and limitations of using genAI in higher education with the students."]

[Figure 7 chart: Institutional Policy Awareness. x-axis: No, Unsure, Yes; y-axis: share of respondents, 0% to 60%; series: Educators, Students.]

Figure 8: Handling the Use of Generative AI in Graded Assignments.

3.2.4 Perceived Impact and Challenges of GenAI on Learning

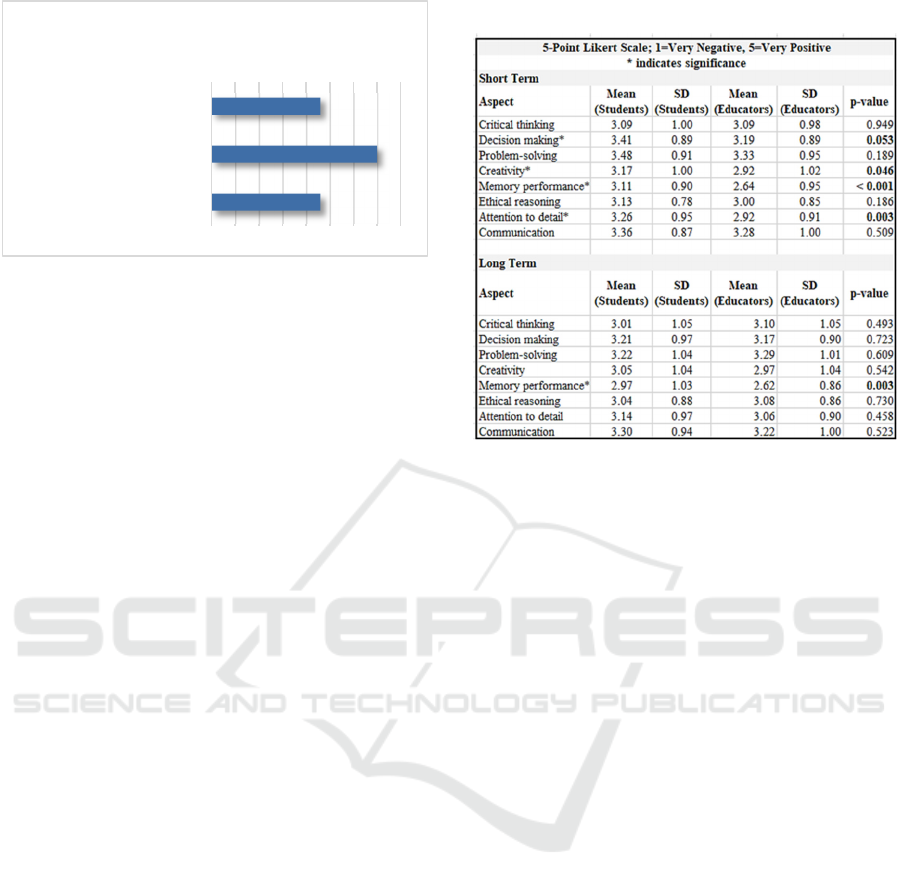

In examining the perceived short-term impact of

generative AI tools on student learning, the t-tests revealed

notable differences between students and educators

across several learning aspects.

Regarding memory performance (p < 0.001) and

attention to detail (p = 0.003), students rated the impact

of generative AI significantly more positively than

educators. This suggests that students may see GenAI

as a tool that could support memory retention and

attention to detail, while educators seem to be slightly

more cautious or skeptical about these potential

benefits. Decision making (p = 0.05) and creativity (p

= 0.05) showed borderline significance, where

educators expressed slightly lower confidence in

GenAI’s positive impact on these areas compared to

students. For critical thinking (p = 0.95), problem-

solving (p = 0.19), ethical reasoning (p = 0.19), and

communication (p = 0.51), no significant differences

were observed, indicating that both students and

educators have a similar, relatively neutral outlook on

the short-term impact of GenAI on these aspects of

learning. This may also be due to the fact that very

little empirical evidence exists so far on the impact of

using GenAI on critical thinking, problem-solving,

ethical reasoning and communication. Moreover, it

also depends on how GenAI has been utilized.

When considering the long-term impact of GenAI

tools on learning, students and educators largely

shared similar views, with no statistically significant

differences in areas like critical thinking (p = 0.49),

decision making (p = 0.72), problem-solving (p =

0.61), creativity (p = 0.54), ethical reasoning (p =

0.73), attention to detail (p = 0.46), and

communication (p = 0.52). However, a significant

difference was observed in memory performance (p =

0.003). Students rated the long-term impact of GenAI higher than educators, suggesting students might expect GenAI to aid memory retention more than educators believe it will. This contrast in views on memory performance could indicate differing expectations for GenAI’s role in supporting cognitive functions over time.

Table 3: Perceived Impact of GenAI.

Interestingly, despite the relatively neutral

perceptions of GenAI’s impact on critical thinking,

problem-solving, and ethical reasoning, these aspects

prominently feature in the challenges reported by

both groups. The challenges associated with GenAI

differ slightly between students and educators, but

some concerns are shared. Among students, the most

reported challenges include maintaining academic

integrity and preventing plagiarism (94 responses),

verifying reliability and accuracy of AI-generated

content (81 responses), and balancing GenAI tool

usage with their own contributions (72 responses).

Educators, on the other hand, foresee similar

challenges when addressing students’ use of GenAI,

with maintaining academic integrity (88 responses)

and addressing concerns about AI tool reliability (77

responses) being prominent. Additionally, educators

emphasize the need for students to understand the

ethical implications of GenAI (79 responses), which

aligns with 41 students acknowledging this as a

challenge.

Apart from the given options, both target groups shared additional insights. Educators expressed concerns about fostering critical thinking, ensuring authentic assessments aligned with labor market demands, and encouraging creativity. They also highlighted the risks of over-reliance on GenAI tools, the challenge of convincing students to master foundational skills, and the relevance of traditional learning objectives in a GenAI-driven era. Students, on the other hand, mentioned difficulties in verifying sources provided by GenAI, deciding which tools to document, and crafting effective prompts to generate outputs that meet their needs. These results highlight shared concerns around academic integrity and reliability while emphasizing educators’ focus on ethical awareness and students’ focus on preventing plagiarism and balancing GenAI usage with their own contributions.

[Figure 8 chart data: Approaches to Handling Generative AI in Graded Assignments (number of educators). "I allow the use of genAI in graded assignments in general": 23; "I allow the use of genAI in graded assignments only for specific purposes": 35; "I do not allow the use of genAI in any graded assignments": 23.]

3.2.5 Institutional Support for Ethical and Effective GenAI Integration

The integration of GenAI tools in higher education

demands thoughtful and tailored institutional support.

Insights from the survey suggest that students and

educators recognize the importance of institutional

support for effectively and ethically integrating

GenAI tools into higher education.

Students were offered answer options focusing on

guidance and skill-building. The most frequently

mentioned needs included learning ethical

approaches to using GenAI for assignments (83

responses), understanding how to effectively conduct

written assignments with GenAI support (77

responses), and exploring broader applications of

GenAI to enhance learning (69 responses).

Additionally, 60 respondents stressed the need for

resources that promote responsible and ethical usage

of GenAI tools. Under the "Other" category, students

proposed providing free or discounted access to

GenAI tools and offering detailed guidelines for

documenting GenAI use in academic submissions.

Educators, on the other hand, were offered a different set of predefined options, focusing more on structural and resource-based support. Slightly more than half of the 118 educators requested clear policies and guidelines (66 responses) as well as training and professional development programs (64 responses). Roughly four in ten asked for access to institutional resources, such as campus licenses or compliance-aligned GenAI tools (51 responses), and for technical support (46 responses). In addition, educators underlined the importance of fostering critical thinking and non-AI-dependent skills, promoting ethical GenAI practices, encouraging opportunities for GenAI-related research and experimentation, and developing community-driven GenAI initiatives tailored to institutional needs.

Figure 9: Institutional Support (Students).

Figure 10: Institutional Support (Educators).

These responses reflect a shared emphasis on

ethical practices and structured institutional support,

while the differences between the groups partly

reflect the distinct answer options each was offered.
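As a rough cross-check of the proportions quoted in this section, the response counts can be converted into shares of each group. The sketch below is illustrative only. It assumes that all 152 students and 118 educators in the sample answered the question, which is not confirmed here, and the shortened option labels are paraphrases introduced for readability. Because the counts add up to more than the sample size, respondents could evidently select several options, so the shares do not sum to 100%.

```python
# Response counts reported in Section 3.2.5 (Figures 9 and 10).
# Option labels are shortened paraphrases, not the survey wording.
STUDENT_COUNTS = {
    "Ethical ways to use GenAI for assignments": 83,
    "Conducting written assignments with GenAI": 77,
    "Breadth of GenAI uses to support learning": 69,
    "Responsible and ethical use for academic purposes": 60,
    "Other": 4,
}
EDUCATOR_COUNTS = {
    "Clear policies and guidelines": 66,
    "Training and professional development": 64,
    "Institutional access": 51,
    "Technical support and resources": 46,
    "Other": 5,
}

# ASSUMPTION: every respondent in the sample answered this question,
# so the full sample sizes serve as denominators.
N_STUDENTS = 152
N_EDUCATORS = 118


def shares(counts: dict[str, int], n: int) -> dict[str, float]:
    """Return each option's share of respondents in percent (1 decimal)."""
    return {option: round(100 * count / n, 1) for option, count in counts.items()}


student_shares = shares(STUDENT_COUNTS, N_STUDENTS)
educator_shares = shares(EDUCATOR_COUNTS, N_EDUCATORS)

for group, result in (("Students", student_shares), ("Educators", educator_shares)):
    print(group)
    for option, pct in result.items():
        print(f"  {pct:5.1f}%  {option}")
```

Under that assumption, 66 of 118 educators corresponds to about 56%, which matches the share reported for clear policies in the Discussion.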

4 DISCUSSION

The study on the use and perception of GenAI in

higher education underlines its transformative

potential while revealing significant gaps in ethical

awareness, institutional support, and integration

practices that need to be addressed for its effective

adoption. The results presented in this paper provide

insights into the use and perception of GenAI among

students and educators in higher education, revealing

both shared and divergent perspectives. One of the

most notable differences lies in the levels of

familiarity and ethical awareness regarding GenAI

between students and educators. Educators reported a

significantly higher awareness of ethical implications

compared to students, which aligns with their

professional responsibility to uphold academic

integrity. Conversely, students exhibited moderate

agreement on viewing GenAI usage as potentially

unethical or resembling cheating. These findings

align with recent studies that emphasize the

importance of fostering ethical literacy among

students to mitigate misuse of GenAI technologies in

educational contexts (Fu & Weng, 2024). Institutions

must bridge this gap by fostering ongoing dialogue

between students and educators about the ethical

boundaries of GenAI use in academia.

[Figure 9 data, Students (number of responses): Ethical ways to use generative AI tools to design, conduct and write up assignments: 83; How to conduct written assignments with generative AI appropriately and efficiently: 77; Getting more of a sense of the breadth of particularly activities on how to use generative AI to support my learning: 69; Learn how to responsibly and ethically use generative AI tools for academic purpose: 60; Other (please specify): 4.]

[Figure 10 data, Educators (number of responses): Clear Policies and Guidelines: 66; Training and Professional Development: 64; Institutional Access (campus license / data protection-compliant access via a proxy): 51; Technical Support and Resources: 46; Other (please specify): 5.]

CSEDU 2025 - 17th International Conference on Computer Supported Education

The short-term and long-term impact of GenAI on

various aspects of learning is perceived as neutral overall

by both groups. This neutrality may derive from a

lack of long-term exposure to GenAI tools and

limited evidence on their concrete benefits or

drawbacks in supporting higher-order cognitive

skills. While students generally rated GenAI's

contributions to short-term memory performance

(Mean = 3.11, SD = 0.90) and attention to detail

(Mean = 3.26, SD = 0.95) more positively, educators

remained slightly more skeptical, as evidenced by

statistically significant differences in these areas

(memory performance Mean = 2.64, SD = 0.95;

attention to detail Mean = 2.92, SD = 0.91). This

disparity may stem from educators' cautious approach

to over-reliance on GenAI tools, fearing they might

inhibit critical thinking and foundational skill

development. Both groups rated the impact on critical

thinking and ethical reasoning neutrally, signalling a

shared uncertainty about GenAI's potential to support

or hinder higher-order cognitive skills. These findings

highlight an urgent need for empirical evidence on the

effectiveness of integrating GenAI as a supportive

tool while preserving essential cognitive

competencies.
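To make the size of the reported group differences more tangible, the summary statistics above can be fed into a two-sample comparison. The following is a minimal sketch under stated assumptions, not the analysis used in the study. It assumes a Welch t-test (the test actually applied is not restated in this section, and a rank-based test may suit Likert-type ratings better) and that all 152 students and 118 educators rated both items. The names `GroupSummary`, `Comparison`, and `compare` are hypothetical helpers.

```python
import math
from dataclasses import dataclass


@dataclass
class GroupSummary:
    mean: float
    sd: float
    n: int  # ASSUMPTION: full sample size; item-level n is not reported here


@dataclass
class Comparison:
    t: float   # Welch t statistic
    df: float  # Welch-Satterthwaite degrees of freedom
    d: float   # Cohen's d based on the pooled standard deviation


def compare(a: GroupSummary, b: GroupSummary) -> Comparison:
    """Compare two groups from their summary statistics only."""
    var_a = a.sd ** 2 / a.n
    var_b = b.sd ** 2 / b.n
    t = (a.mean - b.mean) / math.sqrt(var_a + var_b)
    df = (var_a + var_b) ** 2 / (var_a ** 2 / (a.n - 1) + var_b ** 2 / (b.n - 1))
    pooled_sd = math.sqrt(
        ((a.n - 1) * a.sd ** 2 + (b.n - 1) * b.sd ** 2) / (a.n + b.n - 2)
    )
    d = (a.mean - b.mean) / pooled_sd
    return Comparison(t=t, df=df, d=d)


# Means and SDs as reported in the text; sample sizes are assumed.
memory = compare(GroupSummary(3.11, 0.90, 152), GroupSummary(2.64, 0.95, 118))
attention = compare(GroupSummary(3.26, 0.95, 152), GroupSummary(2.92, 0.91, 118))

for label, result in (("Memory performance", memory), ("Attention to detail", attention)):
    print(f"{label}: t = {result.t:.2f}, df = {result.df:.0f}, d = {result.d:.2f}")
```

Under these assumptions both t statistics lie well above 2, which is consistent with the statistically significant differences described above, while the standardized differences are moderate for memory performance and smaller for attention to detail.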

Overall, these findings resonate with prior

research emphasizing the dual nature of technological

adoption in education as enabling and disruptive

(Mogavi et al., 2024). Both students and educators

expressed enthusiasm and a sense of empowerment

regarding GenAI's potential, but notable differences

emerged in their emotional responses. Educators

reported greater caution and frustration compared to

students. This caution likely arises from concerns

about the potential impact of GenAI on learning

quality and academic integrity, as well as

uncertainties surrounding its ethical and pedagogical

implications. Frustration, on the other hand, may be

linked to a lack of robust evidence on GenAI’s

effectiveness in diverse learning environments and

the rapid emergence of new tools, contributing to

technology anxiety.

Interestingly, students were more likely than

educators to report feelings of "guilt" and

"indifference" toward the use of GenAI in academic

settings. Guilt may arise from the strict academic

integrity policies they are subject to, combined with

the fear of repercussions for perceived misuse.

Students may also experience guilt from a belief that

relying on GenAI could undermine their learning

process or conflict with expectations to produce

original work. The ambiguity surrounding what

constitutes acceptable use of GenAI adds to this

tension, as students may be unsure if their actions

align with ethical standards. This uncertainty,

coupled with the high stakes of academic evaluations,

creates a psychological conflict that fosters feelings

of guilt.

Conversely, feelings of indifference may be

attributed to students' familiarity with rapidly

advancing technologies. Many students see GenAI as

just another tool, potentially underestimating its

ethical implications or transformative effects in

academia. For some, indifference may reflect a

rationalization of GenAI use as a practical necessity

in a competitive academic environment, where

achieving results often takes precedence over the

process. Others may feel indifferent due to a lack of

perceived enforcement or clearly defined boundaries

regarding GenAI use in academia.

These mixed emotions, guilt and indifference,

may also reflect broader cultural and situational

factors. Students experiencing cognitive dissonance

might rationalize their use of GenAI to reconcile the

tension between academic integrity and the demands

of academic success. While guilt arises from the

perceived ethical compromise, indifference might

stem from prioritizing efficiency over adherence to

traditional academic norms. Additional empirical

studies are required to further explore the feelings

that GenAI triggers in students with respect to their

academic work.

Nevertheless, the emotional responses highlight

the transformative yet challenging role of GenAI in

education, underscoring the need for professional

development programs to equip educators and

students alike with strategies for effective integration

while addressing potential risks. Supporting this, a

recent study on U.S. universities’ GenAI policies

revealed an open yet cautious approach, prioritizing

ethical usage, accuracy, and data privacy, while

providing resources like workshops and syllabus

templates to aid educators in adapting GenAI

effectively in their teaching practices (Wang et al.,

2024). Several studies have also highlighted the

importance of involving students in the development

of GenAI training curricula and policies and

guidelines for the ethical and responsible use of

GenAI that directly affect their academic work

(Camacho-Zuñiga et al., 2024; Magrill & Magrill,

2024; Moya & Eaton, 2024; Vetter et al., 2024;

Goldberg et al., 2024; Bannister et al., 2024; Chen et

al., 2024; Malik et al., 2024).

The study revealed significant gaps in

institutional support and policy clarity surrounding

the use of GenAI. While more than half of the

respondents (52%) believed their institutions had

policies addressing the ethical use of AI, a notable

proportion (29%) were uncertain, indicating either a

lack of communication or the absence of

comprehensive guidelines. Interestingly, a majority

(56%) of educators called for clear

institutional policies as a form of support,

highlighting a potential contradiction. This may

suggest that policies are either underdeveloped or

inadequately communicated to relevant stakeholders.

The absence of well-communicated institutional

policies often results in uncertainty and fragmented

practices among educators and students. Research has

shown that explicitly communicating expectations

about what students are permitted to do and where

restrictions apply can reduce ambiguity and foster

compliance with institutional guidelines (Kumar et

al., 2024; Ivanov, 2023; Dai et al., 2023).

Furthermore, while students expressed a need for

practical resources and opportunities to develop

GenAI-related skills, educators emphasized the

importance of structural support, including

professional training and access to technical

resources. Addressing these gaps requires a

dual-focus approach: institutions must establish

transparent, well-communicated policies, while

simultaneously equipping stakeholders with the tools

and skills necessary to navigate GenAI responsibly.

Both groups identified shared challenges in

integrating GenAI into academia, particularly

regarding maintaining academic integrity and

verifying the reliability of AI-generated content.

Educators highlighted the importance of fostering

critical thinking and creativity while ensuring

equitable access to AI tools. Recent studies also

emphasize the need to enhance holistic competencies

(Chan, 2023), focus on developing lifelong skills

(Elbanna & Armstrong, 2024; AlDhaen, 2022) and

strengthen students' critical thinking and creativity

(Klyshbekova & Abbott, 2023; Xie & Ding, 2023;

Chan & Hu, 2023). On the other hand, students

reported challenges in crafting effective prompts and

documenting AI usage, underscoring the need for

practical skill-building resources. These findings

suggest that while GenAI holds transformative

potential, its integration into academia must be

supported by robust educational practices to address

these barriers effectively. Aligning with existing

recommendations from literature, students should be

encouraged to critically evaluate AI-generated

content and distinguish between reliable and

unreliable sources, fostering essential critical

thinking skills (Chan & Hu, 2023; Yeadon & Hardy,

2024). Furthermore, caution must be exercised

regarding the accuracy and reliability of GenAI

outputs, as highlighted by recent studies (Kayalı et al.,

2023; Pallivathukal et al., 2024; Camacho-Zuñiga et

al., 2024). Addressing these challenges will require a

comprehensive, collaborative approach that

empowers both students and educators to navigate

GenAI responsibly while maximizing its educational

potential.

This study offers useful starting points for

future research but is not without limitations. The use

of a convenience sampling method may limit the

generalizability of findings to other contexts.

Therefore, future research could benefit from diverse

sampling techniques to enhance representativeness.

Future research should explore longitudinal studies to

assess how perceptions and impacts of GenAI evolve

over time as its adoption becomes more widespread.

Furthermore, qualitative methods such as interviews

or focus groups could provide deeper insights into

participants' hands-on experiences with GenAI,

particularly in addressing feelings of uncertainty,

guilt, and indifference, as well as the challenges

associated with its integration.

5 CONCLUSIONS

This study emphasizes the transformative potential of

GenAI in higher education while highlighting critical

areas that require attention for its effective adoption.

A key finding is the higher ethical awareness among

educators compared to students, reflecting their

professional responsibility to uphold academic

integrity. Conversely, some students experience

feelings of guilt in using GenAI, stemming from

unclear boundaries around acceptable practices and

concerns about academic integrity.

The research also reveals a significant gap in

institutional support, with many respondents

uncertain about the existence or clarity of

GenAI-related policies. This uncertainty highlights the

necessity for universities to establish comprehensive

and transparent policies that address both

opportunities and risks associated with GenAI use.

These policies should be actively communicated to

educators and students to ensure informed

decision-making and ethical engagement with AI tools.

Training programs tailored for both faculty and

students are crucial in fostering AI literacy and

equipping users with the skills to critically assess and

integrate GenAI into academic practices. Educators,

in particular, require professional development

opportunities to explore effective teaching strategies

that incorporate GenAI while upholding academic

standards. Additionally, students need guidance on

how to use AI tools responsibly, with a focus on

understanding the ethical implications and ensuring

that AI-generated content does not replace

independent learning and critical thinking.

There is a pressing need to reevaluate

assessment practices in response to the growing use

of GenAI. Traditional evaluation methods may need

to be adapted to ensure that assessments accurately

measure students’ understanding and

problem-solving abilities, rather than their ability to generate

AI-assisted responses. Developing assessment

frameworks that promote critical engagement with

AI, requiring students to analyze, justify, or refine

AI-generated content, could help maintain academic

integrity while leveraging GenAI’s potential as a

learning aid.

Furthermore, fostering open discussions about

GenAI’s role in education is essential for shaping its

ethical and pedagogical integration. Institutions

should create platforms where educators and students

can share experiences, voice concerns, and

collaborate on best practices for AI adoption in

teaching and learning. Such collaborative efforts will

help bridge the gap between policy development and

practical implementation, ensuring that AI tools

enhance rather than undermine educational

objectives.

While GenAI holds immense potential to enhance

educational outcomes, its integration must be

approached thoughtfully to address ethical concerns,

emotional responses, and structural barriers. By

establishing clear policies, providing tailored

training, and encouraging open dialogue, higher

education institutions can create an environment

where GenAI’s potential is maximized responsibly

and equitably.

REFERENCES

Aggarwal, R., & Ranganathan, P. (2019). Study designs:

Part 2 - Descriptive studies. Perspectives in Clinical

Research, 10(1), 34–36.

AlDhaen, F. (2022). The use of artificial intelligence in

higher education – Systematic review. In M. Alaali

(Ed.), COVID-19 challenges to university information

technology governance (pp. 269–285). Springer

International Publishing.

Allen, I. E., & Seaman, C. A. (2007). Likert scales and data

analyses. Quality Progress, 40, 64–65.

Bannister, P., Peñalver, E. A., & Urbieta, A. S. (2024).

International students and generative artificial

intelligence: A cross-cultural exploration of HE

academic integrity policy. Journal of International

Students, 14(3), 149–170.

Bobula, M. (2023). Generative artificial intelligence (AI) in

higher education: A comprehensive review of

challenges, opportunities, and implications. Journal of

Learning Development in Higher Education, (30).

Camacho-Zuñiga, C., Rodea-Sánchez, M. A., López, O. O.,

& Zavala, G. (2024). Generative AI guidelines by/for

engineering undergraduates. In 2024 IEEE Global

Engineering Education Conference (EDUCON) (pp. 1–

8). IEEE.

Chan, C. K. Y. (2023). A comprehensive AI policy

education framework for university teaching and

learning. International Journal of Educational

Technology in Higher Education, 20(1).

Chan, C. K. Y., & Hu, W. (2023). Students’ voices on

generative AI: Perceptions, benefits, and challenges in

higher education. International Journal of Educational

Technology in Higher Education, 20(1).

Chen, K., Tallant, A. C., & Selig, I. (2024). Exploring

generative AI literacy in higher education: Student

adoption, interaction, evaluation, and ethical

perceptions. Information and Learning Sciences.

Cohen, L., Manion, L., & Morrison, K. (2017). Research

methods in education (8th ed.). Routledge.

Creswell, J. W., & Creswell, J. D. (2018). Research design:

Qualitative, quantitative, and mixed methods

approaches (5th ed.). SAGE Publications, Inc.

Dai, Y., Liu, A., & Lim, C. P. (2023). Reconceptualizing

ChatGPT and generative AI as a student-driven

innovation in higher education. Procedia CIRP, 119,

84–90.

Dale, R., & Viethen, J. (2021). The automated writing

assistance landscape in 2021. Natural Language

Engineering, 27(4), 511–518.

DeVellis, R. F. (2016). Scale development: Theory and

applications (4th ed.). SAGE Publications, Inc.

Elbanna, S., & Armstrong, L. (2024). Exploring the

integration of ChatGPT in education: Adapting for the

future. Management in Education (MSAR), 3(1), 16–29.

Emerson, R. (2021). Convenience sampling revisited:

Embracing its limitations through thoughtful study

design. Journal of Visual Impairment & Blindness,

115, 76–77.

Etikan, I. (2016). Comparison of convenience sampling and

purposive sampling. American Journal of Theoretical

and Applied Statistics, 5(1), 1–4.

Field, A. (2018). Discovering statistics using IBM SPSS

statistics (5th ed.). SAGE Publications.

Fu, Y., & Weng, Z. (2024). Navigating the ethical terrain of

AI in education: A systematic review on framing

responsible human-centered AI practices. Computers

and Education: Artificial Intelligence, 7, 100306.

García-Peñalvo, F., & Vázquez-Ingelmo, A. (2023). What

do we mean by GenAI? A systematic mapping of the

evolution, trends, and techniques involved in generative

AI. The International Journal of Interactive Multimedia

and Artificial Intelligence, 8, 7–16.

Goldberg, D., Sobo, E., Frazee, J., & Hauze, S. (2024).

Generative AI in higher education: Insights from a

campus-wide student survey at a large public

university. In J. Cohen & G. Solano (Eds.), Proceedings

of Society for Information Technology & Teacher

Education International Conference (pp. 757–766). Las

Vegas, NV: Association for the Advancement of

Computing in Education (AACE).

Ivanov, S. (2023). The dark side of artificial intelligence in

higher education. The Service Industries Journal,

43(15–16), 1055–1082.

Jenn, N. C. (2006). Designing a questionnaire. Malaysian

Family Physician: The Official Journal of the Academy

of Family Physicians of Malaysia, 1(1), 32–35.

Johnson, R. B., & Onwuegbuzie, A. J. (2004). Mixed

methods research: A research paradigm whose time has

come. Educational Researcher, 33(7), 14–26.

Kayalı, B., Yavuz, M., Balat, Ş., & Çalışan, M. (2023).

Investigation of student experiences with ChatGPT-

supported online learning applications in higher

education. Australasian Journal of Educational

Technology, 39(5), 20–39.

Klyshbekova, M., & Abbott, P. (2023). ChatGPT and

assessment in higher education: A magic wand or a

disruptor? Electronic Journal of e-Learning, 21(5),

Article 0.

Kumar, R., Eaton, S. E., Mindzak, M., & Morrison, R.

(2024). Academic integrity and artificial intelligence:

An overview. In S. E. Eaton (Ed.), Second handbook of

academic integrity (pp. 1583–1596). Springer Nature

Switzerland.

Magrill, J., & Magrill, B. (2024). Preparing educators and

students at higher education institutions for an

AI-driven world. Teaching and Learning Inquiry, 12.

Malik, T., Dettmer, S., Hughes, L., & Dwivedi, Y. K.

(2024). Academia and generative artificial intelligence

(GenAI) SWOT analysis: Higher education policy

implications. In S. K. Sharma, Y. K. Dwivedi, B. Metri,

B. Lal, & A. Elbanna (Eds.), Transfer, diffusion and

adoption of next-generation digital technologies (IFIP

Advances in Information and Communication

Technology, Vol. 698). Cham, Switzerland: Springer.

Mogavi, R. H., et al. (2024). ChatGPT in education: A

blessing or a curse? A qualitative study exploring early

adopters’ utilization and perceptions. Computers in

Human Behavior: Artificial Humans, 2(1), 100027.

Moya, B. A., & Eaton, S. E. (2024). Academic integrity

policy analysis of Chilean universities. Journal of

Academic Ethics.

Nguyen, V. K. (2023). The use of generative AI tools in

higher education: Ethical and pedagogical principles.

Pallivathukal, R. G., Kyaw Soe, H. H., Donald, P. M.,

Samson, R. S., & Hj Ismail, A. R. (2024). ChatGPT for

academic purposes: Survey among undergraduate

healthcare students in Malaysia. Cureus, 16(1), e53032.

Ray, P. P. (2023). ChatGPT: A comprehensive review on

background, applications, key challenges, bias, ethics,

limitations and future scope. Internet of Things and

Cyber-Physical Systems, 3, 121–154.

Rowland, D. R. (2023). Two frameworks to guide

discussions around levels of acceptable use of

generative AI in student academic research and writing.

Journal of Academic Language and Learning, 17(1),

T31–T69.

Venkatesh, V., Thong, J. Y. L., & Xu, X. (2012). Consumer

acceptance and use of information technology:

Extending the unified theory of acceptance and use of

technology. MIS Quarterly, 36(1), 157.

Vetter, M. A., Lucia, B., Jiang, J., & Othman, M. (2024).

Towards a framework for local interrogation of AI

ethics: A case study on text generators, academic

integrity, and composing with ChatGPT. Computers

and Composition, 71, 102831.

Wang, H., Dang, A., Wu, Z., & Mac, S. (2024). Generative

AI in higher education: Seeing ChatGPT through

universities’ policies, resources, and guidelines.

Computers and Education: Artificial Intelligence, 7,

100326.

Xie, X., & Ding, S. (2023). Opportunities, challenges,

strategies, and reforms for ChatGPT in higher

education. In 2023 International Conference on

Educational Knowledge and Informatization (EKI) (pp.

14–18).

Yeadon, W., & Hardy, T. (2024). The impact of AI in

physics education: A comprehensive review from

GCSE to university levels. Physics Education, 59(2),

25010.

Zhai, X. (2022). ChatGPT user experience: Implications for

education. SSRN 4312418.
