Stakeholder Responsibility for Building Trustworthy Learning Analytics in the AI-Era

Barbi Svetec¹, Blaženka Divjak¹, Bart Rienties² and Hanni Muukkonen³

¹ University of Zagreb, Faculty of Organization and Informatics, Varaždin, Croatia

² The Open University, Milton Keynes, U.K.

³ University of Oulu, Oulu, Finland

Keywords: Learning Analytics, Trust, Trustworthiness, Stakeholders, Responsibility.

Abstract: This position paper builds on previous research publications and activities related to trustworthy learning

analytics (LA) to provide an additional angle on the fundamental considerations for ensuring trustworthy LA.

In our view, these considerations include strategic guidance and support, pedagogical soundness and human

interaction, stakeholder engagement, data and AI literacy, ethics, data limitations and meaningful use of

algorithms, as well as transparency of the whole process. In this paper, we discuss each of the considerations

with respect to the roles and responsibilities of the key stakeholders in the LA systems: educational leaders,

educators (especially teachers) and students.

1 INTRODUCTION

It is widely known that the digital age has brought

numerous changes to teaching and learning, and

educators and students alike use digital tools and

artificial intelligence (AI) to support and enhance

learning on a daily basis. One of the most advanced

ways of harnessing technology to foster learning is

the use of learning analytics (LA) to better understand

learning, provide targeted learning support, improve

the quality of learning experiences, and encourage

self-regulated learning. However, while during the

last decade the potentials and benefits of LA have

been widely recognized in research as well as

educational practice, especially in higher education,

its use is still far from widespread (Tsai et al., 2021).

There is, clearly, a whole range of context-specific

reasons for that, which has been addressed in LA

research (Tsai & Gasevic, 2017).

What has been standing out as one of the

significant factors possibly affecting the adoption of

LA is trust (Tsai et al., 2021). In some of the first

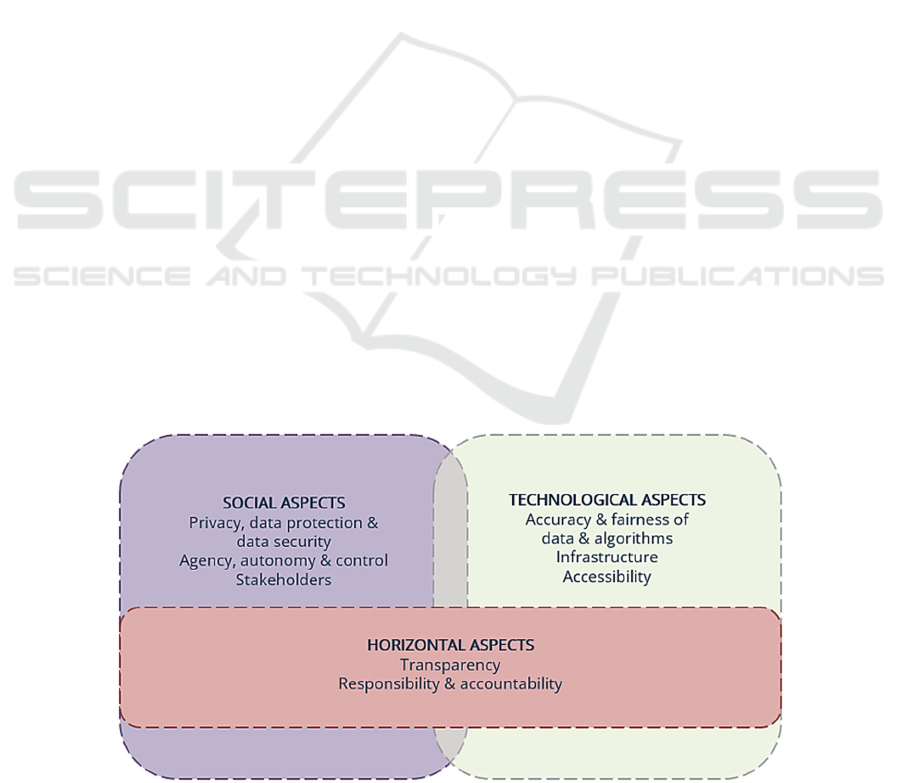

Figure 1: Aspects and dimensions of trustworthy LA (from: Svetec & Divjak, 2025).

Svetec, B., Divjak, B., Rienties, B. and Muukkonen, H.

Stakeholder Responsibility for Building Trustworthy Learning Analytics in the AI-Era.

DOI: 10.5220/0013363300003932

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 17th International Conference on Computer Supported Education (CSEDU 2025) - Volume 2, pages 331-337

ISBN: 978-989-758-746-7; ISSN: 2184-5026

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

331

attempts to define trust in the context of LA, it has

been described as “subjective, psycho-social,

relational and often asymmetrical and founded on the

character/values/credibility and track record/consistency/expertise of the person/organization requiring

our trust” (Slade et al., 2023). It should be noted,

though, that trust and trustworthiness are not

synonymous. In this paper, we look at trust as a

subjective belief, while we consider trustworthiness

as a more measurable “quality of LA which abides by

legal rules and ethical principles related to learners’

privacy, their data security and control, is based on

non-biased data and algorithms, transparently used,

and can be trusted to support all learners in successful

acquisition of learning outcomes” (Svetec & Divjak,

2025).

Against the described background, and especially

since LA is “increasingly unthinkable without AI”

(Slade et al., 2023), the issue of trustworthiness of LA

has been high on the agenda in LA research. With

parallels to trustworthy AI, comparable aspects of

trustworthy LA have been explored and discussed

(Figure 1). Some of those aspects are more social,

including ethical concerns related to (primarily

students’ and educators’) privacy, data protection and

security, their agency, autonomy, and control

pertaining to the collection and use of data, as well as

trust in stakeholders’ competences and interests.

Others are more technological, referring to data and

algorithms and the way they affect accuracy and

fairness of LA, as well as the need for appropriate

infrastructure and accessibility. Horizontally, there is

a need for transparency, not only in terms of data

collection, but also interpretability and explainability

of algorithms. Another essential aspect is assuming

responsibility and ensuring accountability, at

institutional and higher levels, in terms of leadership

and policy supporting implementation of LA that

considers all the other aspects of trustworthiness (Svetec & Divjak, 2025).

With this position paper, our aim is to contribute

to the debate on trustworthy LA by discussing what

educational systems, institutions and individuals can

do to support trustworthy and trusted implementation

of LA.

2 COLLECTION OF INSIGHTS

Besides the authors’ current informed positions, this

position paper takes into account the discussions

among experts and researchers previously held in an

international context. First, the paper builds on the

insights from a panel discussion organized within the

Learning Analytics in Practice 2024 conference, held

online worldwide in June 2024, which gathered four

esteemed LA experts from Europe and Australia.

Second, the paper presents the highlights of a

workshop and three focus groups on trustworthy LA

held as part of the Trustworthy Learning Analytics

and Artificial Intelligence for Sound Learning Design

(TRUELA) project. The workshop was held in March

2024 and included eight LA experts and seven HE

educators, and focus groups were held in September

2024, with 18 participants from Europe and South

Africa.

3 FUNDAMENTAL CONSIDERATIONS FOR BUILDING TRUSTWORTHY LA SYSTEMS

Strategic Guidance and Support Are

Indispensable. Research has established there is a

lack of institutional policies for the implementation of

LA (Baker et al., 2021; Ifenthaler et al., 2021;

Vigentini et al., 2020). However, it is important to

make strategic-level decisions and develop clear

policies on the use of educational data: what data to

collect, what to monitor and what to do with the

findings (Rienties, 2021; Rienties & Herodotou,

2022). Being clear about the strategy may also

contribute to the motivation of individuals to consent

to share their data and participate in LA. Besides

developing policies and strategies, educational

institutions should engage in sharing information and

educating everyone involved in LA, for example,

through teacher training. Institutions should also

provide encouragement, supporting champions to

experiment and inspire others, as well as fostering

interdisciplinarity and links between research and

practice (Herodotou et al., 2020; Kaveri et al., 2023).

Finally, it is essential that institutions ensure the

necessary financing for the implementation of LA,

including infrastructure and training, and invest in

explainable LA systems.

Pedagogical Soundness and Human Interaction

Remain the Backbone. Only meaningful LA should

be trusted. For LA to be meaningful, it is important to

ensure a sound pedagogical foundation and enable

theoretical and practical educational (didactical)

explainability of LA. Furthermore, while LA systems

provide visualizations and reports, however rich and

meaningful, these only achieve their purpose if they


are reacted upon, interpreted and if improvement is

considered (Alcock et al., 2024; Clow, 2012;

Herodotou et al., 2023; Kaliisa et al., 2024;

Muukkonen et al., 2023). Here, teachers remain

central. They are the ones who should consider the

insights from aggregated data, but keep the individual

approach to their students, supporting them in more

successful learning. While we agree that teachers are

essential for trust-building, over 10 years of research

at the Open University with large-scale adoption of

LA dashboards suggest that fewer than half of teachers regularly use these kinds of dashboards (Herodotou et al., 2020, 2023). In part, teachers who are less likely

to use these LA systems indicate that they need more

support and training to use these complex and data-

driven systems, but also there is an underlying

concern around whether (or not) the data can be

trusted, and what the most appropriate intervention

strategies might be (Frank et al., 2016; Herodotou et

al., 2023).

Engaging Stakeholders Can Enhance

Meaningfulness and Trust. In the last couple of

years, there has been quite some discussion on

human-centered LA. This refers to involving

educational stakeholders in the process of designing

and evaluating LA systems, as well as studying the

sociotechnical factors that affect the success of LA

(Alcock et al., 2024; Buckingham Shum et al., 2019;

Buckingham Shum et al., 2024). Engaging LA users (primarily teachers, other educators and students) in

LA development and implementation can help

understand their needs to provide meaningful LA on

the one hand, and enable them to understand how LA

helps them on the other hand (Gedrimiene et al.,

2023). Knowing why they are providing their data

may increase stakeholders’ motivation to participate

and support their trust in LA.

Data and AI Literacy Are the Foundation.

Stakeholders do not always understand LA

(Herodotou et al., 2020, 2023), which may make it

hard for them to trust it, and subsequently use it. To

be able to trust LA, it is important to understand data,

know how to use appropriate methods of analysis, and

interpret the results (Gedrimiene et al., 2023). This

could be supported with the use of AI, including in

terms of providing suggestions and recommendations

for improvements. For example, several fitness apps (e.g., Strava) provide people with detailed training data on their phone after a run or a bike ride. These apps provide very rich and dynamic data on a particular workout, but making sense of whether or not a person has benefited from a particular training session requires substantial data skills and understanding. Recently, some apps have made Generative AI (GenAI) advice available based on months of a user’s data, which, beyond an easy-to-follow narrative of the actual workout, also provides suggestions for further training. By combining months of data with accessible storytelling, this GenAI advice might be more attractive for some users. However, the use of

AI should be approached with caution, especially

when it comes to the interpretation of mathematical

and statistical models. When interpreting LA results,

it is also essential to be mindful of differences in

learning contexts, learning dispositions and cultural

perspectives.

Ethics Is the Cornerstone. Adequate privacy, data

protection and security arrangements (Slade &

Prinsloo, 2013; Tzimas & Demetriadis, 2021;

Ungerer & Slade, 2022), aligned with the relevant

regulation, are paramount. Stakeholders need to be

allowed agency, autonomy, and control when it

comes to the use of their data (Korir et al., 2023; Li et

al., 2021; Slade & Prinsloo, 2013). Competence and

interest of the involved (especially third) parties

should be considered (Alzahrani et al., 2023). For

example, if LA systems are provided by vendors

outside of HE, they might not be fully aware of the

specificities of the educational context or understand

the pedagogical framework. They might also be more

oriented towards profit than towards students’

wellbeing and learning progress. Furthermore, the era

of GenAI sheds a new, even more complex light on

the ethics-related issues and opens new questions

(Bond et al., 2024; Giannakos et al., 2024). For

example, who should take responsibility if GenAI

makes conclusions and decisions about humans?

Data Limitations Need to Be Considered. On the one hand, it is only ethically acceptable to allow stakeholders (primarily students) to make an informed decision on their participation in LA; on the other hand, incomplete data can lead to biased results (Li et al., 2021). For example, some demographic groups or students with disabilities might be reluctant to consent to the use of their data, so data and analyses can be biased.

Moreover, there are different possible sources of data,

and multimodal data (Mangaroska et al., 2020;

Ochoa, 2022), like data collected via sensors and

cameras, are not available in every educational

context. These limitations should be taken into

account at all times, and blind trust is not to be


encouraged: learning data and LA results should

always be considered critically and in context.
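As an illustration of the consent-related bias described above, the following minimal Python sketch compares consent rates across groups and flags groups that are noticeably less represented in the data. It is only a sketch under stated assumptions: the record layout (`group`, `consented`), the function names and the 10-percentage-point `tolerance` are hypothetical choices made for illustration, not part of any LA system discussed in this paper.

```python
from collections import Counter


def consent_rates(students):
    """Return {group: share of students in that group who consented}."""
    totals = Counter(s["group"] for s in students)
    consented = Counter(s["group"] for s in students if s["consented"])
    return {group: consented[group] / totals[group] for group in totals}


def underrepresented_groups(students, tolerance=0.10):
    """List groups whose consent rate is more than `tolerance` below the overall rate.

    The tolerance is an illustrative assumption, not an established threshold.
    """
    overall = sum(s["consented"] for s in students) / len(students)
    rates = consent_rates(students)
    return sorted(g for g, rate in rates.items() if rate < overall - tolerance)


# Hypothetical records: group A consents far more often than group B.
students = (
    [{"group": "A", "consented": True}] * 8
    + [{"group": "A", "consented": False}] * 2
    + [{"group": "B", "consented": True}] * 3
    + [{"group": "B", "consented": False}] * 7
)

print(consent_rates(students))
print(underrepresented_groups(students))
```

A flagged group does not prove that results are biased, but it signals that LA outputs for that group rest on thinner data and should be interpreted with additional care.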

Algorithms Should Be Appropriate and

Explainable. While LA normally uses machine

learning and AI algorithms, statistical models and

methods are not always used in an appropriate way.

This can lead to results that make no sense in practice,

resulting in untrustworthy LA. Therefore, great care should be taken to use models and methods that are fit for purpose (Albuquerque et al., 2024; Baker et al., 2023; Tao et al., 2024), minding assumptions such as homogeneity of variance or normal distribution.

Moreover, LA should consider the differences in

learning contexts, which calls for inclusion of

contextual variables. Here, the question arises whether AI can account for the specifics of fields of

study, courses, teaching and learning approaches, and

the way they are used in a specific learning context.

In this sense, it is important to distinguish between the

predictive models relying on small (local) and big

data. Furthermore, the intersection of LA and GenAI

should be further explored, being mindful that, while machine learning includes known algorithms, how GenAI reaches its conclusions is unknown. However, to enable trust in LA, we should aim for explainable algorithms and avoid black boxes.
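To make the point about minding model assumptions more concrete, the sketch below shows one rough way to screen for heterogeneity of variance before comparing groups. It uses a simple variance-ratio heuristic rather than a formal procedure such as Levene's test; the `max_ratio` cut-off of 4, the function names and the example scores are assumptions introduced purely for illustration.

```python
from statistics import variance


def variance_ratio(groups):
    """Ratio of the largest to the smallest sample variance across groups."""
    variances = [variance(values) for values in groups.values()]
    smallest = min(variances)
    if smallest == 0:
        # A group with no spread at all makes the ratio unbounded.
        return float("inf")
    return max(variances) / smallest


def variances_look_homogeneous(groups, max_ratio=4.0):
    """Heuristic screen only; max_ratio is an assumed rule-of-thumb cut-off."""
    return variance_ratio(groups) <= max_ratio


# Hypothetical course scores with the same mean but very different spread.
scores = {
    "course_a": [60.0, 62.0, 64.0, 66.0, 68.0],
    "course_b": [40.0, 55.0, 64.0, 73.0, 88.0],
}

print(variance_ratio(scores))
print(variances_look_homogeneous(scores))
```

When such a screen fails, a method that does not assume equal variances, or a model that includes the relevant contextual variables, may be more fit for purpose than the default choice.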

Transparency Should Be Upheld Throughout the

Entire Process. Transparency can be viewed as a

multidimensional concept encompassing clarity,

accuracy, and the disclosure of information within

organizations. Clarity ensures that information is

understandable and meaningful, accuracy guarantees

it is perceived as precise, and information disclosure

highlights the availability of valuable insights

(Schnackenberg et al., 2021). Specifically, we should

be mindful of ensuring transparent presentation of

what data is being collected, for what purpose, how it is going to be analysed, and how the results will be used. Moreover, when it comes to algorithms, maintaining transparency is valuable, but not always feasible with GenAI.

4 DISCUSSION

We believe that the presented considerations play an

important role in supporting not only a more

widespread adoption of LA, but the adoption of LA

that can be and is trusted by the stakeholders. It

should be noted, though, that areas of responsibility

differ among the stakeholders, and that not all of the

considerations are equally important with respect to

each group.

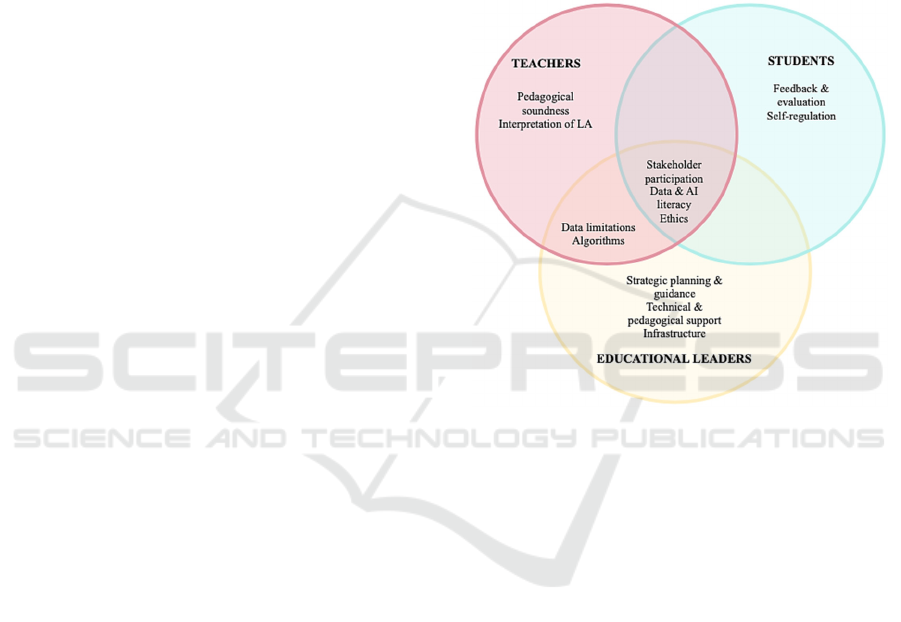

Responsibility and accountability have been

identified in previous work (Svetec & Divjak, 2025)

as a horizontal aspect of ensuring the trustworthiness

of LA, including both its social and technological

aspects. And while the said work, based on an

analysis of previous research, focused primarily on

institutional responsibility, here we would also like to

consider the responsibilities of other stakeholders

(Figure 2).

Figure 2: Venn diagram of stakeholder areas in ensuring

trustworthy LA.

First, when it comes to the level of educational

systems and institutions, the essential role is to be

played by educational leaders, at different levels of

decision-making. They are the ones who are, above all, responsible for implementing strategic planning and providing guidance, which can make the

implementation of LA meaningful, well-focused,

transparent, and therefore more trustworthy. Strategic

planning should include data collection and problem

analysis, decision-making, followed by monitoring,

evaluation and timely interventions if needed (agile

approach) (Divjak & Begičević Ređep, 2015). On a

more concrete level, educational leaders are those

who should take care of strategic financial investment

and ensure the prerequisites in terms of technical

(e.g., infrastructure) and human resources (e.g.,

developers, third party providers). In some contexts,

this can also include setting up specialized units

providing LA on an institutional level (e.g., LA in

national or institutional student information systems).

Importantly, educational leaders should also ensure

technical and pedagogical support for educators and


students, whether in the form of technical assistance,

teacher training or possibly AI assistance. When it

comes to investment, this also includes monitoring

and evaluation of tangible and intangible benefits and

the return on investment.

Second, when it comes to “closing the loop” by

introducing LA-based educational interventions in

the classroom, the responsibility belongs to the

educators, especially teachers. They are in charge of

ensuring the pedagogical soundness of the teaching

and learning process, including meaningful learning

design. If this basis is not firmly established, and

aligned with the principles of constructive alignment

(Biggs, 1999), the soundness and explainability of LA

can be questionable, and LA results can make little

sense in terms of improving teaching and learning.

Furthermore, educators have the essential role in

interpreting the results of LA, using their pedagogical

knowledge, considering their students’ individual

needs, and reacting in a way that can support the

successful acquisition of learning outcomes.

At the intersection of the responsibilities of

educational leaders and educators, there is the

awareness of the data limitations and the possible bias

stemming from incomplete data. Furthermore,

these stakeholders should be mindful of the

appropriate use of (explainable) algorithms and

statistical models, as well as the risks of using GenAI.

It is essential to note that a lack of consideration for data bias and inappropriate algorithms, including

GenAI, possibly affecting the accuracy and fairness

of LA, as well as insufficient consideration of the

specific learning and cultural context, can present a

risk of poorly targeted interventions that can even

have a negative impact on learning.

Third, there are students, who should be at the center of all LA endeavors. Their area of

responsibility is, on the one hand, related to the

provision of information and feedback on what they

consider important and useful in terms of LA (Divjak

et al., 2023), as well as what kind of support they need

(e.g., training, revision of curricula). For example, to

ensure a student-centered approach, students should

have the opportunities to pose questions they would

like LA to answer and share their visions of LA

assistance (Silvola et al., 2021). On the other hand, it is up to students to self-direct their learning based on

the outputs of LA (Schumacher & Ifenthaler, 2018),

with the support of interpretations provided by

educators. For example, LA can provide personalized

feedback related to specific tasks, and students are

autonomous in deciding how to use it not only in that

particular context, but also in their further learning

practice and adaptation of their learning strategies.

Finally, all three groups of stakeholders share

the responsibility to ensure stakeholder participation,

to enable the development of human-centered LA

systems (Buckingham Shum et al., 2024).

Furthermore, it is essential that they develop the levels of data literacy and AI literacy that are necessary for the implementation and understanding of LA. And

last but not least, much has been discussed and

researched on the topic of ethics in LA and AI, and

while it is not specifically highlighted in this position

paper, it should be clear at all times that working in

line with ethical standards is the crucial prerequisite

for ensuring trustworthy LA. This includes a number

of aspects, from basic privacy and data security

assumptions, to providing the stakeholders with the

right information and the possibility to decide on

how, why and by whom their data will be used.

When it comes to the stakeholders, discussing

their responsibilities is only one side of the coin. On

the other side, it is also important to look at which

considerations they find important. This may be

closely related to the question of cultural perspective,

as an additional aspect to explore in order to design

culturally aware and value-sensitive LA (Viberg et

al., 2023).

5 LIMITATIONS AND FUTURE

WORK

This paper has a more conceptual nature and tries to

provide an overview of a complex topic, with the

roles of stakeholders which are in essence

intertwined. It builds on the previous literature review

(Svetec & Divjak, 2025) by providing expert views

which consider recent developments in the area of

trustworthy LA, encompassing issues related to the

rapid development and spreading of GenAI. Future

work should provide a more practical perspective,

looking into actual research case studies, to provide

insights into practices, challenges, limitations and

opportunities as perceived by stakeholders in

particular institutions. Our assumption is that

stakeholder perspectives might differ if we consider

the specificities of HE contexts, pedagogical

traditions, institutional visions and missions,

governance models, as well as cultural factors.

6 CONCLUSION

Based on current research and discussion among

international experts in learning analytics (LA), in


this position paper, we outlined what we believe to be

the fundamental considerations for the trustworthy

implementation of LA. The said considerations

pertain to strategic guidance and support, pedagogical

soundness and human interaction, stakeholder

engagement, data and AI literacy, ethics, data

limitations and meaningful use of algorithms, as well

as ensuring transparency of LA processes. We also

discussed the responsibilities of stakeholders

(primarily educational leaders, educators, and

students) related to the said considerations. Finally,

we opened some questions for further research and

discussion, such as how culture affects trust and the

perceived trustworthiness of LA.

ACKNOWLEDGEMENTS

This work has been supported by the Trustworthy

Learning Analytics and Artificial Intelligence for

Sound Learning Design (TRUELA) project, financed

by the Croatian Science Foundation (IP-2022-10-

2854). Besides the authors, a special contribution was provided by Lourdes Guàrdia, Wim Van Petegem and Mladen Raković, who took part in the discussions and

whose insights are included in this position paper.

REFERENCES

Albuquerque, J., Rienties, B., Holmes, W., & Hlosta, M.

(2024). From hype to evidence: Exploring large

language models for inter-group bias classification in

higher education. Interactive Learning Environments,

1–23. https://doi.org/10.1080/10494820.2024.2408554

Alcock, S., Rienties, B., Aristeidou, M., & Kouadri

Mostéfaoui, S. (2024). How do visualizations and

automated personalized feedback engage professional

learners in a Learning Analytics Dashboard?

Proceedings of the 14th Learning Analytics and

Knowledge Conference, 316–325. https://doi.org/10.1145/3636555.3636886

Alzahrani, A. S., Tsai, Y. S., Aljohani, N., Whitelock-Wainwright, E., & Gasevic, D. (2023). Do teaching staff

trust stakeholders and tools in learning analytics? A

mixed methods study. In Educational Technology

Research and Development (Vol. 71, Issue 4). Springer

US. https://doi.org/10.1007/s11423-023-10229-w

Baker, R. S., Gašević, D., & Karumbaiah, S. (2021). Four

paradigms in learning analytics: Why paradigm

convergence matters. Computers and Education:

Artificial Intelligence, 2, 100021. https://doi.org/10.1016/j.caeai.2021.100021

Baker, R. S., Esbenshade, L., Vitale, J., & Karumbaiah, S. (2023). Using Demographic Data as

Predictor Variables: A Questionable Choice.

https://doi.org/10.5281/ZENODO.7702628

Biggs, J. (1999). What the Student Does: Teaching for

enhanced learning. Higher Education Research &

Development, 18(1), 57–75. https://doi.org/10.1080/0729436990180105

Bond, M., Khosravi, H., De Laat, M., Bergdahl, N., Negrea,

V., Oxley, E., Pham, P., Chong, S. W., & Siemens, G.

(2024). A meta systematic review of artificial

intelligence in higher education: A call for increased

ethics, collaboration, and rigour. International Journal

of Educational Technology in Higher Education, 21(1),

4. https://doi.org/10.1186/s41239-023-00436-z

Buckingham Shum, S., Ferguson, R., & Martinez-

Maldonado, R. (2019). Human-Centred Learning

Analytics. Journal of Learning Analytics, 6(2).

https://doi.org/10.18608/jla.2019.62.1

Buckingham Shum, S., Martínez‐Maldonado, R.,

Dimitriadis, Y., & Santos, P. (2024). Human‐Centred

Learning Analytics: 2019–24. British Journal of

Educational Technology, 55(3), 755–768.

https://doi.org/10.1111/bjet.13442

Clow, D. (2012). The learning analytics cycle. Proceedings

of the 2nd International Conference on Learning

Analytics and Knowledge, 134–138. https://doi.org/10.1145/2330601.2330636

Divjak, B., & Begičević Ređep, N. (2015, June). Strategic

Decision Making Cycle in Higher Education: Case

Study of E-learning. Proceedings of the International

Conference on E-Learning 2015. International

Conference on E-learning 2015, Las Palmas, Spain.

Divjak, B., Svetec, B., & Horvat, D. (2023). Learning

analytics dashboards: What do students actually ask

for? LAK23: 13th International Learning Analytics and

Knowledge Conference, 44–56. https://doi.org/10.1145/3576050.3576141

Frank, M., Walker, J., Attard, J., & Tygel, A. (2016). Data

Literacy—What is it and how can we make it happen?

The Journal of Community Informatics, 12(3).

https://doi.org/10.15353/joci.v12i3.3274

Gedrimiene, E., Celik, I., Mäkitalo, K., & Muukkonen, H.

(2023). Transparency and Trustworthiness in User

Intentions to Follow Career Recommendations from a

Learning Analytics Tool. Journal of Learning

Analytics, 10(1), 54–70. https://doi.org/10.18608/jla.2023.7791

Giannakos, M., Azevedo, R., Brusilovsky, P., Cukurova,

M., Dimitriadis, Y., Hernandez-Leo, D., Järvelä, S.,

Mavrikis, M., & Rienties, B. (2024). The promise and

challenges of generative AI in education. Behaviour &

Information Technology, 1–27. https://doi.org/10.1080/0144929X.2024.2394886

Herodotou, C., Maguire, C., Hlosta, M., & Mulholland, P.

(2023). Predictive Learning Analytics and University

Teachers: Usage and perceptions three years post

implementation. LAK23: 13th International Learning

Analytics and Knowledge Conference, 68–78.

https://doi.org/10.1145/3576050.3576061

Herodotou, C., Rienties, B., Hlosta, M., Boroowa, A.,

Mangafa, C., & Zdrahal, Z. (2020). The scalable

implementation of predictive learning analytics at a

distance learning university: Insights from a

longitudinal case study. The Internet and Higher Education, 45, 100725. https://doi.org/10.1016/j.iheduc.2020.100725

Ifenthaler, D., Gibson, D., Prasse, D., Shimada, A., &

Yamada, M. (2021). Putting learning back into learning

analytics: Actions for policy makers, researchers, and

practitioners. Educational Technology Research and

Development, 69(4), 2131–2150. https://doi.org/10.1007/s11423-020-09909-8

Kaliisa, R., Misiejuk, K., López-Pernas, S., Khalil, M., &

Saqr, M. (2024). Have Learning Analytics Dashboards

Lived Up to the Hype? A Systematic Review of Impact

on Students’ Achievement, Motivation, Participation

and Attitude. Proceedings of the 14th Learning

Analytics and Knowledge Conference, 295–304.

https://doi.org/10.1145/3636555.3636884

Kaveri, A., Silvola, A., & Muukkonen, H. (2023).

Supporting Student Agency with a Student-Facing

Learning Analytics Dashboard: Perceptions of an

Interdisciplinary Development Team. Journal of

Learning Analytics, 10(2), 85–99. https://doi.org/10.18608/jla.2023.7729

Korir, M., Slade, S., Holmes, W., Héliot, Y., & Rienties, B.

(2023). Investigating the dimensions of students’

privacy concern in the collection, use and sharing of

data for learning analytics. Computers in Human

Behavior Reports, 9(December 2021). https://doi.org/10.1016/j.chbr.2022.100262

Li, W., Sun, K., Schaub, F., & Brooks, C. (2021).

Disparities in Students’ Propensity to Consent to

Learning Analytics. International Journal of Artificial

Intelligence in Education, 564–608. https://doi.org/10.1007/s40593-021-00254-2

Mangaroska, K., Sharma, K., Gašević, D., & Giannakos, M.

(2020). Multimodal Learning Analytics to Inform

Learning Design: Lessons Learned from Computing

Education. Journal of Learning Analytics, 7(3), 79–97.

https://doi.org/10.18608/jla.2020.73.7

Muukkonen, H., Van Leeuwen, A., & Gašević, D. (2023).

Learning analytics and societal challenges. In C.

Damşa, A. Rajala, G. Ritella, & J. Brouwer, Re-

theorising Learning and Research Methods in Learning

Research (1st ed., pp. 216–233). Routledge.

https://doi.org/10.4324/9781003205838-15

Ochoa, X. (2022). Multimodal Learning Analytics:

Rationale, Process, Examples, and Direction. In The

Handbook of Learning Analytics (pp. 54–65). SOLAR.

https://doi.org/10.18608/hla22.006

Rienties, B. (2021). Implementing Learning Analytics at

Scale in an Online World. In J. Liebowitz, Online

Learning Analytics (1st ed., pp. 57–77). Auerbach

Publications. https://doi.org/10.1201/9781003194620-4

Rienties, B., & Herodotou, C. (2022). Making sense of

learning data at scale. In Handbook for Digital Higher

Education. https://doi.org/10.4337/9781800888494.00032

Schnackenberg, A. K., Tomlinson, E., & Coen, C. (2021).

The dimensional structure of transparency: A construct

validation of transparency as disclosure, clarity, and

accuracy in organizations. Human Relations, 74(10),

1628–1660. https://doi.org/10.1177/0018726720933317

Schumacher, C., & Ifenthaler, D. (2018). Features students

really expect from learning analytics. Computers in

Human Behavior, 78, 397–407. https://doi.org/10.1016/j.chb.2017.06.030

Silvola, A., Näykki, P., Kaveri, A., & Muukkonen, H.

(2021). Expectations for supporting student

engagement with learning analytics: An academic path

perspective. Computers & Education, 168, 104192.

https://doi.org/10.1016/j.compedu.2021.104192

Slade, S., & Prinsloo, P. (2013). Learning Analytics:

Ethical Issues and Dilemmas. American Behavioral

Scientist, 57(10), 1510–1529. https://doi.org/10.1177/0002764213479366

Slade, S., Prinsloo, P., & Khalil, M. (2023). “Trust us,” they

said. Mapping the contours of trustworthiness in

learning analytics. Information and Learning Science,

124(9–10), 306–325. https://doi.org/10.1108/ILS-04-2023-0042

Svetec, B., & Divjak, B. (2025). Trustworthy Learning

Analytics for Smart Learning Ecosystems. Interaction

Design & Architecture(s). Accepted for publication.

Tao, Y., Viberg, O., Baker, R. S., & Kizilcec, R. F. (2024).

Cultural Bias and Cultural Alignment of Large

Language Models. PNAS Nexus, 3(9), pgae346.

https://doi.org/10.1093/pnasnexus/pgae346

Tsai, Y.-S., & Gasevic, D. (2017). Learning analytics in

higher education --- challenges and policies: A review

of eight learning analytics policies. Proceedings of the

Seventh International Learning Analytics &

Knowledge Conference, 233–242. https://doi.org/10.1145/3027385.3027400

Tsai, Y.-S., Whitelock-Wainwright, A., & Gašević, D.

(2021). More Than Figures on Your Laptop:

(Dis)trustful Implementation of Learning Analytics.

Journal of Learning Analytics, 8(3), 81–100.

https://doi.org/10.18608/jla.2021.7379

Tzimas, D., & Demetriadis, S. (2021). Ethical issues in

learning analytics: A review of the field. Educational

Technology Research and Development, 69(2), 1101–1133. https://doi.org/10.1007/s11423-021-09977-4

Ungerer, L., & Slade, S. (2022). Ethical Considerations of

Artificial Intelligence in Learning Analytics in Distance

Education Contexts. In P. Prinsloo, S. Slade, & M.

Khalil (Eds.), Learning Analytics in Open and

Distributed Learning (pp. 105–120). Springer Nature

Singapore. https://doi.org/10.1007/978-981-19-0786-9_8

Viberg, O., Jivet, I., & Scheffel, M. (2023). Designing

Culturally Aware Learning Analytics: A Value

Sensitive Perspective. In O. Viberg & Å. Grönlund

(Eds.), Practicable Learning Analytics (pp. 177–192).

Springer International Publishing. https://doi.org/10.1007/978-3-031-27646-0_10

Vigentini, L., Liu, D. Y. T., Arthars, N., & Dollinger, M.

(2020). Evaluating the scaling of a LA tool through the

lens of the SHEILA framework: A comparison of two

cases from tinkerers to institutional adoption. The

Internet and Higher Education, 45, 100728.

https://doi.org/10.1016/j.iheduc.2020.100728
