Prediction-Based Selective Negotiation for Refining Multi-Agent Resource Allocation

Madalina Croitoru¹, Cornelius Croitoru² and Gowrishankar Ganesh³

¹ University of Montpellier, LIRMM, France
² Faculty of Computer Science, Al. I. Cuza University, Iasi, Romania
³ LIRMM, CNRS (National Center of Scientific Research), France

Keywords: Collective Decision Making, Multi-Agent Systems, Negotiation.

Abstract: This paper proposes a 2-stage framework for multi-agent resource allocation. After a Borda-based allocation, machine learning predictions about agent preferences are used to select pairs of agents that negotiate to swap resources. We show that this selective negotiation improves overall satisfaction with the resulting resource redistribution.

1 INTRODUCTION

Resource allocation (Ibaraki and Katoh, 1988) is a core problem in a wide range of real-world multi-agent applications (Chevaleyre et al., 2005). Examples include distributing medical supplies in emergency situations (Zhang et al., 2016), assigning computational resources in cloud computing (Vinothina et al., 2012) and allocating advertising slots to bidders in digital marketplaces (Li et al., 2018). Such problems require dividing limited resources among competing agents, each with its own preferences. Finding optimal solutions to these problems is typically NP-hard, so linear programming methods are used to compute approximate solutions (Katoh and Ibaraki, 1998; Croitoru and Croitoru, 2011).

Advances in generative Artificial Intelligence (AI) and autonomous systems are shaping hybrid societies in which human agents and artificial systems are deeply interconnected. In these environments, subjective, context-dependent human preferences must be integrated with the pre-coded preferences of artificial agents. Resource allocation problems that involve human agents should consider contextual factors such as socioeconomic status or geographic location. These factors can influence priorities, and those priorities may evolve during the allocation process (Dafoe et al., 2020). For instance, in digital marketplaces, preferences for goods or services can be inferred from browsing behavior or demographic profiles. Similarly, in education, predictive models can guide the allocation of resources to students based on their specific learning needs.

In hybrid societies, achieving the global good requires systems that facilitate fair trade-offs among diverse stakeholders and balance individual preferences with collective outcomes. This paper examines a straightforward form of negotiation: the swapping of goods. Swapping is a form of compromise. It enables mutually acceptable outcomes through direct exchanges while supporting the broader collective interest. We propose a 2-stage framework for refining multi-agent resource allocation:

1. In the first step, individual preferences are aggregated with the Borda voting method to create a collective preference ranking. This aggregated ranking, combined with the individual preferences of each agent, is used to allocate goods greedily. The process starts with the most preferred good in the Borda aggregated ranking and allocates each good to as many agents as its multiplicity allows. It then proceeds sequentially to less preferred goods.

2. In the second step, following a Condorcet-like approach, pairs of agents with potential for compromise are identified using machine learning predictions of their preferences, made from their features. These agents are then considered for swapping their allocated goods. The goal is to enhance overall societal satisfaction by aligning the allocations with the predicted preferences.

In this work, our contributions are threefold:

• We formalize the 2-stage framework for prediction-based resource allocation.

• We design an algorithm that integrates preference prediction and preference aggregation to improve overall agent satisfaction.

• We analyse the satisfaction guarantees provided by the proposed framework.

Croitoru, M., Croitoru, C. and Ganesh, G.
Prediction-Based Selective Negotiation for Refining Multi-Agent Resource Allocation.
DOI: 10.5220/0013368000003890
In Proceedings of the 17th International Conference on Agents and Artificial Intelligence (ICAART 2025) - Volume 1, pages 656-662
ISBN: 978-989-758-737-5; ISSN: 2184-433X
Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)

The paper is structured as follows. Section 2 gives a motivating example that shows how swaps can improve outcomes compared to a simple naive allocation. The naive allocation relies on a lexicographical ordering of agents and gives each agent its most preferred available good in turn until all goods are allocated. Section 3 formally defines the resource allocation problem and gives the pseudocode of the naive algorithm used in the motivating example of Section 2. Section 4 details two voting methods. The first is the Borda method, which is the basis for the refined allocation process described in the paper. The second is the Condorcet method, which relies on pairwise comparisons of preferences. The intuition behind Condorcet's pairwise comparisons is used to justify why agents might swap goods. Section 5 introduces our two-step framework and its theoretical satisfaction guarantees. The framework (i) uses Borda aggregation for an initial allocation and then (ii) optimizes it through swaps between agents, guided by feature-based preference predictions. Section 6 concludes the paper and outlines future work.

2 MOTIVATING EXAMPLE

This section presents an illustrative example with 10 agents and 5 goods, where each good has a maximum availability of 4 units. The scenario shows that a simple greedy initial allocation can respect the multiplicity constraints and still fail to achieve optimal satisfaction. Using preferences predicted by a machine learning model, we can then target the agents that might negotiate to improve the allocation and increase overall satisfaction.

We consider 10 children $A = \{a_1, a_2, \dots, a_{10}\}$ and a pool of 5 goods $G = \{g_1, g_2, g_3, g_4, g_5\}$, each with a multiplicity of 4. Each child has a valuation function $v_i : G \to \{15, 10, 5, 0\}$ representing their preferences for the goods. The top three preferences of each child are shown in Table 1.

An initial allocation respecting the multiplicities (no good allocated to more than 4 children) is:

$$O_1 = \{g_1\},\ O_2 = \{g_1\},\ O_3 = \{g_1\},\ O_4 = \{g_1\},\ O_5 = \{g_2\},\ O_6 = \{g_2\},\ O_7 = \{g_3\},\ O_8 = \{g_3\},\ O_9 = \{g_4\},\ O_{10} = \{g_5\}.$$

The overall satisfaction of the initial allocation is:

$$v_A(O) = \sum_{i=1}^{10} v_i(O_i) = 15 + 15 + 15 + 15 + 10 + 15 + 15 + 10 + 15 + 0 = 125.$$

Through negotiation, the children can adjust the allocation to improve overall satisfaction:

• $a_5$, who receives $g_2$ (value 10), negotiates with $a_{10}$ to swap $g_2$ for $g_1$, as $g_1$ is $a_5$'s top preference and $g_2$ is $a_{10}$'s second preference.

• $a_{10}$, who currently receives $g_5$ (value 0), negotiates with $a_7$ to swap $g_5$ for $g_3$, as $g_3$ is $a_{10}$'s top preference and $g_5$ is in $a_7$'s top three.

After negotiation, the final allocation is:

$$O^*_1 = \{g_1\},\ O^*_2 = \{g_1\},\ O^*_3 = \{g_1\},\ O^*_4 = \{g_1\},\ O^*_5 = \{g_1\},\ O^*_6 = \{g_2\},\ O^*_7 = \{g_2\},\ O^*_8 = \{g_3\},\ O^*_9 = \{g_4\},\ O^*_{10} = \{g_3\}.$$

The overall satisfaction of the final allocation is:

$$v_A(O^*) = \sum_{i=1}^{10} v_i(O^*_i) = 150.$$

3 RESOURCE ALLOCATION

A resource allocation problem is defined as a tuple $(A, G, M, V)$, where:

• $A = \{a_1, a_2, \dots, a_n\}$ is the set of $n \geq 2$ agents.

• $G = \{g_1, g_2, \dots, g_m\}$ is the set of $m \geq 1$ goods.

• $M = \{m_1, m_2, \dots, m_m\}$ gives the multiplicity $m_j \geq 1$ of each good $g_j \in G$, i.e. the maximum number of agents that can receive $g_j$. The total availability of goods is $\sum_{j=1}^{m} m_j$.

• $V = \{v_1, v_2, \dots, v_n\}$ is the set of ordinal preference functions, one for each agent $a_i \in A$. Each $v_i$ defines a strict ranking over the goods $G$: for any two goods $g_j, g_k \in G$, $v_i(g_j) < v_i(g_k)$ implies that agent $a_i$ strictly prefers $g_j$ to $g_k$.

An allocation $O = \{O_1, O_2, \dots, O_n\}$ is a mapping of goods to agents, where $O_i \subseteq G$ denotes the subset of goods allocated to agent $a_i$. The allocation must satisfy the multiplicity constraints:

$$\sum_{a_i \in A} \mathbb{1}_{g_j \in O_i} \leq m_j, \quad \forall g_j \in G,$$

where $\mathbb{1}_{g_j \in O_i}$ is an indicator function that equals 1 if $g_j \in O_i$ and 0 otherwise.
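The multiplicity constraint can be checked mechanically. The Python sketch below is only an illustration. The function name `is_feasible` and the dictionary encoding of agents, goods and bundles are our own assumptions, not part of the formal model. For each good, the function counts how many agents' bundles contain it, which is exactly the sum of indicators above.

```python
from collections import Counter


def is_feasible(allocation: dict[str, set[str]], multiplicity: dict[str, int]) -> bool:
    """Return True if every good g_j is held by at most m_j agents.

    allocation maps each agent to its bundle O_i (a set, so an agent holds a good at most once);
    multiplicity maps each good g_j to m_j.
    """
    # holders[g] = sum over agents of the indicator 1[g in O_i]
    holders = Counter(g for bundle in allocation.values() for g in bundle)
    unknown_goods = set(holders) - set(multiplicity)
    return not unknown_goods and all(holders[g] <= m for g, m in multiplicity.items())


multiplicity = {"g1": 1, "g2": 2}
print(is_feasible({"a1": {"g1"}, "a2": {"g2"}, "a3": {"g2"}}, multiplicity))  # True
print(is_feasible({"a1": {"g1"}, "a2": {"g1"}}, multiplicity))                # False
```

Because bundles are sets, each agent contributes at most 1 to a good's count, just as the indicator function does.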

The (individual) satisfaction of agent a

i

is a func-

tion S

i

that associates to each subset G

′

⊆ G of goods

Prediction-Based Selective Negotiation for Refining Multi-Agent Resource Allocation

657

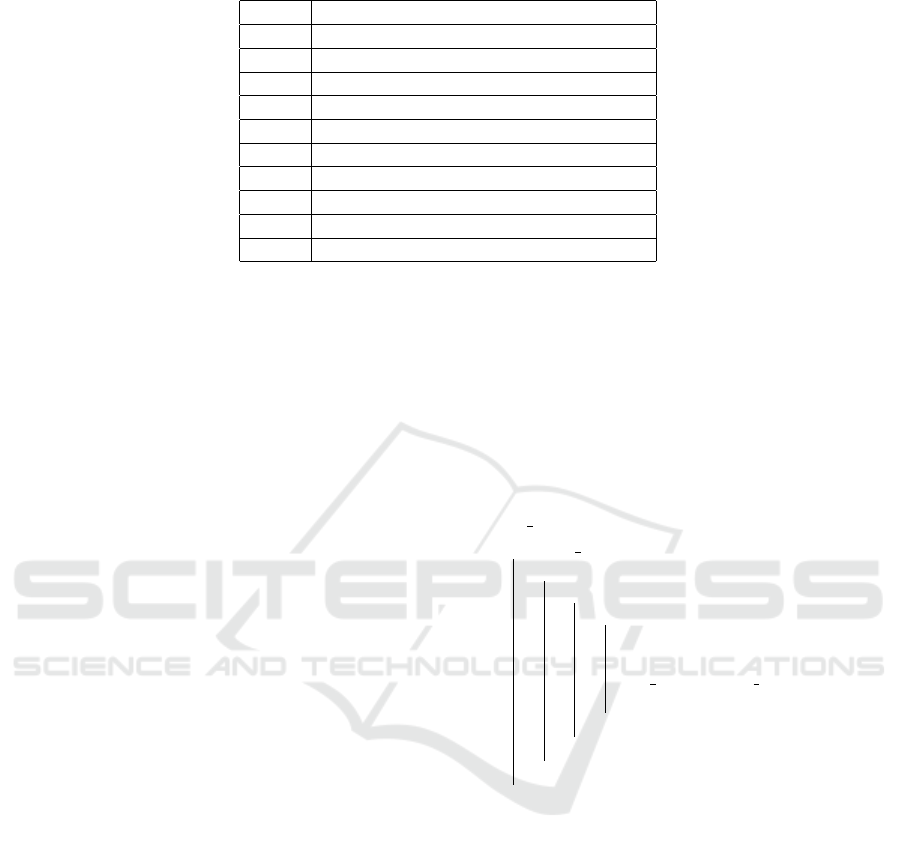

Table 1: Top 3 goods and their valuations for each child.

Child Top 3 Goods (Values v

i

(g))

a

1

v

1

(g

1

) = 15, v

1

(g

2

) = 10, v

1

(g

3

) = 5

a

2

v

2

(g

1

) = 15, v

2

(g

3

) = 10, v

2

(g

4

) = 5

a

3

v

3

(g

1

) = 15, v

3

(g

4

) = 10, v

3

(g

5

) = 5

a

4

v

4

(g

1

) = 15, v

4

(g

5

) = 10, v

4

(g

2

) = 5

a

5

v

5

(g

1

) = 15, v

5

(g

2

) = 10, v

5

(g

3

) = 5

a

6

v

6

(g

1

) = 15, v

6

(g

3

) = 10, v

6

(g

4

) = 5

a

7

v

7

(g

1

) = 15, v

7

(g

4

) = 10, v

7

(g

5

) = 5

a

8

v

8

(g

2

) = 15, v

8

(g

3

) = 10, v

8

(g

4

) = 5

a

9

v

9

(g

2

) = 15, v

9

(g

3

) = 10, v

9

(g

5

) = 5

a

10

v

10

(g

3

) = 15, v

10

(g

4

) = 10, v

10

(g

1

) = 5

a positive real value S

i

(G

′

). This value is strongly de-

pendent of the preferences values v

i

(g), for g ∈ G

′

.

The satisfaction of an allocation $O$ is the sum of the satisfactions of the agents for their respective allocations: $S(O) = \sum_{i=1}^{n} S_i(O_i)$. The objective is to find an allocation $O^*$ that maximizes satisfaction over all possible allocations.
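The definition leaves $S_i$ generic. For illustration only, assume additive satisfaction, $S_i(G') = \sum_{g \in G'} v_i(g)$, with cardinal values such as those of Table 1. In this sketch, goods an agent does not list are worth 0. Under these assumptions, a minimal Python version of $S_i$ and $S(O)$ is as follows (the function names are hypothetical):

```python
def agent_satisfaction(values: dict[str, float], bundle: set[str]) -> float:
    """Additive S_i(G') = sum of v_i(g) over g in G'; unlisted goods count as 0 (assumption)."""
    return sum(values.get(g, 0) for g in bundle)


def total_satisfaction(values: dict[str, dict[str, float]], allocation: dict[str, set[str]]) -> float:
    """S(O) = sum over agents of S_i(O_i)."""
    return sum(agent_satisfaction(values[a], bundle) for a, bundle in allocation.items())


# Values of children a1 and a10 taken from Table 1.
values = {"a1": {"g1": 15, "g2": 10, "g3": 5}, "a10": {"g3": 15, "g4": 10, "g1": 5}}
print(total_satisfaction(values, {"a1": {"g1"}, "a10": {"g5"}}))  # 15
```

Additive satisfaction with non-negative values is monotone, which is the property assumed for the satisfaction functions in Section 5.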

A straightforward algorithm allocates goods to agents sequentially, based on their ordinal preferences. For each agent $a_i \in A$ (in a predefined order), the algorithm tries to allocate $a_i$'s most preferred good $g_{(1)}$. If $g_{(1)}$ is no longer available, it tries the next most preferred good $g_{(2)}$, and so on, until either a good is allocated or none of $a_i$'s preferred goods is available.

This greedy approach gives each agent the best good still available when its turn comes, and it prioritizes agents in the order in which they are processed. To keep the pseudocode simple, Algorithm 1 uses the command "Proceed to the next agent". As soon as an agent receives a good, the algorithm moves on to the next agent, which spreads the goods equitably across agents. After the last agent, it restarts from the first one as long as there are unallocated goods and agents $a_i$ with $O_i \neq G$. In general, this algorithm does not yield optimal overall satisfaction.

4 PREDICTIVE-BASED RESOURCE ALLOCATION

In this section, we introduce the concepts that underpin the two-step framework for refining multi-agent resource allocation. The first step relies on the aggregated preferences of agents, calculated with the social choice methods outlined in Section 4.1 and detailed in (Brandt et al., 2016). The second step uses classifiers (Jordan and Mitchell, 2015), discussed in Section 4.2, to identify pairs of agents for good-swapping, inspired by Condorcet principles.

Input: $A = \{a_1, a_2, \dots, a_n\}$ (agents in a predefined order),
       $G = \{g_1, g_2, \dots, g_m\}$ (set of goods),
       $M = \{m_1, m_2, \dots, m_m\}$ (multiplicities of goods),
       $V = \{v_1, v_2, \dots, v_n\}$ (ordinal preference functions for each agent).
Output: An allocation $O = \{O_1, O_2, \dots, O_n\}$, where $O_i$ is the set of goods allocated to agent $a_i$.

Initialize $O_i \leftarrow \emptyset$ for all $a_i \in A$;
No_goods $\leftarrow \sum_{j=1}^{m} m_j$;
while No_goods $> 0$ do
    foreach $a_i \in A$ do
        foreach $g_j \in G$ in the order given by $v_i$ do
            if $m_j > 0$ and $g_j \notin O_i$ then
                $O_i \leftarrow O_i \cup \{g_j\}$;
                $m_j \leftarrow m_j - 1$;
                No_goods $\leftarrow$ No_goods $- 1$;
                Proceed to the next agent;
            end
        end
    end
    if no good was allocated during this pass then stop;
end
return $O$

Algorithm 1: Simple Allocation Algorithm.
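A direct Python transcription of Algorithm 1 is sketched below. It assumes that each agent's ranking is given as a list of good names ordered from most to least preferred; the function and argument names are hypothetical. The inner `break` plays the role of "Proceed to the next agent". The pass-level check stops the loop when a full pass allocates nothing. Without it, the loop would never terminate when every remaining unit belongs to a good that its possible recipients already hold.

```python
def simple_allocation(agents, rankings, multiplicity):
    """Greedy round-robin allocation in the spirit of Algorithm 1.

    agents: agent names in the predefined (lexicographical) order.
    rankings: agent -> list of goods, most preferred first (the order given by v_i).
    multiplicity: good -> m_j.
    """
    remaining = dict(multiplicity)          # copy, since m_j is decremented
    allocation = {a: set() for a in agents}
    no_goods = sum(remaining.values())
    while no_goods > 0:
        allocated_this_pass = False
        for a in agents:
            for g in rankings[a]:
                if remaining.get(g, 0) > 0 and g not in allocation[a]:
                    allocation[a].add(g)
                    remaining[g] -= 1
                    no_goods -= 1
                    allocated_this_pass = True
                    break                   # "Proceed to the next agent"
        if not allocated_this_pass:
            break                           # nobody can take any remaining unit
    return allocation


rankings = {"a1": ["g1", "g2"], "a2": ["g1", "g2"], "a3": ["g2", "g1"]}
print(simple_allocation(["a1", "a2", "a3"], rankings, {"g1": 1, "g2": 2}))
# {'a1': {'g1'}, 'a2': {'g2'}, 'a3': {'g2'}}
```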

4.1 Social Choice

Let us now define an aggregated preference $v_A$ that combines the individual preferences $v_1, v_2, \dots, v_n$ of all agents $A = \{a_1, a_2, \dots, a_n\}$ into a single collective preference over the goods $G = \{g_1, g_2, \dots, g_m\}$. The aggregated preference $v_A$ can be formally expressed as:

$$v_A = \mathrm{Aggregation}(v_1, v_2, \dots, v_n),$$

where $\mathrm{Aggregation}$ is the function (e.g., Borda or Condorcet) used to combine the individual preferences into a collective ranking.

In the Borda method, each good is assigned a score based on its ordinal rank in each agent's preference. The aggregated preference $v_A$ is defined as:

$$v_A(g_j) = \sum_{i=1}^{n} \bigl(m - v_i(g_j) + 1\bigr),$$

where:

• $v_i(g_j) \in \{1, \dots, m\}$ is the rank of $g_j$ in agent $a_i$'s preference list (lower ranks indicate higher preference),

• $(m - v_i(g_j) + 1)$ converts the rank into a score (higher scores indicate higher preference). An agent's top-ranked good receives $m$ points and its last-ranked good receives 1 point. Shifting all scores by the same constant, e.g. using $m - v_i(g_j)$ as in the example below, does not change the resulting ranking.

The goods are then ordered by descending $v_A(g_j)$ to form the aggregated preference.
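As an illustration of Borda aggregation, the sketch below computes scores in which an agent's top-ranked good receives $m$ points and its last-ranked good 1 point, where $m$ is the number of goods. It then sorts the goods by descending score. For convenience we assume that rankings are best-first lists of good names. The text does not specify how ties are broken, so the tie-breaking used here (keeping the order of `goods`) is an arbitrary choice.

```python
def borda_scores(rankings, goods):
    """v_A(g) = sum over agents of (m - rank + 1), ranks starting at 1 (best = m points)."""
    m = len(goods)
    scores = {g: 0 for g in goods}
    for ranking in rankings:                    # one strict best-first ranking per agent
        for rank, g in enumerate(ranking, start=1):
            scores[g] += m - rank + 1
    return scores


def borda_ranking(rankings, goods):
    """Goods by descending Borda score; ties keep the order of `goods` (sorted is stable)."""
    scores = borda_scores(rankings, goods)
    return sorted(goods, key=lambda g: -scores[g])


profile = [["g1", "g2", "g3"], ["g1", "g3", "g2"], ["g2", "g1", "g3"]]
print(borda_scores(profile, ["g1", "g2", "g3"]))   # {'g1': 8, 'g2': 6, 'g3': 4}
print(borda_ranking(profile, ["g1", "g2", "g3"]))  # ['g1', 'g2', 'g3']
```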

In the Condorcet method, the aggregated preference is determined through pairwise comparisons. For each pair of goods $(g_j, g_k)$, a preference matrix $M$ is constructed:

$$M(g_j, g_k) = \sum_{i=1}^{n} \mathbb{1}_{v_i(g_j) < v_i(g_k)},$$

where:

• $M(g_j, g_k)$ is the number of agents preferring $g_j$ to $g_k$,

• $\mathbb{1}_{v_i(g_j) < v_i(g_k)} = 1$ if agent $a_i$ ranks $g_j$ higher than $g_k$, and 0 otherwise.

A good $g_j$ is a Condorcet winner if it is preferred to every other good in pairwise comparisons:

$$M(g_j, g_k) > M(g_k, g_j) \quad \forall g_k \neq g_j.$$
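The pairwise matrix $M$ and the Condorcet-winner test translate directly into code. In this sketch (names and encoding are ours), `pairwise_matrix` returns $M(g_j, g_k)$ for every ordered pair of distinct goods. `condorcet_winner` returns `None` when no good beats all the others, which can happen when the majority relation is cyclic.

```python
from itertools import permutations


def pairwise_matrix(rankings, goods):
    """M[(gj, gk)] = number of agents ranking gj strictly above gk."""
    positions = [{g: r for r, g in enumerate(ranking)} for ranking in rankings]
    return {
        (gj, gk): sum(1 for pos in positions if pos[gj] < pos[gk])
        for gj, gk in permutations(goods, 2)
    }


def condorcet_winner(rankings, goods):
    """Return the good beating every other good head to head, or None if there is none."""
    M = pairwise_matrix(rankings, goods)
    for gj in goods:
        if all(M[(gj, gk)] > M[(gk, gj)] for gk in goods if gk != gj):
            return gj
    return None


goods = ["g1", "g2", "g3"]
print(condorcet_winner([["g1", "g2", "g3"], ["g1", "g3", "g2"], ["g2", "g1", "g3"]], goods))  # g1
print(condorcet_winner([["g1", "g2", "g3"], ["g2", "g3", "g1"], ["g3", "g1", "g2"]], goods))  # None (cycle)
```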

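An aggregation function for collective preferences should follow several key principles to ensure fairness and rationality: Pareto efficiency, Non-Dictatorship, Independence of Irrelevant Alternatives (IIA), and Anonymity. In the definitions below, $v_A(g)$ denotes the position of $g$ in the collective ranking. As for the individual $v_i$, lower values indicate higher preference.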

Pareto Efficiency requires that if all agents prefer a good $g_j$ over another good $g_k$, the collective preference $v_A$ ranks $g_j$ higher than $g_k$. Formally, if

$$v_i(g_j) < v_i(g_k) \quad \forall a_i \in A,$$

then the aggregated preference must satisfy:

$$v_A(g_j) < v_A(g_k).$$

Non-Dictatorship ensures that no single agent $a_i \in A$ can unilaterally determine the collective preference $v_A$. An agent's preferences may coincide with the collective ranking, for instance when all agents agree, but they must not always prevail. Formally, for every agent $a_i \in A$ there is some preference profile and some pair of goods $g_j, g_k \in G$ such that

$$v_i(g_j) < v_i(g_k) \quad \text{and} \quad v_A(g_k) < v_A(g_j).$$

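Independence of Irrelevant Alternatives (IIA) ensures that the collective ranking of two goods $g_j$ and $g_k$ depends only on their relative rankings in the individual preferences. It is unaffected by how agents rank the other goods, and hence by the presence or absence of other goods. Formally, consider any two preference profiles $(v_1, \dots, v_n)$ and $(v'_1, \dots, v'_n)$ such that

$$v_i(g_j) < v_i(g_k) \iff v'_i(g_j) < v'_i(g_k) \quad \forall a_i \in A.$$

Then the corresponding aggregated preferences $v_A$ and $v'_A$ must order $g_j$ and $g_k$ in the same way:

$$v_A(g_j) < v_A(g_k) \iff v'_A(g_j) < v'_A(g_k).$$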

Anonymity requires that the aggregation mechanism treats all agents equally, i.e. the outcome is invariant under any permutation of the agent indices.

The pairwise majority comparison that underlies the Condorcet method satisfies Pareto efficiency, since a unanimously preferred good wins its pairwise comparison against the other good. It also respects Non-Dictatorship and Anonymity, as all agents are treated symmetrically in the aggregation process. The comparison of $g_j$ and $g_k$ depends only on how the agents rank these two goods, so it also satisfies IIA. However, the pairwise majority relation can be cyclic. As a result, a Condorcet winner, and more generally a transitive collective ranking, might not always exist (Arrow, 2012).

The Borda method respects Anonymity, as the scoring mechanism treats all agents equally. It also satisfies Pareto efficiency: if every agent ranks $g_j$ above $g_k$, each agent gives $g_j$ strictly more points than $g_k$, so $g_j$ obtains a strictly higher aggregate score. However, Borda violates IIA, because adding, removing or re-ranking other goods can change the relative scores of $g_j$ and $g_k$. Furthermore, Borda does not satisfy the Condorcet criterion: when a Condorcet winner exists, Borda may rank another good first (Arrow, 2012).

As an example, consider four agents $A = \{a_1, a_2, a_3, a_4\}$ and three goods $G = \{g_1, g_2, g_3\}$ with the following preferences:

$$a_1: g_1 \succ g_2 \succ g_3, \qquad a_2: g_2 \succ g_3 \succ g_1,$$
$$a_3: g_3 \succ g_1 \succ g_2, \qquad a_4: g_3 \succ g_2 \succ g_1.$$

Using Borda, points are assigned as 2 for first place, 1 for second, and 0 for third:

$$g_1: 3, \quad g_2: 4, \quad g_3: 5.$$

The Borda ranking is:

$$g_3 \succ g_2 \succ g_1.$$

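For Condorcet, each pair of goods is compared across agents:

$$g_1 \text{ vs. } g_2: 2 \text{ votes each (tie)},$$
$$g_1 \text{ vs. } g_3: 1 \text{ vote for } g_1 \ (a_1),\ 3 \text{ votes for } g_3 \ (a_2, a_3, a_4) \ (g_3 \text{ wins}),$$
$$g_2 \text{ vs. } g_3: 2 \text{ votes each (tie)}.$$

No good wins all of its pairwise comparisons ($g_3$ beats $g_1$ but only ties with $g_2$), so no Condorcet winner exists.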
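The figures of this example can be recomputed with a few lines of Python. This is a verification sketch and the variable names are ours. Borda points are $m - \text{rank}$ with ranks starting at 1, i.e. 2, 1 and 0 as above, and each pair of goods is compared head to head.

```python
from itertools import combinations

# The four-agent profile of the example (each list is best-first).
profile = {
    "a1": ["g1", "g2", "g3"],
    "a2": ["g2", "g3", "g1"],
    "a3": ["g3", "g1", "g2"],
    "a4": ["g3", "g2", "g1"],
}
goods = ["g1", "g2", "g3"]

# Borda with 2/1/0 points for first/second/third place.
borda = {g: sum(len(goods) - 1 - r.index(g) for r in profile.values()) for g in goods}

# Head-to-head vote counts (votes for the first good, votes for the second) per pair.
duels = {
    (gj, gk): (
        sum(r.index(gj) < r.index(gk) for r in profile.values()),
        sum(r.index(gk) < r.index(gj) for r in profile.values()),
    )
    for gj, gk in combinations(goods, 2)
}


def beats(gj, gk):
    """True if strictly more agents prefer gj to gk than the reverse."""
    if (gj, gk) in duels:
        for_j, for_k = duels[(gj, gk)]
    else:
        for_k, for_j = duels[(gk, gj)]
    return for_j > for_k


# A Condorcet winner must strictly beat every other good.
winners = [g for g in goods if all(beats(g, h) for h in goods if h != g)]
print(borda)    # {'g1': 3, 'g2': 4, 'g3': 5}
print(duels)    # {('g1', 'g2'): (2, 2), ('g1', 'g3'): (1, 3), ('g2', 'g3'): (2, 2)}
print(winners)  # []
```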

4.2 Preference Classifiers

Classifiers in Machine Learning analyse data features to learn patterns that distinguish between different categories. Features are measurable attributes or properties of the data that are considered relevant for the classification task. The classifier evaluates these features to identify patterns or decision boundaries that separate classes.

A classifier can be formalized as a function $f : \mathcal{X} \to \mathcal{Y}$, where:

• $\mathcal{X}$ is the input space, i.e. the set of possible feature vectors $x = (x_1, x_2, \dots, x_d) \in \mathcal{X}$, where $d$ is the number of features.

• $\mathcal{Y}$ is the output space, which contains the set of possible labels or classes, typically $\mathcal{Y} = \{1, 2, \dots, C\}$, where $C$ is the number of classes.

• $f(x; \theta)$ is the decision function, parameterized by $\theta$, which maps input features $x$ to a predicted class $\hat{y} \in \mathcal{Y}$.

The classifier is trained on a dataset $D = \{(x_i, y_i)\}_{i=1}^{N}$, where $x_i \in \mathcal{X}$ are feature vectors and $y_i \in \mathcal{Y}$ are the corresponding ground-truth labels. Training optimizes the parameters $\theta$ by minimizing a loss function $L$. The loss measures the discrepancy between the predicted labels $\hat{y}_i = f(x_i; \theta)$ and the true labels $y_i$:

$$\hat{\theta} = \arg\min_{\theta} \frac{1}{N} \sum_{i=1}^{N} L\bigl(f(x_i; \theta), y_i\bigr).$$

The classifier can also incorporate a hypothesis space $\mathcal{H}$, representing the set of all possible decision functions $f$ that can be chosen given the parameterization:

$$f \in \mathcal{H}, \quad \mathcal{H} = \{ f(x; \theta) \mid \theta \in \Theta \},$$

where $\Theta$ is the parameter space.

Once trained, the classifier predicts the label for a new input $x^*$ by computing:

$$\hat{y} = \arg\max_{y \in \mathcal{Y}} P(y \mid x^*; \hat{\theta}),$$

where $P(y \mid x^*; \hat{\theta})$ represents the model's estimated probability of class $y$ for the input $x^*$, given the optimized parameters $\hat{\theta}$.
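The paper does not fix a particular classifier. As an illustration of the prediction rule above, the sketch below uses a k-nearest-neighbour classifier. This is our choice, not the authors'. Its estimate of $P(y \mid x^*)$ is the fraction of the $k$ closest training points labelled $y$, so the most common label among them is the argmax. Labels here are whole preference rankings, as in the predictive setting below. The features, labels and the name `knn_predict` are made up for illustration.

```python
import math
from collections import Counter


def knn_predict(train, x_star, k=3):
    """Return argmax_y P(y | x*), with P(y | x*) estimated as the share of the
    k nearest training points (Euclidean distance) whose label is y.

    train: list of (feature_vector, label) pairs; a label may be any hashable
    value, e.g. a tuple encoding a predicted ranking of goods.
    """
    nearest = sorted(train, key=lambda pair: math.dist(pair[0], x_star))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Made-up two-feature profiles labelled with best-first rankings of three goods.
train = [
    ((1.0, 0.0), ("g1", "g2", "g3")),
    ((1.2, 0.1), ("g1", "g2", "g3")),
    ((0.9, 0.2), ("g1", "g2", "g3")),
    ((5.0, 5.0), ("g3", "g1", "g2")),
    ((5.2, 4.8), ("g3", "g1", "g2")),
]
print(knn_predict(train, (1.1, 0.1)))  # ('g1', 'g2', 'g3')
```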

The Predictive-Based Resource Allocation Problem extends the traditional resource allocation problem with classifiers that predict preferences from agent features. It is defined as a tuple

$$(A, G, M, \mathcal{F}, \mathcal{C}),$$

where:

• $A = \{a_1, a_2, \dots, a_n\}$ is the set of $n$ agents.

• $G = \{g_1, g_2, \dots, g_m\}$ is the set of $m$ goods.

• $M = \{m_1, m_2, \dots, m_m\}$ specifies, for each good $g_j$, the maximum number $m_j$ of agents that can receive it, with $\sum_{j=1}^{m} m_j \geq n$.

• $\mathcal{F}$ is the feature space, where each agent $a_i$ is associated with a feature vector $x_i = (f_{i1}, \dots, f_{ip})$.

• $\mathcal{C}$ is the set of classifiers, where each classifier $f_{c_k} : \mathcal{X} \to \mathcal{Y}$ predicts the preference rankings of agents from their features.

For each agent $a_i$, the predicted preference ranking over goods is given by:

$$\hat{v}_i = f_{c_k}(x_i),$$

where $f_{c_k}$ is a classifier applied to $x_i$, and $\hat{v}_i$ defines the predicted strict ranking of the goods $G$.

An allocation $O = \{O_1, \dots, O_n\}$ maps subsets of goods to agents and satisfies the multiplicity constraints:

$$\sum_{a_i \in A} \mathbb{1}_{g_j \in O_i} \leq m_j, \quad \forall g_j \in G,$$

where $\mathbb{1}_{g_j \in O_i} = 1$ if $g_j \in O_i$, and 0 otherwise.

The satisfaction of agent $a_i$ is a function $S_i$ that associates to each subset $G' \subseteq G$ of goods a positive real value $S_i(G')$. Note that here, the individual satisfaction $S_i$ of agent $a_i$ depends on the predicted preference ranking $\hat{v}_i$. The satisfaction of an allocation $O$ is the sum of the satisfactions of the agents for their respective allocations: $S(O) = \sum_{i=1}^{n} S_i(O_i)$. The objective is to find an allocation $O^*$ that maximizes satisfaction.

5 OPTIMISING THE ALLOCATION

The algorithm introduced in this section operates in four phases, each presented as a distinct algorithm for readability. The first phase is the naive allocation based on the lexicographical order of agents. It is identical to the algorithm described in Section 3 and is repeated here for completeness. In the second phase, the Borda aggregation is computed, and the third phase uses it to perform a Borda-based allocation of goods. Finally, in the fourth phase, the allocation is refined through classifier-driven swaps to further enhance satisfaction. The last two phases form the two-step framework for prediction-based refinement of the allocation.

In the second phase, the Borda method is applied to aggregate individual preferences into a collective ranking of goods. Each good receives a score based on its rank in the agents' preference lists. The goods are then ordered by score to produce a collective preference ranking that reflects the priorities of the group.


Input: $A = \{a_1, a_2, \dots, a_n\}$: set of agents;
       $G = \{g_1, g_2, \dots, g_m\}$: set of goods;
       $M = \{m_1, m_2, \dots, m_m\}$: multiplicities of goods;
       $\{v_1, v_2, \dots, v_n\}$: agents' preferences over goods;
       a lexicographical order on $A$.
Output: Initial allocation $O = \{O_1, O_2, \dots, O_n\}$.

Construct allocation $O$ using Algorithm 1 (Section 3) or any other heuristic;
Compute the overall satisfaction $S(O)$ of the initial allocation;
return $O$;

Algorithm 2: Phase 1: Initial Allocation.

Input: $A = \{a_1, a_2, \dots, a_n\}$: set of agents;
       $G = \{g_1, g_2, \dots, g_m\}$: set of goods;
       $\{v_1, v_2, \dots, v_n\}$: agents' preferences over goods.
Output: Aggregated preference $v_A$.

foreach $g_j \in G$ do
    Compute the Borda score: $B(g_j) = \sum_{i=1}^{n} \mathrm{score}(g_j \text{ in } v_i)$;
end
Sort $G$ in descending order of Borda scores to form the aggregated preference $v_A$;
return $v_A$;

Algorithm 3: Phase 2: Aggregated Preferences (Borda Method).

In the third phase, the algorithm uses the Borda ranking to extend the allocation and improve overall satisfaction according to the collective preferences. It starts with the most preferred good in the collective ranking and allocates its remaining units to agents that do not yet hold it. In this way, as many agents as possible receive the goods ranked highest by the group. The process then continues with the next goods in the collective ranking until the available goods are exhausted.

In the final phase, predictive models are used to adjust the allocation further. For each agent, a classifier predicts preferences from the agent's feature profile. The algorithm identifies agents whose predicted preferences differ from their true preferences and proposes swaps with other agents to increase the overall satisfaction of the allocation. The swaps are performed iteratively to improve the alignment of the allocation. More precisely, a swap between agents $a_i$ and $a_k$ means identifying goods $g \in O_i$ and $g' \in O_k$ such that

$$S_i\bigl((O_i \setminus \{g\}) \cup \{g'\}\bigr) + S_k\bigl((O_k \setminus \{g'\}) \cup \{g\}\bigr) > S_i(O_i) + S_k(O_k).$$
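Once $S_i$ is fixed, the swap condition can be tested directly. The sketch below assumes additive satisfaction over cardinal values, as in Section 2. `swap_gain` and `is_improving_swap` are hypothetical helper names. The check also rejects swaps that would give an agent a good it already holds.

```python
def _additive(values, bundle):
    # Additive satisfaction; goods without a listed value are worth 0 (assumption).
    return sum(values.get(g, 0) for g in bundle)


def swap_gain(values_i, values_k, bundle_i, bundle_k, g, g_prime):
    """Change in S_i + S_k when a_i gives g to a_k and receives g_prime in return."""
    new_i = (bundle_i - {g}) | {g_prime}
    new_k = (bundle_k - {g_prime}) | {g}
    before = _additive(values_i, bundle_i) + _additive(values_k, bundle_k)
    after = _additive(values_i, new_i) + _additive(values_k, new_k)
    return after - before


def is_improving_swap(values_i, values_k, bundle_i, bundle_k, g, g_prime):
    """The swap condition: g in O_i, g' in O_k, and a strict increase of S_i + S_k."""
    valid = g in bundle_i and g_prime in bundle_k and g not in bundle_k and g_prime not in bundle_i
    return valid and swap_gain(values_i, values_k, bundle_i, bundle_k, g, g_prime) > 0


v1 = {"g1": 15, "g2": 10, "g3": 5}    # a1 in Table 1
v10 = {"g3": 15, "g4": 10, "g1": 5}   # a10 in Table 1
print(swap_gain(v1, v10, {"g3"}, {"g1"}, "g3", "g1"))          # 20
print(is_improving_swap(v1, v10, {"g1"}, {"g3"}, "g1", "g3"))  # False
```

With the Table 1 values, suppose $a_1$ holds $g_3$ and $a_{10}$ holds $g_1$. Exchanging the two goods raises $S_1 + S_{10}$ from $5 + 5$ to $15 + 15$, and the reverse exchange lowers it by the same amount.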

Input: $A = \{a_1, a_2, \dots, a_n\}$: set of agents;
       $G = \{g_1, g_2, \dots, g_m\}$: set of goods;
       aggregated preference $v_A$;
       current allocation $O$;
       $\{m'_1, m'_2, \dots, m'_m\}$: current multiplicities of the available goods ($m'_j > 0$).
Output: Updated allocation $O$.

foreach $g_j \in G$ (in $v_A$-descending order) do
    foreach $a_i \in A$ do
        if $m'_j > 0$ and $g_j \notin O_i$ then
            Allocate $g_j$ to $a_i$: $O_i \leftarrow O_i \cup \{g_j\}$;
            Update $m'_j \leftarrow m'_j - 1$;
        end
    end
end
return $O$;

Algorithm 4: Phase 3: Satisfaction Aggregated Preference Boosting.
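Algorithm 4 can be sketched in Python as follows. The sketch assumes that the collective order is a list of goods sorted by descending Borda score and that the remaining multiplicities $m'_j$ are stored in a dictionary; both encodings, and the name `borda_boost`, are our assumptions. The function returns new objects rather than mutating its inputs.

```python
def borda_boost(agents, collective_order, allocation, remaining):
    """Phase 3 sketch: distribute leftover units in the order of the collective ranking.

    agents: agents in their fixed processing order.
    collective_order: goods sorted by descending Borda score.
    allocation: agent -> current bundle O_i.
    remaining: good -> current multiplicity m'_j of units still available.
    """
    remaining = dict(remaining)                               # work on copies
    allocation = {a: set(bundle) for a, bundle in allocation.items()}
    for g in collective_order:                                # most collectively preferred first
        for a in agents:
            if remaining.get(g, 0) > 0 and g not in allocation[a]:
                allocation[a].add(g)                          # O_i <- O_i | {g_j}
                remaining[g] -= 1                             # m'_j <- m'_j - 1
    return allocation, remaining


O, left = borda_boost(["a1", "a2", "a3"], ["g2", "g1"],
                      {"a1": {"g1"}, "a2": {"g2"}, "a3": set()}, {"g1": 1, "g2": 1})
print(O)     # a1 and a2 now both hold g1 and g2; a3 is unchanged
print(left)  # {'g1': 0, 'g2': 0}
```

This phase only adds goods to bundles, so any monotone $S_i$ cannot decrease during it.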

Input: $A = \{a_1, a_2, \dots, a_n\}$: set of agents;
       $G = \{g_1, g_2, \dots, g_m\}$: set of goods;
       feature space $\mathcal{F}$;
       classifiers $\mathcal{C}$; current allocation $O$.
Output: Optimized allocation $O^*$.

foreach $a_i \in A$ do
    Predict preferences using a classifier: $\hat{v}_i = f_{c_k}(x_i)$;
    if $\hat{v}_i \neq v_i$ then
        Identify agents $a_k$ such that a swap with $a_i$ is possible;
        Propose swaps between $a_i$ and $a_k$ to improve satisfaction;
        Update $O_i$ and $O_k$ if the swaps are accepted;
    end
end
return $O^* = O$;

Algorithm 5: Phase 4: Optimization.
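Algorithm 5 leaves open how partners are chosen and when a swap is accepted. The Python sketch below fills these gaps with assumptions of our own; it is not the authors' procedure. The assumptions are:

- The classifier outputs are already computed and expressed as predicted cardinal values.
- An agent triggers negotiation when its predicted values differ from its declared ones.
- Satisfaction is additive and measured with the predicted values.
- The first swap that strictly increases $S_i + S_k$ is accepted.

All names in the sketch are hypothetical.

```python
from itertools import product


def satisfaction(values, bundle):
    # Additive satisfaction; goods without a listed value count as 0 (assumption).
    return sum(values.get(g, 0) for g in bundle)


def phase4_swaps(agents, allocation, declared, predicted):
    """Phase 4 sketch. For each agent whose predicted values differ from its declared
    ones, apply the first swap with another agent that strictly increases S_i + S_k,
    where satisfaction is measured with the predicted values."""
    O = {a: set(bundle) for a, bundle in allocation.items()}
    for ai in agents:
        if predicted[ai] == declared[ai]:
            continue                      # prediction agrees: no negotiation triggered
        swapped = False
        for ak in agents:
            if ak == ai:
                continue
            for g, g2 in product(sorted(O[ai]), sorted(O[ak])):
                if g2 in O[ai] or g in O[ak]:
                    continue              # nobody may end up with two copies of a good
                new_i, new_k = (O[ai] - {g}) | {g2}, (O[ak] - {g2}) | {g}
                gain = (satisfaction(predicted[ai], new_i) + satisfaction(predicted[ak], new_k)
                        - satisfaction(predicted[ai], O[ai]) - satisfaction(predicted[ak], O[ak]))
                if gain > 0:              # the swap condition of Phase 4
                    O[ai], O[ak] = new_i, new_k
                    swapped = True
                    break
            if swapped:
                break
    return O


declared = {"a1": {"g2": 10, "g1": 5}, "a2": {"g1": 10, "g2": 5}}
predicted = {"a1": {"g1": 15, "g2": 5}, "a2": {"g1": 10, "g2": 5}}
print(phase4_swaps(["a1", "a2"], {"a1": {"g2"}, "a2": {"g1"}}, declared, predicted))
# {'a1': {'g1'}, 'a2': {'g2'}}
```

Each accepted swap strictly increases $S_i + S_k$ and leaves the other bundles untouched. The overall satisfaction, measured with the predicted values, therefore never decreases during this phase.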

The algorithm outputs an optimized allocation that improves on the naive approach by incorporating both collective preferences and predictions. Phases 1, 3 and 4 each make an implicit assumption: the (individual) satisfaction function $S_i$ of each agent $a_i$ is monotone:

$$G_1 \subseteq G_2 \subseteq G \implies S_i(G_1) \leq S_i(G_2).$$

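Phase 2 does not modify the allocation. Phase 3 only adds goods to the agents' bundles, so by monotonicity it cannot decrease any $S_i$. Phase 4 only applies swaps that satisfy the swap condition above, and each such swap strictly increases $S_i + S_k$ while leaving the other bundles unchanged. The following theorem therefore holds.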

Theorem 1. Let $O$ be the naive initial allocation and $O^*$ the final allocation produced by the Predictive-Based Resource Allocation Algorithm. If all satisfaction functions $S_i$ of the agents are monotone, then

$$S(O^*) \geq S(O),$$

where $S(\cdot)$ is the overall satisfaction of an allocation.


6 FUTURE WORK

In this paper, we proposed a predictive-based resource allocation algorithm that combines machine learning predictions with preference aggregation techniques to optimize resource allocation in multi-agent systems.

• Experimental validation is necessary to assess the framework's performance in diverse practical settings (cloud resource allocation, logistics, public goods distribution, etc.). This includes a formal computational complexity analysis of the proposed algorithm. It also includes measurements of execution time, memory usage and performance under varying distributions of preferences and goods, to assess the scalability of our approach.

• Apart from the theoretical study of properties such as envy-freeness or equitability, another notion to investigate is that of a "stable" allocation, in which agents have no incentive to swap further.

• When several agents are eligible for a swap based on their profiles and predicted preferences, criteria must be defined for choosing the most appropriate participants. This decision raises potential concerns about ethics and bias, particularly when prioritizing some agents could inadvertently favor certain groups over others (Hurwicz, 1973).

• Furthermore, the notion of overall satisfaction could be refined to incorporate subpopulation-specific goals. For instance, rather than optimizing global satisfaction, the algorithm could prioritize the satisfaction of specific subpopulations (e.g., AI agents vs. humans in hybrid societies) based on implicit or explicit norms (Aldewereld et al., 2016). As with the previous point, this issue is directly related to the fairness and ethical concerns of our approach.

REFERENCES

Aldewereld, H., Dignum, V., and Vasconcelos, W. W. (2016). Group norms for multi-agent organisations. ACM Transactions on Autonomous and Adaptive Systems (TAAS), 11(2):1–31.

Arrow, K. J. (2012). Social choice and individual values, volume 12. Yale University Press.

Brandt, F., Conitzer, V., Endriss, U., Lang, J., and Procaccia, A. D. (2016). Handbook of computational social choice. Cambridge University Press.

Chevaleyre, Y., Dunne, P. E., Endriss, U., Lang, J., Lemaitre, M., Maudet, N., Padget, J., Phelps, S., Rodríguez-Aguilar, J. A., and Sousa, P. (2005). Issues in multiagent resource allocation.

Croitoru, M. and Croitoru, C. (2011). Generalised network flows for combinatorial auctions. In 2011 IEEE/WIC/ACM International Conferences on Web Intelligence and Intelligent Agent Technology, volume 2, pages 313–316. IEEE.

Dafoe, A., Hughes, E., Bachrach, Y., Collins, T., McKee, K. R., Leibo, J. Z., Larson, K., and Graepel, T. (2020). Open problems in cooperative AI. arXiv preprint arXiv:2012.08630.

Hurwicz, L. (1973). The design of mechanisms for resource allocation. The American Economic Review, 63(2):1–30.

Ibaraki, T. and Katoh, N. (1988). Resource allocation problems: algorithmic approaches. MIT Press.

Jordan, M. I. and Mitchell, T. M. (2015). Machine learning: Trends, perspectives, and prospects. Science, 349(6245):255–260.

Katoh, N. and Ibaraki, T. (1998). Resource allocation problems. Handbook of Combinatorial Optimization: Volume 1–3, pages 905–1006.

Li, J., Ni, X., Yuan, Y., and Wang, F.-Y. (2018). A hierarchical framework for ad inventory allocation in programmatic advertising markets. Electronic Commerce Research and Applications, 31:40–51.

Vinothina, V. V., Sridaran, R., and Ganapathi, P. (2012). A survey on resource allocation strategies in cloud computing. International Journal of Advanced Computer Science and Applications, 3(6).

Zhang, J., Zhang, M., Ren, F., and Liu, J. (2016). An innovation approach for optimal resource allocation in emergency management. IEEE Transactions on Computers.
