Multi-Modal Multi-View Perception Feature Tracking for Handover

Human Robot Interaction Applications

Chaitanya Bandi

a

and Ulrike Thomas

Robotics and Human Machine Interaction Lab, Technical University of Chemnitz, Chemnitz, Germany

{chaitanya.bandi, ulrike.thomas}@etit.tu-chemnitz.de

Keywords:

Hand Pose, Hand-Object Pose, Body Pose, Handover, Human-Robot Interaction.

Abstract:

Object handover is a fundamental task in human-robot interaction (HRI) that relies on robust perception fea-

tures such as hand pose estimation, object pose estimation, and human pose estimation. While human pose

estimation has been extensively researched, this work focuses on creating a comprehensive architecture to

track and analyze hand and object poses, thereby enabling effective handover state determination. We pro-

pose an end-to-end architecture that integrates unified hand-object pose estimation with hand pose tracking,

leveraging an early and efficient fusion of RGB and depth modalities. Our method incorporates existing state-

of-the-art techniques for human pose estimation and introduces novel advancements for hand-object pose esti-

mation. The architecture is evaluated on three large-scale open-source datasets, demonstrating state-of-the-art

performance in unified hand-object pose estimation. Finally, we implement our approach in a human-robot

interaction scenario to determine the handover state by extracting and tracking the necessary perception fea-

tures. This integration highlights the potential of the proposed system for enhancing collaboration in HRI

applications.

1 INTRODUCTION

Bi-directional handovers in human-robot interaction

(HRI) involve the mutual transfer of objects be-

tween humans and robots, encompassing both robot-

to-human and human-to-robot interactions. This dy-

namic exchange requires the robot to not only exe-

cute precise physical actions but also understand con-

textual cues to coordinate effortlessly with the human

partner. In both directions, the process depends on

accurate perception, intention recognition, and syn-

chronized motion planning. For instance, in a robot-

to-human handover, the robot must identify when the

human is ready to receive the object by analyzing

body posture, hand position, and gaze direction. Con-

versely, in a human-to-robot handover, the robot must

detect when the human intends to release the object by

monitoring cues like grip loosening or object trajec-

tory. For human-to-robot handovers, the robot’s role

involves anticipating the human’s intent, adjusting its

gripper orientation to align with the object’s pose, and

ensuring a firm grasp at the right moment. This direc-

tion of communication also needs to consider safety

of human subject and avoid collsions.

a

https://orcid.org/0000-0001-7339-8425

In this work, we design a model and test specifi-

cally for human-to-robot complex handover scenario

nevertheless the model is applicable for handover ap-

plication. We can achieve the seamless interaction

model by fusing vision-based 3D hand pose track-

ing, unified hand-object pose tracking, and body pose

tracking. The core idea is to leverage the relationships

between the tracked features (hand pose, body pose,

and object pose) to recognize the interaction state

(handover).The fusion of data from multiple modal-

ities ensures robustness and reduces ambiguities in

complex or cluttered environments.

Human body pose estimation is a well-researched

area, with recent advancements achieving robust per-

formance even under conditions of partial body oc-

clusion. Given this progress, our focus is not on con-

tributing to this domain but rather on leveraging exist-

ing state-of-the-art methods capable of real-time 3D

human pose estimation.

The primary contribution of this work lies in

achieving unified hand-object pose estimation. Lever-

aging Intel RealSense D415 cameras, which provide

both RGB and depth data, we utilize multimodal in-

put to enhance the accuracy of hand-object pose esti-

mation. To surpass state-of-the-art performance, our

approach integrates feature fusion from multimodal

Bandi, C. and Thomas, U.

Multi-Modal Multi-View Perception Feature Tracking for Handover Human Robot Interaction Applications.

DOI: 10.5220/0013373800003912

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 20th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2025) - Volume 3: VISAPP, pages

797-807

ISBN: 978-989-758-728-3; ISSN: 2184-4321

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

797

Figure 1: The proposed architecture provides an overview of a multi-camera setup designed for object handover interactions

in a human-robot interaction environment. Among the three available camera views, only two are utilized, as the third view

is deemed unnecessary for the handover application and is therefore excluded from the architecture.

data at early stages, along with cross-attention and

self-attention mechanisms within the network. The

complete process for estimating the handover inter-

action states is depicted in Figure 1. In the collabo-

rative interaction scenario, two cameras are strategi-

cally positioned within the workspace. The first cam-

era is placed to ensure a clear view of the subject’s

upper body and face, capturing essential cues for in-

teraction. The second camera is mounted on the left

and right sides, respectively as illustrated in Figure 1.

The RGB image from the first camera view is pro-

cessed using RTMW3D (Jiang et al., 2024) to obtain

3D human pose estimation. Images from the second

camera are fed into the YOLOv8 (Ultralytics, 2023)

architecture to detect bounding boxes of the hand and

identify the object regions, facilitating unified hand-

object pose estimation.

After extracting the necessary information from

YOLOv8 (Ultralytics, 2023), we proceed with two

distinct tasks: 3D hand pose estimation and unified

hand-object pose tracking. To avoid overload of load-

ing all the models every time, we introduce proxim-

ity and geometric cues in addition to the bounding

box intersection from object detection. For indepen-

dent 3D hand mesh reconstruction, we adopt a process

similar to the Vision Transformer (ViT) (Dosovitskiy

et al., 2020) architecture. The input images are di-

vided into patches and passed through a transformer

encoder, which regresses the pose parameters of the

MANO hand model.

Building on this foundation, our proposed contri-

bution focuses on estimating the unified hand-object

pose for real-time tracking in handover interaction

scenarios. This unified approach enables precise and

efficient tracking of both the hand and the object, en-

hancing the system’s reliability during dynamic inter-

actions. For unified hand-object pose estimation, we

rely on multi-modal data from intelrealsense camera.

To reduce computational complexity in later stages,

we first fuse the RGB and depth information using an

attention mechanism. The fused data is then passed

through a unified backbone network based on a Mo-

bileNetV2 (Sandler et al., 2018) feature pyramid net-

work (Lin et al., 2017) (FPN). ROI aligned informa-

tion from hand and object are forwarded to separate

hand and object encoders using attention mechanism

which are later decoded using cross-attention to ob-

tain outputs. From the hand decoder, MANO pose

and shape parameters are obtained, which are then

processed through the MANO model to reconstruct

the 3D hand mesh. Meanwhile, the object decoder

regresses 2D keypoint correspondences, which are

matched with 3D keypoints to compute the object’s

6D pose using the Perspective-n-Point (PnP) (Lepetit

et al., 2009) algorithm.

2 RELATED WORK

This work focuses on three perception features: 3D

body pose estimation, 3D hand mesh recovery, and

6D object pose estimation. We then perform unified

hand-object pose estimation. For 3D body pose esti-

mation we rely on existing state-of-the-art works. In

this section, we discuss recent work related to hand

mesh reconstruction and unified hand-object pose es-

timation.

2.1 3D Hand Mesh Reconstruction

The work introduced in (Zimmermann and Brox,

2017) one of the first deep learning frameworks for

3D hand pose estimation from RGB images. It em-

ployed a keypoint-based regression method to pre-

dict the 3D pose and introduced a dataset to facili-

tate this task. The model demonstrated robustness in

single-view hand pose estimation but lacked the abil-

ity to model the hand’s detailed shape. Hand Point-

Net (Ge et al., 2018) utilized point clouds to esti-

mate hand poses directly, avoiding reliance on inter-

VISAPP 2025 - 20th International Conference on Computer Vision Theory and Applications

798

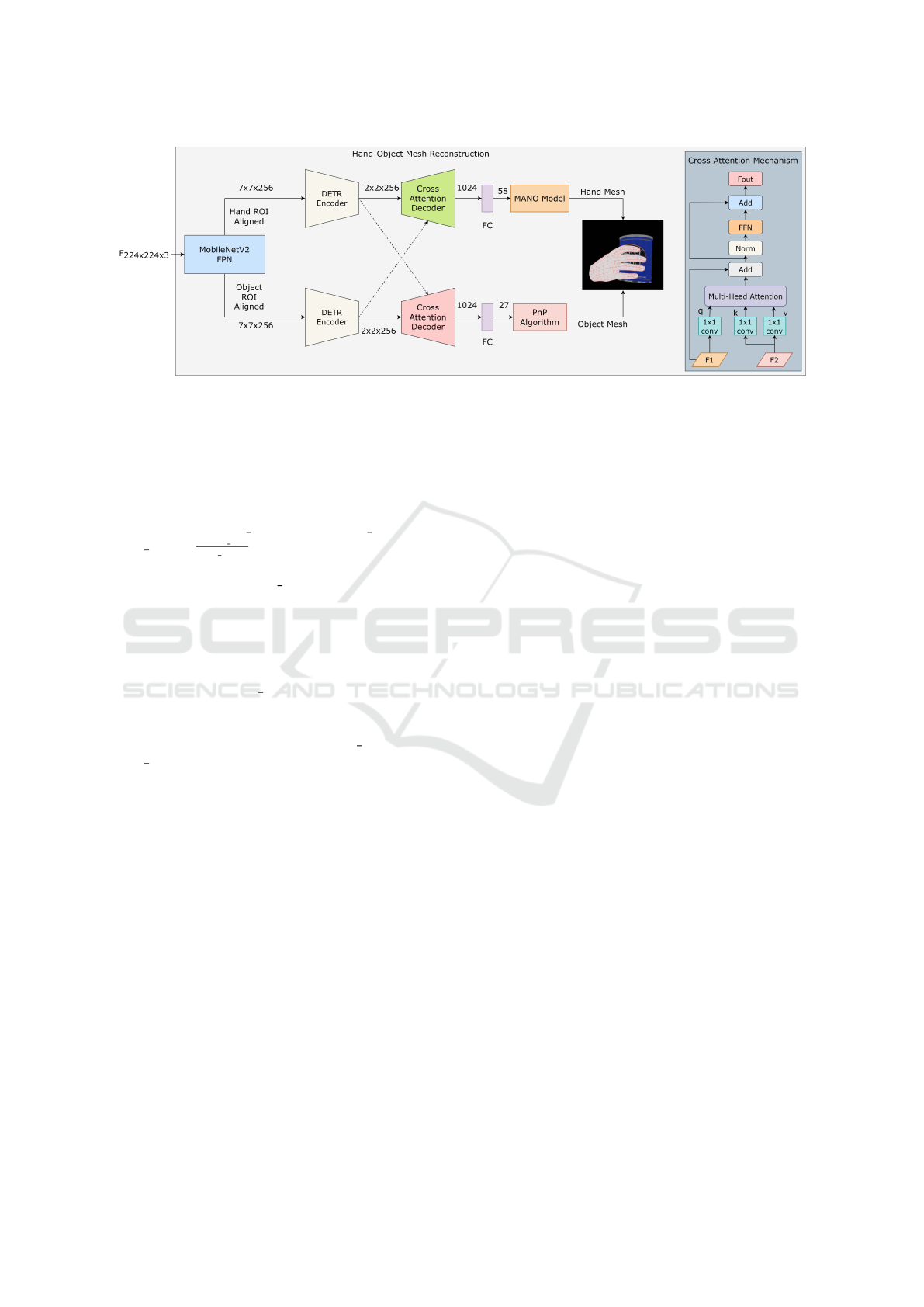

Figure 2: The architecture of the proposed 3D hand pose estimation and unified hand-object pose estimation.

mediate 2D representations. By operating on point

sets, this method was robust to occlusions and noise.

The approach effectively captured geometric features

but required depth input, limiting its applicability in

RGB-only scenarios. The work introduced in (Baek

et al., 2019) contains a neural rendering framework

that iteratively refines hand pose estimations by com-

paring the rendered hand image with the observed in-

put. This iterative approach improved pose accuracy

and made the network more resilient to occlusions and

ambiguous poses.

In contrast, to achieve accurate hand pose estima-

tion, many works adopt a model-based method uti-

lizing the differentiable MANO model introduced in

(Romero et al., 2017). This approach enables the si-

multaneous estimation of 3D hand pose and shape,

represented as a detailed mesh. The authors in (Ge

et al., 2019) propose a method for estimating both

hand shape and pose by predicting the parameters

of the MANO hand model. By leveraging the dif-

ferentiable nature of MANO (Romero et al., 2017),

the method reconstructed realistic hand meshes while

maintaining computational efficiency. Later many re-

search works such as (Cai et al., 2019), (Moon et al.,

2020), (Park et al., 2022a), (Pavlakos et al., 2024)

were developed on MANO based hand model with

different backbones.

2.2 Unified Hand-Object Pose

Estimation

HOPE-Net (Wang et al., 2022) integrates hand and

object pose estimation into a unified framework us-

ing a shared latent space. The network employs a

disentangled representation for joint and independent

pose estimations of hands and objects. The use of

multi-task learning allows simultaneous hand and ob-

ject pose prediction, resulting in efficient processing.

A key advantage of this approach is its ability to han-

dle occlusions effectively due to the shared feature

space between hands and objects, enabling robust es-

timation under challenging conditions.

HOISDF (Xu et al., 2022) employs global signed

distance fields (SDFs) for simultaneous learning of

hand and object shapes. It leverages SDFs to encode

mutual constraints between hands and objects, focus-

ing on global plausibility rather than fine-grained de-

tails. The approach includes a U-Net-based encoder-

decoder for hierarchical feature extraction and SDF

decoders for estimating distances to hand and object

surfaces. This method excels in handling occlusions

and capturing robust global information. Later many

works improved and extended based of SDFs (Chen

et al., 2022b), (Chen et al., 2023).

The framework (Qu et al., 2023) combines neu-

ral rendering and model-based fitting for joint hand-

object pose estimation. The method uses offline learn-

ing to build generative implicit models for hand and

object geometry. During online inference, rendering-

based model fitting refines poses under geometric

constraints. A key advantage is the ability to generate

smooth and stable pose sequences for videos, reduc-

ing jitter and improving temporal consistency.

Dense Mutual Attention (Zhao et al., 2023) in-

troduces a novel approach for estimating 3D hand-

object poses by explicitly modeling fine-grained in-

teractions using a dense mutual attention mechanism.

This method aims to improve the physical plausibil-

ity and quality of pose estimations while maintain-

ing real-time inference speed. The approach con-

structs hand and object graphs based on their mesh

structures. Each node in the hand graph aggregates

features from all nodes in the object graph through

learned attention weights, and vice versa. This dense

Multi-Modal Multi-View Perception Feature Tracking for Handover Human Robot Interaction Applications

799

interaction captures detailed dependencies between

the hand and object, enhancing interaction modeling.

HFL-Net (Wang et al., 2023) presents a frame-

work that integrates hand and object pose estima-

tion into a unified process by focusing on capturing

mutual constraints and interactions. The core con-

tribution lies in a harmonious feature learning strat-

egy, which emphasizes extracting joint features that

represent both the hand and the object while main-

taining their distinct identities. The approach lever-

ages advanced neural architectures to encode fine-

grained hand-object relationships and applies atten-

tion mechanisms to dynamically prioritize critical in-

teraction regions. Experimental results show that this

method achieves superior accuracy and robustness,

particularly in scenarios involving occlusions or com-

plex hand-object interactions, making it well-suited

for real-world applications in human-robot collabora-

tion and augmented reality.

The work (Hoang et al., 2024)proposes a novel ap-

proach to hand-object pose estimation that combines

multiple modalities, such as RGB and depth images,

to enhance the accuracy and robustness of the estima-

tion. The method employs adaptive fusion techniques

to intelligently combine information from different

sensory inputs, optimizing the model’s ability to han-

dle varying input conditions. The core innovation of

this work lies in the introduction of interaction learn-

ing, which models the dynamic interactions between

the hand and object to improve pose predictions, es-

pecially in challenging scenarios involving complex

hand-object interactions.

Figure 3: Early efficient RGBD fusion. Attention-based fu-

sion of RGB and depth image.

3 METHODOLOGY

In this work, we aim to develop a comprehensive

model for object handover with a strong focus on

safety. To achieve this, we utilize perception fea-

ture extraction networks capable of real-time opera-

tion. These include 3D human body pose estimation,

3D hand pose estimation, and unified hand-object

pose estimation. Rather than designing all compo-

nents from scratch, we leverage existing state-of-the-

art methods. Specifically, for 3D human body pose

estimation, we adopt the recently introduced RTMW

model (Jiang et al., 2024), which offers high accu-

racy and real-time performance, making it suitable

for multi-person whole-body pose estimation scenar-

ios. The model processes input images to detect mul-

tiple people and their detailed poses simultaneously,

even in crowded or dynamic scenarios. By balancing

speed and precision, RTMW demonstrates robust per-

formance in real-time applications such as sports an-

alytics, augmented reality, and human-robot interac-

tion. Its real-world usability is enhanced by its ability

to handle occlusions and variations in body configu-

rations.

To optimize system performance, we chose to

track features continuously, except for 3D human

body pose estimation, to avoid unnecessary computa-

tional overhead. To minimize redundant processing,

we implemented a hand-object proximity detection

method to bypass 6D object pose estimation when

it is not required. The proximity detection relies on

two simple but effective approaches. The first ap-

proach involves monitoring the intersection of bound-

ing boxes over time, as detected using YOLOv8.

However, due to the cluttered arrangement of ob-

jects, multiple items may overlap within certain dura-

tions. To address this, we incorporated additional cri-

teria, including depth proximity and geometric cues.

Specifically, we check if the depth of both the hand

and object is within close range (less than 0.5 cm)

and overlaps persist for a set number of frames. When

these conditions are met, we assume the object is in

the human hand and trigger unified hand-object pose

estimation. Otherwise, we only compute 3D hand

pose reconstruction, reducing unnecessary computa-

tional load. The complete architecture is illustrated in

Figure 2

The process begins by passing the RGB image

through the YOLOv8 (Ultralytics, 2023) object de-

tection model, which has been retrained for this work

to detect YCB (Calli et al., 2017) objects and hu-

man hands. The model outputs bounding boxes for

all YCB (Calli et al., 2017) objects and the hand,

if present. Using this bounding box information,

proximity is assessed based on depth and geometric

cues. The RGB and depth images are then cropped

to focus on either the hand pose or the unified hand-

object pose, guided by the bounding box and proxim-

ity data. To ensure consistency, the cropped regions

maintain their original aspect ratios and are resized to

dimensions of 224 × 224 × 3 for the RGB image and

224 × 224 × 1 for the depth image.

VISAPP 2025 - 20th International Conference on Computer Vision Theory and Applications

800

3.1 RGBD Attention-Based Fusion

The initial step involves performing efficient early-

stage RGB-D attention fusion. Direct fusion at this

stage often results in information loss, so we em-

ploy an attention mechanism with learnable parame-

ters to selectively integrate critical depth information

into the model. This approach eliminates the need for

additional networks, such as PointNet++ (Qi et al.,

2017) or CNNs, which can introduce latency and

hinder real-time inference. By integrating depth in-

formation efficiently, the system maintains high per-

formance without compromising real-time processing

capabilities. The process of Efficient RGBD fusion

with attention mechanism is illustrated in Figure 3.

3.2 Hand Mesh Reconstruction

Network

The backbone of the Hand Mesh Reconstruc-

tion (HMR) network is the vision transformer

(ViT) (Dosovitskiy et al., 2020). we follow the similar

process as the work (Pavlakos et al., 2024) to encode

the hand features using vision transformer. The en-

coded features are then decoded to obtain the mano

parameters. The MANO parameters are then for-

warded to the MANO model to obtain 3D hand mesh

and 3D hand joint locations.

3.2.1 MANO Parametric Model

The MANO (Model-based Articulated hand tracking

using a NOnlinear representation) hand parametric

model is a statistical 3D model that represents hu-

man hand shapes and poses in a compact and effi-

cient form. It is an adaptation of the SMPL (Skinned

Multi-Person Linear) model, customized for hand

pose and shape estimation. MANO parameterizes a

3D hand mesh using two components: pose param-

eters θ ∈ R

K×3

, which control the rotation of K =

16 joints in axis-angle format, and shape parameters

β ∈ R

N

, which define individual hand shape variations

based on N = 10 principal components derived from

a dataset of scanned hand shapes.

The MANO model outputs a triangulated 3D

mesh with V = 778 vertices connected by faces to

form the hand’s surface. The pose and shape param-

eters (16 × 3 + 10 = 58) are combined with a linear

blend skinning algorithm to deform the mesh accord-

ing to the desired articulation and morphology. This

allows for realistic and anatomically plausible hand

representations. A key feature of MANO is its ability

to directly regress joint locations, making it suitable

for both hand pose estimation and applications requir-

ing high-quality hand-object interaction modeling.

3.3 Architecture

The input to the proposed architecture is a fused im-

age of size 224 × 224 × 3. This image is divided

into 16 non-overlapping patches, which are then for-

warded to the Vision Transformer (ViT) (Dosovitskiy

et al., 2020) architecture to encode hand-specific fea-

tures. The ViT-H backbone outputs a sequence of to-

kens that encapsulate the encoded hand information.

To decode these features, a transformer decoder is

employed. It processes the output tokens from the

ViT and regresses the MANO parameters similar to

the work in (Pavlakos et al., 2024). These parameters

are subsequently passed to the MANO model, which

generates 3D hand joint locations and 3D mesh ver-

tices.

3.4 Hand-Object Mesh Reconstruction

Network

Once the proximity is triggered, the system performs

unified hand-object pose estimation. The fused im-

age F

fused

∈ R

224×224×3

is forwarded as input to

the Hand-Object Mesh Reconstruction (HOMR) net-

work. For feature extraction from F

fused

, the Mo-

bileNetV2 FPN (Lin et al., 2017) architecture is uti-

lized, which ensures computational efficiency while

capturing rich feature representations.

MobileNetV2 (Sandler et al., 2018) is a

lightweight and efficient convolutional neural

network architecture designed for mobile and em-

bedded vision applications. The core innovation in

MobileNetV2 is the use of inverted residual blocks

and linear bottlenecks. MobileNetV2 FPN (Lin et al.,

2017) (Feature Pyramid Network) combines the

efficient MobileNetV2 backbone with the multi-scale

feature processing capabilities of FPN for improved

object detection and segmentation tasks. In the FPN

architecture, features from different stages of the

network are combined to form a feature pyramid,

allowing the model to leverage both high-resolution

and high-level semantic information. Once the

hand-object features from MobileNetV2 FPN are

extracted, the region of interest (ROI) aligned infor-

mation of each of the features are extracted. The ROI

aligned features are then forwarded to the deformable

transformer (Zhu et al., 2021) (DETR) encoder for

both hand and object.

The input to the deformable multi-headed trans-

former attention is a feature map of size 7 × 7 × 256,

which corresponds to a spatial resolution of 7 × 7

with 256 feature channels. This input is first flat-

tened into a sequence of size 49 × 256, where 49 is

the total number of spatial tokens (7 × 7). A learn-

Multi-Modal Multi-View Perception Feature Tracking for Handover Human Robot Interaction Applications

801

Figure 4: The architecture of the Hand-Object Mesh Reconstruction (HOMR) network. This network employs a MobileNet-

FPN backbone, deformable transformers, and a cross-attention mechanism to achieve unified hand-object pose estimation.

able positional embedding of size 49 × 256 is added

to the input sequence to incorporate spatial informa-

tion. The input is then projected into query, key,

and value tensors, each of size 49 × 256. These ten-

sors are reshaped for multi-head attention into the

dimensions B × num heads × 49 × head dim, where

head dim =

embed dim

num heads

. Offsets for deformable sam-

pling are predicted through a linear layer, producing

a tensor of size 49 × num heads × 2 for each spatial

token. These offsets dynamically determine the sam-

pling locations within the feature map and B is the

batch size, number of heads is 8, and head dimension

is 128.

The attention mechanism computes attention

scores of size B × num heads × 49 × 49 using scaled

dot-product attention. These scores are used to com-

pute a weighted sum of the value tensor, resulting

in an attended output of size B × num heads × 49 ×

head dim. The outputs from all heads are concate-

nated back into the shape B × 49 × 256. After ap-

plying a final projection layer, the output is reshaped

back into the original spatial resolution of 7×7 ×256.

To meet the desired output size of 2 × 2 × 256, bilin-

ear interpolation is applied to downsample the spatial

dimensions from 7 × 7 to 2 × 2, while preserving the

256 feature channels. The final output is a tensor of

size B × 2 × 2 × 256.

The extracted features are forwarded to the cross-

attention decoder layer, where the query for the hand

decoder consists of the object-encoded information,

while the query for the object decoder is derived from

the hand decoder’s features. After performing the

cross-attention mechanism, a fully connected layer is

employed to generate the respective output features.

Specifically, the hand decoder outputs 58 parameters

representing the MANO (Romero et al., 2017) hand

model, and the object decoder outputs 27 features cor-

responding to 9 keypoints, each with 3 dimensions.

For the object keypoints, the first two dimensions

(x, y) represent the 2D location of the keypoint, while

the third dimension represents the confidence score of

the keypoint being accurately predicted.

The predicted MANO parameters are subse-

quently passed into the MANO model to compute

the hand mesh vertices and the 3D keypoints of the

hand. Similarly, using the predicted 2D keypoints and

known 3D correspondences of the object, the 6D pose

of the object is estimated by solving the Perspective-

n-Point (Lepetit et al., 2009) (PnP) problem itera-

tively. This approach ensures accurate estimation of

both the hand’s mesh structure and the object’s pose in

a unified framework. The complete HOMR network

is illustrated in Figure 4

3.4.1 Loss Function

To train the network, we define a composite loss

function that minimizes the L2 distances between the

predicted and ground truth values of H (object key-

points), θ (pose parameters), β (shape parameters), V

(3D vertices), and J (3D joints). The total loss for

hand pose estimation, denoted as L

overall

, is formu-

lated as:

L

overall

= L

Obj

+ L

3D

+ L

MANO

The term L

Ob j

corresponds to the L2 loss for 2D

object keypoint location predictions, ensuring accu-

rate localization of keypoints in the 2D space:

L

Ob j

=

K

∑

i=1

o

i

− o

gt

i

2

2

Here, o

i

and o

gt

i

denote the predicted and ground

truth keypoints for the i-th keypoint, respectively, and

K is the total number of keypoints.

The term L

3D

accounts for the L2 loss between the

predicted and ground truth 3D vertices (V) and joint

VISAPP 2025 - 20th International Conference on Computer Vision Theory and Applications

802

Table 1: Comparison with the state-of-the-art on the Frei-

HAND dataset.

Method PA-MPJPE↓ PA-MPVPE↓ F@5↑ F@15↑

I2UV-HandNet (Chen et al., 2021) 6.7 6.9 0.707 0.977

METRO (Lin et al., 2021) 6.5 6.3 0.731 0.984

Tang et al. (Tang et al., 2021) 6.7 6.7 0.724 0.981

MobRecon (Chen et al., 2022a) 5.7 5.8 0.784 0.986

AMVUR (Jiang et al., 2023) 6.2 6.1 0.767 0.987

HaMeR (Pavlakos et al., 2024) 6.0 5.7 0.785 0.990

Ours 5.7 5.6 0.797 0.990

Table 2: Comparison with the state-of-the-art on the HO-3D

dataset.

Method PA-MPJPE↓ PA-MPVPE↓ F@5↑ F@15↑

Liu et al. (Liu et al., 2021) 9.9 9.5 0.528 0.956

HandOccNet (Park et al., 2022b) 9.1 8.8 0.564 0.963

I2UV-HandNet (Chen et al., 2021) 9.9 10.1 0.500 0.943

Hampali et al. (Hampali et al., 2020) 10.7 10.6 0.506 0.942

Hasson et al. (Hasson et al., 2019) 11.0 11.2 0.464 0.939

METRO (Lin et al., 2021) 10.4 11.1 0.484 0.946

MobRecon (Chen et al., 2022a) 9.2 9.4 0.538 0.957

AMVUR (Jiang et al., 2023) 8.3 8.2 0.608 0.965

HaMeR (Pavlakos et al., 2024) 7.7 7.9 0.635 0.980

Ours 7.7 7.8 0.635 0.978

coordinates (J), promoting accurate 3D mesh recon-

struction and joint localization:

L

3D

=

V − V

gt

2

2

+

J − J

gt

2

2

The term L

MANO

imposes L2 losses on the

MANO shape parameters (β) and pose parameters

(θ), ensuring accurate estimation of the hand’s pose

and shape:

L

MANO

=

β − β

gt

2

2

+

θ − θ

gt

2

2

Here, V

gt

, J

gt

, β

gt

, and θ

gt

represent the ground

truth 3D vertices, joint coordinates, shape parame-

ters, and pose parameters, respectively. The com-

bined loss L

overall

ensures robust hand pose, object

pose and mesh estimation by optimizing both spatial

accuracy and parametric consistency.

4 EXPERIMENTATION

This section presents a comprehensive evaluation of

the proposed approach on three widely used RGB-

D datasets: FreiHand (Only hand interactions) (Zim-

mermann et al., 2020), HO-3D (Zhang et al., 2020)

and DexYCB (Mishra et al., 2020) (these contain

hand-object interactions). These datasets are de-

signed to reflect realistic hand pose scenarios, offer-

ing a robust benchmark for assessing the performance

of hand pose estimation techniques in practical set-

tings. Our analysis includes a detailed comparison

with leading RGB-based and depth-based methods,

allowing us to effectively validate the robustness and

accuracy of our approach against state-of-the-art al-

ternatives.

4.1 Implementation Details

For hand and YCB (Calli et al., 2017) object detec-

tion, we utilize bounding box annotations from all

three datasets. While YOLOv8 (Ultralytics, 2023)

is employed for detection tasks, we do not conduct

an extensive evaluation of its transfer learning perfor-

mance, as this aspect has already been thoroughly ex-

plored in prior studies.

The HMR network was trained for 70 epochs us-

ing the Adam optimizer. To improve generalization,

a weight decay of 5 × 10

−4

was applied, which was

scheduled to update every 10 epochs. During training,

the aspect ratios of all input images were preserved to

ensure realistic representations of hand poses. The

images were resized to a resolution of 224 × 224 pix-

els while maintaining their original proportions.

The HOMR architecture was trained under a setup

similar to the hand mesh reconstruction network, with

a few adjustments. The HOMR model was trained for

100 epochs using the Adam optimizer, with a weight

decay of 5× 10

−4

applied every 10 epochs. The train-

ing process also preserved the aspect ratios of all in-

put images, which were resized to a fixed resolution

of 224 × 224 pixels to align with the network’s input

requirements while retaining critical spatial informa-

tion.

4.2 Datasets and Evaluation Metrics

The HO-3D (Hand-Object 3D) dataset (Zhang

et al., 2020) is a publicly available resource designed

for research in hand pose estimation and hand-object

interaction analysis. It provides a comprehensive col-

lection of RGB-D images capturing real-world in-

teractions between hands and various objects. The

dataset emphasizes scenarios involving natural hand

poses while manipulating objects, making it highly

suitable for studying complex hand-object interac-

tions.

The DexYCB dataset (Mishra et al., 2020) is a

comprehensive resource designed for studying hand-

object interactions, particularly focusing on 6D ob-

ject pose estimation and 3D hand pose estimation. It

features a diverse set of RGB-D sequences capturing

real-world interactions with objects from the YCB ob-

ject set, a widely used benchmark for robotic manip-

ulation research.

The FreiHand dataset (Zimmermann et al.,

2020) is a high-quality resource for advancing re-

search in 3D hand pose estimation and shape recon-

struction. It is specifically designed to provide chal-

lenging and realistic scenarios, featuring diverse hand

poses captured from real-world settings. The dataset

Multi-Modal Multi-View Perception Feature Tracking for Handover Human Robot Interaction Applications

803

Figure 5: The qualitative samples of the DexYCB dataset obtained from the HOMR network.

includes 134,000 samples collected from 32 unique

subjects, ensuring significant variation in hand shape,

size, and pose.

For FreiHand dataset and HO-3D dataset, we re-

port the F-scores, the mean joint error (PAMPJPE),

and the mean mesh error (PAMPVPE) in millimeters

after performing Procrustes alignment. For DexYCB

dataset, we report non procrustes aligned MPJPE. For

6D object pose estimation, we compute ADD-S (Av-

erage Distance of Model Points with Symmetry). The

Average Distance of Model Points (ADD) is a widely

used metric to evaluate the accuracy of 6D object

pose estimation. It calculates the mean distance be-

tween corresponding 3D points of the ground truth

object model and the estimated object model under a

predicted pose. In particular, for symmetric objects,

the ADD-s variant is employed to handle symmetry.

ADD-s is defined as:

ADD-s =

1

|M|

∑

x∈M

min

y∈M

∥(Rx+t)−(R

gt

y+t

gt

)∥, (1)

where M is the set of 3D model points, R and t

are the predicted rotation and translation of the ob-

ject, and R

gt

and t

gt

are the ground truth rotation and

translation. The term min

y∈M

accounts for symmetry

by finding the closest point y in the model set M for

each transformed point x.

ADD-S measures the average alignment error be-

tween the predicted and ground truth poses. Lower

ADD-s values indicate more accurate pose predic-

tions, making it a key metric for evaluating object

pose estimation in scenarios involving symmetrical

objects.

Table 3: Performance comparison with state-of-the-art

methods on hand pose estimation on the HO3D dataset.

Method PA-MPJPE↓ PA-MPVPE↓ F@5↑ F@15↑

Hasson et al. (Hasson et al., 2020) 11.4 11.4 42.8 93.2

Hasson et al. (Hasson et al., 2019) 11.0 11.2 46.4 93.9

Hampali et al. (Hampali et al., 2020) 10.7 10.6 50.6 94.2

Liu et al. (Liu et al., 2021) 10.1 9.7 53.2 95.2

HFL-Net (Wang et al., 2023) 8.9 8.7 57.5 96.5

Ours 8.87 8.79 58.5 96.9

Table 4: Performance comparison on the object pose esti-

mation task for Cleanser, Bottle, and Can categories.

Method Cleanser↑ Bottle↑ Can↑ Average↑

Liu et al. (Liu et al., 2021) 88.1 61.9 53.0 67.7

HFL-Net (Wang et al., 2023) 81.4 87.5 52.2 73.3

Ours 85.4 86.3 51.4 74.3

4.3 Comparison to the State-of-the-Art

In this study, we implement two distinct networks:

HMR and HOMR. For the model trained with the

HMR network, we evaluate and compare the 3D

hand pose and 3D mesh errors against state-of-the-

art methods using the HO-3D and FreiHand datasets.

For the model trained with the HOMR network,

which is a unified framework, we perform compar-

isons on both the HO-3D (Zhang et al., 2020) and

DexYCB (Mishra et al., 2020) datasets, benchmark-

ing the results against state-of-the-art techniques.

4.3.1 HMR Network Comparisons

Initially, we trained the HMR network using the Frei-

Hand dataset (Zimmermann et al., 2020). A detailed

comparison with state-of-the-art methods on the Frei-

Hand dataset is provided in Table 1. The evaluation

follows the standard protocol, with metrics reported

for assessing 3D joint and 3D mesh accuracy. The

PA-MPVPE and PA-MPJPE metrics are presented in

millimeters and low the error higher the 3D pose ac-

VISAPP 2025 - 20th International Conference on Computer Vision Theory and Applications

804

Table 5: Comparison of hand pose estimation results with

state-of-the-art methods on the DexYCB dataset.

Method MPJPE↓ PAMPJPE↓ RGB-D

Hasson (Hasson et al., 2019) 17.6 - RGB

Hasson (Hasson et al., 2020) 18.8 - RGB

Tze et al. (Tse et al., 2022) 15.3 - RGB

Liu et al. (Liu et al., 2021) 15.27 6.58 RGB

DMA (Zhao et al., 2023) 12.7 - RGB

HFL-Net (Wang et al., 2023) 12.56 5.47 RGB

Hoang et al. (Hoang et al., 2024) 12.15 4.54 RGBD

Ours 11.9 4.61 RGBD

Table 6: Performance comparison of the object pose esti-

mation on DexYCB datset.

Method AUC↑ ADD-S < 2cm↑

Hasson et al. (Hasson et al., 2019) 0.69 0.65

Hasson et al. (Hasson et al., 2020) 0.75 0.71

Cao et al. (Cao et al., 2021) 0.70 0.72

Chen et al. (Chen et al., 2022b) 0.72 0.74

Chen et al. (Chen et al., 2023) 0.75 0.77

Hoang et al. (Hoang et al., 2024) 0.84 0.82

Ours 0.86 0.83

curacy.

To assess the performance of our model on hand-

object datasets, we further evaluate the HMR network

using the HO-3D (Zhang et al., 2020) dataset. Consis-

tent with the evaluation on the FreiHand dataset, we

report PA-MPVPE and PA-MPJPE metrics, both ex-

pressed in millimeters. A detailed comparison of the

results is presented in Table 2. From these compar-

isons, it is evident that our model achieves error rates

comparable to HaMeR (Pavlakos et al., 2024). The

slight differences in error values can be attributed to

our use of a fused RGB and Depth image approach,

where the depth fusion introduces marginal variations

in performance.

4.3.2 HOMR Network Comparisons

We evaluate the performance of the HOMR network

on two datasets: HO-3D (Zhang et al., 2020) and

DexYCB (Mishra et al., 2020). The evaluation in-

cludes both hand pose estimation errors and object

pose estimation metrics. Our proposed HOMR net-

work is compared against existing state-of-the-art

methods for hand-object pose estimation on HO-3D

dataset. The detailed results are presented in Ta-

ble 3. From the comparison, it is evident that the F-

scores and mesh error (PA-MPVPE) achieved by our

method surpass those of the current state-of-the-art

approaches. Additionally, the joint error (PA-MPJPE)

is slightly lower than that of the most recent state-of-

the-art methods.

Limited comparisons regarding object pose esti-

mation on the HO-3D (Zhang et al., 2020) dataset

have been presented in prior works. Two studies re-

ported the ADD-0.1D error for four objects from the

YCB dataset (Calli et al., 2017). For a fair evaluation,

we compare these specific objects, and the results are

detailed in Table 4. From the comparison, it can be

observed that the average object pose estimation error

in our method is slightly higher than the state-of-the-

art methods.

Similarly, limited works have reported hand-

object pose estimation performance on the

DexYCB (Mishra et al., 2020) dataset. Based

on our research, we compare the results with the

state-of-the-art methods. The reported values for

hand pose estimation are presented in Table 5. For

object pose evaluation, not all works use same

metrics so we compute ADD-S because it wasmostly

mentioned by research works. The ADD-S and area

under the curve (AUC) for object pose evaluation is

mentioned in the Table 6. Few qualitative samples

obtained from HOMR network on DexYCB dataset

is illustrated in Figure 5.The primary limitation of

this work arises when the hands are significantly

occluded, leading to failures in accurately estimating

hand joints.

5 CONCLUSIONS

In this work, we present a comprehensive architec-

tural framework tailored for human-robot interaction

applications, particularly focusing on tasks such as

object handover. Our key contribution lies in uni-

fied hand-object pose estimation, achieved through an

early-stage fusion of RGB and depth modalities. The

fused data is processed by a MobileNetV2 FPN-based

backbone to extract region-of-interest (ROI) aligned

features for both the hand and the object. These fea-

tures are subsequently encoded using a deformable

transformer, with cross-attention-based decoding em-

ployed to estimate both hand and object parameters.

From these parameters, we derive 3D hand mesh re-

constructions and 6D object pose estimations. The

proposed models are evaluated on large-scale open-

source datasets, demonstrating competitive, state-of-

the-art performance. Our future work will focus on

thoroughly evaluating the proposed system within a

human-robot interaction (HRI) workspace. While we

have tested the inference speed in real-time and con-

ducted preliminary tests on a limited number of sam-

ples to validate the system’s functionality in the HRI

environment, further efforts will include creating a

new dataset and testing the system in entirely unseen

environments to assess its robustness and generaliza-

tion capabilities.

Multi-Modal Multi-View Perception Feature Tracking for Handover Human Robot Interaction Applications

805

ACKNOWLEDGEMENTS

Funded by the German Federal Ministry of Educa-

tion and Research (BMBF) – Project-ID 01IS23047B

– aiRobot.

REFERENCES

Baek, S., Kim, K. I., and Kim, T.-K. (2019). Pushing the

envelope for rgb-based dense 3d hand pose estimation

via neural rendering. In 2019 IEEE/CVF Conference

on Computer Vision and Pattern Recognition (CVPR),

pages 1067–1076.

Cai, Y., Ge, L., Liu, J., Cai, J., Cham, T.-J., Yuan, J., and

Thalmann, N. M. (2019). Exploiting spatial-temporal

relationships for 3d pose estimation via graph convo-

lutional networks. In Proceedings of the IEEE In-

ternational Conference on Computer Vision, pages

2272–2281.

Calli, B., Siu, A., Walsman, A., Matusik, W., and Allen, P.

(2017). The ycb object and model set: Towards com-

mon benchmarks for manipulation research. arXiv

preprint arXiv:1709.06965.

Cao, Z., Radosavovic, I., Kanazawa, A., and Malik, J.

(2021). Reconstructing hand-object interactions in

the wild. In Proceedings of the IEEE/CVF Interna-

tional Conference on Computer Vision (ICCV), pages

12417–12426.

Chen, P., Chen, Y., Yang, D., Wu, F., Li, Q., Xia, Q., and

Tan, Y. B. (2021). I2uv-handnet: Image-to-uv pre-

diction network for accurate and high-fidelity 3d hand

mesh modeling. 2021 IEEE/CVF International Con-

ference on Computer Vision (ICCV), pages 12909–

12918.

Chen, X., Liu, Y., Dong, Y., Zhang, X., Ma, C., Xiong, Y.,

Zhang, Y., and Guo, X. (2022a). Mobrecon: Mobile-

friendly hand mesh reconstruction from monocular

image. In Proceedings of the IEEE/CVF Conference

on Computer Vision and Pattern Recognition (CVPR),

pages 12912–12921.

Chen, Z., Hampali, S., Schmid, C., and Laptev, I. (2023).

Gsdf: Geometry-driven signed distance functions for

3d hand-object reconstruction. In Proceedings of the

IEEE/CVF Conference on Computer Vision and Pat-

tern Recognition (CVPR), pages 12890–12900.

Chen, Z., Hasson, Y., Schmid, C., and Laptev, I. (2022b).

Alignsdf: Pose-aligned signed distance fields for

hand-object reconstruction. In Proceedings of the

European Conference on Computer Vision (ECCV),

pages 231–248, Cham, Switzerland. Springer.

Dosovitskiy, A., Beyer, L., Kolesnikov, A., Weissenborn,

D., Zhai, X., Unterthiner, T., Dehghani, M., Min-

derer, M., Heigold, G., Gelly, S., Uszkoreit, J., and

Houlsby, N. (2020). An image is worth 16x16 words:

Transformers for image recognition at scale. ArXiv,

abs/2010.11929.

Ge, L., Cai, Y., Weng, J., and Yuan, J. (2018). Hand point-

net: 3d hand pose estimation using point sets. In 2018

IEEE/CVF Conference on Computer Vision and Pat-

tern Recognition, pages 8417–8426.

Ge, L., Ren, Z., Li, Y., Xue, Z., Wang, Y., Cai, J., and

Yuan, J. (2019). 3d hand shape and pose estimation

from a single rgb image. 2019 IEEE/CVF Conference

on Computer Vision and Pattern Recognition (CVPR),

pages 10825–10834.

Hampali, S., Rad, M., Oberweger, M., and Lepetit, V.

(2020). Honnotate: A method for 3d annotation

of hand and object poses. In Proceedings of the

IEEE/CVF Conference on Computer Vision and Pat-

tern Recognition (CVPR), pages 3196–3206.

Hasson, Y., Tekin, B., Bogo, F., Laptev, I., Pollefeys, M.,

and Schmid, C. (2020). Leveraging photometric con-

sistency over time for sparsely supervised hand-object

reconstruction. In Proceedings of the IEEE/CVF Con-

ference on Computer Vision and Pattern Recognition

(CVPR), pages 571–580.

Hasson, Y., Varol, G., Tzionas, D., Kalevatykh, I., Black,

M. J., Laptev, I., and Schmid, C. (2019). Learning

joint reconstruction of hands and manipulated objects.

In Proceedings of the IEEE/CVF Conference on Com-

puter Vision and Pattern Recognition (CVPR), pages

11807–11816.

Hoang, D.-C., Tan, P. X., Nguyen, A.-N., Vu, D.-Q., Vu,

V.-D., Nguyen, T.-U., Hoang, N.-A., Phan, K.-T.,

Tran, D.-T., Nguyen, V.-T., Duong, Q.-T., Ho, N.-

T., Tran, C.-T., Duong, V.-H., and Ngo, P.-Q. (2024).

Multi-modal hand-object pose estimation with adap-

tive fusion and interaction learning. IEEE Access,

12:54339–54351.

Jiang, T., Xie, X., and Li, Y. (2024). Rtmw: Real-time

multi-person 2d and 3d whole-body pose estimation.

arXiv preprint arXiv:2407.08634.

Jiang, Z., Rahmani, H., Black, S., and Williams, B. M.

(2023). A probabilistic attention model with

occlusion-aware texture regression for 3d hand recon-

struction from a single rgb image. In Proceedings of

the IEEE/CVF Conference on Computer Vision and

Pattern Recognition (CVPR), pages 6276–6286.

Lepetit, V., Moreno-Noguer, F., and Fua, P. (2009). Epnp:

An accurate o(n) solution to the pnp problem. In Pro-

ceedings of the IEEE Conference on Computer Vision

and Pattern Recognition (CVPR), pages 1–8.

Lin, K., Wang, L., and Liu, Z. (2021). End-to-end human

pose and mesh reconstruction with transformers. In

Proceedings of the IEEE/CVF Conference on Com-

puter Vision and Pattern Recognition (CVPR), pages

10690–10699.

Lin, T.-Y., Doll

´

ar, P., Girshick, R., He, K., Hariharan, B.,

and Belongie, S. (2017). Feature pyramid networks

for object detection. Proceedings of the IEEE Con-

ference on Computer Vision and Pattern Recognition

(CVPR), pages 2117–2125.

Liu, S., Jiang, H., Xu, J., Liu, S., and Wang, X.

(2021). Semi-supervised 3d hand-object poses esti-

mation with interactions in time. In Proceedings of

the IEEE/CVF Conference on Computer Vision and

Pattern Recognition (CVPR), pages 14687–14696.

Mishra, A., Fathi, A., Jain, M., and Handa, A. (2020). Dex-

ycb: A benchmark for dexterous manipulation of ob-

VISAPP 2025 - 20th International Conference on Computer Vision Theory and Applications

806

jects in cluttered environments. In Proceedings of

the IEEE/RSJ International Conference on Intelligent

Robots and Systems (IROS), pages 3473–3480.

Moon, G., Yu, S.-I., Wen, H., Shiratori, T., and Lee, K. M.

(2020). Interhand2.6m: A dataset and baseline for

3d interacting hand pose estimation from a single rgb

image. In European Conference on Computer Vision

(ECCV).

Park, J., Oh, Y., Moon, G., Choi, H., and Lee, K. M.

(2022a). Handoccnet: Occlusion-robust 3d hand mesh

estimation network. In Conference on Computer Vi-

sion and Pattern Recognition (CVPR).

Park, J., Oh, Y., Moon, G., Choi, H., and Lee, K. M.

(2022b). Handoccnet: Occlusion-robust 3d hand mesh

estimation network. In Conference on Computer Vi-

sion and Pattern Recognition (CVPR).

Pavlakos, G., Shan, D., Radosavovic, I., Kanazawa, A.,

Fouhey, D., and Malik, J. (2024). Reconstructing

hands in 3D with transformers. In CVPR.

Qi, C. R., Liu, W., Wu, C., Su, H., and Guibas, L. J.

(2017). Pointnet++: Deep hierarchical feature learn-

ing on point sets in a metric space. In Advances

in Neural Information Processing Systems (NeurIPS),

volume 30.

Qu, W., Cui, Z., Zhang, Y., Meng, C., Ma, C., Deng, X., and

Wang, H. (2023). Novel-view synthesis and pose esti-

mation for hand-object interaction from sparse views.

2023 IEEE/CVF International Conference on Com-

puter Vision (ICCV), pages 15054–15065.

Romero, J., Masi, I., Ranjan, A., Zhu, Z., Liu, Y., Shih, Y.,

Joo, H., Niebles, J. C., and Black, M. J. (2017). Em-

bodied hands: Modeling and capturing hands and bod-

ies together. In Proceedings of the IEEE Conference

on Computer Vision and Pattern Recognition (CVPR),

pages 4514–4523.

Sandler, M., Howard, A., Zhu, M., Zhmoginov, A., and

Chen, L.-C. (2018). Mobilenetv2: Inverted residuals

and linear bottlenecks. Proceedings of the IEEE Con-

ference on Computer Vision and Pattern Recognition

(CVPR), pages 4510–4520.

Tang, X., Wang, T., and Fu, C.-W. (2021). Towards accurate

alignment in real-time 3d hand-mesh reconstruction.

In Proceedings of the IEEE/CVF International Con-

ference on Computer Vision (ICCV), pages 13909–

13918.

Tse, T. H. E., Kim, K. I., Leonardis, A., and Chang, H. J.

(2022). Collaborative learning for hand and object re-

construction with attention-guided graph convolution.

In Proceedings of the IEEE/CVF Conference on Com-

puter Vision and Pattern Recognition (CVPR), pages

1664–1674.

Ultralytics (2023). Yolov8: State-of-the-

art object detection and segmentation.

https://github.com/ultralytics/ultralytics.

Wang, H., Wang, C., Li, H., and Li, Y. (2022). Hope net:

Hierarchical object pose estimation. IEEE Robotics

and Automation Letters, 7(4):7519–7526.

Wang, H., Wang, C., Li, H., and Li, Y. (2023). Har-

monious features learning for hand-object pose es-

timation. IEEE Robotics and Automation Letters,

8(2):1683–1690.

Xu, Y., Wang, H., Wang, C., Li, H., and Li, Y. (2022).

Hoisdf: A hierarchical object-interaction dataset with

spatial and functional dependencies. IEEE Transac-

tions on Pattern Analysis and Machine Intelligence,

45(11):10379–10393.

Zhang, W., Wu, X., Luo, Z., Zhou, Z., Li, C., and Bao, X.

(2020). Ho-3d: A dataset for 3d hand object inter-

action. In Proceedings of the IEEE/CVF Conference

on Computer Vision and Pattern Recognition (CVPR),

pages 5867–5876.

Zhao, Y., Wang, H., Wang, C., Li, H., and Li, Y. (2023).

Interacting hand-object pose estimation via dense mu-

tual attention. IEEE Robotics and Automation Letters,

8(2):1675–1682.

Zhu, X., Su, W., Lu, L., Xu, B., Li, X., and Wang, J. (2021).

Deformable detr: Deformable transformers for end-

to-end object detection. In Proceedings of the In-

ternational Conference on Learning Representations

(ICLR).

Zimmermann, C. and Brox, T. (2017). Learning to estimate

3d hand pose from single rgb images. In 2017 IEEE

International Conference on Computer Vision (ICCV),

pages 4913–4921.

Zimmermann, C., Rother, C., Saito, J., Pock, T., Sumer, H.,

Loper, M., Deigel, M., and Geiger, A. (2020). Frei-

hand: A dataset for hand mesh estimation. In Pro-

ceedings of the IEEE/CVF Conference on Computer

Vision and Pattern Recognition (CVPR), pages 4316–

4325.

Multi-Modal Multi-View Perception Feature Tracking for Handover Human Robot Interaction Applications

807