Consent Understanding and Verification for Personalized Assistive Systems

Ismael Jaggi (1, a), Rachele Carli (2, b), Berk Buzcu (1, 3, c), Michael Schumacher (1, d) and Davide Calvaresi (1, e)

1 University of Applied Sciences and Arts Western Switzerland (HES-SO Valais/Wallis), Sierre, Switzerland

2 Umeå University, Umeå, Sweden

3 The Sense Innovation and Research Center, Lausanne, Switzerland

{ismael.jaggi, berk.buzcu, davide.calvaresi, michael.schumacher}@hevs.ch, rachele.carli@umea.se

Keywords: Dynamic Informed Consent, Agent-Based Virtual Assistants, Healthcare, Data Protection.

Abstract: The rapid adoption of personalized systems, driven by advances in natural language processing, sensor technologies, and AI, has transformed the role of virtual personal assistants (VPAs), particularly in healthcare. While VPAs promise to enhance patient experiences through tailored support and adaptive workflows, their complexity often results in opaque functionalities that hinder user understanding. This lack of transparency poses significant challenges, particularly for informed consent, where users must comprehend the implications of sharing sensitive personal data. Existing consent systems often rely on static declarations and extensive documentation, which overwhelm users and fail to ensure informed decision-making. To address this problem, this paper presents a novel consent management approach integrated into EREBOTS v3.0, an agent-based, GDPR-compliant, explainable framework for virtual assistants. The proposed solution introduces (i) an interactive method that structures consent into clear sections with summaries and examples to improve user comprehension and (ii) a question-based verification mechanism that assesses understanding and reinforces knowledge when needed. By leveraging EREBOTS’ modular architecture, real-time feedback, and secure data management, the proposed approach enhances transparency, fosters trust, and simplifies consent understanding in dialog-based healthcare systems. This work lays the foundation for addressing critical challenges at the intersection of personalized AI, healthcare, and data protection.

1 INTRODUCTION

The adoption of personalized systems providing tailored support is rapidly increasing, driven by advances in natural language processing (Eguia et al., 2024), sensor technologies (Cusack et al., 2024), and artificial intelligence (Wang et al., 2021). Virtual personal assistants (VPAs) are particularly promising in delivering impactful, customized outcomes. In healthcare, they hold the potential to enhance patient experiences and improve outcomes by addressing individual needs and supporting complex medical workflows (Fang et al., 2024).

Despite these advancements, VPAs are often sophisticated systems with opaque functionalities and decision-making processes that most users struggle to understand. This lack of transparency is especially concerning in healthcare, where AI systems are increasingly employed to assist or replace human operators in less safety-critical tasks. Examples include tools promoting healthier habits (Cruz Casados et al., 2024), monitoring therapy adherence (Ma et al., 2024), providing medication reminders (Corbett et al., 2021), and suggesting intervention strategies (Sezgin et al., 2020).

These intelligent systems depend on collecting and analyzing personal data provided by patients, with outcomes that can significantly impact users’ health and safety. This highlights the critical role of informed consent, a cornerstone of the professional-patient relationship. Informed consent safeguards patient autonomy and addresses the inherent information asymmetry in which the professional or service provider holds greater knowledge and authority.

a https://orcid.org/0009-0005-0092-5446
b https://orcid.org/0000-0002-8689-285X
c https://orcid.org/0000-0003-1320-8006
d https://orcid.org/0000-0002-5123-5075
e https://orcid.org/0000-0001-9816-7439


Jaggi, I., Carli, R., Buzcu, B., Schumacher, M. and Calvaresi, D.

Consent Understanding and Verification for Personalized Assistive Systems.

DOI: 10.5220/0013376300003890

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 17th International Conference on Agents and Artificial Intelligence (ICAART 2025) - Volume 1, pages 694-701

ISBN: 978-989-758-737-5; ISSN: 2184-433X

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

When intelligent systems mediate this relationship, it becomes vital to implement mechanisms that not only disseminate legal documentation but also ensure that users comprehend the provided information. These mechanisms mitigate potential adverse consequences arising from misunderstanding or insufficient awareness and reduce developers’ liability by demonstrating efforts to inform users effectively. This is especially the case for consent given by patients in clinical settings.

Current consent systems typically rely on static declarations and detailed descriptions (Kaye et al., 2014). However, these approaches often overwhelm users with complexity and information overload (Schermer et al., 2014), leaving them unable to make informed decisions. As a result, users may inadvertently agree to terms they do not fully understand, risking unintended uses of their data. For instance, sensitive medical data could be shared with third parties without the user’s explicit awareness (Falagas et al., 2009). This underscores the urgent need for innovative consent solutions that simplify processes while enhancing user comprehension and competence.

To address these challenges, this paper proposes two key contributions: (i) an interactive method for structuring consent into distinct sections with summaries and examples that convey their meaning effectively, particularly in scenarios lacking professional support; and (ii) a question-based verification mechanism to confirm user comprehension, supplemented by reinforcement measures to address gaps in understanding. To test the overall system architecture, we integrate both contributions into the EREBOTS v3.0 framework, with the aim of ensuring that users are thoroughly informed about data usage and of promoting long-term understanding of consent. EREBOTS v3.0 is a multi-agent framework for developing modular and explainable virtual assistants. Each user is represented by a personal agent that manages individual interactions for personalized, adaptive experiences. Additional agents, such as a gateway agent, facilitate seamless communication between front-end interfaces (e.g., mobile apps) and back-end systems. EREBOTS adheres to principles of modularity and data protection, supporting plug-and-play components for customizable workflows and employing GDPR-compliant data stores such as Pryv to securely manage sensitive data. This ensures transparency in data processing and robust data protection.
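The one-to-one mapping between users and personal agents, fronted by a gateway agent, can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the EREBOTS API: the class and method names (`Gateway`, `PersonalAgent`, `route`, `agent_for`) are hypothetical, agents are plain in-process objects rather than agents on a multi-agent platform, and messages are plain strings.

```python
from dataclasses import dataclass, field


@dataclass
class PersonalAgent:
    """Hypothetical stand-in for a personal agent; one instance exists per user."""
    user_id: str
    history: list[str] = field(default_factory=list)

    def handle(self, text: str) -> str:
        # A real agent would drive its state machines here; this sketch only logs and acknowledges.
        self.history.append(text)
        return f"[{self.user_id}] received: {text}"


class Gateway:
    """Hypothetical gateway: forwards front-end messages to the matching personal agent."""

    def __init__(self) -> None:
        self._agents: dict[str, PersonalAgent] = {}

    def agent_for(self, user_id: str) -> PersonalAgent:
        # One-to-one mapping: create the agent on first contact, reuse it afterwards.
        if user_id not in self._agents:
            self._agents[user_id] = PersonalAgent(user_id)
        return self._agents[user_id]

    def route(self, user_id: str, text: str) -> str:
        return self.agent_for(user_id).handle(text)

    def agent_count(self) -> int:
        return len(self._agents)


gateway = Gateway()
gateway.route("alice", "hi")
gateway.route("bob", "hello")
print(gateway.route("alice", "again"))  # [alice] received: again
print(gateway.agent_count())            # 2
```

Creating agents lazily on first contact keeps the mapping implicit; persistence and per-user authentication are left out of this sketch.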

By leveraging EREBOTS’ modularity and real-time feedback mechanisms, the proposed consent system offers a seamless and customizable user experience. It addresses critical challenges in healthcare, such as ensuring transparency in data usage and fostering trust. This approach simplifies the consent process while laying the foundation for user-friendly and reliable solutions in dialog-based healthcare systems.

The remainder of this paper is organized as follows. Section 2 reviews the relevant state of the art. Section 3 describes the system architecture and module behaviors. Finally, Section 4 discusses the contributions and their potential impact, outlines future work, and concludes the paper.

2 STATE OF THE ART

The increasing reliance on digital systems for data management has heightened the importance of robust consent mechanisms, particularly in sensitive domains such as healthcare and conversational AI. This section reviews advancements in consent management systems, their application in assistive technologies, and ongoing challenges in ensuring informed and verifiable consent.

2.1 Consent Information in Modern Systems

Consent management has emerged as a central component of online systems, driven by the latent value of user data and the increasing diversity of its applications. A key regulatory framework underpinning consent management is the General Data Protection Regulation (GDPR) (Robol et al., 2022). The advent of digitalization has catalyzed research into Dynamic Consent Management Systems (DCMS). These systems enable users to continually manage and update their consent preferences, offering them greater control over their personal data in real time (Albanese et al., 2020). By leveraging online and mobile platforms, consent forms can be stored electronically, allowing users to review and modify their preferences at any time. These platforms also facilitate direct communication with researchers, enabling users to ask questions, request additional information, or specify preferences for future research projects. Furthermore, DCMS can accommodate diverse consent modalities, such as broad consent, specific consent, or meta-consent, tailored to the research context (Budin-Ljøsne et al., 2017).

Technological advancements have significantly enhanced the usability and security of consent management systems. Blockchain technology, for example, is increasingly employed to ensure the immutability, traceability, and accessibility of consent and data records (Albanese et al., 2020). Simultaneously, privacy-preserving techniques such as differential privacy and zero-knowledge proofs are being used to safeguard data confidentiality (Khalid et al., 2023a). Research has demonstrated that interactive platforms and multimedia tools improve user satisfaction and comprehension of the consent process. Moreover, user-centered and transparent design methodologies have been shown to strengthen trust in digital services (Gesualdo et al., 2021). The healthcare sector represents a critical application domain for DCMS, where robust confidentiality measures are essential to safeguard patient privacy. These systems ensure that data is used strictly within the scope of the granted consent, thereby mitigating risks such as privacy breaches and identity theft (Khalid et al., 2023b).
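Two of the DCMS properties above, revisable consent decisions and tamper-evident, traceable records, can be illustrated together in a minimal Python sketch. It uses a simple SHA-256 hash chain as a stand-in for a blockchain (no distribution, no consensus), and all names (`ConsentRecord`, `ConsentLedger`, the `scope` field) are hypothetical; it is not the design of any of the cited systems.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class ConsentRecord:
    user_id: str
    scope: str       # purpose or data category the decision applies to
    granted: bool
    timestamp: int   # seconds since epoch, supplied by the caller
    prev_hash: str   # digest of the previous record, linking the chain
    digest: str      # hash of this record's content plus prev_hash


class ConsentLedger:
    """Append-only, hash-chained log of consent decisions (illustrative only)."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.records: list[ConsentRecord] = []

    @staticmethod
    def _hash(user_id: str, scope: str, granted: bool, timestamp: int, prev_hash: str) -> str:
        payload = json.dumps([user_id, scope, granted, timestamp, prev_hash])
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def record(self, user_id: str, scope: str, granted: bool, timestamp: int) -> ConsentRecord:
        prev = self.records[-1].digest if self.records else self.GENESIS
        rec = ConsentRecord(user_id, scope, granted, timestamp, prev,
                            self._hash(user_id, scope, granted, timestamp, prev))
        self.records.append(rec)
        return rec

    def current(self, user_id: str, scope: str) -> bool:
        # Dynamic consent: the most recent decision for (user, scope) wins; default is no consent.
        for rec in reversed(self.records):
            if rec.user_id == user_id and rec.scope == scope:
                return rec.granted
        return False

    def verify(self) -> bool:
        # Recompute every link; editing any stored record breaks the chain.
        prev = self.GENESIS
        for rec in self.records:
            if rec.prev_hash != prev:
                return False
            if rec.digest != self._hash(rec.user_id, rec.scope, rec.granted, rec.timestamp, prev):
                return False
            prev = rec.digest
        return True


ledger = ConsentLedger()
ledger.record("alice", "secondary_research", True, timestamp=1)
ledger.record("alice", "secondary_research", False, timestamp=2)  # later withdrawal
print(ledger.current("alice", "secondary_research"))  # False
print(ledger.verify())                                # True
```

The history is never overwritten: a withdrawal is a new record, so the full sequence of decisions remains auditable.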

2.2 Consent in Assistive Conversational Systems

Conversational systems, including voice assistants and chatbots, are increasingly used across various healthcare applications, such as telerehabilitation and accessibility. These AI-driven systems offer unique opportunities to enhance the consent process by addressing comprehension challenges during user interactions. AI-powered conversational agents can present the consent process in incremental steps, allowing users to digest information at their own pace. Moreover, the ability to interact with these systems and pose questions in real time helps resolve ambiguities. When these chatbots are designed as friendly research assistants that (pro)actively engage users, patients feel more involved and are encouraged to participate actively (Xiao et al., 2023). These systems provide patients with continuous access to detailed information, reducing reliance on time-constrained human interactions. Additionally, conversational agents can adapt their responses to the patient’s level of language comprehension, thereby alleviating the burden on medical personnel while empowering patients to make informed decisions (Allen et al., 2024). Ethical and legal considerations remain essential in the design and implementation of such systems. Concerns surrounding privacy, algorithmic fairness, and the potential for misunderstandings in agent-user interactions necessitate transparent, user-centered design processes. This is particularly critical when developing assistive technologies for vulnerable populations, such as older adults or individuals with cognitive impairments. To address these challenges, iterative development and active involvement of end users are essential (Wangmo et al., 2019).

2.3 Challenges in Consent Comprehension and Verification

Both clinical and non-clinical domains face significant challenges in ensuring the comprehension and verification of informed consent (Manson and O’Neill, 2007), a cornerstone of patient autonomy (Harish et al., 2015). Despite its importance, numerous studies reveal persistent deficiencies in the informed consent process (ICP), undermining its legal validity and ethical integrity (Delany, 2005).

A primary issue is the limited comprehension of consent information by participants. Research indicates that many individuals fail to grasp critical aspects of consent, such as the purpose of a study, associated risks, and the concept of randomization. A systematic review by Pietrzykowski and Smilowska (2021) found that fewer than half of the participants could recall essential study details, including risks and the voluntary nature of participation. Similarly, Wisgalla and Hasford (2022) highlighted that consent documents are often excessively lengthy and written in complex language, making them challenging to understand even for individuals with advanced education, such as those holding PhDs. Consequently, such documents can obscure critical information for laypersons or individuals with limited literacy, contributing to widespread misunderstandings. For instance, nearly 45% of participants in clinical research are unable to identify a single risk associated with the studies in which they participate. Another critical challenge is cognitive and informational overload. The extensive and intricate nature of consent information can overwhelm patients, hindering their ability to provide truly informed consent. This phenomenon, termed “informational overload”, is well documented by Bester et al. (2016), who emphasize that excessive detail can render the ICP ineffective.

To address these challenges, strategies such as the teach-back method have been proposed. This technique involves asking patients to reiterate the information they have received in their own words, allowing misunderstandings to be identified and corrected proactively. A study on surgical education demonstrated that the teach-back method significantly improved patients’ understanding of risks and benefits while enhancing their trust in medical professionals. These findings underscore the positive impact of active engagement and repetition on the informed consent process (Seely et al., 2022).


3 ARCHITECTURE AND IMPLEMENTATION

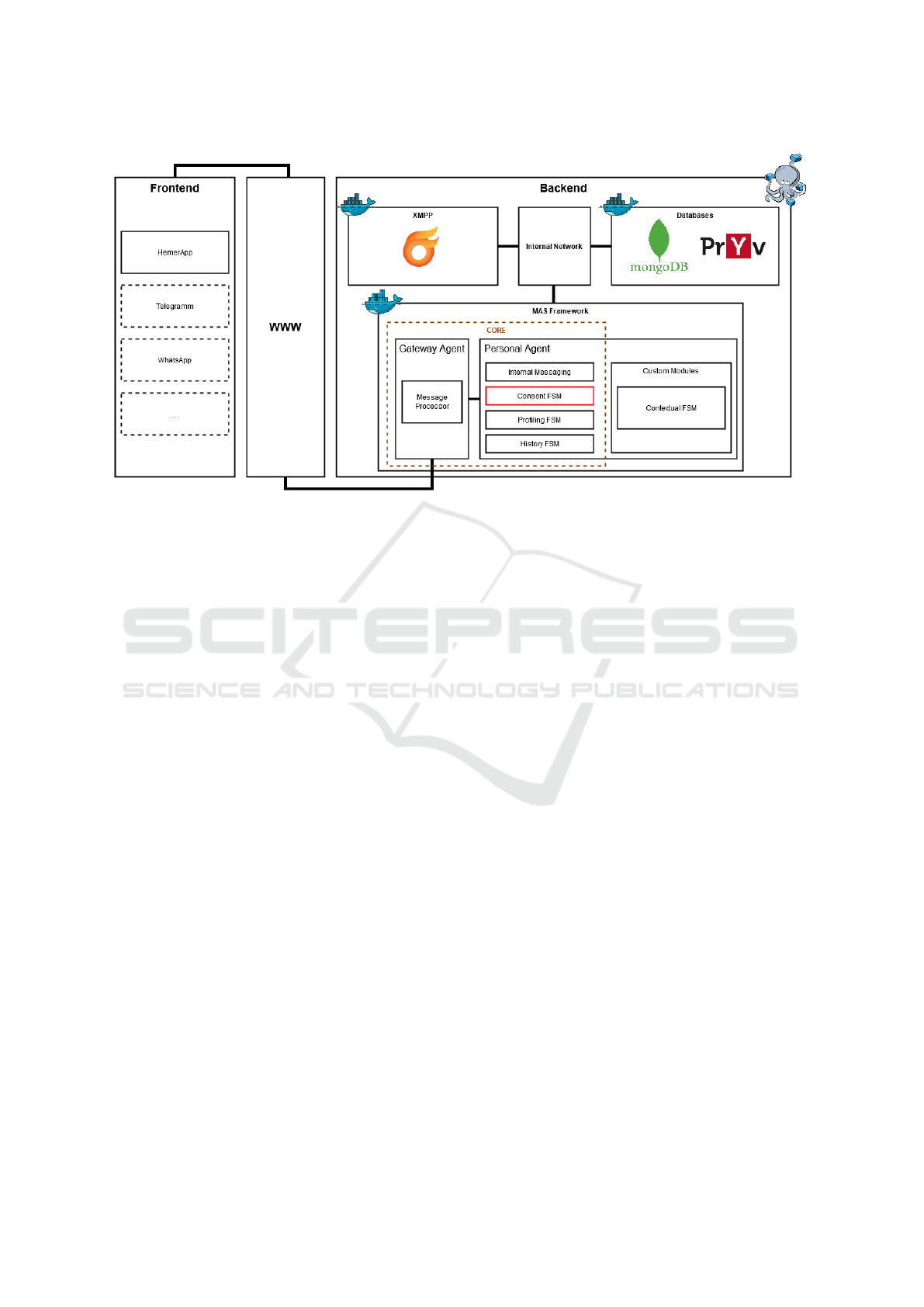

Figure 1 illustrates the extension of the EREBOTS framework with a dynamic consent management system. EREBOTS is a modular, agent-based platform that supports the creation of GDPR-compliant virtual assistants, incorporating both core modules (which provide the underlying communication mechanisms) and custom modules (such as an Explainable AI (XAI) engine for user-oriented explanations (Buzcu et al., 2024)). The framework’s customizability and modularity allow for plug-and-play extensions, including finite state machines (FSMs), user profiling systems, and feedback management. Its adaptability, transparency, and one-to-one user-agent mapping make it well suited for integrating a customized consent management system.

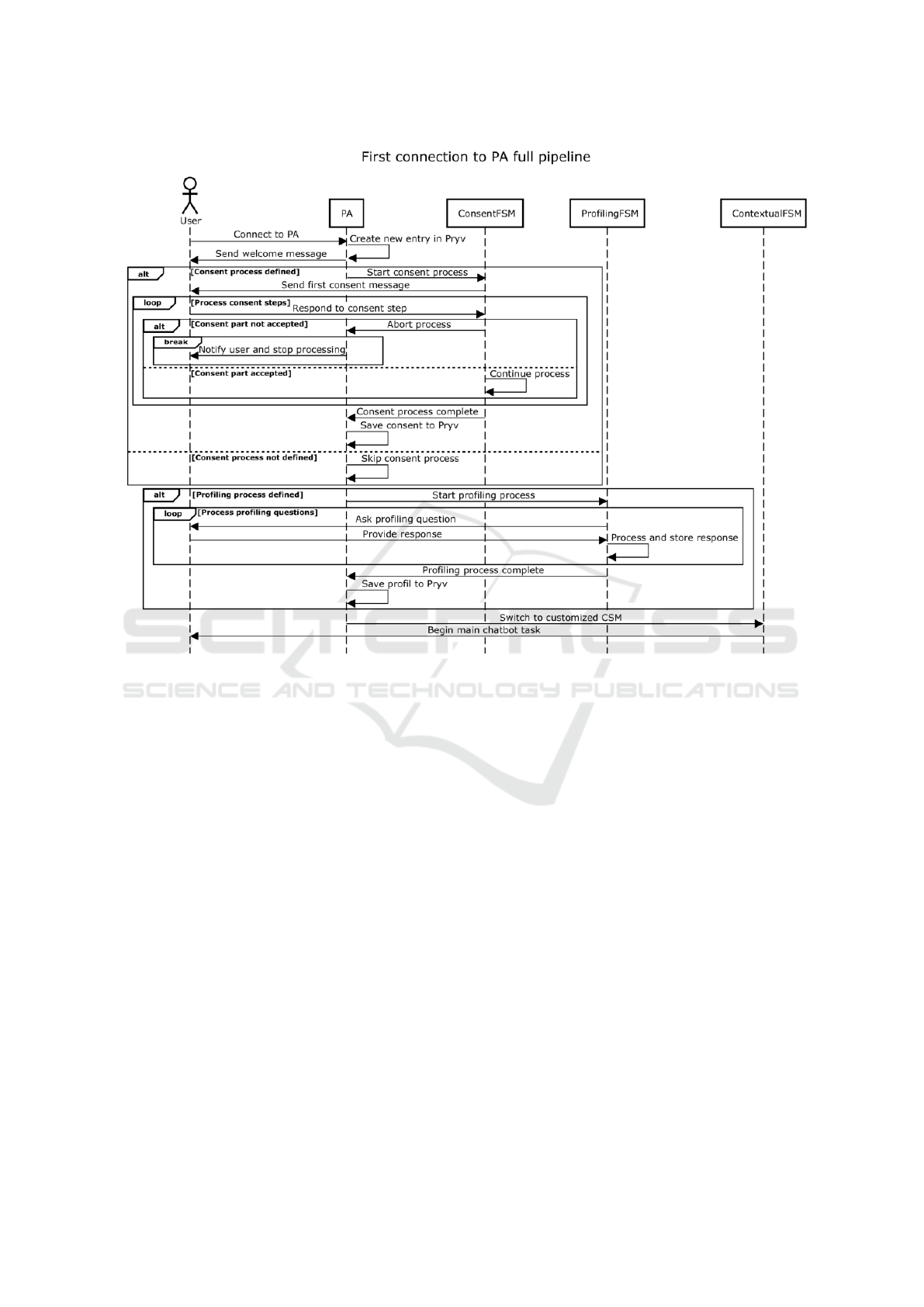

3.1 Pipeline

Consent management is integrated as an optional module into the core functionality of the personal agents (PAs) within the EREBOTS architecture. This pipeline is identical across all PAs to ensure fairness and observability. Figure 2 depicts the entire consent management process, which can be divided into the following main stages based on user interaction.

1. Initialization:
• The user connects to the PA, and a new entry with the user’s private information is created in Pryv.
• The user receives an explanation of the chatbot’s purpose and interaction instructions.
2. Consent Process:
• If no consent process is defined (see [Consent process not defined]), the interaction proceeds without requesting consent from the user. Otherwise, the consent process begins after the welcome message (explained in Section 3.2).
• User consent is gathered in incremental steps (see [Process consent steps]). At each step, the user is prompted to indicate their understanding of the system’s implications.
• If the user declines any part of the consent (see [Consent part not accepted]), the process terminates, and the PA stops processing messages.
• Upon completion, the consent is stored in Pryv for compliance and future interactions.
3. User Profiling & Task Execution:
• The system transitions to the profiling state, where ProfilingFSM is used to create a custom user profile stored in Pryv.
• The PA switches to the customized Conversational State Machine (CSM) defined by the developer, marking the start of the PA’s main task.
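The pipeline stages above can be summarized as a small state progression. The sketch below is a hypothetical simplification, not part of EREBOTS: the `Stage` enum and `run_pipeline` function are illustrative names, all of the user's consent decisions are passed in upfront instead of being exchanged as messages, and the function only records which stages are visited.

```python
from enum import Enum, auto


class Stage(Enum):
    INITIALIZATION = auto()  # PA connected, user entry stored, welcome message sent
    CONSENT = auto()         # incremental consent steps
    PROFILING = auto()       # profiling state machine builds the user profile
    TASK = auto()            # developer-defined conversational state machine (CSM)
    TERMINATED = auto()      # a consent part was declined; the PA stops processing messages


def run_pipeline(consent_defined: bool, decisions: list[bool]) -> list[Stage]:
    """Return the stages a personal agent passes through.

    `decisions` holds the user's accept (True) / decline (False) answer for each
    consent part, in presentation order.
    """
    trace = [Stage.INITIALIZATION]
    if consent_defined:
        trace.append(Stage.CONSENT)
        for accepted in decisions:
            if not accepted:
                trace.append(Stage.TERMINATED)
                return trace
        # All parts accepted: this is where the consent would be persisted (Pryv in EREBOTS).
    trace += [Stage.PROFILING, Stage.TASK]
    return trace


print([s.name for s in run_pipeline(True, [True, True])])
# ['INITIALIZATION', 'CONSENT', 'PROFILING', 'TASK']
print([s.name for s in run_pipeline(True, [True, False])])
# ['INITIALIZATION', 'CONSENT', 'TERMINATED']
```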

3.2 The Consent FSM

The Consent FSM manages user interactions throughout the consent process. Each state represents a segment of the consent form or a related question. Configuration is managed via a web interface and stored in two YAML files: one for consent segments and one for questions. These files are validated by experts to ensure accuracy. The FSM loads all states at startup, and user responses determine the transition to the next state. Consent is gathered at two key moments: (i) before account registration, when consent segments are presented alongside examples and brief explanations, and (ii) during use of the VPA, when follow-up questions further elaborate on the initial consent.
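A minimal sketch of how the two configuration files could be represented once parsed, assuming each YAML file is loaded into a plain dictionary (for example with PyYAML's `yaml.safe_load`). The field names (`summary`, `example`, `segment_id`, `kind`, `explanation`) and the cross-check are assumptions for illustration; the actual EREBOTS file schema is not described here, and the heart-rate content is invented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConsentSegment:
    id: str
    text: str     # the expert-validated wording of this part of the consent form
    summary: str  # short plain-language summary
    example: str  # concrete illustration of what the segment means in practice


@dataclass(frozen=True)
class Question:
    id: str
    segment_id: str   # the consent segment this question checks
    kind: str         # "true_false", "numeric" or "open"
    prompt: str
    answer: object
    explanation: str  # reinforcement text shown after a wrong answer


KINDS = {"true_false", "numeric", "open"}


def load_config(segments_doc: dict, questions_doc: dict) -> tuple[list[ConsentSegment], list[Question]]:
    """Build typed objects from the two parsed YAML documents and cross-check them."""
    segments = [ConsentSegment(**s) for s in segments_doc["segments"]]
    known = {s.id for s in segments}
    questions = [Question(**q) for q in questions_doc["questions"]]
    for q in questions:
        if q.segment_id not in known:
            raise ValueError(f"question {q.id!r} refers to unknown segment {q.segment_id!r}")
        if q.kind not in KINDS:
            raise ValueError(f"question {q.id!r} has unsupported kind {q.kind!r}")
    return segments, questions


segments_doc = {"segments": [{
    "id": "data", "text": "We collect heart-rate data from your wearable.",
    "summary": "Your heart rate is recorded.",
    "example": "Your watch sends a reading every few minutes."}]}
questions_doc = {"questions": [{
    "id": "q1", "segment_id": "data", "kind": "true_false",
    "prompt": "True or false: your heart rate is collected.", "answer": True,
    "explanation": "Heart-rate data is collected from your wearable."}]}

segments, questions = load_config(segments_doc, questions_doc)
print(len(segments), questions[0].segment_id)  # 1 data
```

Under this assumed schema, the segments file would be a YAML document with a top-level `segments:` list whose items carry the four `ConsentSegment` keys, and the questions file a top-level `questions:` list.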

Consent Segmentation. The user is presented with sections of the consent form that can be accepted or rejected, as per legal requirements (European Parliament and European Council, 2016). Upon acceptance, the FSM proceeds to the next section or begins providing services. Rejection at any point terminates the process, and the decision is stored in the privacy-preserving data store Pryv (https://www.pryv.com/). If consent is withdrawn at any point, the chatbot stops, ceasing all operations. If new consent is provided (the consent process can be restarted at any time), the services are reactivated.

Informed consent is a critical legal principle in the EU, safeguarding autonomy and personal data (European Parliament and European Council, 2016). It must be given freely, explicitly, and on the basis of clear information, ensuring that the user comprehends the terms (European Commission, 2018; European Parliament and European Council, 2016). This is especially important in AI systems, where ensuring real understanding, not just acceptance in order to proceed, poses a significant challenge. To enhance comprehension, the consent information is organized into discrete blocks (e.g., data collected, purpose, risks). The moment of consent should be clearly distinct from other interactions, and design elements such as text segmentation and visual cues can aid clarity. Insights from cognitive science and psychology can inform the design, ensuring that users are not overwhelmed with technical language. A summary of key concepts may also be provided if necessary. The FSM validates user responses: correct answers transition to the next state, while incorrect ones prompt the user to review the information and provide a new response. Once all consent sections are accepted, the user’s consent is stored in Pryv. If the consent is modified, or segments are added or changed, the updates are presented to the user upon reconnecting. The user must review and either accept or reject the updates. If they are rejected, the chatbot stops operating.

Figure 1: Architecture of the EREBOTS Framework.
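The accept, reject, and withdraw behaviour of the Consent Segmentation step, together with the re-presentation of updated segments, can be sketched with a small per-user state object. Everything here is hypothetical: `ConsentSession` and its methods are illustrative names, an in-memory dictionary stands in for Pryv, segment changes are detected through an integer version per segment, and the sketch assumes that services stay suspended until updated segments are accepted.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentSession:
    """Per-user consent state; `decisions` stands in for the records kept in Pryv."""
    form: dict[str, int]  # current consent form: segment id -> version
    decisions: dict[str, tuple[int, bool]] = field(default_factory=dict)  # id -> (version, accepted)
    active: bool = False  # whether the assistant may provide services

    def pending(self) -> list[str]:
        # Segments never answered, rejected, or changed since the user accepted them.
        return [sid for sid, ver in self.form.items()
                if self.decisions.get(sid) != (ver, True)]

    def answer(self, segment_id: str, accepted: bool) -> None:
        # Every decision, including a rejection, is stored with the version it refers to.
        self.decisions[segment_id] = (self.form[segment_id], accepted)
        # A rejection stops the assistant; services start only once nothing is pending.
        self.active = accepted and not self.pending()

    def withdraw(self) -> None:
        # Withdrawal marks every segment as not accepted and stops all operations.
        for sid, ver in self.form.items():
            self.decisions[sid] = (ver, False)
        self.active = False

    def update_form(self, form: dict[str, int]) -> None:
        # New or re-versioned segments become pending and are shown on the next connection.
        self.form = dict(form)
        self.active = self.active and not self.pending()


session = ConsentSession({"data": 1, "purpose": 1})
session.answer("data", True)
session.answer("purpose", True)
print(session.active)     # True
session.update_form({"data": 1, "purpose": 2, "risks": 1})
print(session.pending())  # ['purpose', 'risks']
```

Storing the version alongside each decision is what lets a changed segment be detected without re-asking for unchanged ones.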

Consent Verification. After consent is given, periodic checks will be conducted to ensure that the user’s understanding is, and remains, accurate. To this end, users will be prompted throughout system use with questions that verify their understanding of the consented terms. If any misunderstanding is detected, relevant information will be provided to resolve it. Once it is clarified, users can modify or reconfirm their consent. The consent validation process includes multiple question types: (i) True/False, to verify correctness; (ii) numerical answers, such as time periods; and (iii) open-ended responses, such as specific terms. Future work will address the timing and frequency of questions, as well as which questions to prompt and when to re-prompt specific users or categories of users.
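The three question types used for verification could be checked as follows. This is a hypothetical sketch: the question dictionary keys (`kind`, `answer`, `explanation`), the accepted yes/no spellings, and the keyword match for open answers are assumptions, and the six-month retention period in the usage lines is an invented example rather than a property of the system.

```python
from typing import Optional


def check_answer(kind: str, expected, reply: str, tolerance: float = 0.0) -> bool:
    """Return True if `reply` matches `expected` for the given question type."""
    text = reply.strip().lower()
    if kind == "true_false":
        yes, no = {"true", "yes", "y"}, {"false", "no", "n"}
        if text not in yes | no:
            return False  # unparseable replies count as not understood
        return (text in yes) == bool(expected)
    if kind == "numeric":
        try:
            value = float(text)
        except ValueError:
            return False
        return abs(value - float(expected)) <= tolerance
    if kind == "open":
        # Naive check: every expected key term must appear somewhere in the reply.
        return all(term.lower() in text for term in expected)
    raise ValueError(f"unknown question kind: {kind!r}")


def verify(question: dict, reply: str) -> Optional[str]:
    """Return None if the reply shows understanding, else the reinforcement text to show."""
    if check_answer(question["kind"], question["answer"], reply):
        return None
    return question["explanation"]


retention = {"kind": "numeric", "answer": 6,
             "explanation": "Your data is kept for 6 months, then deleted."}
print(verify(retention, "6"))   # None
print(verify(retention, "24"))  # Your data is kept for 6 months, then deleted.
```

Keyword matching is deliberately crude and would misjudge paraphrased answers; it only illustrates where such a check sits in the verification flow.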

4 DISCUSSION & CONCLUSIONS

This paper presents a dynamic approach to consent management that addresses key challenges, including mitigating the information overload inherent in traditional models, verifying the actual understanding of consent, and reinforcing correct understanding over time. Central to the proposed system is a stepwise approach to presenting consent, which breaks down complex and monolithic terms into smaller, more digestible sections. Segmenting the consent process into manageable parts makes users more likely to understand the information they are consenting to. Additionally, alongside a straightforward presentation of the terms (to comply with legal requirements), the system guides users step by step and offers simplified explanations, examples, and aggregated information for those less familiar with formal terminology. This approach reduces the risk of misunderstanding or unintentional, uninformed consent.

The proposed system is integrated within the EREBOTS v3.0 framework, a modular, agent-based platform that allows for flexible adaptation to dynamic consent management needs. By default, the system adheres to GDPR requirements, ensuring that privacy is maintained throughout the process. The modularity of the system facilitates not only the development and configuration of the consent communication process but also the personalized verification of users’ understanding of consent. This is achieved through periodic follow-up questions, which reinforce comprehension and help ensure that consent rests on a genuine understanding of its implications.

A key feature of this model is its balance between offering users adequate time to review the consent process and avoiding information overload. By breaking consent into smaller, digestible steps and incorporating regular checks on user understanding, the system is expected to reduce the risk of uninformed consent, a common issue in many existing systems. This iterative review process enables the early identification of gaps in understanding, preventing misinterpretations before they lead to uninformed decision-making.

Figure 2: Sequence Diagram of the Pipeline Flow.

Despite its advantages, AI-based consent management raises concerns regarding potential persuasive techniques that could influence user decisions. It is critical to ensure that the iterative nature of the consent process does not inadvertently coerce users into accepting terms they do not fully comprehend. Maintaining the integrity of informed consent requires that the process remain free from undue influence. To address this, the system incorporates transparent, well-defined mechanisms that track both the information provided and the manner in which it is delivered. This transparency makes the system subject to rigorous oversight, reducing the trust gap that might arise in the absence of human-human interactions (such as a doctor explaining terms in simpler language). Furthermore, compared with purely human-human interactions, the system mitigates the risk of coercive behaviors, which are often difficult to detect and prove in traditional settings. In this way, the AI system offers a more efficient means of ensuring the reliability and legitimacy of consent, promoting ethical standards and user autonomy.
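One way to make the delivery of consent information traceable is an append-only log that records what was shown, in which form, and how the user replied. The sketch below is an assumption about what such a mechanism could look like, not the system’s actual implementation; `DeliveryEvent`, `AuditTrail`, the delivery-mode labels, and the sample messages are hypothetical.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class DeliveryEvent:
    user_id: str
    step: str       # consent segment or verification question id
    mode: str       # how it was delivered, e.g. "full_text", "summary", "example", "reminder"
    content: str    # exact text shown to the user
    response: str   # the user's reply, verbatim
    timestamp: int  # seconds since epoch, supplied by the caller


class AuditTrail:
    """Append-only record of what was shown, how, and what the user answered."""

    def __init__(self) -> None:
        self._events: list[DeliveryEvent] = []

    def log(self, event: DeliveryEvent) -> None:
        self._events.append(event)

    def for_user(self, user_id: str) -> list[DeliveryEvent]:
        return [e for e in self._events if e.user_id == user_id]

    def times_shown(self, user_id: str, step: str) -> int:
        # Repeated presentations of the same step are one signal a reviewer could check
        # when looking for undue pressure to accept.
        return sum(1 for e in self.for_user(user_id) if e.step == step)

    def export(self, user_id: str) -> str:
        # JSON export for an external reviewer or auditor.
        return json.dumps([asdict(e) for e in self.for_user(user_id)], indent=2)


trail = AuditTrail()
trail.log(DeliveryEvent("alice", "data", "full_text", "We collect heart-rate data.", "accept", 1))
trail.log(DeliveryEvent("alice", "q1", "reminder", "Is your data shared with third parties?", "no", 2))
print(trail.times_shown("alice", "data"))  # 1
```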

In summary, the proposed dynamic consent management system, with its step-by-step design and continuous verification of understanding, holds promise for enhancing both user trust and the security of the consent process. The system’s modular architecture enables a flexible and adaptable approach that prevents cognitive overload while supporting informed decision-making. This approach not only addresses the limitations of traditional consent systems, particularly in complex environments such as healthcare, but also complies with legal and ethical standards, ensuring that consent is fully informed and free from coercion.

The development of this system within the EREBOTS v3.0 framework represents a significant advancement in consent management. The interactive, user-centered design, coupled with continuous comprehension checks, provides a comprehensive solution to the challenges posed by conventional consent systems. Future work will focus on refining the individual components of the consent process in collaboration with legal AI experts, creating testable consent-related questions and examples, and conducting initial trials in the healthcare sector as part of a European project. These trials will offer valuable insights into the system’s real-world effectiveness and its potential for broader adoption.

ACKNOWLEDGEMENTS

Partially supported by CHIST-ERA project EXPECTATION (EU grant CHIST-ERA-19-XAI-005 and national grant 20CH21 195530).

REFERENCES

Albanese, G., Calbimonte, J.-P., Schumacher, M., and Calvaresi, D. (2020). Dynamic consent management for clinical trials via private blockchain technology. Journal of Ambient Intelligence and Humanized Computing, 11(11):4909–4926.

Allen, J. W., Earp, B. D., Koplin, J., and Wilkinson, D. (2024). Consent-GPT: is it ethical to delegate procedural consent to conversational AI? Journal of Medical Ethics, 50(2):77–83.

Bester, J., Horsburgh, C., and Kodish, E. (2016). The limits of informed consent for an overwhelmed patient: Clinicians’ role in protecting patients and preventing overwhelm. AMA Journal of Ethics, 18:869–886.

Budin-Ljøsne, I., Teare, H. J. A., Kaye, J., Beck, S., Bentzen, H. B., Caenazzo, L., Collett, C., D’Abramo, F., Felzmann, H., Finlay, T., Javaid, M. K., Jones, E., Katić, V., Simpson, A., and Mascalzoni, D. (2017). Dynamic consent: a potential solution to some of the challenges of modern biomedical research. BMC Medical Ethics, 18(1):4.

Buzcu, B., Pannatier, Y., Aydoğan, R., Ignaz Schumacher, M., Calbimonte, J.-P., and Calvaresi, D. (2024). A framework for explainable multi-purpose virtual assistants: A nutrition-focused case study. In International Workshop on Explainable, Transparent Autonomous Agents and Multi-Agent Systems, pages 58–78. Springer.

Corbett, C. F., Combs, E. M., Chandarana, P. S., Stringfellow, I., Worthy, K., Nguyen, T., Wright, P. J., and O’Kane, J. M. (2021). Medication adherence reminder system for virtual home assistants: Mixed methods evaluation study. JMIR Formative Research, 5(7):e27327.

Cruz Casados, J., Cervantes López, M. J., and Gil Herrera, R. d. J. (2024). Promoting healthy eating habits via intelligent virtual assistants, improving monitoring by nutritional specialists: State of the art. In Xie, X., Styles, I., Powathil, G., and Ceccarelli, M., editors, Artificial Intelligence in Healthcare, pages 170–184, Cham. Springer Nature Switzerland.

Cusack, N. M., Venkatraman, P. D., Raza, U., and Faisal, A. (2024). Review—smart wearable sensors for health and lifestyle monitoring: Commercial and emerging solutions. ECS Sensors Plus, 3(1):017001.

Delany, C. M. (2005). Respecting patient autonomy and obtaining their informed consent: ethical theory—missing in action. Physiotherapy, 91(4):197–203.

Eguia, H., Sánchez-Bocanegra, C. L., Vinciarelli, F., Alvarez-Lopez, F., and Saigí-Rubió, F. (2024). Clinical decision support and natural language processing in medicine: Systematic literature review. Journal of Medical Internet Research, 26:e55315.

European Commission (2018). How should my consent be requested? Accessed: 2024-12-13.

European Parliament and European Council (2016). General Data Protection Regulation (GDPR), Regulation (EU) 2016/679 of the European Parliament and of the Council of 27 April 2016 on the protection of natural persons with regard to the processing of personal data and on the free movement of such data, and repealing Directive 95/46/EC.

Falagas, M. E., Korbila, I. P., Giannopoulou, K. P., Kondilis, B. K., and Peppas, G. (2009). Informed consent: how much and what do patients understand? The American Journal of Surgery, 198(3):420–435.

Fang, C. M., Danry, V., Whitmore, N., Bao, A., Hutchison, A., Pierce, C., and Maes, P. (2024). PhysioLLM: Supporting personalized health insights with wearables and large language models.

Gesualdo, F., Daverio, M., Palazzani, L., Dimitriou, D., Diez-Domingo, J., Fons-Martinez, J., Jackson, S., Vignally, P., Rizzo, C., and Tozzi, A. E. (2021). Digital tools in the informed consent process: a systematic review. BMC Medical Ethics, 22(1):18.

Harish, D., Kumar, A., and Singh, A. (2015). Patient autonomy and informed consent: the core of modern day ethical medical. Journal of Indian Academy of Forensic Medicine, 37(4):410–414.

Kaye, J., Whitley, E., Lund, D., Morrison, M., Teare, H., and Melham, K. (2014). Dynamic consent: A patient interface for twenty-first century research networks. European Journal of Human Genetics: EJHG, 23.

Khalid, M. I., Ahmed, M., Helfert, M., and Kim, J. (2023a). Privacy-first paradigm for dynamic consent management systems: Empowering data subjects through decentralized data controllers and privacy-preserving techniques. Electronics, 12(24).

Khalid, M. I., Ahmed, M., and Kim, J. (2023b). Enhancing data protection in dynamic consent management systems: Formalizing privacy and security definitions with differential privacy, decentralization, and zero-knowledge proofs. Sensors, 23(17).

Ma, Y., Achiche, S., Tu, G., Vicente, S., Lessard, D., Engler, K., Lemire, B., Laymouna, M., Pokomandy, A., Cox, J., and Lebouche, B. (2024). The first AI-based chatbot to promote HIV self-management: A mixed methods usability study. HIV Medicine, pages n/a–n/a.

Manson, N. C. and O’Neill, O. (2007). Rethinking informed consent in bioethics. Cambridge University Press.


Pietrzykowski, T. and Smilowska, K. (2021). The reality of informed consent: empirical studies on patient comprehension—systematic review. Trials, 22(1):57.

Robol, M., Breaux, T. D., Paja, E., and Giorgini, P. (2022). Consent verification monitoring.

Schermer, B. W., Custers, B., and van der Hof, S. (2014). The crisis of consent: how stronger legal protection may lead to weaker consent in data protection. Ethics and Information Technology, 16(2):171–182.

Seely, K. D., Higgs, J. A., Butts, L., Roe, J. M., Merrill, C. B., Zapata, I., and Nigh, A. (2022). The “teach-back” method improves surgical informed consent and shared decision-making: a proof of concept study. Patient Safety in Surgery, 16(1):33.

Sezgin, E., Militello, L. K., Huang, Y., and Lin, S. (2020). A scoping review of patient-facing, behavioral health interventions with voice assistant technology targeting self-management and healthy lifestyle behaviors. Translational Behavioral Medicine, 10(3):606–628.

Wang, D., Wang, L., Zhang, Z., Wang, D., Zhu, H., Gao, Y., Fan, X., and Tian, F. (2021). “Brilliant AI doctor” in rural clinics: Challenges in AI-powered clinical decision support system deployment. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, CHI ’21, New York, NY, USA. Association for Computing Machinery.

Wangmo, T., Lipps, M., Kressig, R. W., and Ienca, M. (2019). Ethical concerns with the use of intelligent assistive technology: findings from a qualitative study with professional stakeholders. BMC Medical Ethics, 20(1):98.

Wisgalla, A. and Hasford, J. (2022). Four reasons why too many informed consents to clinical research are invalid: a critical analysis of current practices. BMJ Open, 12(3).

Xiao, Z., Li, T. W., Karahalios, K., and Sundaram, H. (2023). Inform the uninformed: Improving online informed consent reading with an AI-powered chatbot. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, CHI ’23, pages 1–17. ACM.
