A Virtual Reality Prototype for Evaluating Emotion Induced by Poetry and Ambient Electronic Music

Irene Fondón¹ᵃ and María Luz Montesinos²ᵇ

¹ Departamento de Teoría de la Señal y Comunicaciones, Universidad de Sevilla, Sevilla, Spain

² Departamento de Fisiología Médica y Biofísica, Universidad de Sevilla, Sevilla, Spain

Keywords: Virtual Reality, Poetry, Electronic Music, Ambient Music, Emotion Induction, Emotion Recognition.

Abstract: Music and poetry evoke emotion. The neural correlates of emotion are not well known but constitute a

growing research field in neuroscience. Using brain imaging techniques, emotion elicited by music has been

addressed by several laboratories. However, most studies are focused on classical music. Surprisingly,

ambient electronic music, which possesses aesthetic characteristics prone to evoke autobiographical memories and
emotion, has not been evaluated, and only a few studies are available regarding poetry. In recent years, virtual

reality (VR) has become a powerful tool to elicit and enhance emotions, since this technology immerses the

user in a three-dimensional environment, increasing the sense of presence. In this work we describe a VR

prototype which combines ambient electronic music and poetry, as a preliminary step in our project to address

the neural correlates of emotion and self-awareness evoked by these arts.

1 INTRODUCTION

Music, like poetry, is an exclusively human

phenomenon, present in all cultures. The aesthetic

experience of music and poetry, separate or in

combination, evokes emotion.

The neural basis of human emotion is still poorly
understood, despite constituting a relevant research

field in neuroscience. Non-invasive functional

imaging techniques such as electroencephalography

(EEG) and functional magnetic resonance imaging

(fMRI) are applied to study complex brain processes,

including memory, problem solving, planning,

decision making, language, abstract reasoning, or

emotion.

Using these techniques, several works have

shown that listening to pleasant music involves the

activation of the mesolimbic system (i.e., the reward

brain system), the auditory cortex (related to auditory

memory), and the default mode network (DMN), a

network of brain regions active during mind-

wandering (Trost et al., 2012; Wilkins et al., 2014).

Most of the data available on music-elicited

emotion refers to classical music. Curiously, and

despite the relevance of electronic music in the

musical panorama since the appearance of the first
commercial synthesizers in the late 60s and early 70s,
very few brain imaging studies have paid attention to
this type of music (Aparicio-Terrés et al., 2024).
Electronic music encompasses a large and varied
number of musical genres, including popular styles
(breakbeat, EDM, techno, trance, house, dance,
dubstep, among others) and more experimental
forms, such as ambient music. In the context of
emotion and self-awareness, ambient music is
particularly interesting due to its timbral and
“atmospheric” characteristics.

ᵃ https://orcid.org/0000-0002-8955-7109
ᵇ https://orcid.org/0000-0003-3525-5874

Regarding poetry, only a few neuroimaging

studies have been carried out. They have been

focused on some aspects of the poetic language, on

the neural correlates of perceived literariness in

poetry as compared to prose, or on the brain

mechanisms involved in poetry composition (Gao &

Guo, 2018; Liu et al., 2015; O’Sullivan et al., 2015).

The emotional effect of poetic language and its

associated aesthetic value has been studied

(Wassiliwizky et al., 2017), and fMRI has shown the

activation of brain areas belonging to the primary

reward circuit.

Fondón, I. and Montesinos, M. L.
A Virtual Reality Prototype for Evaluating Emotion Induced by Poetry and Ambient Electronic Music.
DOI: 10.5220/0013376400003912
Paper published under CC license (CC BY-NC-ND 4.0)
In Proceedings of the 20th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2025) - Volume 1: GRAPP, HUCAPP and IVAPP, pages 398-407
ISBN: 978-989-758-728-3; ISSN: 2184-4321
Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

An important limitation in the study of
emotions in general, and the emotions evoked by
music in particular, is that in most published works
the stimuli are presented to the subjects in a passive,

non-immersive way. However, it is well known that

emotions are elicited much more effectively when

real experiences are simulated (Chirico et al., 2017;

Riva et al., 2007). Virtual reality (VR) constitutes a
powerful new tool for experiencing high-impact
sensations and emotions in a multisensory and
interactive way. Thus, in recent years, VR has
become a valuable tool for research on behavior
and emotions, from the perspective of both
psychology and neuroscience.

VR and Poetry. VR environments, when used to
enhance experiences related to poetry, allow artists to
abandon the restrictive two-dimensional space of
written words and enter a new 3D environment
where anything can exist. However, the limited
number of existing experiences are not interactive and
mainly consist of 360° videos (MDB, 2024). Another
use of VR in poetry is virtual events hosted on
social platforms (Poetry & Virtual Reality:
A Curious Combo. Does It Work? - YouTube, n.d.),
where the users can interact by means of their avatars.
This use constitutes a new opportunity to bring people
together regardless of their real location.

VR and Electronic Music. The integration of

electronic music and VR has been addressed in

different ways. For instance, Virtual Reality Musical

Instruments are used to create and play music in

virtual environments taking advantage of the

interactions and 3D space (Serafin et al., 2016).

Another use case is virtual concerts, which offer a
high level of presence and engagement,
as the user becomes an active agent in the experience
(Onderdijk et al., 2023; Sansar | Events, n.d.; Wave
| The Show Must Go Beyond., n.d.). When talking
about electronic music generation, several tools have
been proposed in the past. Virtuoso (Serafin et al.,
2016) is a 3D studio that offers six virtual

instruments. Another VR tool is GENESIS-RT

(Leonard et al., 2014), a platform that allows users to

create instruments and interact with sound objects.

However, the study of the mechanisms by which
electronic music induces emotion is a recently opened
and promising research field. For instance, it is known
that VR combined with electronic music induces
positive, negative and neutral emotions more
effectively, improving the quality of performance
of several singers (Zhang et al., 2021). Moreover,
several EEG-based studies have shown a promising
way of understanding music-induced emotions
(Su et al., 2024). The state-of-the-art literature
suggests that VR combined with electronic music
produces a complex effect involving sensory
immersion, neural activation and emotion induction,
which could be used in artistic applications,
medicine, psychology, etc.

VR, Poetry and Electronic Music. Considering
this, the combination of electronic music, poetry and
VR is expected to produce a high-impact
experience that improves on the sensations
users experience with poetry or music
alone. The countless opportunities that arise
from this combination help overcome the difficulties of
artistic expression, offering an impressive
multisensory experience.

We are interested in analyzing the neural

correlates of emotion and consciousness (in particular

self-awareness) elicited by ambient music and poetry,

by using EEG to register changes in brain activity. As

a preliminary step, here we present a VR prototype

which combines both elements in a virtual scenario

specially designed to enhance the emotional response

to the particular music and poems used in this art

experience.

2 MUSIC, POETRY, EMOTION AND CONSCIOUSNESS

2.1 Brain Regions Activated in Response to Music Listening

Based on the detection of changes in hemoglobin

oxygenation or BOLD signal (blood-oxygen-level-

dependent signal), fMRI makes it possible to identify

the brain regions that are activated in a given task.

When no specific task is performed, several resting

state networks have been described (Greicius et al.,

2003). Among them, the default mode network is

particularly interesting. The DMN encompasses a set

of functionally interconnected brain regions that are

deactivated when attention is focused on external

stimuli but are consistently activated when the mind

is focused on introspective or creative thinking, when

personal experiences are remembered, or when the

individual is imagining the future. Therefore, in the

last 20 years, numerous studies have evaluated the

role of the DMN in self-awareness and the

construction of a sense of self (for a recent review see

Menon, 2023).

The DMN includes cortical regions such as the

posterior cingulate cortex (PCC), retrosplenial cortex

(RSC), medial prefrontal cortex (mPFC), anterior

temporal cortex (ATC), middle temporal gyrus

(MTG), medial temporal lobe (MTL), and angular

gyrus (AG). Subcortical nodes include the anterior

and mediodorsal thalamic nuclei, medial septal

nuclei, and nucleus accumbens.

Interestingly, preferred music enhances DMN

connectivity, while the precuneus is relatively

isolated from the rest of the DMN when listening to

disliked music (Wilkins et al., 2014). Favorite songs

influence auditory cortex-hippocampus connectivity,

linked to memory, and activate the mesolimbic

reward system, releasing dopamine in the caudate and

nucleus accumbens (Salimpoor et al., 2011). Finally,

sad music, compared to happy music, fosters stronger

mind-wandering and greater DMN node centrality

(Taruffi et al., 2017).

In summary, these works show that preferred
and/or sad music engages brain circuits involved in
memory, pleasure, and self-awareness.

2.2 Evaluation of Music-Evoked Emotion: The Geneva Emotional Music Scale (GEMS)

From a psychophysiological point of view, emotions

are usually classified and quantified using a two-

dimensional valence-arousal space (Russell, 1980).

This 2D model is extensively used in EEG and/or

fMRI analyses of emotion recognition and

classification. However, most of these neuroimaging

studies use visual stimuli to induce emotions.

Emotions elicited by music seem to be different from
those produced by other types of stimuli, as they are
not behaviour-oriented or adaptive (Scherer, 2004).

Hence, a more appropriate psychological model has

been proposed for their assessment (Strauss et al.,

2024; Trost et al., 2012; Zentner et al., 2008), named

GEMS (Geneva Emotional Music Scale), in which 9

dimensions of emotion are proposed (Table 1).

Table 1: Dimensions of the Geneva Emotional Music Scale (GEMS).

| GEMS Dimension | Description |
|---|---|
| Sadness | Sad, sorrowful |
| Tension | Agitated, nervous |
| Power | Strong, triumphant |
| Joy | Joyful, amused |
| Peacefulness | Calm, meditative |
| Tenderness | In love, tender |
| Nostalgia | Sentimental, dreamy |
| Transcendence | Fascinated, overwhelmed |
| Wonder | Dazzled, moved |

Using fMRI, the regions that are activated for

classical music excerpts belonging to each of the 9

dimensions of the GEMS model have been identified

(Trost et al., 2012). Interestingly, it was observed that

the dimensions considered as “low arousal”

(tenderness, peacefulness, transcendence, nostalgia

and sadness) activated a brain network centered in

regions of the hippocampus and the vmPFC,

including the subgenual anterior cingulate cortex.

This study corroborates the relevance of memory and

the DMN in the processing of this type of music,

which would be more related to introspection,

autobiographical memories and emotion regulation

(Trost et al., 2012).
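As an illustration of how ratings on the nine GEMS dimensions could be handled in an analysis script, the following Python sketch stores one participant's ratings and summarizes them using the "low arousal" grouping mentioned above. It is only a sketch under stated assumptions: the 1 to 5 rating range, the function name `summarize_gems` and the averaging step are hypothetical choices made for this example and are not part of the GEMS instrument or of the prototype.

```python
from statistics import mean

# The nine GEMS dimensions listed in Table 1.
GEMS_DIMENSIONS = (
    "sadness", "tension", "power", "joy", "peacefulness",
    "tenderness", "nostalgia", "transcendence", "wonder",
)

# Dimensions described in the text as "low arousal" (Trost et al., 2012).
LOW_AROUSAL = frozenset(
    {"tenderness", "peacefulness", "transcendence", "nostalgia", "sadness"}
)


def summarize_gems(ratings):
    """Summarize one set of ratings (hypothetical 1-5 scale, an assumption).

    Returns the mean rating of the low-arousal dimensions, the mean rating
    of the remaining dimensions, and the highest-rated dimension.
    """
    missing = set(GEMS_DIMENSIONS) - set(ratings)
    if missing:
        raise ValueError(f"missing GEMS dimensions: {sorted(missing)}")
    low = mean(ratings[d] for d in GEMS_DIMENSIONS if d in LOW_AROUSAL)
    other = mean(ratings[d] for d in GEMS_DIMENSIONS if d not in LOW_AROUSAL)
    dominant = max(GEMS_DIMENSIONS, key=lambda d: ratings[d])
    return {"low_arousal_mean": low, "other_mean": other, "dominant": dominant}


# Invented example ratings, for illustration only.
example_ratings = {
    "sadness": 3, "tension": 1, "power": 1, "joy": 2, "peacefulness": 4,
    "tenderness": 3, "nostalgia": 5, "transcendence": 4, "wonder": 2,
}
summary = summarize_gems(example_ratings)
print(summary)
```

A summary of this kind would only be a convenience for exploring questionnaire data; any actual scoring should follow the published GEMS procedure.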

EMMA is a database containing hundreds of

music excerpts from different genres, representative

of the nine GEMS emotion categories (Strauss et al.,

2024), and it is available at https://musemap-tools.uibk.ac.at/emma.
Although the EMMA database

contains some electronic music excerpts, no ambient

electronic music is included, which is understandable,

since electronic music encompasses a large number

of different genres.

2.3 Ambient Electronic Music

Considering the GEMS model, ambient electronic

music (which can be considered as “low arousal”)

could be a particularly interesting candidate in the

context of introspection and self-awareness,

compared to other types of electronic music in which

the rhythmic component is predominant, such as

EDM, dance, techno, drum and bass or trance.

Ambient music is a type of minimalist

instrumental electronic music, which is characterized

by a predominance of drone-type sounds, and a

special relevance of the sound textures and the

atmosphere they generate, as opposed to pop, rock or

classical music, where there is a focus on melody,

harmony and rhythms. Sometimes ambient music

also includes nature sounds, along with pad-type

synthesis sounds. Frequently, there is a gradual

exploration of timbre, and notes or chords are

maintained over time, changing their harmonic

content by using filters, and introducing additional

sound layers. The harmonic progression is minimal,

and sound effects such as reverb or delay are
frequently used to contribute to the generation of a
soundscape.

2.4 Poetry and Neural Correlates

As mentioned in the Introduction section, data on the

neural correlates of poetry listening are scarce. Using

fMRI, it has been shown that the caudate nucleus, the

putamen, the mediodorsal thalamus, the nucleus

accumbens and the anterior insula are activated by

listening to recorded poems (Wassiliwizky et al.,

2017).

GRAPP 2025 - 20th International Conference on Computer Graphics Theory and Applications

Another recent study, which examined reading rather
than listening, compared Chinese poetry with prose and
highlighted the importance of the left inferior
orbitofrontal cortex (OFC) and the bilateral insula as
aesthetic pleasure centers, relevant for the appreciation
of poetic beauty (Gao & Guo, 2018).

3 VR PROTOTYPE DESCRIPTION

VR is a powerful tool to elicit and enhance emotions,

since this technology increases the sense of presence

by immersing the user in a three-dimensional

environment. As mentioned before, we are interested

in the neural correlates of emotion and the sense of

self evoked by ambient electronic music and poetry.

Here we present a VR prototype that can be used in
combination with EEG and is specifically designed to
enhance the experience of listening to a particular set
of poems and electronic music (see Appendix).

3.1 Concept and Objectives

The proposed VR experience combines VR,
electronic music and poetry to elicit intense
emotions in the user. Taking advantage of the key
aspects of these forms of expression (sense of
presence, interactivity, immersion and neural
responses to music and poetry), the experience aims
to break the traditional limits of art. The primary
objective is to study the brain response of users to a
sequence of interactive visual and auditory stimuli
specifically designed to induce emotions in them.
The experience constitutes a journey that guides the
users from their inner self to a fragile world of poetry,
finishing with the immersion in a poem related to
death, from the book “Dressed in clay” (Appendix).

3.2 The Narrative of the Experience

The experience unfolds through four interconnected

scenes.

3.2.1 Scene 1. Inner World

Purpose. The purpose of this scene is to focus the users on the experience, letting them forget about the outside world while they get used to the headset and the hand-tracking system.

Design. It has a minimalistic design, so as not to overwhelm the users and to focus their attention on several specific objects, as depicted in Figure 1 (a).

Technical elements. We have added 3D audio (a low-frequency heartbeat), a spatial audio sound area with a strong echo effect, and simple but effective visuals.

Figure 1: Inner world. Teleportation areas (a) let the users reach the magic sphere (b).

The teleportation areas glow blue with a magic ring effect. The main object of the scene is a glowing sphere with particle effects within it, shown in Figure 1 (b). Teleportation and object interaction are driven by hand recognition, eliminating the need for controllers that could interfere with the artistic nature of the experience. The colour palette is black and blue to emphasize the intimate and magical feeling of the scene.

Narrative intention. To prepare users for the upcoming reveal of the clay world of poetry, and for the deeper engagement that follows.

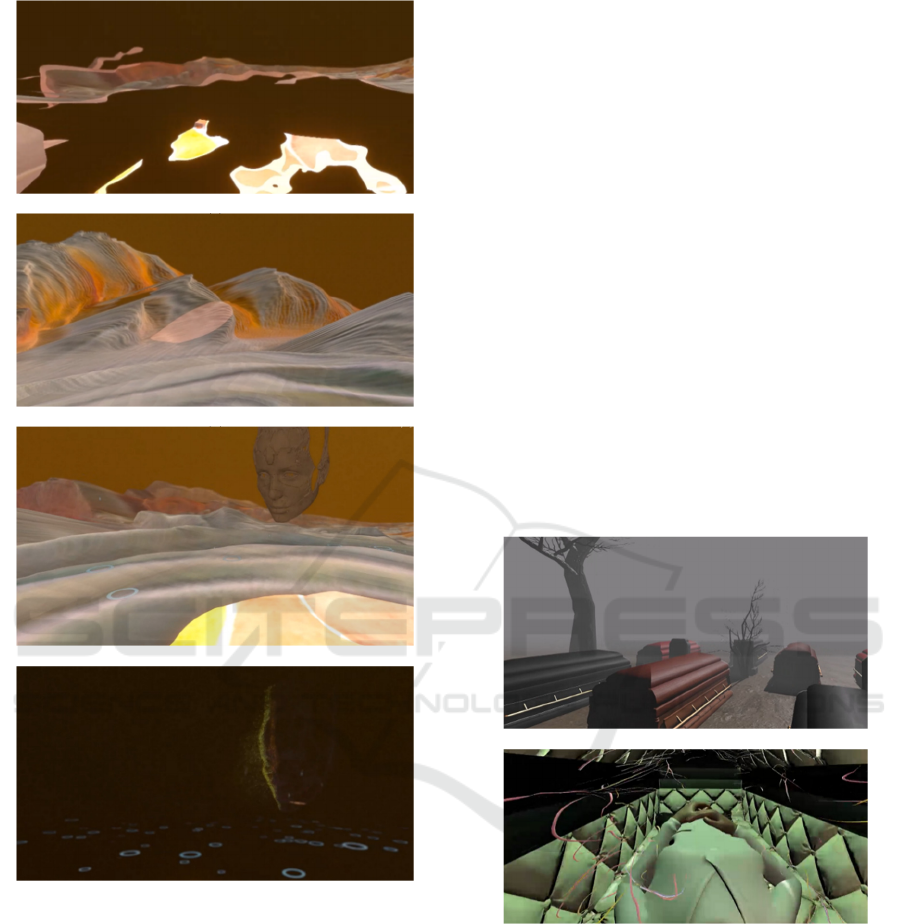

3.2.2 Scene 2. Fragility

Transition. Triggered by the interaction with the glowing sphere. A clay landscape of mountains and craters gradually appears, Figure 2 (a), with synchronized lighting and sky adjustments.

Purpose. To raise the engagement level to its peak before entering the main part of the experience.

Design. A yellow, white and orange landscape of mountains and craters inspired by the book cover, Figure 2 (b).

Technical elements. Several particle systems and visual effect graphs, spatial audio and shader animations. The user can teleport and interact with objects made of clay such as masks, hands or faces, activating the corresponding animation. The environment has been specifically designed to follow the look of the book, hence its orange, yellow and white colour palette.

Narrative intention. The role of this scene in the narrative is to express the book’s central theme, the fragility of the users, as if they were covered with a thin layer of clay, while serving as a bridge between the abstract initial scene and the tangible manifestation of thoughts or dreams.

Figure 2: Fragility. The scenario’s shader allows the environment to appear smoothly (a) like a thin layer. The look of the scene is inspired by the book cover (b). There are several interactive objects made of clay (c), here “Soulless” by Ali Rahimi (Sketchfab, n.d.), that finally vanish with the rain (d).

3.2.3 Scene 3. Wooden Box

Transition. Rain begins to fall, and the objects and the environment subsequently dissolve. The fragile layer of clay disappears, giving way to a new dark environment with a key central object: a coffin. A voice recites a poem while electronic music, specifically composed to intensify the feeling of the poem, is played.

Purpose. This is the main scene, where the emotion induction measurements will take place.

Design. A dark scene with a coffin as the key central object, Figure 3 (a).

Technical elements. Synchronization of lights with music through audio spectrum analysis with the Fast Fourier Transform (this rhythmic flickering allows for the study of potential psychedelic effects), Signed Distance Field effects, Figure 3 (a) and (b), a sound area and hand tracking.

Narrative intention. The elicitation of intense emotions related to the poem’s theme.
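The synchronization of lights with music through spectrum analysis can be illustrated with a short sketch. The prototype itself is built in Unity, so the Python code below is not the authors' implementation; it only shows the general idea of turning the energy of a frequency band, obtained with a Fast Fourier Transform, into a light intensity. The function names, the frequency band, and the `gain`, `smoothing` and `base` parameters are all hypothetical values chosen for this example.

```python
import cmath
import math


def fft(x):
    """Recursive radix-2 Cooley-Tukey FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return [complex(x[0])]
    even = fft(x[0::2])
    odd = fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


def band_energy(samples, sample_rate, f_lo, f_hi):
    """Mean spectral magnitude of the bins between f_lo and f_hi (in Hz)."""
    n = len(samples)
    # A Hann window reduces spectral leakage between neighbouring bins.
    windowed = [
        s * 0.5 * (1.0 - math.cos(2.0 * math.pi * i / (n - 1)))
        for i, s in enumerate(samples)
    ]
    spectrum = fft(windowed)
    bin_hz = sample_rate / n
    mags = [
        abs(spectrum[k]) for k in range(n // 2) if f_lo <= k * bin_hz <= f_hi
    ]
    return sum(mags) / len(mags) if mags else 0.0


def light_intensity(energy, previous, gain=0.05, smoothing=0.8, base=0.2):
    """Map band energy to a light intensity in [0, 1], smoothed over frames.

    gain, smoothing and base are illustrative values, not taken from the paper.
    """
    target = min(1.0, base + gain * energy)
    return smoothing * previous + (1.0 - smoothing) * target


if __name__ == "__main__":
    # Synthetic test signal: a 64 Hz tone sampled at 1024 Hz, 256 samples.
    sample_rate, n = 1024, 256
    tone = [math.sin(2.0 * math.pi * 64 * i / sample_rate) for i in range(n)]
    energy = band_energy(tone, sample_rate, 40, 100)
    print(light_intensity(energy, previous=0.0))
```

In a real-time setting, a function like `band_energy` would be evaluated once per audio frame and the smoothed intensity applied to the scene lights; the smoothing term is one simple way to avoid abrupt jumps between frames.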

3.2.4 Scene 4. End

Transition. The coffin ends up filled with abstract “worms” as the poem ends. The book title appears and a fade to black transports the user back to the mountain landscape.

Purpose. To give the user the option of restarting, quitting the application or taking a survey.

Figure 3: Wooden box. The central element is a coffin (a) that is gradually filled up with abstract lines while lights flicker in synchrony with the music (b).

Design. The scenario is the same as in Scene 2.

Technical elements. UI canvas, Figure 4 (a), and three questionnaires based on the VR software toolkit (VRSTK), Figure 4 (b). Two of them focus on the quality of the experience (QoE) itself, and the third one, designed specifically for this application, evaluates the emotions experienced.

Narrative intention. The conclusion of the

emotional journey.

Figure 4: End. The users return to the landscape of Scene 2, where the application can be exited or repeated (a). Moreover, VRSTK questionnaires can be presented to the user (b).

3.3 Technical Innovation

The presented VR environment enhances user

experience through several technical innovations:

Hand tracking. The experience eliminates the need for traditional controllers, allowing users to interact with their own hands, Figure 5. This approach enhances the sense of presence and immersion by avoiding an intermediate layer between users and application.

Figure 5: The experience uses a hand tracking system

instead of traditional controllers.

OpenXR. The experience is developed with

OpenXR and therefore it is compatible with

many available headsets. This choice

eliminates hardware dependencies.

AI-personalized environments. The use of generative AI has led to a completely personalized experience, thanks to customized environments that align well with the artistic theme of the experience while saving development time.

VRSTK integration. To assess the quality of the experience and the emotional responses elicited, VRSTK has been used, Figure 4 (b). VRSTK is an open-source software toolkit that provides prefabs for Unity to implement questionnaires within VR applications, so that users' evaluations are obtained seamlessly, without breaking their immersion. The QoE questionnaires are the “Simulation Sickness Questionnaire” and the “Uncanny Valley Questionnaire”. Using VRSTK, all questionnaire responses are automatically stored and exported as structured CSV files. This capability allows for systematic post-experience data analysis, supporting reproducibility and facilitating correlations between subjective user feedback and external measures such as EEG signals or physiological responses.

Real-time synchronization of visual effects and

audio. A dynamic synchronization system

adjusts visual effects like particle systems or

lighting in real-time, based on audio stimuli.
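The post-experience analysis of exported questionnaire data mentioned in the VRSTK item could look roughly like the Python sketch below. The paper does not describe the layout of the CSV files, so the column names `participant_id`, `questionnaire` and `score`, the sample rows and the helper names are all hypothetical. The sketch averages the scores per participant and computes a Pearson correlation with an external measure, such as a feature derived from EEG.

```python
import csv
import io
import math
from statistics import mean

# Invented sample data; the column names are assumptions, not VRSTK's format.
SAMPLE_CSV = """participant_id,questionnaire,score
p01,emotion,4
p01,emotion,2
p02,emotion,5
p02,emotion,5
p03,emotion,1
p03,emotion,2
"""


def mean_score_per_participant(csv_text, id_column="participant_id",
                               score_column="score"):
    """Return {participant: mean score} from CSV text with a header row."""
    scores = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        scores.setdefault(row[id_column], []).append(float(row[score_column]))
    return {pid: mean(values) for pid, values in scores.items()}


def pearson(xs, ys):
    """Pearson correlation coefficient between two equally long sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (sx * sy)


if __name__ == "__main__":
    subjective = mean_score_per_participant(SAMPLE_CSV)
    # Hypothetical external measure per participant (e.g. an EEG feature).
    external = {"p01": 0.42, "p02": 0.77, "p03": 0.15}
    ids = sorted(subjective)
    r = pearson([subjective[i] for i in ids], [external[i] for i in ids])
    print(subjective, r)
```

With real data, the participant identifiers would also have to be matched against the EEG recordings, and a correlation over a handful of participants should be read with caution.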

3.4 Tools Used

Unity 6 0.25.f1 (Unity Technologies, n.d.). The main software tool for developing the experience: VR systems, interactivity, synchronization, visual and audio effects, etc.

3ds Max 2025 (Autodesk, n.d.). For object modelling and refining.

Blender 3.1.0 (Blender, n.d.). For scenario modelling and texture image mapping.

DALL·E 3 (DALL·E 3 | OpenAI, n.d.). AI tool used to generate base maps for the creation of realistic and customized materials.

Audacity (Audacity ®). For audio editing, to process sound effects and music to achieve a high level of emotion induction.

Gimp (GIMP, n.d.). For image editing tasks, including base map adjustments or the creation of normal maps.

ZoeDepth (Bhat et al., 2023). To generate the environment from images.

Several objects, effects and sounds have also been obtained from:

Unity Asset Store (Unity Asset Store, n.d.): “3D

Modern Menu UI” by SlimUI, “Coffin Royale”

by @PaulosCreations, “Free Sound Effects

Pack” by Olivier Girardot, “Magic Effects” by

HOVL Studios, “Nature Sound FX” by

Lumino, “PowerUp Particles” by MHLAB and

“Stone Graves” by Sephtis Von Kain. All these

models are under the Standard Unity Asset

Store EULA.

Sketchfab (Sketchfab, n.d.): "Hand animation test" by GabrielNeias and "Soulless" by Ali Rahimi. Both models are licensed under Creative Commons Attribution (http://creativecommons.org/licenses/by/4.0/).

Pixabay (Pixabay, n.d.), Mixamo (Mixamo,

n.d.), Freesound (Freesound, n.d.) and

Polyhaven (Textures • Poly Haven, n.d.).

4 CONCLUSIONS

In this paper, a VR experience that combines poetry and electronic music has been presented. On the one hand, this tool constitutes an innovative way to express poetry and intensify the emotions of the users. On the other hand, it is a technical element that will enable experiments on the mechanisms of emotion induction by electronic music or on the psychedelic effects caused by light flickering. The tool is not designed for any specific headset and does not require controllers, thanks to the hand tracking system. Shader graphs, visual effects, particle systems, and light and audio synchronization work together to make the experience impressive and engaging, with a high level of immersion and sense of presence. The experience is interactive, letting the users interact with objects, trigger transitions or change visual and audio effects. The narrative and visual design has been carefully crafted to add realism and detail to the experience. Another advantage is the possibility of taking questionnaires seamlessly inside the experience, eliminating the effect of environment awareness inherent to traditional surveys. In addition, data from users are stored in real time. Future work will focus on gathering users’ data, including EEG signals, which will be further analysed and processed to find patterns that could help in the search for the mechanisms of emotion induction by electronic music.

ACKNOWLEDGEMENTS

We would like to thank Marina Martínez Barroso for

the critical reading and the advice offered to improve

the translation of the poems used in this work (see

Appendix). This project received financial support

from VII PPIT-US, Universidad de Sevilla. AI-based

tools (Chat GPT, Copilot) were used for grammar and

style editing only in sections 1 and 3.

REFERENCES

Aparicio-Terrés, R., López-Mochales, S., Díaz-Andreu,

M., & Escera, C. (2024). Assessing the relationship

between neural entrainment and altered states of

consciousness induced by electronic music. bioRxiv.

https://doi.org/10.1101/2024.01.16.575849

Audacity ® | Free Audio editor, recorder, music making

and more! (n.d.). Retrieved December 15, 2024, from

https://www.audacityteam.org/

Autodesk. (n.d.). Retrieved January 12, 2025, from

https://www.autodesk.com/es

Bhat, S. F., Birkl, R., Wofk, D., Wonka, P., & Müller, M.

(2023). ZoeDepth: Zero-shot Transfer by Combining

Relative and Metric Depth. https://arxiv.org/abs/2302.12288v1

Blender. (n.d.). Retrieved January 12, 2025, from

https://www.blender.org/

Chirico, A., Cipresso, P., Yaden, D. B., Biassoni, F., Riva,

G., & Gaggioli, A. (2017). Effectiveness of Immersive

Videos in Inducing Awe: An Experimental Study.

Scientific Reports, 7(1), 1218. https://doi.org/10.1038/s41598-017-01242-0

DALL·E 3 | OpenAI. (n.d.). Retrieved December 15, 2024,

from https://openai.com/index/dall-e-3/

Freesound. (n.d.). Retrieved January 12, 2025, from

https://freesound.org/

Gao, C., & Guo, C. (2018). The Experience of Beauty of

Chinese Poetry and Its Neural Substrates. Frontiers in

Psychology, 9, 1540. https://doi.org/10.3389/fpsyg.2018.01540

GIMP. (n.d.). Retrieved December 20, 2024, from

https://www.gimp.org/

Greicius, M. D., Krasnow, B., Reiss, A. L., & Menon, V.

(2003). Functional connectivity in the resting brain: a

network analysis of the default mode hypothesis.

Proceedings of the National Academy of Sciences of the

United States of America, 100(1), 253–258.

https://doi.org/10.1073/pnas.0135058100

Leonard, J., Cadoz, C., Castagne, N., Florens, J. L., &

Luciani, A. (2014). A virtual reality platform for

musical creation: Genesis-RT. In: Aramaki, M.,

Derrien, O., Kronland-Martinet, R., Ystad, S. (eds)

Sound, Music, and Motion. CMMR 2013. Lecture

Notes in Computer Science, vol 8905. Springer, Cham.

https://doi.org/10.1007/978-3-319-12976-1_22

Liu, S., Erkkinen, M. G., Healey, M. L., Xu, Y., Swett, K.

E., Chow, H. M., & Braun, A. R. (2015). Brain activity

and connectivity during poetry composition: Toward

a multidimensional model of the creative process.

Human Brain Mapping, 36(9), 3351–3372.

https://doi.org/10.1002/HBM.22849

MDB. (2024). Empire Soldiers, a VR experience about

WW1. https://www.mbd.limited/mbd-projects/empire-soldiers

Menon, V. (2023). 20 years of the default mode network: A

review and synthesis. Neuron, 111(16), 2469–2487.

https://doi.org/10.1016/j.neuron.2023.04.023

Mixamo. (n.d.). Retrieved January 12, 2025, from

https://www.mixamo.com/#/

Onderdijk, K. E., Bouckaert, L., Van Dyck, E., & Maes, P.

J. (2023). Concert experiences in virtual reality

environments. Virtual Reality, 27(3), 2383–2396.

https://doi.org/10.1007/S10055-023-00814-Y

O’Sullivan, N., Davis, P., Billington, J., Gonzalez-Diaz, V.,

& Corcoran, R. (2015). “Shall I compare thee”: The

neural basis of literary awareness, and its benefits to

cognition. Cortex; a Journal Devoted to the Study of the

Nervous System and Behavior, 73, 144–157.

https://doi.org/10.1016/j.cortex.2015.08.014

Pixabay. (n.d.). Retrieved December 20, 2024, from

https://pixabay.com/

Poetry & Virtual Reality: A Curious Combo. Does it Work?

- YouTube. (n.d.). Retrieved December 14, 2024, from

https://www.youtube.com/watch?v=dcTArj0WAb8

Riva, G., Mantovani, F., Capideville, C. S., Preziosa, A.,

Morganti, F., Villani, D., Gaggioli, A., Botella, C., &

Alcañiz, M. (2007). Affective interactions using virtual

reality: the link between presence and emotions.

Cyberpsychology & Behavior: The Impact of the

Internet, Multimedia and Virtual Reality on Behavior

and Society, 10(1), 45–56. https://doi.org/10.1089/cpb.2006.9993

Russell, J. A. (1980). A circumplex model of affect. Journal

of Personality and Social Psychology, 39(6), 1161–

1178. https://doi.org/10.1037/h0077714

Salimpoor, V. N., Benovoy, M., Larcher, K., Dagher, A., &

Zatorre, R. J. (2011). Anatomically distinct dopamine

release during anticipation and experience of peak

emotion to music. Nature Neuroscience, 14(2), 257–

262. https://doi.org/10.1038/nn.2726

Sansar | Events. (n.d.). Retrieved December 14, 2024, from

https://events.sansar.com/

Scherer, K. R. (2004). Which Emotions Can be Induced by

Music? What Are the Underlying Mechanisms? And

How Can We Measure Them? Journal of New Music

Research. https://doi.org/10.1080/0929821042000317822

Serafin, S., Erkut, C., Kojs, J., Nilsson, N. C., & Nordahl,

R. (2016). Virtual Reality Musical Instruments: State of

the Art, Design Principles, and Future Directions.

Computer Music Journal, 40(3), 22–40.

https://doi.org/10.1162/COMJ_A_00372

Sketchfab. (n.d.). Retrieved January 12, 2025, from

https://sketchfab.com/feed

Strauss, H., Vigl, J., Jacobsen, P.-O., Bayer, M., Talamini,

F., Vigl, W., Zangerle, E., & Zentner, M. (2024). The

Emotion-to-Music Mapping Atlas (EMMA): A

systematically organized online database of

emotionally evocative music excerpts. Behavior

Research Methods. https://doi.org/10.3758/s13428-024-02336-0

Su, Y., Liu, Y., Xiao, Y., Ma, J., & Li, D. (2024). A review

of artificial intelligence methods enabled music-evoked

EEG emotion recognition and their applications.

Frontiers in Neuroscience, 18, 1400444.

https://doi.org/10.3389/fnins.2024.1400444

Taruffi, L., Pehrs, C., Skouras, S., & Koelsch, S. (2017).

Effects of Sad and Happy Music on Mind-Wandering

and the Default Mode Network. Scientific Reports,

7(1), 14396. https://doi.org/10.1038/s41598-017-14849-0

Textures • Poly Haven. (n.d.). Retrieved January 12, 2025,

from https://polyhaven.com/textures

Unity Asset Store. (n.d.). Retrieved January 12, 2025, from

https://assetstore.unity.com/

Trost, W., Ethofer, T., Zentner, M., & Vuilleumier, P.

(2012). Mapping aesthetic musical emotions in the

brain. Cerebral Cortex, 22(12),

2769–2783. https://doi.org/10.1093/cercor/bhr353

Unity Technologies. (n.d.). Retrieved January 12, 2025,

from https://unity.com/

Wassiliwizky, E., Koelsch, S., Wagner, V., Jacobsen, T., &

Menninghaus, W. (2017). The emotional power of

poetry: neural circuitry, psychophysiology and

compositional principles. Social Cognitive and

Affective Neuroscience, 12(8), 1229–1240.

https://doi.org/10.1093/scan/nsx069

Wave | The show must go beyond. (n.d.). Retrieved

December 14, 2024, from https://wavexr.com/

Wilkins, R. W., Hodges, D. A., Laurienti, P. J., Steen, M.,

& Burdette, J. H. (2014). Network Science and the

Effects of Music Preference on Functional Brain

Connectivity: From Beethoven to Eminem. Scientific

Reports, 4(1), 1–8. https://doi.org/10.1038/srep06130

Zentner, M., Grandjean, D., & Scherer, K. R. (2008).

Emotions evoked by the sound of music:

characterization, classification, and measurement.

Emotion, 8(4), 494–521.

https://doi.org/10.1037/1528-3542.8.4.494

Zhang, J., Xu, Z., Zhou, Y., Wang, P., Fu, P., Xu, X., &

Zhang, D. (2021). An Empirical Comparative Study on

the Two Methods of Eliciting Singers’ Emotions in

Singing: Self-Imagination and VR Training. Frontiers

in Neuroscience, 15, 693468. https://doi.org/10.3389/fnins.2021.693468

APPENDIX

Original Poems and Music: Text from “Vestida con

arcilla” (“Dressed in clay”), by María Luz

Montesinos; Talón de Aquiles ed., 2024. ISBN: 978-84-10403-57-4.

Translation (from the original in

Spanish) by María Luz Montesinos; reviewed by

Marina Martínez Barroso. Music from “Poemas de la

caja de madera” (“Poems of the wooden box”), Slow

Project (María Luz Montesinos), ml Records, 2024.

UPC: 198665226245; ISRC: QZTB22449760.

Spotify link: https://open.spotify.com/intl-es/album/5ehF6NEu8yxLn5vjbk1tVC

YouTube link: https://www.youtube.com/watch?v=EiuRTGnofVM


Crown of Flowers

Cut flower

Moss

The scent of jasmine

Bitter

Death carries

Blind eyes

Fluids abandoning the body

The vomit

Bitter

Death carries

The thinning hair

Stained skin

Poison

Bitter

Death carries

The swollen belly

The absent mind

Breath

Bitter

Death carries

The wooden box

The dead woman dressed in death’s clothing

Crown of flowers

Bitter

Death carries

She is there, but she is not

She is there, but she is not

In the wooden box

Box for only one day

So expensive

So ugly

She is there, but it is not her

You cry and you laugh

And you cry

But you laugh too

Because you cannot cry all the time

And because if you laugh you forget she is not here

Even if she is in her wooden box

Have I told you that the box is expensive and ugly?

A very polite man showed it to me

In a catalogue

Among a hundred of other boxes

Very ugly all of them, very expensive also

Just to use it for a while

But the gentleman shows them to you

And he tells you about the quality

About the detail of the cross

About the elegance of the model

(this one sold a lot last year)

It does not matter what he says

Because you are not paying attention

And you do not care about the detail

Or the quality

Or the elegance of the model

Because there she is

But because she is not there

And she is no longer her

You do not want to look at her anymore

You want to remember her as she was

Not the one who is there

Because she is no longer there

Even if she is

In the wooden box

Catalogue

Adult wooden upholstered model

Paskal model

Madrid model

Varnished adult model

Sena eco model

Pádova model

Rainbow model without glass

Lacquered children's model

Luxury model

Párvulo model

Alejandría model, Rómulo, Alicante, 99, metallic

children's, extra-large, round with glass

(two for one)

(they are flying)

Classic and elegant design

Wide range

Ideal

Customisable

Eco-friendly

Sustainable

Adaptable

Fully opening lid

To display the dead woman

The dead woman in her wooden box

(box for only one day, so expensive, so ugly)

The Procession

The procession moves slowly

Between the tombs

Which are like little apartments

Marked

With name and date

So, we know who lives in the little apartment

That no longer lives, because they are dead

GRAPP 2025 - 20th International Conference on Computer Graphics Theory and Applications


(so, we know who dies in the little apartment)

All we hear is

The creak

Of the wooden box

With each step

And some wailing

Feet dragging

Through the gravel

The procession moves slowly

Because the eyes

Are weeping

And we cannot see where we step

Some are torn apart

For a few seconds

Helpless

As they reach the hole

That must be filled

With the wooden box

The hole is beyond description

But the box enters the hole

Pushed

And on the base, the wood is scratched

(the box, that was so ugly and so expensive)

And the wails become silent screams

When the hole is covered

And the man on the ladder

Puts the first scoop

Of plaster

And then another

And another

And another

And another

And then nothing

A-92

I drive down the motorway

For a long while

Down the motorway, you say?

Yes, yes, that seems to be it

And what are you thinking?

Nothing, just nonsense, things of mine:

The astonishment of the astonished

The wickedness of the wicked

The mouse running in its wheel, anaesthetized...

I drive down the motorway and overtake a hearse

Could this be the one to carry your body later?

I do not think so, no one picks up a dead woman unless they have been paid first

I drive down the motorway and an ambulance, siren blaring, overtakes me

Could this, in the end, be the manoeuvre that kills you?

The dead woman in her little wooden box

But it is a music box

A music box, you say?

Yes, yes, that seems to be it

And what are you thinking?

Nothing, I am dead

But the lid of the box opens, and a

waltz plays...
