Exploring Machine Learning and Remote Sensing Techniques for

Mapping Pinus Invasion Beyond Crop Areas

Andrey Naligatski Dias, Maria Eduarda Guedes Pinto Gianisella, Amanda Dos Santos Gonçalves,

Rodrigo Minetto and Mauren Louise Sguario Coelho de Andrade

Universidade Tecnológica Federal do Paraná, Ponta Grossa, Paraná, Brazil

Keywords:

Machine Learning, Classification, Remote Sensing.

Abstract:

Pinus spp., a genus of exotic trees, has been spreading over the years in the Ponta Grossa region and other areas of southern Brazil, making its monitoring necessary. This study proposes to monitor this spread using deep neural networks trained on satellite images from the Campos Gerais region. For this task, three deep neural network models for pixel-by-pixel classification were employed: U-Net, SegNet, and FCN (Fully Convolutional Network). These models were trained on a dataset containing 34 images with a resolution of 2048x2048 pixels, obtained from Google Earth satellite imagery. All images were downloaded using the QuickMapServices extension available in QGIS and labeled using the same program. The results suggest that the U-Net model outperformed the others, achieving 82.49% accuracy, 69.62% Jaccard index, 41.19% recall, and 78.47% precision. The SegNet model showed good accuracy at 82.84%, but underperformed on the Jaccard index at 45.93%, with 58.34% recall and 68.35% precision. The FCN model produced the least reliable results of the three, with 79.37% accuracy, 29.17% Jaccard index, 34.00% recall, and 67.21% precision.

1 INTRODUCTION

Pinus spp. plantations in southern Brazil have introduced an alternative to the deforestation of trees in native reserve areas. Despite the positive economic impacts, the environmental impacts have proven to be concerning, as Pinus is an exotic and highly invasive species (Instituto Água e Terra, 2024). Vila Velha State Park in Paraná, for example, is surrounded by farms with large cultivation areas for this species. As a result, the park, home to around 1376 species of angiosperms and 151 species of pteridophytes (Cervi et al., 2007), has been under threat for over two decades, which has compromised soil fertility and the region's biodiversity.

Monitoring the spread of Pinus within the park’s

boundaries is a challenging task, particularly due to

the lack of manpower, the park’s vast area, and the

presence of wild animals that threaten the safety of

the inspectors. Remote sensing via imagery emerges

as an efficient alternative, providing information on

the location of Pinus plantations and helping gov-

ernments and forest managers effectively manage fo-

rest resources, ecological protection, and timber eco-

nomic planning, as is already being implemented in

China (Li et al., 2020).

Remote sensing image acquisition methods are di-

vided into satellite-based methods (Brandt and Stolle,

2020) and those using remotely piloted aircraft sys-

tems (RPAs) (Nascente and Nunes, 2020). In the first

case, data is freely available from various space agen-

cies and provides images on a global scale (Brandt

and Stolle, 2020), while RPAs require specialized la-

bor for their use.

Monitoring the spread of Pinus spp. must also effi-

ciently identify the species on a large scale. Integra-

ting satellite imagery and machine learning has shown

promising results in detecting green areas, especially

in identifying tree species (Zhang et al., 2023b).

This work tests two hypotheses. First, the deep neural networks U-Net, FCN, and SegNet are effective at classifying Pinus spp. plantations in RGB satellite images, each demonstrating distinct capabilities in pixel-wise segmentation, and are expected to exhibit significantly different performance when evaluated using classification metrics such as precision, recall, accuracy, and the Jaccard coefficient.

Second, the U-Net architecture, due to its design optimized for image segmentation, will outperform the other architectures in identifying areas with the presence of Pinus spp.

Dias, A. N., Gianisella, M. E. G. P., Gonçalves, A. S., Minetto, R. and Coelho de Andrade, M. L. S.

Exploring Machine Learning and Remote Sensing Techniques for Mapping Pinus Invasion Beyond Crop Areas.

DOI: 10.5220/0013383900003912

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 20th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2025) - Volume 3: VISAPP, pages

873-879

ISBN: 978-989-758-728-3; ISSN: 2184-4321

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.


2 BACKGROUND AND RELATED WORK

Brandt and Stolle (2020) proposed the detection of tree canopies outside forests using medium-resolution satellite images. Beloiu et al. (2023) studied the detection of tree species in heterogeneous forests using deep neural network models trained on RGB imagery. More recently, Qin et al. (Qian et al., 2023) studied the detection of dominant tree species in urban areas using the Zhuhai-1 satellite. The work of Ortega Adarme et al. (Aparecido de Almeida et al., 2020) evaluates deep learning techniques for detecting deforestation in the Amazon and Cerrado regions of Brazil. Zhang et al. (2023b) demonstrated the importance of combining satellite sensor data and machine learning algorithms to map the distribution of tree species in urban areas, particularly in the context of reforestation project management and pest infestations. Li et al. (2019) proposed a two-stage convolutional neural network (TS-CNN) for large-scale detection of oil palm trees using high-resolution satellite images in Malaysia, addressing a common challenge in agriculture and environmental monitoring. Zheng et al. (2021) developed a method for detecting coconut tree canopies to identify and count coconut trees in the Tenarunga region, using high-resolution satellite images acquired from Google Earth. This method involves three main procedures: feature extraction, a multi-level Region Proposal Network (RPN), and a large-scale coconut tree detection workflow. Usman et al. (2023) assessed the use of high-resolution images from WorldView-2 (WV-2) for classifying tree species in the agroforestry landscape of the Kano Close Settlement Zone (KCSZ) in northern Nigeria. Their method involved geographic object-based image analysis (GEOBIA) to extract individual tree canopies and remotely identify nine dominant species.

This interest is related to the increase in satellite

images available at no cost, as well as the ease of im-

plementation. In addition to being a powerful tool

for environmental study and preservation, it directly

contributes to natural resource management and the

fight against environmental crimes. To optimize the

use of these images, neural network models have been

widely employed, being trained with satellite data for

efficient application in various fields, such as fire pre-

vention, deforestation, agricultural area mapping, and

soil health monitoring (Handan-Nader et al., 2021).

In this context, this work aims to apply deep learning

techniques to detect the proliferation of the Pinus spp.

tree in various areas of the Ponta Grossa region, where

the species has been hindering the growth of native

vegetation. For this purpose, images obtained from

Google Earth will be labeled to be inserted into three

neural network models: U-Net, SegNet, and FCN,

which will be evaluated based on the chosen metrics.

3 MATERIALS AND METHODS

This section is divided into subsections covering the study area, the tools used, the creation of the dataset, the models employed for training, and the evaluation metrics.

3.1 Study Area Characteristics

Vila Velha State Park covers an area of 3,122.11 ha in the municipality of Ponta Grossa, state of Paraná (25° 14' 09" South latitude and 50° 00' 17" West longitude). Its vegetation, composed mainly of woody grassy savanna and mixed rainforest, is part of the Atlantic Forest biome.

3.2 Utilized Materials

The training of the algorithms was carried out in the

Google Colab PRO environment, which offers the fol-

lowing specifications:

• 53 GB of RAM;

• Nvidia L4 with 22 GB of VRAM;

• 235 GB of storage.

All stages, including training, validation, and tes-

ting, were performed using the Python programming

language, in conjunction with its machine learning

and deep learning libraries, Scikit-Learn, Keras, and

TensorFlow.

3.3 Database Creation

To build the database used in the training, validation, and testing stages, RGB satellite images of specific coordinates in the Ponta Grossa region containing Pinus spp. were collected. The images come from Google Earth satellite imagery, including Landsat data, and were downloaded through the QuickMapServices plugin in QGIS, an open-source tool widely used in cartography that offers several options for labeling and image segmentation (QGIS, n.d.). They

VISAPP 2025 - 20th International Conference on Computer Vision Theory and Applications


were selected based on specific coordinates to cover a larger number of cases, including areas where the planting of Pinus spp. is regulated, such as the environmental reserve of Vila Velha Park. Additionally, images were collected from distinct regions where Pinus spp. has spread naturally, such as along roadsides, with the objective of understanding the behavior of these plantations in relation to surrounding areas. After downloading, the images were divided into tiles with a resolution of 2048x2048 pixels, totaling 34 images. Labeling was then performed in QGIS, as illustrated in Figure 1.

Figure 1: Example of a satellite image and its respective

mask.

As shown in Figure 1, the entire area containing Pinus in the satellite image was labeled in green, while the rest was labeled in gray.
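The tiling and labeling steps can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the exact RGB values of the label colors are not given in the text, so `PINUS_GREEN` and the gray value are hypothetical, as are the helper names `tile_origins` and `to_binary_mask`.

```python
from typing import Iterator, List, Tuple

Pixel = Tuple[int, int, int]

# Hypothetical label color: the text only says "green" for Pinus.
PINUS_GREEN: Pixel = (0, 255, 0)


def tile_origins(width: int, height: int, tile: int = 2048) -> Iterator[Tuple[int, int]]:
    """Yield the top-left (x, y) corner of every full tile inside the image."""
    for y in range(0, height - tile + 1, tile):
        for x in range(0, width - tile + 1, tile):
            yield (x, y)


def to_binary_mask(rgb_mask: List[List[Pixel]]) -> List[List[int]]:
    """Map the colored label image to 1 (Pinus) and 0 (everything else)."""
    return [[1 if px == PINUS_GREEN else 0 for px in row] for row in rgb_mask]


gray: Pixel = (128, 128, 128)  # hypothetical background color
label = [[PINUS_GREEN, gray], [gray, gray]]
print(to_binary_mask(label))           # [[1, 0], [0, 0]]
print(list(tile_origins(4096, 2048)))  # [(0, 0), (2048, 0)]
```

A binary mask of this kind is what a sigmoid output layer is trained against; partial tiles at the image border are simply skipped in this sketch.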

3.4 Algorithms Used

Three image segmentation algorithms were used for

this work: U-Net, SegNet, and FCN. The models

were implemented using the Keras and TensorFlow

libraries.

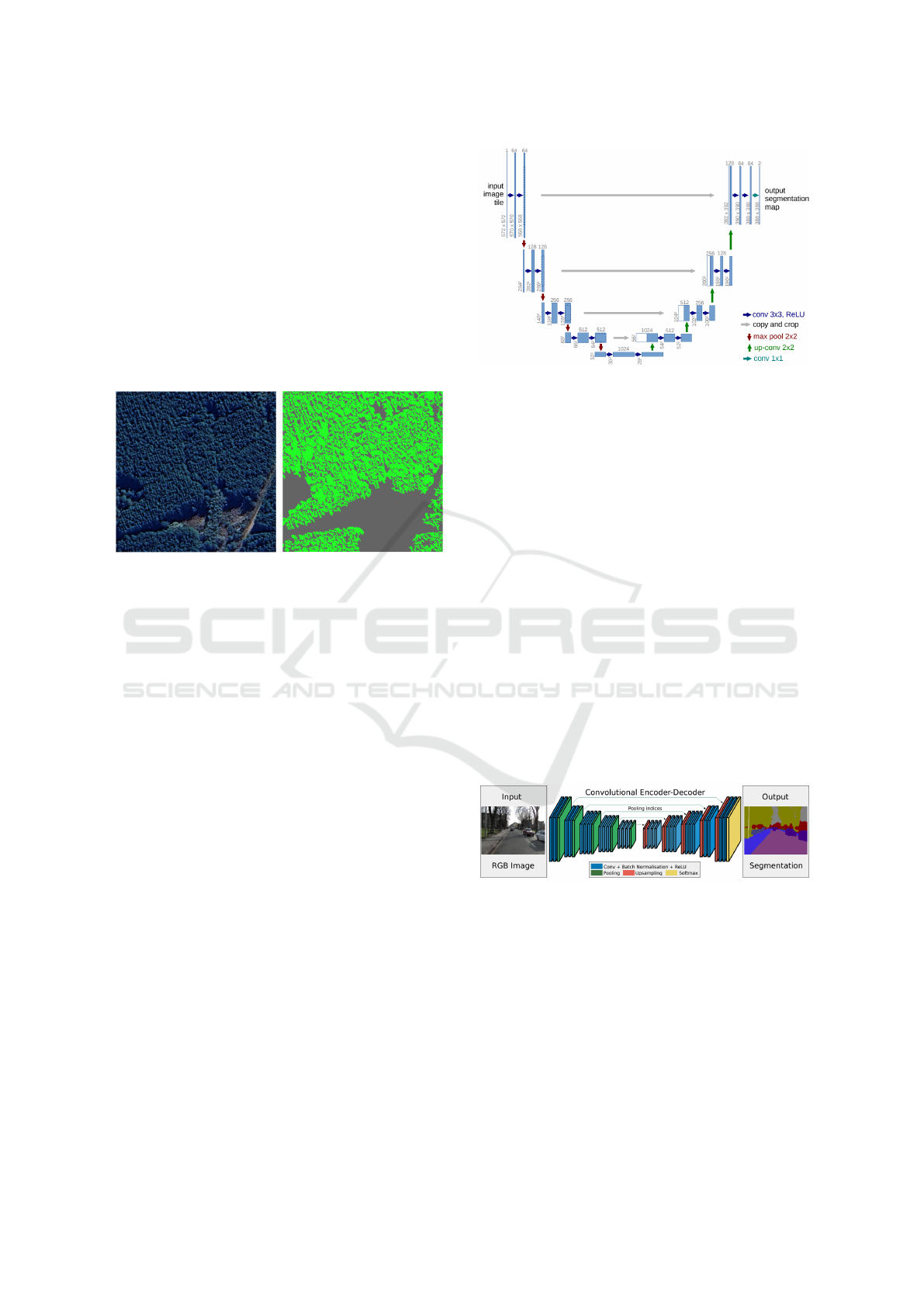

3.4.1 U-Net

The U-Net architecture was chosen because, combined with data augmentation, it can reach strong performance from few annotated images, unlike larger models that require a large amount of data (Ronneberger et al., 2015). Although U-Net is frequently used in medical image segmentation, such as in tumor detection, it can also be adapted for other segmentation domains. Figure 2 illustrates the U-Net architecture as proposed by Ronneberger et al.

Following the same structure as the original model, only a few adaptations were made, such as reducing the number of filters in each layer (the original model ranges from 64 to 1024 filters, while this research used between 16 and 256 filters) due to the reduced size of the dataset. This modification helped save time and computational effort.

Figure 2: Each blue box corresponds to a multi-channel feature map, with its number of channels displayed on top of it.
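To give a rough sense of how much the narrower filter schedule saves, the sketch below counts the convolution parameters of a U-Net-style encoder for both schedules. It assumes two 3x3 convolutions per level, an RGB input, and 2x2 pooling with "same" padding; these details and the helper names are assumptions for illustration, not a description of the exact model used in this work.

```python
def conv_params(c_in: int, c_out: int, k: int = 3) -> int:
    """Weights plus biases of one k x k convolution."""
    return k * k * c_in * c_out + c_out


def encoder_params(filters, in_channels: int = 3) -> int:
    """Parameters of an encoder with two 3x3 convolutions per level."""
    total, c_in = 0, in_channels
    for f in filters:
        total += conv_params(c_in, f) + conv_params(f, f)
        c_in = f
    return total


def encoder_sizes(size: int, levels: int):
    """Feature-map side length per level, halved by 2x2 pooling each time."""
    return [size // (2 ** i) for i in range(levels)]


original = [64, 128, 256, 512, 1024]  # filter schedule of the original U-Net
reduced = [16, 32, 64, 128, 256]      # filter schedule reported in this work

print(encoder_params(original), encoder_params(reduced))
print(encoder_params(original) / encoder_params(reduced))
print(encoder_sizes(2048, len(reduced)))
```

Because the cost of a convolution grows with the product of input and output channels, dividing every filter count by four reduces the encoder parameters by roughly a factor of sixteen under these assumptions.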

3.4.2 SegNet

The second model chosen was SegNet, designed for

pixel-by-pixel semantic segmentation. SegNet is a

trainable segmentation architecture that consists of

an encoding network, a corresponding decoding net-

work, and a pixel-by-pixel classification layer. The

architecture of the encoding network is topologi-

cally identical to the 13 convolutional layers of the

VGG16 network (Simonyan and Zisserman, 2015).

SegNet is a larger model that requires more data to reach its best performance and was primarily developed for scene understanding. It is efficient in terms of memory and computational time, even when compared to other large architectures (Badrinarayanan et al., 2015). Figure 3 shows the original SegNet architecture, as proposed by Badrinarayanan et al.

Figure 3: SegNet Architecture. This network is fully con-

volutional, meaning there are no fully connected layers.

For this research, fewer filters were used in the encoding and decoding layers, starting with 32 filters instead of the 64 used in the original version. Additionally, upsampling with pooling indices was replaced by Conv2DTranspose layers to reduce computational cost. The activation function in the output layer was changed to sigmoid, commonly used in binary classification, whereas the original model uses softmax, typically used in multiclass classification.
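The switch from softmax to sigmoid does not change what the output layer can express for two classes. As a small numerical check (the function names here are illustrative), a sigmoid applied to a single logit z gives the same probability as a two-class softmax over the logits (0, z):

```python
import math


def sigmoid(z: float) -> float:
    """Probability of the positive class from a single logit."""
    return 1.0 / (1.0 + math.exp(-z))


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


for z in (-3.0, 0.0, 2.5):
    p_sigmoid = sigmoid(z)
    p_softmax = softmax([0.0, z])[1]  # second entry is the positive class
    print(z, round(p_sigmoid, 6), round(p_softmax, 6))
```

With a single output channel, the binary case needs one logit per pixel instead of two, which is why sigmoid is the usual choice for a Pinus versus non-Pinus mask.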


3.4.3 FCN

The last model chosen was a Fully Convolutional Network (FCN), designed for pixel-by-pixel segmentation, like SegNet. FCNs stand out for their ability to handle images of any size, producing segmentation maps with the same dimensions as the input, which is crucial for detailed segmentation (Shelhamer et al., 2015). These networks preserve spatial information by replacing fully connected layers with convolutional layers and use upsampling to recover the original resolution of the image. This design allows the FCN to be trained end to end, simplifying the training process and directly optimizing the segmentation task. Figure 4 shows the basic architecture of an FCN.

Figure 4: Example of an FCN architecture. All layers are

convolutional, forming a pixel-by-pixel final prediction.

In the implemented FCN model, the architecture

includes two initial convolutional layers followed by

pooling layers, which reduce the image resolution.

Then, a fully convolutional part reconstructs the ori-

ginal resolution of the image. The use of Conv2D

layers with ReLU activation and UpSampling2D lay-

ers helps create a detailed reconstruction of the ima-

ge’s features. The final Conv2D layer with sigmoid

activation is used to generate the segmentation map,

suitable for binary classification tasks.
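The downsample-then-upsample path described above can be illustrated without any deep learning library. The sketch below mimics 2x2 max pooling and nearest-neighbour upsampling (the default interpolation of Keras UpSampling2D) on a tiny grid; the use of exactly two pooling stages and the helper names are assumptions made for the example.

```python
def max_pool_2x2(grid):
    """2x2 max pooling with stride 2; assumes even height and width."""
    return [
        [max(grid[r][c], grid[r][c + 1], grid[r + 1][c], grid[r + 1][c + 1])
         for c in range(0, len(grid[0]), 2)]
        for r in range(0, len(grid), 2)
    ]


def upsample_2x(grid):
    """Nearest-neighbour upsampling: every value becomes a 2x2 block."""
    out = []
    for row in grid:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


image = [
    [1, 2, 0, 0],
    [3, 4, 0, 1],
    [5, 0, 2, 2],
    [0, 0, 2, 9],
]

pooled = max_pool_2x2(max_pool_2x2(image))   # 4x4 -> 2x2 -> 1x1
restored = upsample_2x(upsample_2x(pooled))  # 1x1 -> 2x2 -> 4x4
print(len(restored), len(restored[0]))       # 4 4
print(restored[0])                           # [9, 9, 9, 9]
```

The output has the input's resolution again, but fine detail lost during pooling is not recovered by upsampling alone, which is one reason the convolutional layers between the upsampling steps matter.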

3.5 Metrics Used

For this project, four evaluation metrics commonly used in classification were chosen: accuracy, Jaccard coefficient, recall, and precision. The confusion matrix of each model was also analyzed. All metrics were implemented using the Scikit-learn library.
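For reference, the four metrics can be written directly in terms of the confusion-matrix counts. The sketch below is a dependency-free illustration for flattened binary masks, not the code used in this work; Scikit-learn offers equivalent functions (`accuracy_score`, `jaccard_score`, `recall_score`, `precision_score`), and the averaging mode used in the experiments is not stated in the text.

```python
def confusion_counts(y_true, y_pred):
    """Return (tp, fp, fn, tn) for binary labels, with 1 = Pinus."""
    tp = fp = fn = tn = 0
    for t, p in zip(y_true, y_pred):
        if t == 1 and p == 1:
            tp += 1
        elif t == 0 and p == 1:
            fp += 1
        elif t == 1 and p == 0:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def binary_metrics(y_true, y_pred):
    """Accuracy, Jaccard, recall and precision for the positive class."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)

    def ratio(num, den):
        return num / den if den else 0.0

    return {
        "accuracy": ratio(tp + tn, tp + fp + fn + tn),
        "jaccard": ratio(tp, tp + fp + fn),
        "recall": ratio(tp, tp + fn),
        "precision": ratio(tp, tp + fp),
    }


y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 0, 0, 0, 0, 0, 0, 1]
print(binary_metrics(y_true, y_pred))
```

In this toy example one of three Pinus pixels is found and one background pixel is wrongly flagged, so accuracy stays at 0.625 while the Jaccard coefficient is only 0.25.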

3.6 Results and Discussion

After training and prediction on the test dataset, the results suggest better overall performance for the U-Net deep neural network compared to the other models.

As seen in Table 1, the accuracy results of the three

models were very close, with the SegNet network

achieving the highest result at 82.84%. Regarding

the Jaccard metric, the discrepancy between the re-

sults became more significant, with the U-Net model

achieving 69.62%, the highest result, followed by

SegNet at 45.93% and FCN at 29.17%, which was the

lowest result. In terms of recall, the results showed

some differences, with SegNet achieving the highest

result at 58.34%, U-Net at 41.19%, and FCN at only

34%, which again was the lowest result. Finally, the

precision results were relatively close, with U-Net

achieving 78.47%, SegNet at 68.35%, and FCN with

the worst result at 67.21%.

Table 1: Model results on the test database.

| Model | Accuracy | Jaccard | Recall | Precision |
|---|---|---|---|---|
| U-Net | 82.49% | 69.62% | 41.19% | 78.47% |
| SegNet | 82.84% | 45.93% | 58.34% | 68.35% |
| FCN | 79.37% | 29.17% | 34.00% | 67.21% |

The following figures present the segmentation of the best prediction from each model. The difference in the Jaccard metric is notable: U-Net achieves the highest similarity to the ground truth. This model is able to disregard a large amount of vegetation that is not Pinus, in contrast to the competing models.

Figure 5: U-Net model segmentation.

Figure 6: SegNet model segmentation.

Figure 7: FCN model segmentation.


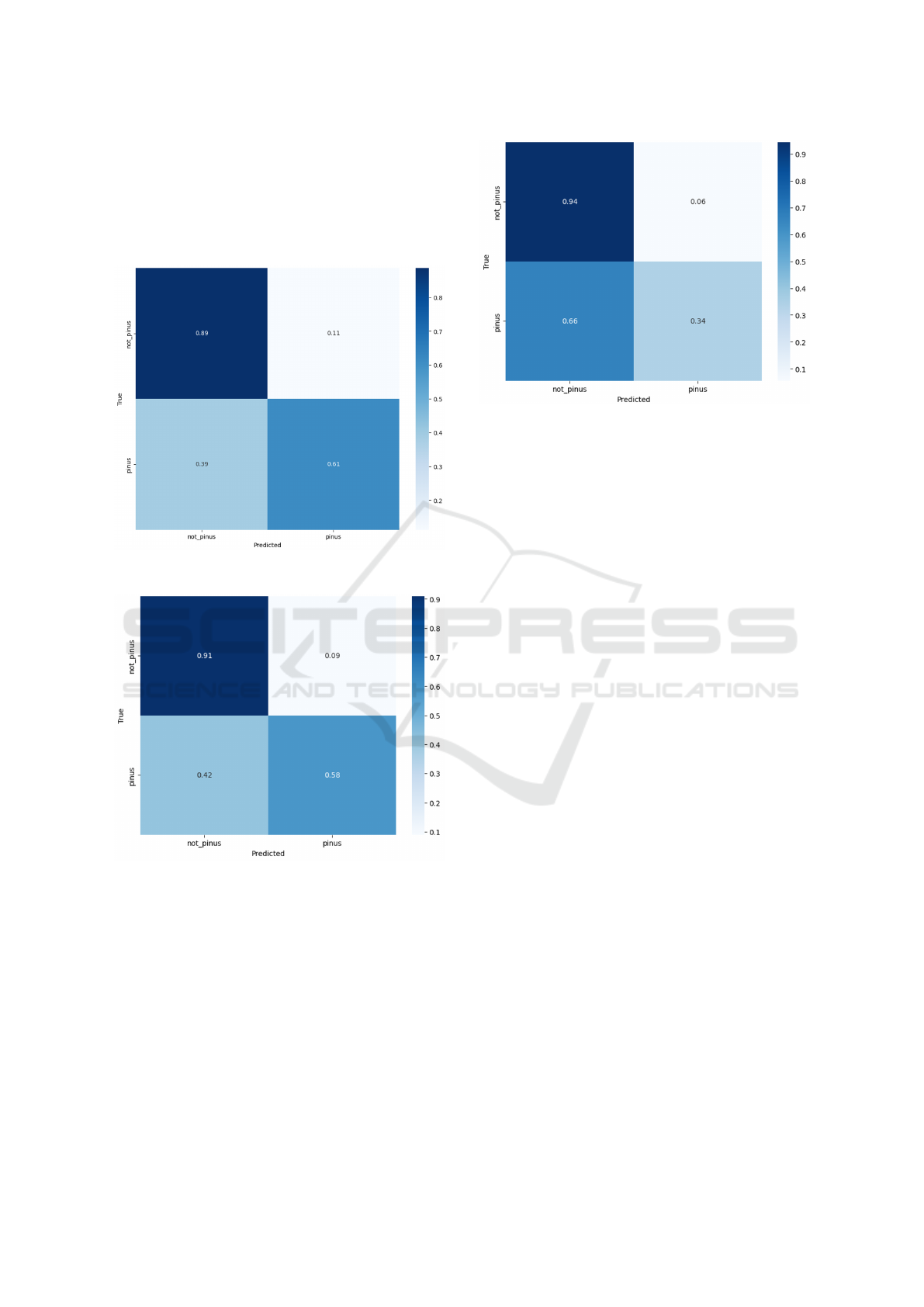

Finally, the following figures show the confusion matrix for each model, where U-Net again outperforms the others. This network correctly predicts 89% of the true negative samples (non-Pinus spp.) and 63% of the true positive samples.

Figure 8: U-Net confusion matrix.

Figure 9: SegNet confusion matrix.
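Percentages like these come from a confusion matrix normalized by row, so that each true class sums to 100%. The sketch below shows the normalization on hypothetical pixel counts chosen only to reproduce the 89% and 63% figures; the actual counts are not reported in the text, and it is an assumption that the plotted matrices are row-normalized.

```python
def normalize_rows(matrix):
    """Divide each row by its sum so every true class sums to 1."""
    normalized = []
    for row in matrix:
        total = sum(row)
        normalized.append([v / total if total else 0.0 for v in row])
    return normalized


# Hypothetical counts, laid out as [[TN, FP], [FN, TP]].
counts = [
    [890, 110],  # true non-Pinus pixels
    [370, 630],  # true Pinus pixels
]

for row in normalize_rows(counts):
    print([round(v, 2) for v in row])
```

In a row-normalized matrix the diagonal holds the fraction of each true class that was predicted correctly.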

Comparing the results obtained by the U-Net model, which showed the best performance, with studies in similar domains reveals notable differences in the choice of datasets and methodologies for each situation. Two such studies are So and Yokota (2024), who mapped the distribution of alien species on river embankments, and Zhang et al. (2023b), who mapped the distribution of trees in the Beijing Plain afforestation project.

Figure 10: FCN confusion matrix.

So and Yokota (2024) used WorldView-3 satellite images fused with drone imagery, demonstrating how data fusion can enhance spatial resolution and mapping accuracy. This method resulted in high overall accuracy (98.39% for Solidago and 97.78% for Sorghum halepense), proving to be an effective approach for river embankment environments.

On the other hand, Zhang et al. (2023b) applied a three-level hierarchical approach, using sources such as Pléiades-1B, WorldView-2, and Sentinel-2 combined with algorithms like SVM and Random Forest. This method achieved varying results depending on forest heterogeneity, with Sentinel-2 being effective for homogeneous forests (overall accuracy, OA, of 89.29%) and WorldView-2 excelling in mixed forests (OA of 90.91%).

The three studies analyzed demonstrate the effec-

tiveness of remote sensing and machine learning al-

gorithms in different contexts. While So and Yokota

emphasize the use of data fusion for high spatial reso-

lution, Zhang et al. explore hierarchical combinations

of data and algorithms. This article aims to highlight

the usefulness of deep neural networks for mapping

specific species.

4 CONCLUSION

The U-Net model demonstrated the best performance

among the three, especially excelling in the metrics

most relevant to this research, where the primary goal

is to correctly classify true positives (Pinus spp.). This

is evident from its confusion matrix, which showed

the highest number of correct predictions. While the

accuracy of all models was satisfactory, it is important

to note that a significant portion of this value is at-

tributed to the classification of the true negative class

(non-Pinus spp.), which is not the focus of this work.


This highlights an important limitation: the dataset used in this study is imbalanced, with the non-Pinus spp. class disproportionately represented. As a result, the models tend to achieve higher precision and accuracy by correctly identifying the dominant class, even if their performance on the minority class (Pinus spp.) remains less robust.
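A toy example makes the effect of imbalance concrete. Assuming, purely for illustration, a mask in which 20% of the pixels are Pinus, a model that never predicts Pinus still reaches 80% accuracy while its Jaccard coefficient for the Pinus class is zero:

```python
def accuracy(y_true, y_pred):
    """Fraction of pixels whose predicted label matches the true label."""
    hits = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return hits / len(y_true)


def jaccard(y_true, y_pred):
    """Intersection over union for the positive (Pinus) class."""
    inter = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    union = sum(1 for t, p in zip(y_true, y_pred) if t == 1 or p == 1)
    return inter / union if union else 0.0


# Hypothetical class balance: 20 Pinus pixels, 80 background pixels.
truth = [1] * 20 + [0] * 80
always_background = [0] * 100

print(accuracy(truth, always_background))  # 0.8
print(jaccard(truth, always_background))   # 0.0
```

This is why the Jaccard coefficient is a more informative indicator than accuracy when the class of interest is the minority.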

That limitation is particularly reflected in the Jac-

card metrics, which provide a more nuanced evalua-

tion of model performance by considering both false

positives and false negatives. Among the models,

only U-Net achieved a superior result in this me-

tric, underscoring its effectiveness in identifying areas

dominated by Pinus spp. despite the dataset’s imba-

lance.

The dataset used in this project is publicly avai-

lable for use, making it a valuable resource for re-

searchers interested in advancing methodologies for

the classification and monitoring of exotic tree species

in similar contexts. For now, the preliminary results

are promising, and in the future, new approaches will

be explored to improve the results.

ACKNOWLEDGMENT

The authors gratefully acknowledge the support provided

by:

REFERENCES

Aparecido de Almeida, C., Nigri Happ, P., Ortega Adarme, M., Queiroz Feitoza, R., and Rodrigues Gomes, A. (2020). Avaliação de técnicas de aprendizagem profunda para detecção de desmatamentos na região da amazônia e cerrados brasileiros. Remote Sensing, 12(6):1024–1038.

Badrinarayanan, V., Kendall, A., and Cipolla, R. (2015). Segnet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Transactions on Pattern Analysis and Machine Intelligence, 39:2481–2495. Available at: https://api.semanticscholar.org/CorpusID:60814714. Accessed on: September 17, 2024.

Beloiu, M., Gessler, A., Griess, V. C., Heinzmann, L., and Rehush, N. (2023). Individual tree-crown detection and species identification in heterogeneous forests using aerial rgb imagery and deep learning. Remote Sensing, 15(5):1463. Available at: https://doi.org/10.3390/rs15051463. Accessed on: September 23, 2024.

Brandt, J. and Stolle, F. (2020). A global method to identify trees outside of closed-canopy forests with medium-resolution satellite imagery. International Journal of Remote Sensing, 42(5):1713–1737. Available at: https://doi.org/10.1080/01431161.2020.1841324. Accessed on: September 23, 2024.

Cervi, A. C., Linsingen, L. v., Hatschbach, G., and Ribas, O. S. (2007). A vegetação do parque estadual de vila velha, município de ponta grossa, paraná, brasil. Boletim do Museu Botânico Municipal, 69:1–52. Available at: https://www.researchgate.net/publication/313642957_A_vegetacao_do_Parque_Estadual_de_Vila_Velha_Municipio_de_Ponta_Grossa_Parana_Brasil.

Handan-Nader, C., Ho, D. E., and Liu, L. Y. (2021).

Environmental Enforcement: Enhancing the Role of

Satellite Imagery and Deep Learning. Stanford Law

School. Publication date: June 7, 2021; Date created:

2021; Date modified: August 10, 2021; December 5,

2022.

Instituto Água e Terra (2024). Estado regulamenta cultivo de pinus e outras plantas exóticas invasoras no paraná. Accessed on December 16, 2024.

Li, J., Pei, Y., Zhao, S., Xiao, R., Sang, X., and Zhang, C.

(2020). A review of remote sensing for environmen-

tal monitoring in china. Remote Sensing, 12(7):1130.

Submission received: 17 February 2020; Revised: 27

March 2020; Accepted: 30 March 2020; Published: 2

April 2020.

Li, W., Dong, R., Fu, H., and Yu, L. (2019). Large-scale oil

palm tree detection from high-resolution satellite im-

ages using two-stage convolutional neural networks.

Remote Sensing, 11(1):11. Submission received: 2

November 2018; Revised: 2 December 2018; Ac-

cepted: 19 December 2018; Published: 20 December

2018.

Nascente, J. C. and Nunes, G. M. (2020). Uso de sistemas de aeronaves remotamente pilotadas (rpas) na quantificação de incremento anual em volume de aterro sanitário. Revista Brasileira de Gestão Ambiental e Sustentabilidade, 7(16):665–678. Published on: August 31, 2020.

QGIS. Qgis geographic information system. Open Source Geospatial Foundation Project. Available at: https://qgis.org. Accessed on: September 17, 2024.

Qian, Y., Qin, H., Wang, W., Xiong, X., Yao, Y., and Zhou,

W. (2023). Detecting dominant tree species in urban

areas using zhuhai-1 satellite imagery. Journal of Ap-

plied Remote Sensing, 17(2):289–300.

Ronneberger, O., Fischer, P., and Brox, T. (2015).

U-net: Convolutional networks for biomedi-

cal image segmentation. ArXiv. Available at:

https://api.semanticscholar.org/CorpusID:3719281.

Accessed on: September 17, 2024.

Shelhamer, E., Long, J., and Darrell, T. (2015). Fully convolutional networks for semantic segmentation. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 3431–3440. Available at: https://api.semanticscholar.org/CorpusID:1629541. Accessed on: September 17, 2024.

Simonyan, K. and Zisserman, A. (2015). Very deep convo-

lutional networks for large-scale image recognition.

So, M. R. and Yokota, S. (2024). Alien species distribution

mapping using satellite and drone fusion on river dikes

along the tone river, japan. Journal of Applied Remote

Sensing, 18(4):044505–044505.

Usman, M., Ejaz, M., Nichol, J. E., Farid, M. S., Abbas,

S., and Khan, M. H. (2023). A comparison of ma-

chine learning models for mapping tree species using

worldview-2 imagery in the agroforestry landscape

of west africa. ISPRS International Journal of Geo-

Information, 12(4):142. Submission received: 25 Jan-

uary 2023; Revised: 19 March 2023; Accepted: 22

March 2023; Published: 25 March 2023.

Zhang, S., Cui, Y., Zhou, Y., Dong, J., Li, W., Liu, B.,

and Dong, J. (2023a). A mapping approach for euca-

lyptus plantations canopy and single-tree using high-

resolution satellite images in liuzhou, china. IEEE

Transactions on Geoscience and Remote Sensing.

Zhang, X., Yu, L., Zhou, Q., Wu, D., Ren, L., and Luo, Y.

(2023b). Detection of tree species in beijing plain af-

forestation project using satellite sensors and machine

learning algorithms. Forests, 14(9):1889. Submis-

sion received: 10 August 2023; Revised: 7 September

2023; Accepted: 15 September 2023; Published: 17

September 2023. This article belongs to the Special

Issue Mapping Forest Vegetation via Remote Sensing

Tools.

Zheng, J., Wu, W., Yu, L., and Fu, H. (2021). Coconut

trees detection on the tenarunga using high-resolution

satellite images and deep learning. In 2021 IEEE In-

ternational Geoscience and Remote Sensing Sympo-

sium IGARSS, pages 6512–6515.
