Usability in Software for People with Disabilities: Systematic Mapping

Luiz Felipe Cirqueira dos Santos [1,a], Edmir Queiroz [2,b], Igor Rafael Eloi dos Santos [2,c], Elisrenan Barbosa da Silva [2,d], Mariano Florencio Mendonça [1,e] and Fabio Gomes Rocha [1,f]

[1] Postgraduate Program in Computer Science - PROCC/UFS, Federal University of Sergipe, Av. Mal. Cândido Rondon, Rosa Elze, 1861, São Cristóvão, Brazil

[2] IT Courses, Estácio University Center, R. Teixeira de Freitas, Salgado Filho, 10, Aracaju, Brazil

Keywords:

Usability, Accessibility, Analysis Tools, Digital Inclusion, Inclusive Interfaces.

Abstract:

This study presents a systematic mapping of usability analysis tools focused on accessibility, aiming to

identify technologies, methods, and challenges related to improving inclusive interfaces. Tools such as

DUXAIT-NG, Guideliner, and MUSE were analyzed, standing out for integrating automated evaluations

and specific adaptations. However, they exhibited technical limitations in customization and application to

different contexts and types of disabilities. The results demonstrated the positive impact of these tools on the

development of accessible software while also highlighting research gaps, such as the lack of empirical studies

and the absence of real-time dynamic analyses. Based on this analysis, the study contributes by organizing and

systematizing knowledge on accessibility tools, identifying research gaps that emphasize the need for greater

flexibility in solutions and validations, and suggesting technological and methodological advancements. It

reinforces the importance of expanding research to other databases and developing more robust and dynamic

tools.

1 INTRODUCTION

With the growing popularity of mobile devices, the

alignment between web applications and usability

guidelines has become one of the key factors for

user satisfaction and the success of an application

(Marenkov et al., 2018).

However, manual usability evaluation is

time-consuming and resource-intensive, making

automated evaluation a promising alternative to

overcome these limitations (Marenkov et al., 2018).

Evaluating user satisfaction with user interfaces (UIs)

presents additional challenges due to the dynamic

nature of UIs and the constant movement within

the usage context (Yigitbas et al., 2019). These

challenges are even more evident in mobile devices,

where developers have focused on creating interfaces

that are intuitive and easy to use (Bessghaier et al., 2021).

a: https://orcid.org/0000-0003-4538-5410

b: https://orcid.org/0009-0004-6930-3031

c: https://orcid.org/0009-0000-9106-2896

d: https://orcid.org/0000-0001-8890-9718

e: https://orcid.org/0000-0003-0732-3980

f: https://orcid.org/0000-0002-0512-5406

Achieving these qualities requires an iterative

process of evaluating mobile interfaces and

eliminating structural flaws that could compromise

visual and functional consistency—crucial factors for

the user experience.

At the same time, technological advancements

have driven the development of smart cities, which

demand the creation of applications that meet

rigorous usability criteria, such as the ten usability

heuristics and the principles of usability analysis

(Adinda and Suzianti, 2018). This scenario

underscores the importance of continually evaluating

software usability to ensure its effectiveness and

accessibility, particularly for people with disabilities.

In this context, the present study systematically

maps the literature to identify and evaluate usability

analysis tools focused on software accessibility. To

this end, articles in the selected databases were

analyzed, providing a broad understanding of the

available tools and methods.

The remainder of this article is structured as

follows: Section 2 provides the theoretical context,

addressing concepts of usability and accessibility in software. Section 3 presents related work. Section 4 details the methodology used. Section 5 describes the results, while Section 6 discusses the analyzed issues. Finally, Section 7 presents the conclusions of this study.

Santos, L. F. C., Queiroz, E., Santos, I. R. E., Barbosa da Silva, E., Mendonça, M. F. and Rocha, F. G.

Usability in Software for People with Disabilities: Systematic Mapping.

DOI: 10.5220/0013393600003929

In Proceedings of the 27th International Conference on Enterprise Information Systems (ICEIS 2025) - Volume 2, pages 597-604

ISBN: 978-989-758-749-8; ISSN: 2184-4992

Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)

2 THEORETICAL FRAMEWORK

Including all students in education, particularly in

higher education, has become a global concern, with

efforts focused on meeting the learning needs of

students with disabilities (Ndlovu, 2021). In this

context, assistive technologies (AT) play a crucial

role in providing academic, social, and physical

support to promote the well-being and independence

of these students (McNicholl et al., 2021). AT

encompasses devices such as iPods, iPads, computers,

and software that help overcome barriers imposed by

the environment or the disability itself (McNicholl

et al., 2021).

Providing AT in higher education is a fundamental

strategy to equalize learning opportunities for

students with and without disabilities. While all

students at this level meet the required academic

standards, specific limitations can impact the

performance of students with disabilities due to

environmental or individual factors (Ndlovu, 2021).

Equipping these students with appropriate devices

and technologies helps eliminate barriers and create

a more inclusive and accessible learning environment

(Mji et al., 2009; Tony, 2019; Lyner-Cleophas, 2019;

Alnahdi, 2014).

In this scenario, mobile learning emerges as

an opportunity to make the educational process

more flexible, allowing students to learn anytime

and anywhere (Kumar and Mohite, 2018). Mobile

learning provides adaptable and collaborative

environments, extending teaching possibilities

beyond the traditional classroom (Nedungadi and

Raman, 2012). However, the design of mobile

educational applications faces significant challenges,

such as adapting to small screens, input method

limitations, and the ever-changing context of use

(Kumar and Mohite, 2018). These factors highlight

the importance of usability testing to ensure that

applications are practical, effective, and easy to use

(Harrison et al., 2013).

The usability of mobile applications, especially

those designed for learning, is an emerging and

essential area in human-computer interaction (HCI).

Considered a determining factor for technological

success and adoption, usability is associated with

ease of use, perceived utility, and a satisfying

user experience (Nielsen, 1994; Cole et al., 2008).

Despite its importance, few studies systematically evaluate the usability of mobile educational applications, which represents a critical gap in research and development (Zhang and Adipat, 2005).

Finally, the rapid technological evolution and the

introduction of more sophisticated mobile devices

continue to challenge developers to create interfaces

that meet user expectations while overcoming

technical limitations. Usability studies are an indispensable tool to ensure that mobile learning applications not only achieve a high level of user satisfaction but also contribute to a more inclusive and accessible education (Kumar and Mohite, 2016).

3 RELATED WORK

Falconi et al. (2023) present DUXAIT-NG, an integrated usability evaluation tool that combines heuristic evaluation methods and tree testing. The goal is to automate the usability evaluation process, allowing evaluators of different experience levels to visualize the evaluation process and its associated tasks. DUXAIT-NG was developed in response to the need for tools that support standardized usability evaluations, especially in contexts where conducting in-person tests is challenging, such as during the COVID-19 pandemic. The paper also discusses the importance of conducting case studies in different domains to validate the tool, and the authors plan to expand its functionality to include other types of usability evaluations.

Paternò et al. (2016) present a

system based on timelines for visualizing interactive

events, which is used in a usability evaluation tool

for mobile web applications. The proposal is to

collect user interaction data while performing tasks

in web applications, allowing usability experts to

analyze this data to identify problems. The system

is designed to record various interaction events, such

as taps and gestures, and offers visualizations that

help compare the actual user behavior with an ideal

behavior. The paper concludes that future improvements in visualization and data analysis are needed to better support the identification of usability issues.

Vasconcelos and Baldochi (de Vasconcelos and

Baldochi Jr, 2012) discuss the USABILICS system,

which aims to facilitate the usability evaluation of

web applications through an automated approach.

The main focus of the system is the analysis of

user interactions related to defined tasks, allowing

the identification of incorrect actions during task

execution. The paper also reports experiments


conducted with two web applications, an e-learning

system, and a technology article publishing website,

where several tasks were monitored. The results

showed that usability scores significantly improved

after implementing recommendations based on

USABILICS analyses, and incorrect actions were

reduced.
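The idea of comparing logged interactions against a defined task can be sketched as follows. This is an illustrative simplification and not the USABILICS algorithm: the event names, the `find_incorrect_actions` helper, and the rule that any event not matching the next expected step counts as incorrect are all assumptions made for the example.

```python
from typing import List, Tuple


def find_incorrect_actions(expected: List[str], observed: List[str]) -> Tuple[List[str], bool]:
    """Return the observed events that deviate from the expected task path,
    plus a flag telling whether the whole task was completed.

    Assumption: an observed event is "correct" only if it equals the next
    not-yet-performed step of the expected path; anything else is a deviation.
    """
    incorrect: List[str] = []
    next_step = 0  # index of the next expected step
    for event in observed:
        if next_step < len(expected) and event == expected[next_step]:
            next_step += 1
        else:
            incorrect.append(event)
    return incorrect, next_step == len(expected)


# Hypothetical task definition and interaction log, for illustration only.
expected_path = ["open_login", "type_user", "type_password", "click_submit"]
observed_log = ["open_login", "click_help", "type_user", "type_password", "click_submit"]

incorrect, completed = find_incorrect_actions(expected_path, observed_log)
print(incorrect, completed)
```

A real tool would likely tolerate alternative valid paths and weigh deviations differently; the strict step-by-step match is used here only to keep the sketch short.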

Zhang et al. (2009) investigate the

usability of three digital libraries: ACM Digital

Library, IEEE Computer Society Digital Library, and

IEEE Xplore. The research was conducted with 36

participants who performed search and navigation

tasks. The results revealed several difficulties users

face, especially those with less experience. The study

used objective and subjective measures to assess

usability, including the number of queries, search

time, and user satisfaction. The authors discuss the

study’s limitations and suggest that future research

should include a more diverse sample and a wider

range of tasks to validate the results.

Marsh (1999) analyzes the complexity of usability evaluation in virtual reality (VR), highlighting the inadequacy of traditional 2D interface methods for 3D environments. The author explores the definition of VR, its differences from GUIs, and the challenges in usability evaluation. He emphasizes the need to develop new evaluation methodologies that consider the user experience within the virtual environment and are adapted to the specific needs of VR, and he suggests future research directions.

These studies show how automated and innovative methods are essential for enhancing the user experience and facilitating usability evaluation, especially in specific environments such as VR and digital libraries, while promoting inclusion and accessibility.

4 METHODOLOGY

The goal of this article was to characterize, in a

structured manner, an initial perspective on tools

that perform usability analysis of software, with a

special focus on accessibility. A systematic mapping

was chosen as the research instrument to achieve

this. A systematic mapping is a review of a specific

topic or area, which allows the identification of

various approaches and their associated challenges

(Velásquez-Durán and Ramírez Montoya, 2018; Keele et al., 2007). The systematic mapping in this article follows the guidelines of Kitchenham and Charters (Petersen et al., 2008), divided into three phases: planning, execution, and communication of results. We defined the research topic, the methodology to be applied, and the databases in which the articles were searched. Research questions were prepared to be answered through this study, and the search string related to the theme was applied using a tool called Parsifal.

A detailed protocol for article classification was

followed to ensure the study’s transparency and

reproducibility. This protocol included the following

steps:

• Definition of Inclusion and Exclusion Criteria

• Selection of Articles

• Classification and Analysis of Articles

• Definition of Research Questions

• Number of Publications per Year

In the definition of inclusion criteria, articles that

addressed, even partially, usability tools for software

and studies that included usability analysis for people

with disabilities were included. As for the exclusion

criteria, we removed duplicate articles, studies that

did not evaluate software or assistive technologies,

studies that did not focus on usability analysis, and

publications that did not address accessibility or tools

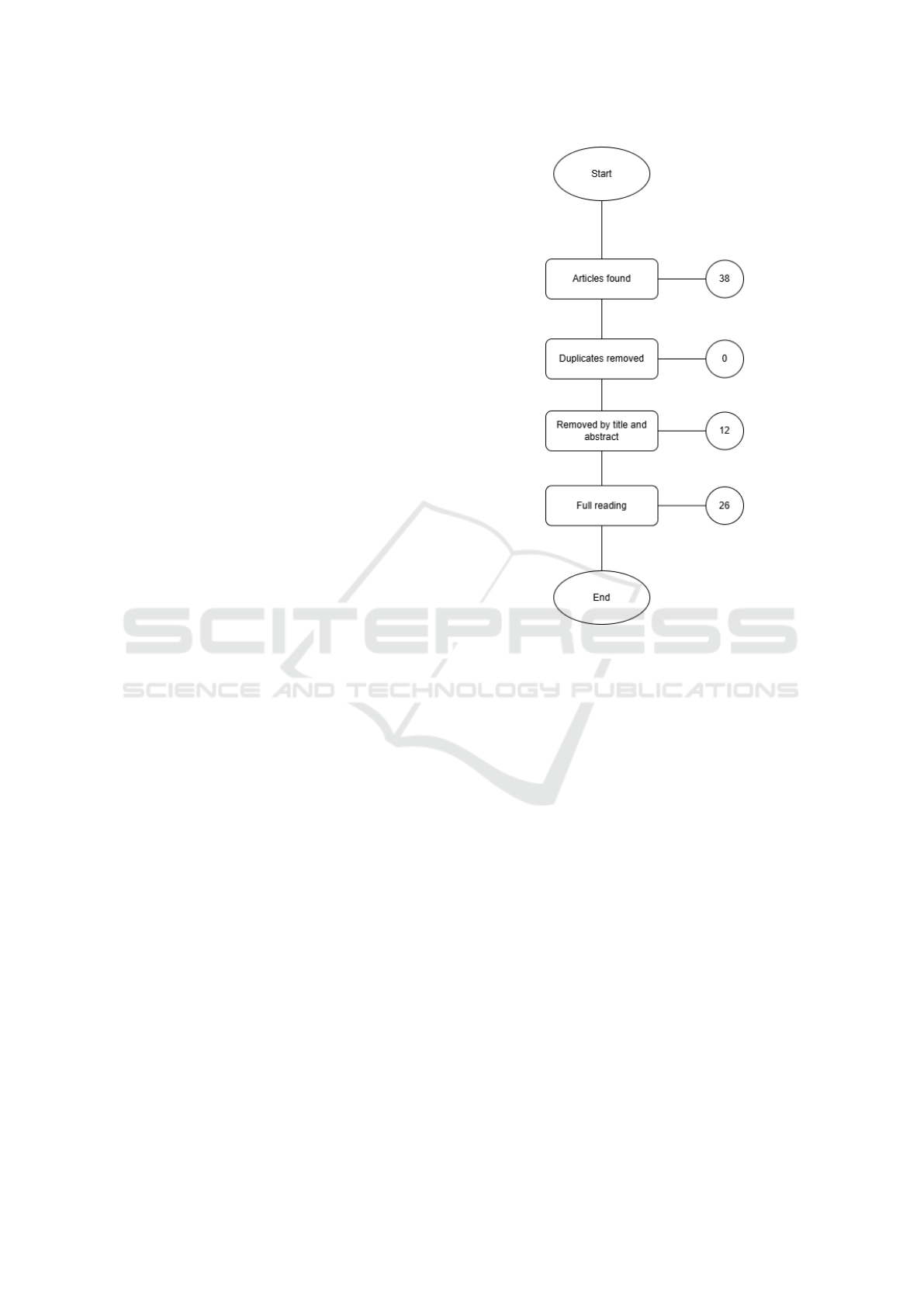

aimed at people with disabilities, as shown in Table 1.

Table 1: Inclusion and Exclusion Criteria.

| Criterion | Description |
| --- | --- |
| Inclusion | Articles that address, even partially, usability tools for software |
| Exclusion | Duplicate articles |
| Exclusion | Studies that do not evaluate software or assistive technology |
| Exclusion | Studies that do not directly address usability analysis |
| Exclusion | Publications that do not address accessibility or tools aimed at people with disabilities |

We conducted the search using the terms ("usability analysis tools" OR "usability evaluation tools" OR "usability testing software" OR "software usability assessment" OR "usability metrics software") AND ("software usability evaluation" OR "user experience analysis" OR "UX analysis" OR "human-computer interaction" OR "HCI" OR "interface evaluation") AND ("tools" OR "frameworks" OR "programs" OR "systems") AND ("evaluation methods" OR "heuristic evaluation" OR "usability metrics" OR "task analysis" OR "cognitive walkthrough" OR "eye-tracking" OR "heatmaps"), with no period restriction, in the ACM and IEEE databases on September 11, 2024. The selected databases are hybrid databases, search engines, or bibliometric databases that are widely used in studies because they provide good coverage for systematic reviews (Kitchenham and Brereton, 2013). A total of 38 articles were identified that met, even partially, the inclusion criteria listed in Table 1.
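To make the structure of the search string easier to reuse, the sketch below rebuilds it from its four term groups: terms are joined with OR inside a group, and the groups are joined with AND. The term lists are copied from the string reported above; the names `TERM_GROUPS` and `build_query` are illustrative, and the exact syntax accepted by each database's search engine may differ.

```python
from typing import List

# Term groups copied from the search string reported in the text.
TERM_GROUPS: List[List[str]] = [
    ["usability analysis tools", "usability evaluation tools",
     "usability testing software", "software usability assessment",
     "usability metrics software"],
    ["software usability evaluation", "user experience analysis", "UX analysis",
     "human-computer interaction", "HCI", "interface evaluation"],
    ["tools", "frameworks", "programs", "systems"],
    ["evaluation methods", "heuristic evaluation", "usability metrics",
     "task analysis", "cognitive walkthrough", "eye-tracking", "heatmaps"],
]


def build_query(groups: List[List[str]]) -> str:
    """OR the quoted terms within each group, then AND the groups together."""
    clauses = ["(" + " OR ".join(f'"{term}"' for term in group) + ")" for group in groups]
    return " AND ".join(clauses)


query = build_query(TERM_GROUPS)
print(query)
```

Keeping the groups as data makes it straightforward to add or remove a term and regenerate the string when the search is repeated on another database.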

We verified that there were no duplicate articles across the databases searched. Of the 38 articles, 26 were considered relevant to the research, as their titles and abstracts addressed the inclusion criteria, while 12 met the exclusion criteria, as shown in Figure 1. As part of the study stages, we read the articles that passed the inclusion and exclusion criteria to find answers to the research questions. Among them, 13 provided answers to the structured questions, which can assist in future research and in evaluating the research process (Kitchenham and Brereton, 2013), as described in Table 2.
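The selection funnel can be checked with a few lines of arithmetic. The counts below are the ones reported in the text; the variable names and the `share` helper are illustrative only.

```python
# Counts taken from the text; names are illustrative.
identified = 38            # articles returned by the search
included_by_abstract = 26  # considered relevant after title/abstract screening
excluded = 12              # met at least one exclusion criterion
answered_rqs = 13          # articles that answered the research questions

# The screening step should account for every identified article.
assert included_by_abstract + excluded == identified


def share(part: int, whole: int) -> float:
    """Percentage of `whole` represented by `part`, rounded to one decimal."""
    return round(100 * part / whole, 1)


print(share(included_by_abstract, identified))  # share kept after screening
print(share(answered_rqs, included_by_abstract))  # share of kept articles answering the RQs
```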

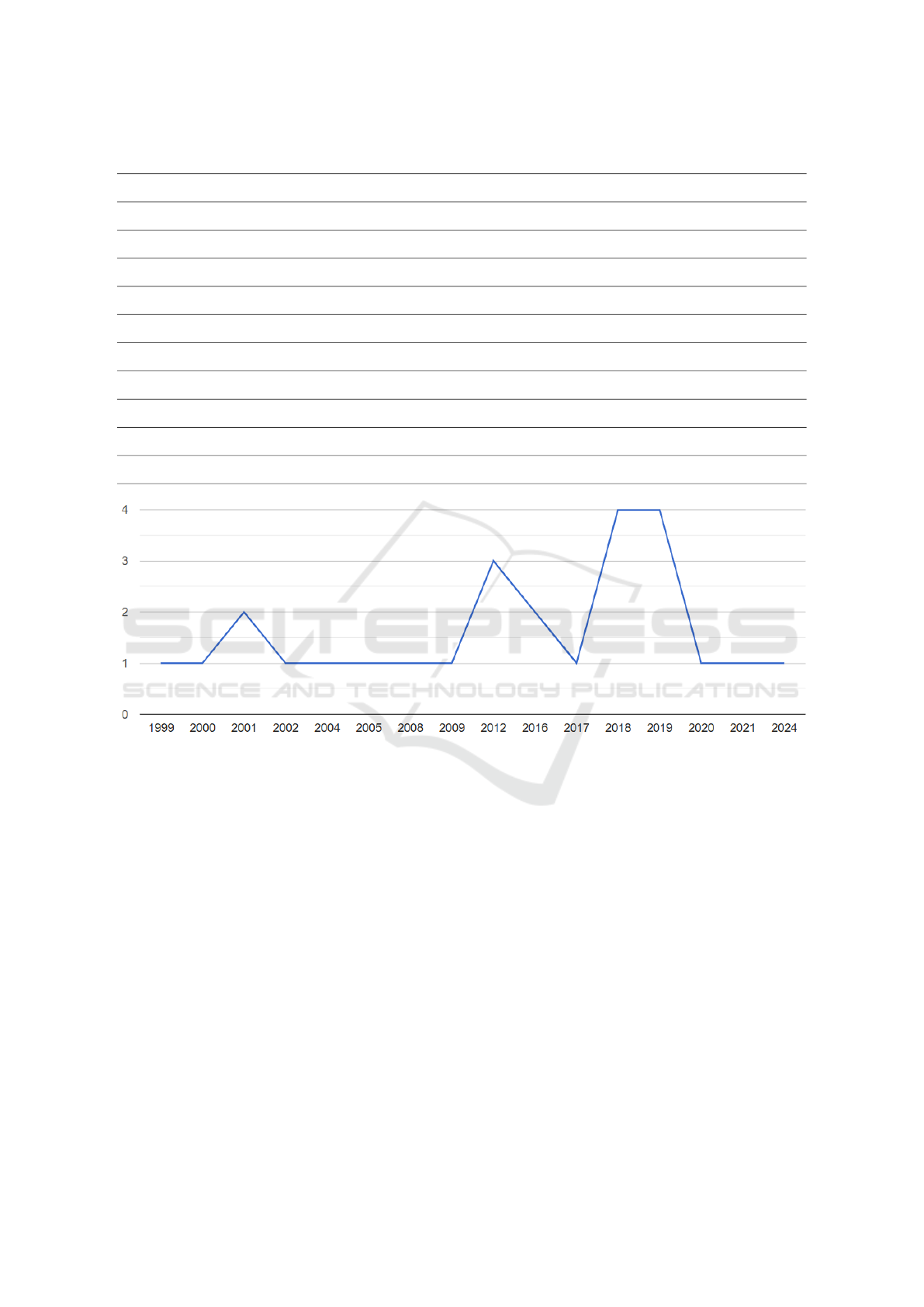

Figure 2 shows the number of publications per year in the searched databases. Interest in the topic has fluctuated considerably, with both increases and decreases in the number of publications over time.

5 RESULTS

In this section, we present the results of our

systematic mapping. We divided our findings into

subtopics to facilitate understanding and visualization

of the information. In each subtopic, we will show the

answers to the specific questions.

5.1 RQ1. What Tools Are Available for

Software Usability Analysis?

Several software usability analysis tools exist, such as DUXAIT-NG, MOBILICS, and Guideliner. DUXAIT-NG is a heuristic evaluation tool that inspects user interfaces, focusing on user experience (Falconi et al., 2023). MOBILICS (Gonçalves et al., 2016), which focuses on mobile interface analysis, helps evaluate software on mobile devices. Guideliner, in turn, automates the verification of UI compliance with usability guidelines for elements such as links, images, and buttons (Marenkov et al., 2018).
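To illustrate what automated guideline verification over links, images, and buttons can look like, the sketch below parses an HTML fragment with Python's standard `html.parser` module and reports violations of three simple rules. The rules, the `GuidelineChecker` class, and the `check` helper are assumptions chosen for the example; they are not Guideliner's rule set or implementation.

```python
from html.parser import HTMLParser
from typing import List, Optional, Tuple


class GuidelineChecker(HTMLParser):
    """Collects violations of three illustrative rules (assumptions):
    images need non-empty alt text, links need an href, buttons need visible text.
    Simplification: decorative images may legitimately use an empty alt."""

    def __init__(self) -> None:
        super().__init__()
        self.violations: List[str] = []
        self._button_text: Optional[str] = None  # text seen inside the open <button>

    def handle_starttag(self, tag: str, attrs: List[Tuple[str, Optional[str]]]) -> None:
        attributes = dict(attrs)
        if tag == "img" and not (attributes.get("alt") or "").strip():
            self.violations.append("img without alt text")
        elif tag == "a" and not attributes.get("href"):
            self.violations.append("link without href")
        elif tag == "button":
            self._button_text = ""

    def handle_data(self, data: str) -> None:
        if self._button_text is not None:
            self._button_text += data

    def handle_endtag(self, tag: str) -> None:
        if tag == "button" and self._button_text is not None:
            if not self._button_text.strip():
                self.violations.append("button without text")
            self._button_text = None


def check(html: str) -> List[str]:
    """Run the checker over an HTML string and return the violations found."""
    checker = GuidelineChecker()
    checker.feed(html)
    checker.close()
    return checker.violations


sample = '<a href="/home">Home</a><img src="logo.png"><button></button><button>Send</button>'
print(check(sample))
```

Static checks of this kind only cover what is visible in the markup; guidelines that depend on rendering or user behavior need other techniques.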

Figure 1: Selection of The Articles.

5.2 RQ2. What Usability Tools

Specifically Focus on Evaluating

Software Accessible to People with

Disabilities?

Tools such as Google's Mobile Friendly Test Tool and Bing Mobile Friendliness Test focus on evaluating usability on mobile devices, considering access on mobile platforms (Paternò et al., 2017). Guideliner (Marenkov et al., 2018) includes 13 guidelines aimed at making the interface accessible, addressing issues such as contrast and navigation on mobile devices.
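The sources reviewed here do not describe how contrast is computed by any of the tools. As one illustration of an automated contrast check, the sketch below uses the contrast-ratio formula defined in WCAG 2.x, which is an assumption brought in for the example rather than a documented part of Guideliner. The function names are illustrative, and 4.5:1 is the WCAG AA threshold for normal-size text.

```python
from typing import Tuple

RGB = Tuple[int, int, int]  # 8-bit sRGB channels, 0..255


def relative_luminance(rgb: RGB) -> float:
    """Relative luminance of an sRGB color as defined in WCAG 2.x."""
    def linearize(channel: int) -> float:
        c = channel / 255
        return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4

    r, g, b = (linearize(value) for value in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(foreground: RGB, background: RGB) -> float:
    """Contrast ratio (lighter + 0.05) / (darker + 0.05); ranges from 1 to 21."""
    lum_a = relative_luminance(foreground)
    lum_b = relative_luminance(background)
    lighter, darker = max(lum_a, lum_b), min(lum_a, lum_b)
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa_normal_text(foreground: RGB, background: RGB) -> bool:
    """True if the pair meets the WCAG AA minimum of 4.5:1 for normal text."""
    return contrast_ratio(foreground, background) >= 4.5


black, white, light_gray = (0, 0, 0), (255, 255, 255), (200, 200, 200)
print(passes_aa_normal_text(black, white))
print(passes_aa_normal_text(light_gray, white))
```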

5.3 RQ3. How Do Usability Analysis

Tools Address Different Types of

Disabilities (Visual, Auditory,

Motor, Cognitive)?

Usability analysis tools adapt to handle different disabilities. Guideliner, for example, includes 16 guidelines to ensure accessibility for visual and auditory disabilities, as well as adjustments for mobile interfaces (Marenkov et al., 2018). DUXAIT-NG focuses on improving software interfaces to ensure inclusion (Falconi et al., 2023), and other tools like MUSE (Paternò et al., 2017) offer recommendations and perform heuristic evaluations focusing on cognitive and motor needs.

Table 2: Research Questions and Justifications.

| Question Number | Question Text | Justification |
| --- | --- | --- |
| Q1 | What tools are available for software usability analysis? | Identify the main tools used and their features, providing a broad view of the current state of available technologies. |
| Q2 | What usability tools specifically focus on evaluating software accessible to people with disabilities? | Investigate the tools that meet accessibility needs, promoting the development of more inclusive solutions. |
| Q3 | How do usability analysis tools address different types of disabilities (visual, auditory, motor, cognitive)? | Understand how different needs are addressed by the tools to ensure that all disabilities are adequately considered. |
| Q4 | What are the most frequently used usability evaluation methods in accessible software? | Identify the most effective methods for evaluating accessibility and guiding best development practices. |
| Q5 | How are usability tools evaluated in terms of their effectiveness in identifying accessibility barriers? | Evaluate the performance of tools in identifying barriers, ensuring they meet accessibility goals. |
| Q6 | Is there a correlation between the type of disability and the preference for certain usability tools? | Explore user preferences to adapt tools to their specific needs and improve their acceptance. |
| Q7 | How do usability analysis tools handle accessible mobile and web interfaces? | Verify the adequacy of the tools to modern technologies and their impact on the usability of mobile and web interfaces. |
| Q8 | What challenges are faced when adapting usability tools for users with disabilities? | Identify the main obstacles in adapting tools to better meet users' needs. |
| Q9 | What is the impact of usability analysis tools on developing more inclusive software? | Examine how these tools contribute to a more inclusive design aligned with accessibility needs. |
| Q10 | What are the gaps in research on usability tools focused on accessibility? | Highlight underexplored areas to guide future research and innovations in the field. |

Figure 2: Articles per Year.

5.4 RQ4. What Are the Most

Frequently Used Usability

Evaluation Methods in Accessible

Software?

Traditional methods such as usability testing,

interviews, and heuristic evaluations are commonly

used (Falconi et al., 2023; Marsh, 1999). However,

new approaches are being developed, such as

automated testing with DUXAIT-NG (Falconi

et al., 2023), which allows continuous and real-time

usability evaluation. Other methods, such as

cognitive walkthroughs and user feedback, are

also essential to understanding how people with

disabilities interact with software and identifying

areas that need improvement (Yigitbas et al., 2019).

5.5 RQ5. How Are Usability Tools

Evaluated in Terms of Their

Effectiveness in Identifying

Accessibility Barriers?

The effectiveness of tools is generally measured

by their ability to identify accessibility flaws and

the accuracy in adapting interfaces for specific

disabilities. Tools like MUSE (Paternò et al., 2017) are evaluated for their ability to detect flaws during navigation and offer recommendations. Moreover, the flexibility of tools like Guideliner (Marenkov et al., 2018) and the Mobile Friendly Test Tool (Paternò et al., 2017) is crucial, as they can be applied in different accessibility contexts for users with varying needs.

5.6 RQ6. Is There a Correlation

Between the Type of Disability and

Preference for Specific Usability

Tools?

Yes, the preference for usability tools may vary depending on the type of disability. For example, people with visual impairments may benefit from tools that adjust contrast and text readability, such as Google's Mobile Friendly Test Tool (Paternò et al., 2017). In contrast, people with motor impairments may prefer tools that adapt navigation and facilitate interaction control (Yigitbas et al., 2019; Jahan et al., 2019; Bessghaier et al., 2021).

5.7 RQ7. How Do Usability Analysis

Tools Handle Accessible Mobile and

Web Interfaces?

Tools like Google’s Mobile Friendly Test Tool and

MUSE are designed to evaluate mobile interfaces

and test navigability and accessibility on mobile

devices. These tools use contextual and behavioral

data to optimize design and ensure that interfaces are

accessible and functional on different devices. Tools

like Guideliner, in addition to evaluating usability

guidelines, also allow customization for accessing

content in an optimized way on mobile and web

platforms (Lettner and Holzmann, 2012; Paternò et al., 2017; Marenkov et al., 2018; Bessghaier et al., 2021; Gonçalves et al., 2016; Zhang et al., 2009; Baguma, 2018).

5.8 RQ8. What Challenges Are Faced in

Adapting Usability Tools for People

with Disabilities?

The main difficulty is personalizing the tools to fit

the specific needs of each type of disability. The

tools need to be adaptable enough to ensure

accessibility without losing effectiveness in

evaluation. Additionally, integrating automated

resources that provide continuous feedback tailored

to different types of disabilities continues to be an

essential technical challenge (Paternò et al., 2017;

Bessghaier et al., 2021; Marenkov et al., 2018;

Marsh, 1999; Baguma, 2018; Holmes et al., 2019).

5.9 RQ9. What Is the Impact of

Usability Analysis Tools on

Developing More Inclusive

Software?

Usability analysis tools have a significant impact

as they help identify accessibility flaws and adjust

the software to serve users with disabilities better.

This contributes to digital inclusion, promoting better

experiences and greater functionality for all users

(Falconi et al., 2023; Jahan et al., 2019; Bessghaier

et al., 2021; Marsh, 1999; Baguma, 2018; Holmes

et al., 2019).

5.10 RQ10. What Are the Gaps in

Research on Usability Tools

Focused on Accessibility?

Research still faces gaps, especially in developing

tools that effectively integrate automated analysis

with accessibility. For example, Yigitbas et al. (2019) highlight that real-time customization of interfaces is still an open area of research. Another need is empirical studies on the impact of tools on users with specific disabilities (Au et al., 2008; Paternò et al., 2017; Jahan et al., 2019; Yigitbas et al., 2019; Holmes et al., 2019).

6 DISCUSSION

The analysis of the results demonstrates significant

progress in developing tools focused on software

usability and accessibility, especially those that

integrate automated assessments with specific

guidelines, such as DUXAIT-NG, Guideliner, and

MUSE. These solutions are central to identifying

barriers and promoting inclusive interfaces for users

with different disabilities. There are still challenges

to be overcome, especially in customization to meet

the specific needs of each user group, considering

the technical limitations that hinder effective

universal adaptation. Although there are tools

focused on mobile devices and the web, such as

the Mobile-Friendly Test Tool and MUSE, the

restrictions of these environments, such as reduced

space and interaction limitations, continue to

represent substantial challenges for accessibility.

The impact of usability analysis tools on digital


inclusion is evident, promoting continuous interface

improvements and strengthening accessible design

practices. There are still gaps to be filled, such as

the lack of empirical studies validating these tools’

application in diverse contexts and in real time. The

need for technological advances becomes crucial to

enable dynamic and continuous adaptations, ensuring

that tools not only identify problems but also assist

in implementing practical solutions for software

accessibility.

7 FINAL CONSIDERATIONS

The study provides a comprehensive overview of

how different technologies and methods have been

applied to identify and address accessibility barriers

in software. Tools such as DUXAIT-NG, Guideliner,

and MUSE demonstrate advances in integrating

automated assessments and specific adaptations for

different disabilities but still have limitations in

universally meeting user needs. This systematic

mapping highlights the importance of documenting

the state of the art in usability and accessibility,

providing a basis for future research and improving

practices in developing inclusive software. We

emphasize that accessibility is not just a technical

issue but an ethical and social commitment, requiring

collaborative efforts between researchers, developers,

and stakeholders.

For future work, we intend to expand the research

to include databases such as IEEE Xplore, Web

of Science, and Scopus, broadening the scope of

the studies analyzed. This approach will allow

for the identification of new methodologies and the

validation of the results obtained. Empirical studies

will be conducted to evaluate the effectiveness of

the tools analyzed in different scenarios and with

various audiences, deepening the understanding of

their limitations and potential in creating accessible

and inclusive software.

REFERENCES

Adinda, P. P. and Suzianti, A. (2018). Redesign of

user interface for e-government application using

usability testing method. In Proceedings of the

4th International Conference on Communication and

Information Processing, pages 145–149.

Alnahdi, G. (2014). Assistive technology in

special education and the universal design for

learning. Turkish Online Journal of Educational

Technology-TOJET, 13(2):18–23.

Au, F. T., Baker, S., Warren, I., and Dobbie, G.

(2008). Automated usability testing framework. In

Proceedings of the ninth conference on Australasian

user interface-Volume 76, pages 55–64.

Baguma, R. (2018). Usability evaluation of the etax portal

for uganda. In Proceedings of the 11th International

Conference on Theory and Practice of Electronic

Governance, pages 449–458.

Bessghaier, N., Soui, M., Kolski, C., and Chouchane, M.

(2021). On the detection of structural aesthetic defects

of android mobile user interfaces with a metrics-based

tool. ACM Transactions on Interactive Intelligent

Systems (TiiS), 11(1):1–27.

Cole, J. S., Bergin, D. A., and Whittaker, T. A. (2008).

Predicting student achievement for low stakes tests

with effort and task value. Contemporary Educational

Psychology, 33(4):609–624.

de Vasconcelos, L. G. and Baldochi Jr, L. A. (2012).

Towards an automatic evaluation of web applications.

In Proceedings of the 27th Annual ACM Symposium

on Applied Computing, pages 709–716.

Falconi, F., Moquillaza, A., Lecaros, A., Aguirre, J.,

Ramos, C., and Paz, F. (2023). Planning and

conducting heuristic evaluations with duxait-ng. In

Proceedings of the XI Latin American Conference on

Human Computer Interaction, pages 1–2.

Gonçalves, L. F., Vasconcelos, L. G., Munson, E. V.,

and Baldochi, L. A. (2016). Supporting adaptation

of web applications to the mobile environment with

automated usability evaluation. In Proceedings of the

31st Annual ACM Symposium on Applied Computing,

pages 787–794.

Harrison, R., Flood, D., and Duce, D. (2013). Usability

of mobile applications: literature review and rationale

for a new usability model. Journal of Interaction

Science, 1:1–16.

Holmes, S., Moorhead, A., Bond, R., Zheng, H., Coates,

V., and McTear, M. (2019). Usability testing

of a healthcare chatbot: Can we use conventional

methods to assess conversational user interfaces? In

Proceedings of the 31st European Conference on

Cognitive Ergonomics, pages 207–214.

Jahan, M. R., Aziz, F. I., Ema, M. B. I., Islam, A. B.,

and Islam, M. N. (2019). A wearable system for

path finding to assist elderly people in an indoor

environment. In Proceedings of the XX International

Conference on Human Computer Interaction, pages

1–7.

Keele, S. et al. (2007). Guidelines for performing systematic literature reviews in software engineering. Technical report, ver. 2.3, EBSE Technical Report, EBSE.

Kitchenham, B. and Brereton, P. (2013). A systematic

review of systematic review process research in

software engineering. Information and software

technology, 55(12):2049–2075.

Kumar, B. A. and Mohite, P. (2016). Usability

guideline for mobile learning apps: An empirical

study. International Journal of Mobile Learning and

Organisation, 10(4):223–237.


Kumar, B. A. and Mohite, P. (2018). Usability of mobile

learning applications: a systematic literature review.

Journal of Computers in Education, 5:1–17.

Lettner, F. and Holzmann, C. (2012). Automated and

unsupervised user interaction logging as basis for

usability evaluation of mobile applications. In

Proceedings of the 10th International Conference on

Advances in Mobile Computing & Multimedia, pages

118–127.

Lyner-Cleophas, M. (2019). Assistive technology enables

inclusion in higher education: The role of higher

and further education disability services association.

African Journal of Disability, 8(1):1–6.

Marenkov, J., Robal, T., and Kalja, A. (2018). Guideliner:

A tool to improve web ui development for better

usability. In Proceedings of the 8th International

Conference on Web Intelligence, Mining and

Semantics, pages 1–9.

Marsh, T. (1999). Evaluation of virtual reality systems for

usability. In CHI’99 extended abstracts on Human

factors in computing systems, pages 61–62.

McNicholl, A., Casey, H., Desmond, D., and Gallagher,

P. (2021). The impact of assistive technology use

for students with disabilities in higher education:

a systematic review. Disability and rehabilitation:

assistive Technology, 16(2):130–143.

Mji, G., MacLachlan, M., Melling-Williams, N., and

Gcaza, S. (2009). Realising the rights of disabled

people in africa: An introduction to the special issue.

Disability and Rehabilitation, 31(1):1–6.

Ndlovu, S. (2021). Provision of assistive technology

for students with disabilities in south african higher

education. International Journal of Environmental

Research and Public Health, 18(8):3892.

Nedungadi, P. and Raman, R. (2012). A new

approach to personalization: integrating e-learning

and m-learning. Educational Technology Research

and Development, 60:659–678.

Nielsen, J. (1994). Usability engineering. Morgan

Kaufmann.

Paternò, F., Schiavone, A. G., and Conti, A. (2017).

Customizable automatic detection of bad usability

smells in mobile accessed web applications. In

Proceedings of the 19th international conference on

human-computer interaction with mobile devices and

services, pages 1–11.

Paternò, F., Schiavone, A. G., and Pitardi, P. (2016).

Timelines for mobile web usability evaluation. In

Proceedings of the International Working Conference

on Advanced Visual Interfaces, pages 88–91.

Petersen, K., Feldt, R., Mujtaba, S., and Mattsson, M.

(2008). Systematic mapping studies in software

engineering. In 12th international conference on

evaluation and assessment in software engineering

(EASE). BCS Learning & Development.

Tony, M. P. (2019). The effectiveness of assistive

technology to support children with specific learning

disabilities: Teacher perspectives.

Velásquez-Durán, A. and Ramírez Montoya, M. S. (2018).

Research management systems: Systematic mapping

of literature (2007-2017).

Yigitbas, E., Hottung, A., Rojas, S. M., Anjorin, A., Sauer,

S., and Engels, G. (2019). Context-and data-driven

satisfaction analysis of user interface adaptations

based on instant user feedback. Proceedings of the

ACM on human-computer interaction, 3(EICS):1–20.

Zhang, D. and Adipat, B. (2005). Challenges,

methodologies, and issues in the usability testing

of mobile applications. International journal of

human-computer interaction, 18(3):293–308.

Zhang, X., Liu, J., Li, Y., and Zhang, Y. (2009). How usable

are operational digital libraries: a usability evaluation

of system interactions. In Proceedings of the 1st

ACM SIGCHI symposium on Engineering interactive

computing systems, pages 177–186.
