Machine Learning for Ontology Alignment

Faten Abbassi

1 a

, Yousra Bendaly Hlaoui

1 b

and Faouzi Ben Charrada

2 c

1

LIPSIC Laboratory, University of Tunis El Manar Tunis, Tunisia

2

LIMTIC Laboratory, University of Tunis El Manar Tunis, Tunisia

Keywords:

Machine Learning, Normalization Techniques, Reference Ontologies, Conference Track, Benchmark Track.

Abstract:

This article proposes an ontology alignment approach that combines supervised machine learning models and

schema-matching techniques. Our approach analyzes reference ontologies and their alignments provided by

OAEI to extract ontological data matrices and confidence vectors. In addition, these ontological data matrices

are normalized using normalization techniques to obtain a coherent format for enhancing the accuracy of the

alignments. From the normalized data, syntactic and external similarity matrices are generated via individual

matchers before being concatenated to build a final similarity matrix representing the correspondences be-

tween two ontologies. This matrix and the confidence vector are then used by six machine learning models,

such as Logistic Regression, Random Forest Classifier, Neural Network, Linear SVC, K-Neighbors Classifier

and Gradient Boosting Classifier, to identify ontological similarities. To evaluate the performances of our ap-

proach, we have compared our results with our previous results (Abbassi and Hlaoui, 2024a). The experiments

are performed over the reference ontologies of the benchmark and conference tracks based on their reference

alignments provided by OAEI.

1 INTRODUCTION

The heterogeneity of information representations in

computer science results from the use of different vo-

cabularies, concepts and structures, which makes in-

teroperability between systems complex, particularly

on the Internet. To overcome these challenges, on-

tologies have been proposed as a solution. An ontol-

ogy is a formal and explicit specification of a shared

conceptualization (Gruber, 1993; Gruber and Olsen,

1994). They unify different points of view by re-

ducing or eliminating conceptual and terminological

confusion (Uschold and Gruninger, 1996). Indeed,

the same ontology conceptualizes the same knowl-

edge that could be specified by different ontologies.

The management of ontological diversity relies on

ontology alignment, which aims to unify heteroge-

neous entities while conserving the coherence of in-

formation. An ontology alignment process consists

of computing similarity measures between the differ-

ent classes and links of a pair of ontologies (Euzenat

et al., 2007). This process is performed by schema

matching techniques, which calculate similarities at

a

https://orcid.org/0000-0001-7525-4505

b

https://orcid.org/0000-0002-3476-0185

c

https://orcid.org/0000-0001-5484-547X

different levels of ontology granularity (Rahm and

Bernstein, 2001; Euzenat et al., 2007; Shvaiko and

Euzenat, 2005): element level (classes and individu-

als), internal structure level (data properties) and ex-

ternal structure level(subclasses, disjoint classes and

relationships). Each of these techniques is imple-

mented by individual matchers that compute similar-

ity measures at different levels of ontology granular-

ity (Rahm and Bernstein, 2001; Euzenat et al., 2007;

Shvaiko and Euzenat, 2005) . In addition, the differ-

ent individual matchers depend on how they generally

interpret the input information(Rahm and Bernstein,

2001; Euzenat et al., 2007; Shvaiko and Euzenat,

2005). Each of them calculates similarity measures

according to syntactic interpretation criteria, where

labels are treated as character strings, and/or external

interpretation criteria, where labels are perceived as

linguistic objects through external resources, such as

a thesaurus. Schema-matching techniques are based

not only on individual matching matchers but also

on composite matchers. In fact, composite match-

ers combine ontology similarity measures obtained

by different individual matchers to determine the fi-

nal ontology alignment decision for a given pair of

ontologies(Rahm and Bernstein, 2001; Euzenat et al.,

2007; Shvaiko and Euzenat, 2005). We have opted

668

Abbassi, F., Hlaoui, Y. B. and Ben Charrada, F.

Machine Learning for Ontology Alignment.

DOI: 10.5220/0013425700003928

In Proceedings of the 20th International Conference on Evaluation of Novel Approaches to Software Engineering (ENASE 2025), pages 668-675

ISBN: 978-989-758-742-9; ISSN: 2184-4895

Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)

to use machine learning algorithms to automate com-

posite matchers. This choice is justified by the effi-

ciency and the potential of these algorithms, to en-

hance the accuracy of this task, presented in (Abbassi

and Hlaoui, 2024a; Abbassi and Hlaoui, 2024b; Xue

and Huang, 2023). Since we manipulate labeled data

as well as continuous data representing the values of

ontological similarity measures defined in the inter-

val [0, 1], we have developed an approach based on

supervised machine learning algorithms dedicated to

classification.

Despite the large number of ontology alignment

approaches based on supervised machine learning in

the literature (Abbassi and Hlaoui, 2024a; Abbassi

and Hlaoui, 2024b; Xue and Huang, 2023), these ap-

proaches have several limits, including:

• The use of a limited number of similarity measure

techniques and machine learning models.

• Partial exploitation of the ontology structure in

the alignment process reduces the accuracy of ob-

tained results.

• Use of incorrect values for similarity measures,

leading to erroneous results.

• Aligning classes separately from their subclasses,

disjoint classes, data properties, object properties,

individuals and comments, which has a negative

impact on the accuracy of the alignment process.

To overcome these limits, we propose a new on-

tology alignment approach that extends our previ-

ous work (Abbassi and Hlaoui, 2024a) by integrat-

ing other ontological information, exploring new su-

pervised machine learning models and comparing our

results with our previous approach.

Given the current state of the literature, the contri-

bution of the present work consists of :

• Testing a large number of supervised machine

learning techniques to ensure better ontology

alignment.

• Enrichment of the alignment approach by adding

new ontological information.

• Alignment of entities according to ontology struc-

ture.

The remainder of this paper is structured as fol-

lows. Section 2 provides a review of related works.

Section 3 presents our alignment approach in de-

tail. Section 4 presents the experimentation and qual-

ity evaluation of our alignment approach. Section 5

presents a discussion of this paper. Finally, Section

6 concludes this paper and proposes future perspec-

tives.

2 RELATED WORKS

In this study, we are particularly interested in ap-

proaches based on machine learning and schema-

matching techniques (Abbassi and Hlaoui, 2024a;

Abbassi and Hlaoui, 2024b; Xue and Huang, 2023).

The approach proposed by (Abbassi and Hlaoui,

2024b) uses several machine learning models such

as LogisticRegression, GradientBoostingClassifier,

GaussianNB, and KNeighborsClassifier to perform

ontology alignment. It uses 21 string-based similarity

measures and three language-based similarity mea-

sures. These techniques are applied to class labels,

data property labels and labels of relationships be-

tween classes. However, this approach has certain

limits, notably (i) limited exploitation of the global

ontological structure and (ii) lack of precision in some

cases. The authors in (Abbassi and Hlaoui, 2024a)

have developed an approach using 30 similarity mea-

sure techniques based on string and language as-

pects. These techniques are applied to class labels,

sub-classes, data properties, relationships between

classes and individual labels. It uses five machine

learning models: LogisticRegression, RandomForest-

Classifier, Neural Network, LinearSVC and Gradi-

entBoostingClassifier. However, this approach has

certain limits. It lacks precision for some ontology

pairs and does not exploit the integral structure of

ontologies. The approach described in (Xue and

Huang, 2023) is based on syntactic similarity mea-

sure techniques such as the Levenshtein distance, the

Jaro distance, the Dice coefficient, the N-gram and the

WordNet language technique. It combines an unsu-

pervised machine learning model, the Generative Ad-

versarial Network, with the Simulated Annealing Al-

gorithm (SA-GAN). However, this approach has an

important limit: a lack of accuracy, mainly due to

the non-respect of ontology structure. Specifically,

the alignment process treats entities independently of

their properties and subclasses, which reduces align-

ment accuracy.

3 PROPOSED APPROACH

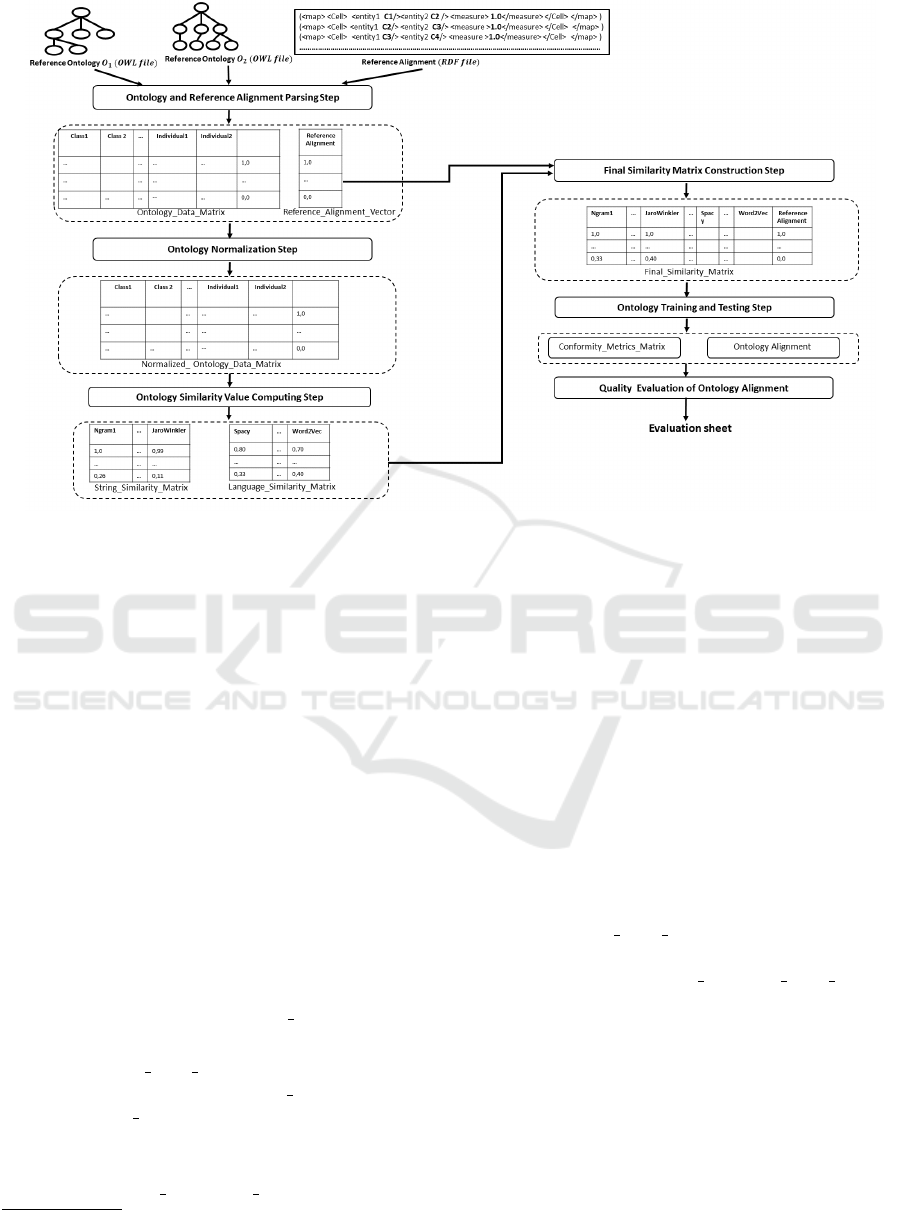

According to figure1, this approach is mainly com-

posed of five steps, namely Ontology and Refer-

ence Alignment Parsing Step, Ontology Normaliza-

tion Step, Ontology Similarity Value Computing Step,

Final Similarity Matrix Construction Step, Ontology

Training and Testing Step and Quality Evaluation of

Ontology Alignment Step.

Machine Learning for Ontology Alignment

669

Figure 1: Proposed Approach.

3.1 Step 1: Ontology and Reference

Alignment Parsing

As Figure1 shows, this phase takes as input a pair of

reference ontologies in the form of two OW L

1

files

and their corresponding reference alignment in the

form of an RDF

2

files, which are provided by OAEI.

As output, it produces a matrix and a vector, which are

essential for the next step in our alignment approach.

The construction of the matrix consists of the extrac-

tion of ontological data from the input ontologies,

while the construction of the vector is based on the ex-

traction of the confidence values associated with these

ontological data, calculated by the OAEI from the cor-

responding reference alignment file. The extracted

ontological data includes class labels, sub-class la-

bels, disjoint class labels, data property labels (dat-

aProperty), relationship labels between classes (ob-

jectProperty), individual labels and class comments.

This phase is implemented by Data

Construction al-

gorithm 1, which generates the following results:

• The Ontology Data Matrix, where each row rep-

resents an instance of a VData Ontology vector.

Each VData Ontology vector contains the onto-

logical data extracted from the input ontology

pair.

• The Reference Alignment Vector, which con-

1

https://www.w3.org/OWL/

2

https://www.w3.org/RDF/

tains the confidence values (calculated by OAEI)

associated with the data in the ontology data ma-

trix from the reference alignment file.

Thus, each of the product matrix and the vector has

a number of rows equal to |ClassesO1 ×ClassesO2|.

Where |ClassesO1 ×ClassesO2| represents the cardi-

nality of the Cartesian product of ontology classes O1

and ontology classes O2.

3.2 Step 2: Ontology Normalization

The objective of this step is to clear and transform

the ontological data extracted in the first phase into

a common format, in order to enhance the alignment

results. This step takes as input the ontological data

matrix (Ontology Data Matrix) produced in the pre-

vious step and generates, as output, a normalized

data matrix ( Normalized Ontology Data Matrix)

required for the next step. In fact, we have ap-

plied diverse normalization techniques to class la-

bels, sub-class labels, disjoint class labels, data prop-

erty labels (dataProperty), relationship labels between

classes (objectProperty), individual labels and com-

ments associated to classes. The used normalization

techniques include: case normalization technique,

blank normalization technique, link stripping tech-

nique, punctuation elimination, diacritics suppres-

sion technique and digit suppression technique.

ENASE 2025 - 20th International Conference on Evaluation of Novel Approaches to Software Engineering

670

Algorithm 1: Data Construction.

Data: O1.owl, O2.owl, Re f erence Alignment.rd f;

Result: Ontology Data Matrix: array of V Data Ontology

vector (CSV file);

Re f erence Alignment Vector: Vector of reference alignment;

i ← 0;

for class, ClassO1, of O1.OW L do

for class, ClassO2, of O2.OW L do

Sub-Class1←Sub-classes Of ClassO1;

Sub-Class2←Sub-classes Of ClassO2;

Disjoint-Class1←Disjoint-classes Of ClassO1;

Disjoint-Class2←Disjoint-classes Of ClassO2;

Data-Propclass1 ← Data Properties Of ClassO1;

Data-Propclass2 ← Data Properties Of ClassO2;

Object-Propclass1 ← Object Properties Of ClassO1;

Object-Propclass2 ← Object Properties Of ClassO2;

Individuals-class1 ← individuals Of ClassO1;

Individuals-class2 ← individuals Of ClassO2;

Comments-class1 ← Comments Of ClassO1;

Comments-class2 ← Comments Of ClassO2;

V Data Ontology←(ClassO1, ClassO1, Sub −Class1,

Sub −Class2, Dis joint −Class1, Dis joint −Class2,

Data − Propclass1, Data − Propclass1,

Ob ject − Propclass1, Ob ject −Propclass2,

Individuals − class1, Individuals − class2,

Comments − class1, Comments − class2);

Ontology Data Matrix[i]← V Data Ontology;

if (ClassO1, ClassO2 ) ∈ Re f erence Alignment.rd f

then

Con f ident alignment ← 1 ;

else

Con f ident alignment ← 0 ;

end

Re f erence Alignment Vector[i]←

Con f ident alignment;

i ← i+1;

end

end

3.3 Step 3: Ontology Similarity Value

Computing

This step takes as input the normalized ontology data

matrix produced at the end of the Ontology Nor-

malization step. It aims to calculate the syntactic

and external similarity measures for the different en-

tity pairs stored in the input normalized ontology

data matrix. As output, the current step generates

two matrices: a String Similarity Matrix and a Lan-

guage Similarity Matrix. These matrices contain the

syntactic and external similarity values calculated for

the ontological data stored in the input matrix.

To build the syntactic similarity matrix, we used

26 individual matchers implementing 26 string-based

techniques (Abbassi and Hlaoui, 2024a)(see Table 1).

For the construction of the external similarity ma-

trix, we have used four individual matchers imple-

menting four language-based techniques(see Table 1).

Algorithm 2: Calculation Similarity Values.

Data: Normalized Ontology Data Matrix: matrix of

Normalized V Data Ontology vectors;

Result: String Similarity Matrix, Language similarity Matrix :

matrices of real values (CSV file);

i ← 0;

for V DATA of Normalised Ontology Data Matrix do

for each in f ormation of VDATA do

SV sim Class←String Sim Class

(NClassO1,NClassO2);

SV sim SubClass←String Sim SubClass

(NSubClassO1,NSubClassO2);

SV sim Dis jointClass←String Sim DisjointClass

(NDis jointClassO1, NDis jointClassO2);

SV sim DataProperties←String Sim DataProperties

(NDataPropertiesO1,NDataPropertiesO2);

SV sim Ob jectProperties←String Sim ObjectProperties

(NOb jectPropertiesO1,NOb jectPropertiesO2);

SV sim Individuals←String Sim Individuals

(NIndividualsO1,NIndividualsO2);

SV sim ClassComments←String Sim ClassComments

(NClassCommentsO1,NClassCommentsO2);

Syntactic V Sim←GlobalConstructor(SVsim Class,

SV sim SubClass, SVsim Dis jointClass,

SV sim DataProperties, SVsim Ob jectProperties,

SV sim Individuals, SVsim ClassComments);

String Similarity Matrix [i]←Syntactic V Sim ;

i ← i+1;

end

end

j ← 0;

for V DATA of Normalised Ontology Data Matrix do

for each in f ormation of VDATA do

LV sim Class←Language Sim Class

(NClassO1,NClassO2);

LV sim SubClass←Language Sim SubClass

(NSubClassO1,NSubClassO2);

LV sim Dis jointClass←Language Sim DisjointClass

(NDis jointClassO1,NDis jointClassO2);

LV sim DataProperties←Language Sim DataProperties

(NDataPropertiesO1,NDataPropertiesO2);

LV sim Ob jectProperties←Language Sim ObjectProperties

(NOb jectPropertiesO1,NOb jectPropertiesO2);

LV sim Individuals←Language Sim Individuals

(NIndividualsO1,NIndividualsO2);

LV sim ClassComments←Language Sim ClassComments

(NClassCommentsO1,NClassCommentsO2);

Language V Sim←GlobalConstructor(LV sim Class,

LV sim SubClass, LV sim Dis jointClass,

LV sim DataProperties, LV sim Ob jectProperties,

LV sim Individuals, LV sim ClassComments);

Language Similarity Matrix [j]←Language VSim ;

j ← j+1;

end

end

These techniques are applied to each pair of elements

stored in the input ontology data matrix. They in-

clude the pairs of classes, the pairs of subclasses,

the pairs of disjoint classes, the pairs of data proper-

Machine Learning for Ontology Alignment

671

Table 1: Used String and language based techniques.

Technique class Techniques

String-based

techniques

N-gram 1, N-gram 2, N-gram 3, N-gram 4, Dice coefficient, Jaccard similarity, Jaro measure, Monge-Elkan, Smith-Waterman,

Needleman-Wunsh, Affine gap, Bag distance, Cosine similarity, Partial Ratio, Soft TF-IDF, Editex, Generalized Jaccard, Jaro-

Winkler, Levenshtein distance, Partial Token Sort Fuzzy Wuzzy Ratio, Soundex, TF-IDF, Token Sort, TverskyIndex, Overlap

coefficient (Euzenat et al., 2007; Abbassi and Hlaoui, 2024a).

Language-based

techniques

Wu and Palmer similarity,Word2vec, Sentence2vec similarity and Spacy (Euzenat et al., 2007; Abbassi and Hlaoui, 2024a).

ties (dataProperty), the pairs of relationships between

classes (objectProperty), the pairs of individuals and

the pairs of class comments. The current step is im-

plemented by the Calculation

Similarity Values al-

gorithm(cf. Algorithm 2), which uses the following

functions:

1. String Sim Entity to create and return syn-

tactic similarity vectors for each pair of

ontological entities stored in the Normal-

ized Ontology Data Matrix.

2. Language Sim Entity to create and return exter-

nal similarity vectors for each pair of entities in

the Normalized Ontology Data Matrix.

3. GlobalConstructor to concatenate the syntactic

and external similarity vectors and produce the

Syntactic VSim and Language VSim vectors for

each pair of classes of the pair of ontologies to be

aligned. As a result, the Syntactic VSim vector

contains 182 similarity values (26 x 7), while the

Language VSim vector contains 28 (4 x 7) simi-

larity values.

Indeed, each of generated matrices has a number of

rows equal to the cardinality of the cartesian product

of ontology O1 classes and ontology O2 classes, i.e.

|ClassesO1 ×ClassesO2|.

3.4 Step 4: Final Similarity Matrix

Construction

This step takes as input the similarity matri-

ces generated by the previous step and the

Re f erence Alignment Vector produced by the On-

tologies and Reference Alignment Parsing step.

The objective of this step is to combine these ma-

trices with the reference vector to obtain a final

similarity matrix. Hence, the construction of this

Final

Similarity Matrix proceeds as follows:

• Step 1: Each row of the syntactic simi-

larity matrix, representing an instance of the

Syntactic V Sim vector, each row of the exter-

nal similarity matrix, representing an instance of

the Language V Sim vector, and each row of the

Re f erence Alignment Vector are concatenated to

form a final similarity vector, named Final V Sim.

This vector contains all the calculated similarity

values and the reference alignment of each pair of

elements concerned. It is defined as follows:

Final VSim =(Syntactic VSim, Language VSim

, ConfidentAlignement)

This vector contains 210 similarity values (re-

sulting from the concatenation of the of the

Syntactic V Sim and the Language V Sim vectors)

and integrates the reference alignment for the cor-

responding pair of classes.

• Step 2: The Final Similarity Matrix is then con-

structed, where each row represents an instance

of the calculated Final V Sim. This matrix has the

same number of rows as the similarity matrices

created at the end of the previous step.

3.5 Step 5: Ontology Training and

Testing

The role of this phase is to produce the final align-

ment result between a given pair of ontologies. It

takes as input the Final Similarity Matrix generated

by the previous step and six machine learning models.

The selected models are Logistic Regression, Random

Forest Classifier, Neural Network, Linear SVC, K-

Neighbors Classifier and Gradient Boosting Classi-

fier, which are the most frequently used in the litera-

ture (Bento et al., 2020; Abbassi and Hlaoui, 2024a;

Xue and Huang, 2023). As an output, this step pro-

vides the degree of similarity of the input ontology

pair and generates a matrix of conformity metrics.

This matrix includes the precision (P), recall (R) and

f-measure , calculated by each used machine learn-

ing model (Euzenat et al., 2007; Abbassi and Hlaoui,

2024a). These measures are defined as follows:

P : Λ × Λ → [0..1] R : Λ × Λ → [0..1]

P(A, T ) =

|T ∩ A|

|A|

R(A, T ) =

|T ∩ A|

|T |

f − measure =

2 ∗ P(A, T ) ∗ R(A, T )

P(A, T )+ R(A, T )

ENASE 2025 - 20th International Conference on Evaluation of Novel Approaches to Software Engineering

672

Where Λis the set of all values of the computed

alignments and the reference alignments provided by

OAEI, T is the set of all values of the reference align-

ments, A is the set of all values of the computed align-

ments and |T ∩A| is the cardinality of the set of values

of the calculated alignments relative to the values of

the reference alignments.

The training and testing process for each used ma-

chine learning model is detailed as follows:

• Step 1 Model Training: In this first step, we

build a training matrix consisting of 60% of the

rows of the final similarity value matrix for a pair

of ontologies to be aligned. Each machine learn-

ing model is then trained from this matrix, us-

ing the first 210 columns to build their necessary

datasets for evaluating the degree of similarity be-

tween the ontologies. This creates trained models

capable of capturing the relationships between on-

tological entities.

• Step 2 Model Testing:: This stage consists of

preparing a test matrix containing the remaining

40% of the rows of Final Similarity Matrix for

the same pair of ontologies. Each trained model

is tested based on the first 210 columns of this test

matrix, to produce the final alignment for the cur-

rent pair of ontologies. This test evaluates each

model’s capacity to generalize and predict align-

ments for new data.

• Step 3 Model Evaluation: Finally, each ma-

chine learning model is evaluated using confor-

mity metrics, which measure the degree of corre-

spondence between the degrees of similarity pre-

dicted by the models and the confidence align-

ment values provided in the Re f erenceAlignment

column. This evaluation is used to determine the

accuracy and efficiency of the models in establish-

ing alignments between ontologies.

We have implemented the training and testing process

for each pair of reference ontologies and for the six

machine learning models that we have used. As a

result, we have generated 29 matrices of conformity

metrics.

3.6 Step 6: Quality Evaluation of

Ontology Alignment

The main objective of this step is to validate the re-

sults obtained by the different machine learning mod-

els that we have used. This validation is based on

the matrices of conformity metrics generated by the

previous step, specifically focusing on the f-measure

metric provided by each model for the set of align-

ment tests performed on the reference ontology pairs.

This choice is justified because the f-measure calcu-

lates the harmonic mean of precision and recall, giv-

ing them equivalent importance (Euzenat et al., 2007;

Abbassi and Hlaoui, 2024a). This validation consists

of comparing the results provided by our approach

with those of our previous approach (Abbassi and

Hlaoui, 2024a).

4 EXPERIMENTATION AND

QUALITY EVALUATION OF

THE ALIGNMENT APPROACH

To implement the different steps of our approach,

we have configured a work environment using Ana-

conda 1.10.1 and the Spyder editor, specially de-

signed for Python development. This configuration

includes the installation and the use of tools such

as the py stringmatching, the beauti f ulsoup4, the

Owlready2, the pandas, the f uzzycomp, the NGram,

the WordNet, the nltk(NaturalLanguageToolkit), the

spacy, the en core web lg, the Gensim, the tqdm,

the Keras and the sklearn libraries, as well as the

GoogleNews − vectors − negative3 − 0011 Dictio-

nary. These tools are running on a laptop with Win-

dows 10 Professional N 64-bit operating system, In-

tel Core i7-8550U processor (1.80 GHz - 1.99 GHz)

and 8 GB RAM. To configure the hyper parameters

of the different machine learning models that we have

used, we have employed those described in Table 2.

To experiment our approach, we have focused on the

benchmark

3

and conference

4

tracks, which are fre-

quently used in the literature. Each includes refer-

ence ontologies in OW L file format and their refer-

ence alignments in RDF file format. The reference

track comprises various ontologies modified accord-

ing to three test families: the 1xx family, the 2xx fam-

ily and the 3xx family. The conference track presents

the highest degree of heterogeneity compared with the

other tracks. It includes seven reference ontologies,

generating 21 pairs of ontologies with their reference

alignments. We have used eight cases of alignment of

the benchmark track and 21 cases of alignment of the

conf

´

erence track.

4.1 Quality Evaluation of the Current

Ontology Alignment Approach

The validation of the results provided by our align-

ment approach applied to the different tested machine

3

https://oaei.ontologymatching.org/2016/benchmarks/

4

http://oaei.ontologymatching.org/2024/conference/

Machine Learning for Ontology Alignment

673

Table 2: Hyper parameter tuning of used machine learning models.

Machine Learning Model Hyper parameters

Random Forest Classifier n estimators=100, max features=None, max depth=2

Neural Network Dense layer = 211 neurons with Activation function = relu. Dense output layer 1 neuron with activation

function sigmoid. Compiler of the algorithm with an adam optimizer. The Metric accuracy. The binary

crossentropy loss function.

Linear SVC C=0.5, penalty=”l2”, dual=False

K-Neighbors Classifier n neighbors=1

Gradient Boosting Classifier learning rate = 1, n estimators= 100

Logistic Regression max iter= 1000, solver=’lbfgs’

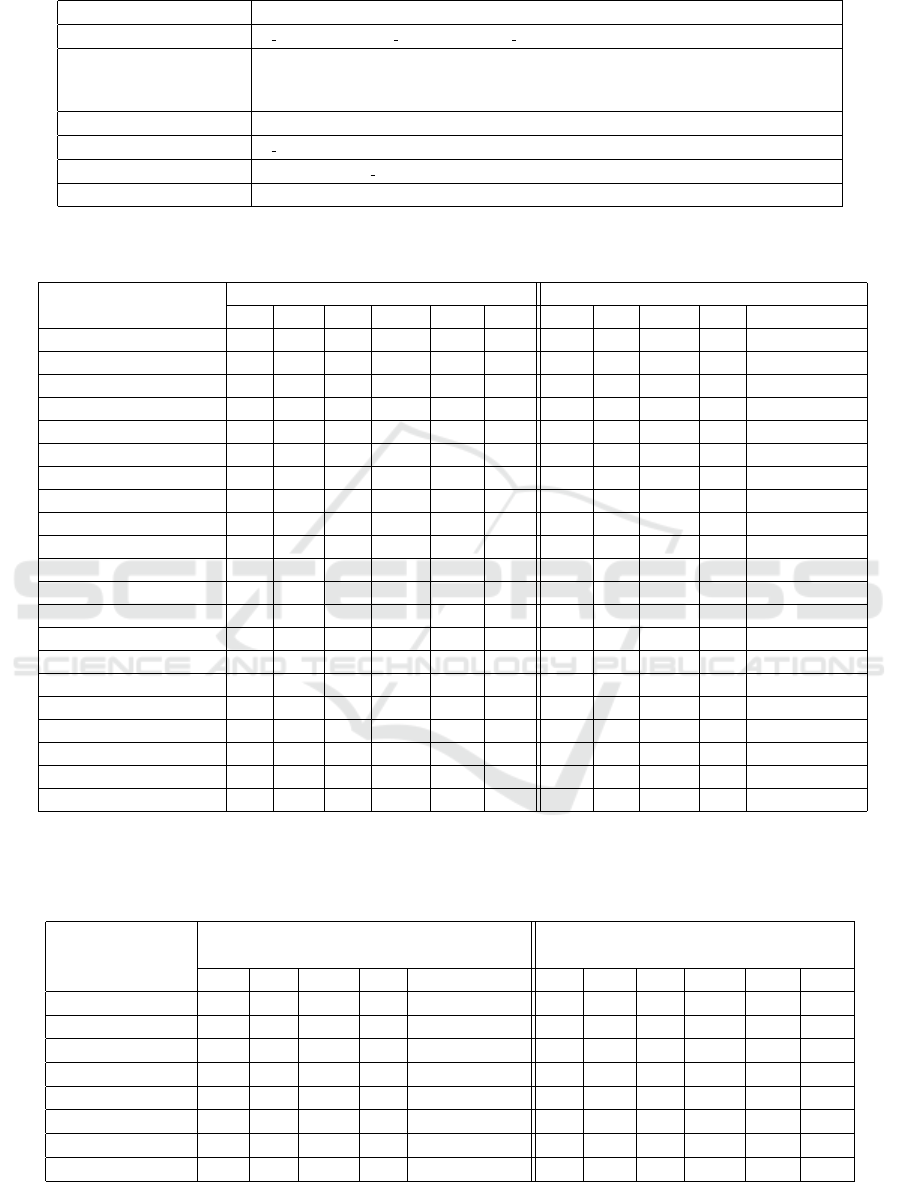

Table 3: Values of f-measure of our approach compared to our previous approach (Abbassi and Hlaoui, 2024a) for each pair

of ontology tests in the 2024 OAEI conference track.

Pair of Reference Ontologies

Our Approach Our previous Approach (Abbassi and Hlaoui, 2024a)

LR RFC NN LSVC KNC GBC XGB NN LSVC LR RFC

cmt-conference 0.66 0.40 0.88 0.40 0.52 0.57 0.44 0.80 0.44 0.44 0.40

cmt-confOf 0.60 0.50 0.64 0.62 0.50 0.66 0.53 0.53 0.53 0.53 0.57

cmt-edas 0.90 0.97 1.00 1.00 0.97 1.00 1.00 1.00 1.00 1.00 0.85

cmt-ekaw 0.74 0.82 0.90 0.88 0.86 0.85 0.76 0.76 0.76 0.76 0.76

cmt-iasted 0.64 0.60 1.00 0.97 0.90 0.80 0.88 1.00 0.88 0.88 0.57

cmt-sigkdd 0.88 0.77 0.97 0.86 0.81 0.96 0.88 0.88 0.88 0.82 0.62

conference-confOf 0.88 0.85 0.90 0.88 0.86 0.88 0.73 0.80 0.73 0.73 0.73

conference-edas 0.53 0.51 0.77 0.77 0.74 0.61 0.63 0.66 0.63 0.63 0.47

conference-ekaw 0.58 0.51 0.40 0.53 0.50 0.60 0.50 0.47 0.45 0.45 0.50

conference-iasted 0.50 0.44 0.51 0.40 0.44 0.53 0.44 0.55 0.44 0.52 0.44

conference-sigkdd 0.85 0.88 0.85 0.85 0.81 0.85 0.76 0.76 0.76 0.76 0.76

confOf-edas 0.66 0.66 0.77 0.71 0.76 0.77 0.69 0.69 0.69 0.63 0.57

confOf-ekaw 0.63 0.77 0.74 0.62 0.60 0.57 0.60 0.60 0.55 0.55 0.64

confOf-iasted 0.66 0.74 0.77 0.73 0.70 0.76 0.61 0.61 0.61 0.61 0.61

confOf-sigkdd 0.80 0.90 0.96 0.88 0.81 0.88 0.80 0.80 0.80 0.80 0.80

edas-ekaw 0.76 0.77 0.76 0.70 0.69 0.76 0.62 0.64 0.64 0.68 0.68

edas-iasted 0.53 0.63 0.66 0.60 0.54 0.44 0.51 0.51 0.51 0.46 0.51

edas-sigkdd 0.88 0.85 0.86 0.81 0.86 0.88 0.77 0.77 0.77 0.77 0.70

ekaw-iasted 0.74 0.88 0.90 0.88 0.80 0.90 0.74 0.75 0.74 0.66 0.74

ekaw-sigkdd 0.81 0.90 0.96 0.88 0.85 0.81 0.77 0.77 0.77 0.77 0.77

iasted-sigkdd 1.00 0.90 1.00 0.97 0.96 0.96 0.85 0.81 0.81 1.00 0.85

LR: LogisticRegression, RFC: RandomForest, GNB: GaussianNB, NN: Neural Network, LSVC: Linear SVC, KNC: KNeighborsClassifier, GBC:

GradientBoostingClassifier, XGB: XGBoost.

Table 4: Values of f-measure of our approach compared to our previous approach (Abbassi and Hlaoui, 2024a) for eight pairs

of ontology tests in the benchmark track.

Pair of Reference

Ontologies

Our previous approach (Abbassi and Hlaoui, 2024a) Our Approach

RFC NN LSVC LR GBC LR RFC NN LSVC KNC GBC

201-208 0.79 0.81 0.85 0.83 0.75 0.80 0.88 0.92 0.96 0.74 0.86

221-247 0.89 0.95 0.96 0.93 0.92 0.97 0.90 1.00 1.00 0.96 0.97

301-304 0.80 0.88 0.95 0.80 0.82 0.88 0.85 0.85 0.96 0.77 0.90

248-266 0.50 0.55 0.59 0.52 0.52 0.66 0.66 0.77 0.74 0.69 0.74

101-104 0.96 1.00 1.00 1.00 0.98 1.00 0.96 1.00 1.00 0.88 1.00

101-302 0.97 0.90 1.00 0.92 0.92 0.96 0.95 0.95 1.00 0.74 0.97

101-303 0.97 0.93 1.00 0.92 0.88 1.00 1.00 1.00 1.00 0.90 0.90

101-304 0.98 1.00 0.97 0.99 1.00 1.00 1.00 1.00 1.00 0.97 1.00

LR: LogisticRegression, GBC: GradientBoostingClassifier, KNC: KNeighborsClassifier, NN: Neural Network, RFC: RandomForest, LSVC: Linear SVC.

ENASE 2025 - 20th International Conference on Evaluation of Novel Approaches to Software Engineering

674

learning models is based on comparing the results ob-

tained and those of our previous approach (Abbassi

and Hlaoui, 2024a). This comparison is based on the

f-measure metric (see section 3.5), applied to all used

models (see Tables 3 and 4). The choice of the f-

measure is justified by its capacity to calculate the

harmonic mean of precision and recall according to

them equal importance (Euzenat et al., 2007; Abbassi

and Hlaoui, 2024a)(see section 3.5). Then, these re-

sults show a percentage higher than 70% up to 90%

for the conference track and a percentage higher than

60% up to 100% for the benchmark track. These per-

centages represent the better performance achieved

by our approach compared to the previous approach.

This confirms the enhanced quality and performance

of our results compared to those obtained by our pre-

vious results (Abbassi and Hlaoui, 2024a). This per-

formance is due to the construction of ontological

data matrices, respecting the structure of ontologies,

which was crucial in improving alignment results. Al-

though the same machine learning models are used,

with the addition of the Neighbors Classifier model,

the accuracy of the results is improved through richer

data matrices. However, some special cases, such as

the ontology pairs 101-104, 221-247, cmt-conference,

conference-ekaw, conference-iasted and edas-iasted,

show lower accuracy. This is often due to the struc-

ture and absence of certain ontological elements, such

as disjoint classes or comments.

5 CONCLUSION

This paper presents an ontology alignment method

based on supervised machine learning and automatic

schema-matching. Our approach follows a series of

successive steps: ontology and reference alignment

parsing step, ontology normalization step, ontology

similarity value computing step, final similarity ma-

trix construction step, ontology training and testing

step, and quality evaluation of ontology alignment

step. The first step consists to analyze the reference

ontologies and their alignments provided by the OAEI

to extract ontological data matrices and confidence

vectors. This forms a basis for further processing.

Then, these data are normalized into a coherent for-

mat, which enhances the accuracy of the alignments.

Syntactic and external similarity matrices are subse-

quently generated by individual matches applied to

the normalized data and then merged to create a fi-

nal similarity matrix representing the correspondence

between a pair of ontologies. This matrix is exploited

by machine learning models to identify ontological

similarities, and the quality of our alignment is evalu-

ated by comparing our results with those of our previ-

ous results (Abbassi and Hlaoui, 2024a). Our exper-

iments indicate that our approach improves accuracy

over previously published methods.

As future work, we propose enriching the align-

ment approach by adding other ontological enti-

ties, such as ontology comments, equivalent classes,

super-classes and equivalent individuals of a given

class.

REFERENCES

Abbassi, F. and Hlaoui, Y. B. (2024a). An ontology

alignment validation approach based on supervised

machine learning algorithms and automatic schema

matching approach. In 2024 IEEE 48th Annual

Computers, Software, and Applications Conference

(COMPSAC), pages 332–341. IEEE.

Abbassi, F. and Hlaoui, Y. B. (2024b). Supervised machine

learning models and schema matching techniques for

ontology alignment.

Bento, A., Zouaq, A., and Gagnon, M. (2020). Ontol-

ogy matching using convolutional neural networks. In

Proceedings of the 12th language resources and eval-

uation conference, pages 5648–5653.

Euzenat, J., Shvaiko, P., et al. (2007). Ontology matching,

volume 18. Springer.

Gruber, T. R. (1993). A translation approach to portable

ontology specifications. Knowledge acquisition,

5(2):199–220.

Gruber, T. R. and Olsen, G. R. (1994). An ontology for

engineering mathematics. In Principles of Knowledge

Representation and Reasoning, pages 258–269. Else-

vier.

Rahm, E. and Bernstein, P. A. (2001). A survey of ap-

proaches to automatic schema matching. the VLDB

Journal, 10(4):334–350.

Shvaiko, P. and Euzenat, J. (2005). A survey of schema-

based matching approaches. In Journal on data se-

mantics IV, pages 146–171. Springer.

Uschold, M. and Gruninger, M. (1996). Ontologies: Princi-

ples, methods and applications. The knowledge engi-

neering review, 11(2):93–136.

Xue, X. and Huang, Q. (2023). Generative adversarial

learning for optimizing ontology alignment. Expert

Systems, 40(4):e12936.

Machine Learning for Ontology Alignment

675