Time to Face the Truth: Do Digital Applications Really Help Students Learn?

Vassilis Trizonis [a], Odysseus Tsakiridis [b], Dimitrios Metafas [c] and Panos Photopoulos [d]

University of West Attica, 250 Thivon & P. Ralli Str., T.K. 12241, Athens, Greece

Keywords: Digital Learning Objects, COVID-19, Technology, Cognitive Engagement, Formative Multiple-Choice Tests.

Abstract: This study explores how first-year Electronics students interact with digital learning resources. The information collected pertains to recorded lectures from the first year of the health crisis in 2020, short videos created at students' request in 2021, a test/video/test (T/V/T) application developed in 2021, and the Multiple-Choice Help (M-CH) application, which was created in 2024. The findings indicate that students' use of digital learning resources is often disconnected from the creators' intentions. Instead of being used systematically, these resources are typically accessed opportunistically, primarily during exam preparation. Factors such as background knowledge and study persistence significantly influence how students utilise these resources. For more complex applications, like the T/V/T and M-CH, students tend to select only those components that align with their immediate needs. High-performing students are generally more inclined to take advantage of digital learning resources, whereas low-performing students and those who do not attend lectures are less engaged. The digital resources employed proved insufficient to reconnect students with learning. Interestingly, satisfaction with a digital resource does not imply its usage or increased academic performance. While the usefulness of digital resources cannot be denied, they play only an assistive role in students’ learning.

1 INTRODUCTION

Information and Communication Technologies (ICT) are related to education in two interconnected ways. The first is the total transformation of education and its transfer from classrooms to the internet. The second is the cultivation of a student personality that fits the remote learning environment, namely the engaged student. For both objectives, technology is the purgatory of political choices and the vehicle for education privatisation.

International organisations treated the COVID-19 health crisis as an opportunity to convince society of the inevitable total transformation of education. UNESCO, the UN, and UNICEF were the active agents in depoliticising the transformation agenda (Facer & Selwyn, 2021; Sharma & Hudson, 2022). Taking advantage of their humanistic image, they became the ideal advocates of the irreversibility of online learning after the end of the pandemic.

[a] https://orcid.org/0009-0007-8128-0778

[b] https://orcid.org/0009-0006-2100-7385

[c] https://orcid.org/0009-0009-2480-0081

[d] https://orcid.org/0000-0001-7984-666X

Soon, it was found that students were

disappointed with online learning and, more

importantly, learning was marginal. A survey

conducted by the European Students' Union in April

2020 provided reliable evidence of students'

preference for in-person education (OECD, 2021).

An early World Bank publication concluded that

school closures resulted in significant learning losses

despite teachers' online efforts (Donnelly et al.,

2021). More recent research has provided ample

evidence of the learning losses that occurred during

forced online learning, undermining the sustainability

of the digital transformation proposal (Alasino et al.,

2024; Arenas & Gortazar, 2024; Durongkaveroj,

2023; Reich, 2020).

Digital learning resources are considered to promote discovery and create a new type of person: the engaged student (Ahshan, 2021). Evidence in favour of the positive impact of technology on learning comes from studies conducted in specific contexts and as part of planned research projects. However, such results cannot be generalised. Evidence from real classrooms has failed to establish a robust relationship between technology and learning, while studies on technology integration in real classrooms have yielded mixed results. Moreover, the novelty effect poses additional problems in establishing a clear relationship between education technology and learning (Fütterer et al., 2022).

Trizonis, V., Tsakiridis, O., Metafas, D. and Photopoulos, P.

Time to Face the Truth: Do Digital Applications Really Help Students Learn?

DOI: 10.5220/0013433000003932

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 17th International Conference on Computer Supported Education (CSEDU 2025) - Volume 1, pages 573-580

ISBN: 978-989-758-746-7; ISSN: 2184-5026

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

To address the lack of supporting evidence and

maintain a positive relationship between technology

and engagement, some researchers suggest that

previous studies have mistakenly searched for a link

between cognitive engagement and the frequency of

technology use. As a result, recent research has

shifted attention to the relationship between cognitive

engagement and the quality of technology integration

(Cattaneo et al., 2025; Chi et al., 2018; Fütterer et al.,

2022; Trask, 2024). The emphasis on the “quality of

integration” rejuvenates the discussion of the

relationship between technology and cognitive

engagement, overcoming the lack of evidence on the

cognitive engagement–technology relationship.

Generating results is as challenging in digital education as in analogue education. This study focuses on the evidence on how students interact with digital learning tools designed to enhance their learning. The idiosyncratic and sometimes opportunistic ways students interact with digital learning tools call into question whether the tools can play the role their creators envisage (Pitso, 2023). It is not the deployment of digital learning tools per se that generates results but the extent to which learning resources are incorporated into a carefully designed process that makes students put effort into their learning (Alahmadi, 2023; Biehler et al., 2024; Gao et al., 2024).

The pro-technology rhetoric and the forced

familiarisation of tutors with digital learning tools

cultivated the belief that technology can cure the

weaknesses of classroom teaching. Teachers must

correctly identify students’ educational needs and

develop or utilise appropriate digital resources

(Kostaki & Linardakis, 2024). Teachers develop

applications in tandem with high expectations

regarding their impact on student learning. However,

what digital learning resources mean to students

remains an open question. This study highlights how

students interacted with digital learning tools between

2020 and 2024. The research questions are the

following:

How did the students use the digital learning tools?

What impact did the digital learning tools have on students’ learning?

Did the digital learning tools benefit all students equally?

2 DATA AND SOURCES

The collected data covers the period from 2020 to

2024 and focuses on first-year Electrical and

Electronic Engineering courses. Information on video

viewing was extracted from YouTube analytics. In

2021, a questionnaire distributed to 72 students

captured feedback on the T/V/T application. Finally,

information regarding the usage of the M-CH

application was obtained from the application’s

database, and a survey gathered additional student

feedback.

3 RESULTS AND DISCUSSIONS

The following sections describe students’ interaction with a) the video lectures during 2020, the first year of the movement restrictions due to the COVID pandemic, b) a set of 24 short videos made available to the students in 2024, c) an application combining a pre-test, a video and a post-test made available in March 2021, and d) the custom web application “Multiple-Choice Help” consisting of multiple-choice tests with a “Help” button.

3.1 Recorded Video Lectures

During the pandemic, video lectures were considered a better solution than synchronous lectures. They made any-time, any-place learning a reality, aligning with OECD recommendations for “grassroots solutions” (p. 67), development of material accessible “at any time” (p. 121), learning tools of “quick and easy scalability” (p. 134), “multimodal pedagogical resources” (p. 165), and “recordings of daily video lessons” (p. 236) (OECD, 2022).

The video lectures discussed in this section refer

to remote teaching between March and June 2020. On

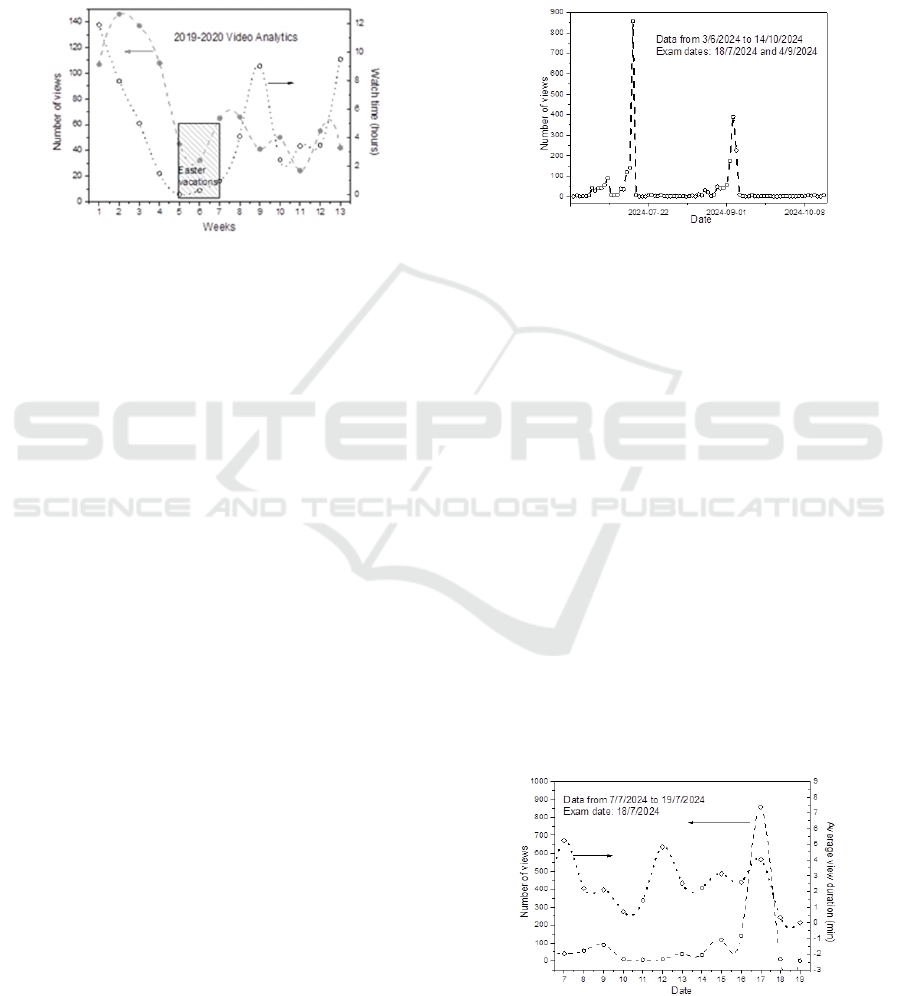

the basis of data collected from YouTube, Figure 1

shows the variation in watching time and number of

viewers over a period of 13 weeks.

The first two weeks were the exploration period

when the number of visitors increased but the total

viewing time decreased. The students accessed the

video lectures to decide whether they suited their


learning and personal needs. After the second week,

the number of viewers and total viewing time

decreased in tandem until the Easter holidays.

Viewing time increased before phase and final exams,

during the 9th and the 13th week, while the number

of viewers remained low. This suggests that a small

group of students engaged with the videos for longer

durations (Dermy et al., 2022).

Figure 1: The number of viewers decreased by 50% in 8 weeks. Watching hours varied with students’ duties (phase exams).

It was expected that posting recorded video

lectures on a virtual platform would enable the

students to download and watch them offline,

allowing them to review the learning material. Still,

recorded video lectures are often considered

ineffective teaching material because they can be

lengthy and do not promote the dialogue between

students and teachers. They attracted students’

attention only at the beginning of a course and before

the exams (Cavanlit et al., 2023; Karnad, 2013).

Although they were considered to facilitate flexible

learning, only a small number of students benefited

from them.

3.2 Targeted Video Material

Short videos are more effective than video lectures because they prevent viewers’ passivity and reduce mind-wandering. Segmenting a long text into small videos reduces the cognitive load during viewing and improves the structuring of the learning material (Seidel, 2024). This section discusses how students interacted with 24 short videos, each lasting approximately 10 minutes, uploaded to the Learning Management System (LMS) in July 2024. The videos addressed management and technology and covered half of the learning material for two first-year electives: “Management” and “Science, Technology, and Society.”

A calculation combining viewing times, average video duration, and the number of views per video showed that, on average, viewers watched 41% of the average video length, or about 3.5 minutes. Some videos garnered significantly more attention, with viewers watching, on average, 66% of their duration, while others received less attention, with viewers watching only about 13%. As illustrated in Figure 2, the students engaged with the videos a few days before the July and September exams as an alternative to reviewing course notes.
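The calculation behind these percentages can be sketched as follows. This is a minimal illustration in Python, not the procedure actually used in the study: the function name and the input figures are hypothetical, and the 8.5-minute mean video length is an assumption, roughly what 3.5 minutes at 41% would imply.

```python
def average_fraction_watched(total_watch_minutes, total_views, mean_video_minutes):
    """Return (mean minutes watched per view, fraction of the mean video length)."""
    if total_views <= 0 or mean_video_minutes <= 0:
        raise ValueError("views and video length must be positive")
    minutes_per_view = total_watch_minutes / total_views
    return minutes_per_view, minutes_per_view / mean_video_minutes


# Hypothetical inputs chosen only to illustrate the arithmetic;
# they are not the raw YouTube analytics of the study.
minutes_per_view, fraction = average_fraction_watched(
    total_watch_minutes=350.0, total_views=100, mean_video_minutes=8.5
)
print(f"{minutes_per_view:.1f} min per view, {fraction:.0%} of the mean video length")
```

Applied per video rather than to pooled totals, the same ratio would give per-video figures such as the 66% and 13% reported above.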

Figure 2: The students interacted with the videos

opportunistically only a few days before the July and

September exams.

Figure 3 depicts student engagement with videos

in July 2024. Following the videos' release on July 7,

2024, a small number of viewers watched the videos,

with an average viewing time of approximately 5

minutes, accounting for about 60% of the average

video duration. Over the following 12 days, viewing

times fluctuated, showing local peaks on July 7, 12,

and 17. The number of views remained low until July

17—the day before the examination—when views

surged to approximately 850, with an average

viewing time of 4 minutes per video. Students used

the videos reactively, not as a core resource, resulting

in viewing spikes before exams. Engagement with the

videos was not continuous and did not result in a

continuous rate of knowledge acquisition. However,

digital learning tools, including videos, can lead to

robust learning when they are integrated in carefully

designed learning events (Fütterer et al., 2022;

Koedinger et al., 2012).

Figure 3: The students engaged with the videos only the day

before the exams.


3.3 The “M-C Test/Video/M-C Test” (T/V/T) Application

Students learn from videos when they take control of

their learning process. Instructional strategies

prompting the students to complete tasks alongside

watching videos help them take control of their

learning. In a T/V/T exercise, a video was paired with

an objective test to enhance students’ active

engagement with video learning (Tan et al., 2022).

Other publications use self-explanations instead of

objective tests (Bai et al., 2022; Lawson & Mayer,

2021; Wang & Xu, 2024). A T/V/T exercise

proceeded as follows: First, students took a problem-

based multiple-choice test to self-assess their

knowledge of a specific topic and identify potential

areas of confusion (Photopoulos et al., 2021;

Photopoulos & Triantis, 2022). Students scoring

below 6.5 out of 10 were prompted to watch an online

video to clarify misunderstandings. After viewing the

video, they were further prompted to retake the test to

demonstrate improvement. Students who scored

above 7/10 did not have to proceed to the above steps

but were welcome to do so if they chose.
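The branching of a T/V/T exercise can be summarised in a short sketch. The code below is an illustration in Python under stated assumptions, not the application's actual implementation: the names are hypothetical, and because the text does not say how scores between 6.5 and 7 were handled, the sketch treats the follow-up steps as optional for every score of 6.5 or more.

```python
PROMPT_THRESHOLD = 6.5  # from the text: scores below 6.5/10 trigger the video prompt


def tvt_next_steps(first_test_score: float) -> dict:
    """Return what a T/V/T exercise asks of a student after the first test."""
    if not 0 <= first_test_score <= 10:
        raise ValueError("score must lie between 0 and 10")
    return {
        # True: the student is prompted to continue; False: the steps are optional.
        "prompted": first_test_score < PROMPT_THRESHOLD,
        # The remaining steps are the same for everyone; only the prompt differs.
        "steps": ["watch_video", "retake_test"],
    }


for score in (4.0, 6.0, 8.5):
    result = tvt_next_steps(score)
    label = "prompted" if result["prompted"] else "optional"
    print(f"score {score}: video and retest are {label}")
```

Nothing in this flow forces a student to follow the prompt, which is consistent with the selective usage reported in the following subsections.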

In the spring of 2021, approximately 90 students

participated in synchronous remote lectures in

Electronics. Attendance remained high throughout

the semester. The learning resources included text

files with solved and unsolved problems for each

teaching unit. Additionally, two Test/Video/Test

(T/V/T) exercises were uploaded to the Learning

Management System (LMS) for home study on April

4, 2021. These exercises were designed to help low-

performing students identify areas of confusion and

improve on them.

A questionnaire was distributed to the students to

gather feedback on the ways students interacted with

the T/V/T exercises. A total of 72 students responded.

Regarding their year of study, 51% were first-year

students, 25% were sophomores, and the remaining

students were from other years of study. Among the

participants, 89% were male, and 92% reported

attending lectures regularly. However, only 12

students (approximately 17%) reported studying the

exercises uploaded to the LMS.

3.3.1 The First T/V/T Exercise

Of 72 survey participants, 15 did not report their first

test grade and were excluded from the analysis.

Among the remaining 57 students, 26 scored lower

than 6.5/10 on the first test. Although these students

were directed to the video, only 10 reported watching

it (38%), and even fewer (31%) completed the third

step of the application, i.e., retaking the test. Of the eight

students who scored below 5/10 on the first test, only

3 watched the video, and just 2 (25%) took the

multiple-choice retest.

In contrast, 12 out of 31 students who scored 7 out

of 10 or higher on the first test watched the video and

retook the test, accounting for 39% even though the

application did not explicitly require this step. These

results indicate that high-performing students

benefited the most from the T/V/T application, while

students who performed poorly on the first test

showed less interest in completing the rest of the

application. Ultimately, the T/V/T application did not

equally benefit all students.
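The percentages reported for the first exercise follow directly from the counts given above. The sketch below recomputes them in Python; the dictionary layout and names are illustrative only, and only counts stated in the text are used.

```python
def percentage(part: int, whole: int) -> int:
    """Return part/whole as a percentage rounded to the nearest integer."""
    if whole <= 0:
        raise ValueError("whole must be positive")
    return round(100 * part / whole)


# (students who took the step, students in the group), as reported in the text
first_exercise = {
    "scored below 6.5, watched the video": (10, 26),
    "scored below 5, took the retest": (2, 8),
    "scored 7 or higher, watched the video and retook the test": (12, 31),
}

for label, (part, whole) in first_exercise.items():
    print(f"{label}: {part}/{whole} = {percentage(part, whole)}%")
```

The comparison of interest is the last line against the first two: a group that was not required to continue completed the exercise at least as often as the groups it was designed for.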

The students used the app idiosyncratically. Five

students took the two tests without watching the

video. Another four students took the first test and

watched the video but did not attempt the retest.

Among the 25 students who did not watch the videos,

11 cited a lack of time, and 12 said that their first test mark was above 7/10.

Despite these usage patterns, of the 20 text

responses on the video's effectiveness in enhancing

learning, only one student expressed dissatisfaction

about the video's length. In contrast, 11 participants

made positive remarks about the video, and eight

explained the reasons for not watching it, e.g., "I will

watch it during the weekend." Overall, the

participants rated the video's effectiveness in

facilitating learning with an average score of 4.2 on a

scale of 1 to 5.

3.3.2 The Second T/V/T Exercise

Out of the 72 students surveyed, 15 did not report

their first test grade and were excluded from the

analysis. Approximately 17% of the respondents fully

utilised the application, completing both tests and

watching the video regardless of the first test

performance.

Among the six students who scored less than

5/10, only one student (16.7%) reported that they

watched the video carefully and took the retest. Two

additional students took the retest without watching

the video, and one student skimmed through the video

before taking the retest.

Of the 12 students who scored between 5 and 6.5

out of 10, only two (16.7%) watched the video

carefully and took the multiple-choice retest. One

student skimmed through the video before retaking

the test, and another student only took the retest

without viewing the video.

Among the 39 students who scored above 7/10,

eleven self-reported watching the video carefully


(28%), and seven of them went on to complete the

multiple-choice retest (18%). Five students took the

retest without watching the video, and three skimmed

through it without taking the retest.

Fourteen students provided written feedback on

the effectiveness of the video in enhancing their

learning, and no negative comments were received.

On a scale from 1 to 5, participants rated the video’s

contribution to their learning, with an average score

of 4.2.

The overall results indicate that, on average, 50%

of the students engaged with the exercise components

selectively. Students practised autonomous learning,

choosing which parts of the application suited their

perceived needs (Tan et al., 2022). Among low-

performing students, the percentage who treated the

app as a cohesive learning resource and completed all

three steps decreased from 25% in the first exercise

to 17% in the second. These findings suggest that

regardless of the designers' intentions and students'

evaluations, the digital application did not effectively

benefit all students as intended (Fiorella, 2022).

Cognitive load theory is a candidate for

explaining how students' performance affects

cognitive engagement with digital applications.

Cognitive load significantly influences the

effectiveness of integrated learning strategies that

involve multimedia. Decoding, assimilating, and

accommodating alternative representations and

understanding their relation to physical quantities

demand a high cognitive effort (Lawson & Mayer,

2021). Multiple-answer problem-based questions

demand extensive pen-and-paper calculations to

make an informed choice (Photopoulos & Triantis,

2022). High performers have learned to manage high

cognitive loads and accept the challenge of

completing the applications, while low performers

may struggle. When learning is left to the discretion

of the unaided learner, it is often unclear whether the

cognitive load will foster engagement or hinder

learning. Research indicates that excessive cognitive

load negatively impacts knowledge transfer (Bai et

al., 2022), while reduced cognitive load may result in

insufficient cognitive engagement (Wang & Xu,

2024). Finding an 'optimum' cognitive load that

works well for all students is as challenging as

offering personalised learning in the class

environment.

Combining multiple-choice tests with video

lectures is seemingly an effective strategy for

engaging students with video content and providing

immediate feedback on their learning (Divjak et al.,

2024; Jarwopuspito et al., 2023; Tolonen et al., 2023).

However, some students do not benefit either from

videos or traditional classroom teaching (TS &

Thandeeswaran, 2024).

3.4 The “Multiple-Choice Help” (M-CH) Application

Multiple-Choice Help (M-CH) is a custom web application developed using PHP, with data managed through a MariaDB database. It presented a sequential series of multiple-choice questions, each accompanied by a help text. The application recorded various data, such as the correct answers, the time spent on each item, and the time spent on the help screen. Users accessed the application through a web browser. An Android mobile application was also experimentally created, utilising the Apache Cordova development environment. The tests were on topics from two first-year electives: "Management" and "Science, Technology, and Society" (Table 1). "An App for Everyone, Especially for Students Not Attending Lectures", said the announcement that made the application available on February 11, 2024. Three months later, a single-digit number of students had visited the app.

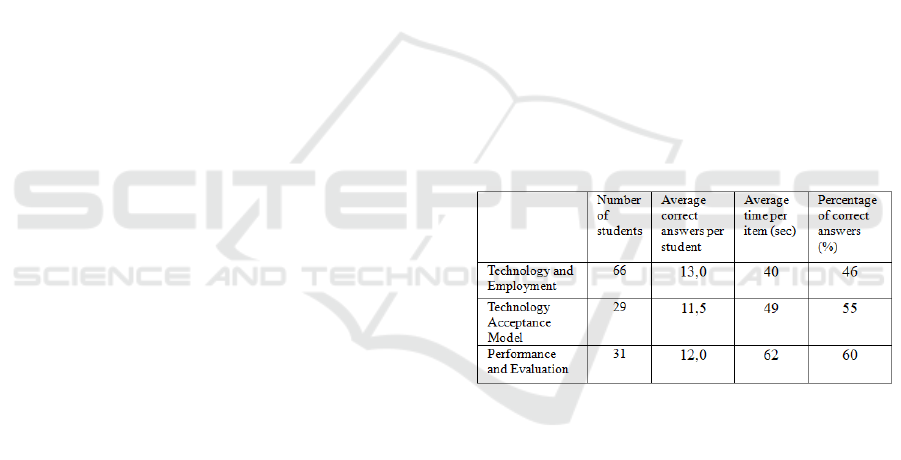

Table 1: Multiple-Choice Help Statistics.

A July 7, 2024, announcement, 11 days before the

exams, reminded students of the application. The

application aimed to scaffold students' learning using

the multiple-choice format. Table 1 presents the information collected. Of the 140 students who took the July 2024 exam, fewer than 50% visited the app.

The average time spent per item was about one

minute, indicating that students went through the

questions rather than paying attention to the 'help

texts'. The evidence indicates that the students used

the application to understand what exam questions to

expect rather than as a learning resource.

Additionally, the pass rate for students who used the

application was not significantly higher than the

overall pass rate, suggesting that the application did

not significantly impact student performance.
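The kind of per-item record described in this section, and the average time per item derived from it, can be sketched as follows. The sketch is in Python for brevity, although the application itself was written in PHP with MariaDB; the field names, the record layout, and the sample values are all hypothetical and do not reproduce the application's schema or data.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class ItemLog:
    """One student's interaction with one multiple-choice item (hypothetical layout)."""
    student_id: str
    item_id: int
    correct: bool
    seconds_on_item: float
    seconds_on_help: float  # 0 if the help text was never opened


def summarise(logs):
    """Aggregate usage indicators over a non-empty list of ItemLog records."""
    return {
        "mean_seconds_per_item": mean(log.seconds_on_item for log in logs),
        "share_with_help_opened": sum(log.seconds_on_help > 0 for log in logs) / len(logs),
        "share_correct": sum(log.correct for log in logs) / len(logs),
    }


# Invented sample records, for illustration only.
sample_logs = [
    ItemLog("s1", 1, True, 55, 0),
    ItemLog("s1", 2, False, 70, 12),
    ItemLog("s2", 1, True, 58, 0),
    ItemLog("s2", 2, True, 57, 0),
]
summary = summarise(sample_logs)
print(summary)
```

A mean of roughly one minute per item combined with a low share of opened help texts would correspond to the pattern reported above, in which students browse the questions rather than study the help texts.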

The preliminary results of a survey among

application users indicate a positive attitude. Eighty-five percent reported that the questions and "help

texts" were clear and understandable, and a similar

percentage said that the application helped them

learn. Ninety percent reported satisfaction with the

application, and 93% would recommend it to another

colleague.

4 CONCLUSIONS

How did the students use the digital learning tools?

The participants of this study used the digital learning

tools reactively, often focusing on responding to the

forthcoming assessment rather than engaging with the

content. The same pattern appeared in the case of the short, targeted videos published in 2024. During remote teaching in 2020, interest in video lectures dropped by 50% within four weeks, with sporadic viewing spikes appearing before exams. Students used the components of the T/V/T application selectively; some emphasised the multiple-choice tests, others the videos. Only a small percentage of

low-performing students completed the applications,

with 25% finishing the first exercise and 17%

completing the second. Additionally, only 50% of the

students who took the July 2024 exams used the M-

CH application. On average, students spent about one

minute on each M-C item, indicating limited use of

the 'help text.' Overall, the digital learning tools served as supplemental aids rather than primary learning resources, with usage patterns that were opportunistic and superficial. These behaviours reflect reactive, exam-driven usage rather than consistent, deep, learning-focused engagement (Boud & Molloy, 2013).

What impact did the digital learning tools have on

students’ learning? The tools had little impact on

students’ learning and maintained or reinforced

existing performance disparities rather than closing

gaps. Engagement patterns were determined by

exam-driven urgency and disparities in performance

rather than consistent, deep learning (Boud & Molloy,

2013). Low performers rarely took advantage of

applications’ remediation features, such as video

viewing and retests, resulting in minimal

improvement in learning. In the case of the M-CH

application, there was no significant improvement in

pass rates compared to non-users. Peaks in video

viewing before exams suggest surface-level

cramming rather than deep learning (Bjork et al.,

2013). Although the students were satisfied with the

applications, the learning tools did not ensure

learning gains for all.

Did the digital learning tools benefit all students

equally? The present study suggests that low-

performing and non-conventional students received

fewer benefits than high-performing students.

Evidence from the T/V/T application suggests that it

was more beneficial for high-performing students.

The M-CH application did not attract the attention of

the students who did not follow the lectures,

contradicting assertions about flexible digital

learning. Students often used tools idiosyncratically,

skipping videos or superficially interacting with

applications. The outcomes of the present study align

with publications showing that self-directed learning

tools, not paired with accountability mechanisms,

often widen achievement gaps (Koedinger et al.,

2012).

Several studies report positive outcomes from

introducing digital applications in higher education

(Tomić & Radovanović, 2024). Although some

students may improve in performance or feel satisfied

with digital learning tools, they do not ensure

effective learning for all (Santilli et al., 2025).

Moreover, the novelty effect further obscures safe

conclusions. In designed experiments, the use of an

application follows the research design. However, in

real life, students have the option to approach digital

tools idiosyncratically or opportunistically, contrary

to the designer’s intentions (Fütterer et al., 2022;

Tomberg et al., 2024).

This study suggests that, for the specific

community of learners, digital instructional resources

do not promote meaningful learning when left solely

to the student’s discretion. Apart from a portion of

students engaged with learning, the rest use the

instructional resources superficially and reactively to

meet examination requirements. For instructional

material to be effective, students must take ownership

of their learning process. Integrating the learning

tools in a carefully designed learning process

orchestrated by the instructor and implemented by the

students and the teacher can help achieve this

objective (Gao et al., 2024).

ACKNOWLEDGEMENTS

Publication and registration fees were covered by the

University of West Attica.

REFERENCES

Ahshan, R. (2021). A Framework of Implementing

Strategies for Active Student Engagement in

CSEDU 2025 - 17th International Conference on Computer Supported Education

578

Remote/Online Teaching and Learning during the

COVID-19 Pandemic. Education Sciences, 11(9), 483.

https://doi.org/10.3390/educsci11090483.

Alahmadi, A. A. S. (2023). The Experience of Using Online

Education in a Radiological Academic Department

During the Pandemic of Corona Virus COVID-19.

Journal of Radiology Nursing, 42(2), 236–240.

https://doi.org/10.1016/j.jradnu.2023.01.004.

Alasino, E., Ramírez, M. J., Romero, M., Schady, N., &

Uribe, D. (2024). Learning losses during the COVID-

19 pandemic: Evidence from Mexico. Economics of

Education Review, 98, 102492. https://doi.org/10.1016/

j.econedurev.2023.102492.

Arenas, A., & Gortazar, L. (2024). Learning loss one year

after school closures: evidence from the Basque

Country. SERIEs, 15(3), 235–258. https://doi.org/

10.1007/s13209-024-00296-4.

Bai, C., Yang, J., & Tang, Y. (2022). Embedding self-

explanation prompts to support learning via

instructional video. Instructional Science, 50(5), 681–

701. https://doi.org/10.1007/s11251-022-09587-4.

Biehler, R., Durand-Guerrier, V., & Trigueros, M. (2024).

New trends in didactic research in university

mathematics education. ZDM – Mathematics

Education, 56(7), 1345–1360. https://doi.org/10.1007/

s11858-024-01643-2.

Bjork, R. A., Dunlosky, J., & Kornell, N. (2013). Self-

regulated learning: Beliefs, techniques, and illusions. In

Annual Review of Psychology (Vol. 64). https://doi.org/

10.1146/annurev-psych-113011-143823.

Boud, D., & Molloy, E. (2013). Rethinking models of

feedback for learning: the challenge of design.

Assessment & Evaluation in Higher Education, 38(6),

698–712. https://doi.org/10.1080/

02602938.2012.691462.

Cattaneo, A., Schmitz, M.-L., Gonon, P., Antonietti, C.,

Consoli, T., & Petko, D. (2025). The role of personal

and contextual factors when investigating technology

integration in general and vocational education.

Computers in Human Behavior, 163, 108475.

https://doi.org/10.1016/j.chb.2024.108475.

Cavanlit, K. L., Encabo, E. M., & Vilbar, A. (2023). Using

Recorded Lectures in Teaching Higher Education in an

Online Remote Learning Context (pp. 187–194).

https://doi.org/10.1007/978-3-031-44097-7_20.

Chi, M. T. H., Adams, J., Bogusch, E. B., Bruchok, C.,

Kang, S., Lancaster, M., Levy, R., Li, N., McEldoon,

K. L., Stump, G. S., Wylie, R., Xu, D., & Yaghmourian,

D. L. (2018). Translating the ICAP Theory of Cognitive

Engagement Into Practice. Cognitive Science, 42(6),

1777–1832. https://doi.org/10.1111/cogs.12626.

Dermy, O., Boyer, A., & Roussanaly, A. (2022). A

Dynamic Indicator to Model Students’ Digital

Behavior. Proceedings of the 14th International

Conference on Computer Supported Education, 163–

170. https://doi.org/10.5220/0011039400003182.

Divjak, B., Svetec, B., & Horvat, D. (2024). How can valid

and reliable automatic formative assessment predict the

acquisition of learning outcomes? Journal of Computer

Assisted Learning, 40(6), 2616–2632. https://doi.org/

10.1111/jcal.12953.

Donnelly, R., Patrinos, H. A., & Gresham, J. (2021). The

Impact of COVID-19 on Education – Recommendations

and Opportunities for Ukraine.

https://www.worldbank.org/en/news/opinion/2021/04/

02/the-impact-of-covid-19-on-education-

recommendations-and-opportunities-for-ukraine.

Durongkaveroj, W. (2023). Learning loss due to university

closures during the COVID-19 pandemic: Evidence

from Thailand’s largest public university. Thailand

and The World Economy, 41(2), 103–122.

https://so05.tci-thaijo.org/index.php/TER/article/ view/

265360.

Facer, K., & Selwyn, N. (2021). Digital technology and the

futures of education-towards “non-stupid” optimism.

Background Paper for the Futures of Education

Initiative, April.

Fütterer, T., Scheiter, K., Cheng, X., & Stürmer, K. (2022).

Quality beats frequency? Investigating students’ effort

in learning when introducing technology in classrooms.

Contemporary Educational Psychology,69,102042.

https://doi.org/10.1016/j.cedpsych.2022.

Gao, Q., Tong, M., Sun, J., Zhang, C., Huang, Y., & Zhang,

S. (2024). A Study of Process-Oriented Guided Inquiry

Learning (POGIL) in the Blended Synchronous Science

Classroom. Journal of Science Education and

Technology. https://doi.org/10.1007/

s10956-024-10155-3.

Jarwopuspito, Syauqi, K., Sufyan, A., Pamungkas, A. A., &

Saifan, F. A. (2023). The handout and video learning of

manufacture engineering drawing. 050018.

https://doi.org/10.1063/5.0114536.

Karnad, A. (2013). Student use of recorded lectures, A

report reviewing recent research into the use of lecture

capture technology in higher education, and its impact

on teaching methods and attendance.

https://eprints.lse.ac.uk/50929/1/Karnad_Student_use_recorded_2013_author.pdf.

Koedinger, K. R., Corbett, A. T., & Perfetti, C. (2012). The

Knowledge‐Learning‐Instruction Framework: Bridging

the Science‐Practice Chasm to Enhance Robust Student

Learning. Cognitive Science, 36(5), 757–798.

https://doi.org/10.1111/j.1551-6709.2012.01245.x.

Kostaki, S.-M., & Linardakis, M. (2024). Revealing

primary teachers’ preferences for general

characteristics of ICT-based teaching through discrete

choice models. Education and Information

Technologies. https://doi.org/10.1007/s10639-024-13182-0.

Lawson, A. P., & Mayer, R. E. (2021). Benefits of Writing

an Explanation During Pauses in Multimedia Lessons.

Educational Psychology Review, 33(4), 1859–1885.

https://doi.org/10.1007/s10648-021-09594-w.

OECD. (2021). The State of Higher Education: One Year

into the COVID-19 Pandemic. https://doi.org/10.1787/83c41957-en.

OECD. (2022). How Learning Continued during the

COVID-19 Pandemic: Global Lessons from Initiatives

to Support Learners and Teachers

(S. Vincent-Lancrin,

C. Cobo Romaní, & F. Reimers, Eds.). OECD

Publishing. https://doi.org/10.1787/bbeca162-en.

Photopoulos, P., & Triantis, D. (2022). Problem-Solving

Multiple-Response Tests: Guessing is not a Favourable

Strategy. International Journal of Learning and

Teaching, 64–70. https://doi.org/10.18178/ijlt.8.1.64-70.

Photopoulos, P., Tsonos, C., Stavrakas, I., & Triantis, D.

(2021). Preference for Multiple Choice and Constructed

Response Exams for Engineering Students with and

without Learning Difficulties. Proceedings of the 13th

International Conference on Computer Supported

Education, 220–231. https://doi.org/10.5220/0010462502200231.

Pitso, T. (2023). Telagogy: New theorisations about

learning and teaching in higher education post-Covid-19 pandemic. Cogent Education, 10(2).

https://doi.org/10.1080/2331186X.2023.2258278.

Reich, J. (2020). Failure to Disrupt: Why Technology Alone

Can’t Transform Education. Harvard University Press.

Santilli, T., Ceccacci, S., Mengoni, M., & Giaconi, C.

(2025). Virtual vs. traditional learning in higher

education: A systematic review of comparative studies.

Computers & Education, 227, 105214. https://doi.org/10.1016/j.compedu.2024.105214.

Seidel, N. (2024). Short, Long, and Segmented Learning

Videos: From YouTube Practice to Enhanced Video

Players. Technology, Knowledge and Learning, 29(4),

1965–1991. https://doi.org/10.1007/s10758-024-09745-2.

Sharma, A., & Hudson, C. (2022). Depoliticization of

educational reforms: the STEM story. Cultural Studies

of Science Education, 17(2), 231–249. https://doi.org/10.1007/s11422-021-10024-0.

Tan, A. J. Y., Davies, J., Nicolson, R. I., & Karaminis, T.

(2022). A technology-enhanced learning intervention

for statistics in higher education using bite-sized video-based learning and precision teaching. Research and

Practice in Technology Enhanced Learning, 18, 001.

https://doi.org/10.58459/rptel.2023.18001.

Tolonen, M., Arvonen, M., Renko, M., Paakkonen, H.,

Jäntti, H., & Piippo-Savolainen, E. (2023). Comparison

of remote learning methods to on-site teaching -

randomized, controlled trial. BMC Medical Education,

23(1), 778. https://doi.org/10.1186/s12909-023-04759-3.

Tomberg, S., Dewispelaere, W., Wogu, A., & Wang, N.

(2024). Attendance at live vs virtual didactic sessions in

a U.S. emergency medicine residency. Education for

Health, 37(4), 383–388. https://doi.org/10.62694/efh.2024.150.

Tomić, B., & Radovanović, N. (2024). The application of

artificial intelligence in the context of the educational

system in Serbia, with a special focus on religious

education. Socioloski Pregled, 58(2), 435–459.

https://doi.org/10.5937/socpreg58-48911.

Trask, G. M. (2024). Improving accessibility, engagement

and usefulness of online information literacy tutorials

based on student feedback. Performance Measurement

and Metrics, 25(2), 109–116. https://doi.org/10.1108/PMM-11-2023-0040.

TS, S. K., & Thandeeswaran, R. (2024). Adapting video-based programming instruction: An empirical study

using a decision tree learning model. Education and

Information Technologies, 29(11), 14205–14243.

https://doi.org/10.1007/s10639-023-12390-4.

Wang, L., & Xu, G. (2024). Self-explanation prompts in

video learning: an optimization study. Education and

Information Technologies. https://doi.org/10.1007/s10639-024-12806-9.

CSEDU 2025 - 17th International Conference on Computer Supported Education
