Ontology-Driven LLM Assistance for Task-Oriented Systems Engineering

Jean-Marie Gauthier, Eric Jenn and Ramon Conejo

IRT Saint Exupéry, 3 Rue Tarfaya, 31400 Toulouse, France

{firstname.lastname}@irt-saintexupery.com

Keywords:

Systems Engineering, Large Language Model, Agent, Systems Modelling, Modelling Assistant, Ontology.

Abstract:

This paper presents an LLM-based assistant integrated within an experimental modelling platform to sup-

port Systems Engineering tasks. Leveraging an ontology-driven approach, the assistant guides engineers

through Systems Engineering tasks using an iterative prompting technique that builds task-specific context

from prior steps. Our approach combines prompt engineering, few-shot learning, Chain of Thought reason-

ing, and Retrieval-Augmented Generation to generate accurate and relevant outputs without fine-tuning. A

dual-chatbot system aids in task completion. The evaluation of the assistant’s effectiveness in the development

of a robotic system demonstrates its potential to enhance Systems Engineering process efficiency and support

decision-making.

1 INTRODUCTION

1.1 Context and Motivations

Model-Based Systems Engineering (MBSE), as a

multidisciplinary approach, offers the promise to

structure engineering data with the support of mod-

elling. It aims at facilitating activities encompassing

system requirements, design, analysis, as well as ver-

ification and validation, during the conceptual design

phase throughout the subsequent development stages

and life cycle phases (INCOSE, 2007).

At the same time, one of the most notable ad-

vancements in the artificial intelligence domain is the

emergence of Large Language Models (LLMs). Pow-

ered by deep learning techniques, those models have

demonstrated exceptional capabilities in generating

human-like text and images from natural language input.

These models, exemplified by OpenAI’s Generative

Pre-trained Transformer (GPT) series (Brown et al.,

2020), have garnered widespread attention for their

ability to generate natural language text or images at

an unprecedented scale (Ramesh et al., 2021).

Despite the increasing adoption of MBSE ap-

proaches in the industry, its full deployment remains

limited due to various challenges, ranging from tech-

nological hurdles to organizational barriers (Chami

and Bruel, 2018). These challenges often stem from

the complexity of integrating MBSE into existing

workflows and the need for specialized knowledge

and training. To overcome these obstacles, one of the

main leads is the integration of Artificial Intelligence

(AI) into SE and MBSE processes (Chami et al.,

2022). The intersection of MBSE and AI presents an

opportunity to improve the way systems engineering

tasks such as requirements writing, models authoring,

refinement, and analysis are performed.

Specifically, the integration of Large Language

Models (LLMs) with Systems Engineering (SE) and

Model-Based Systems Engineering (MBSE) offers

the potential to automate and enhance key SE and

MBSE tasks (Alarcia et al., 2024). A significant

number of applications of AI to MBSE use cases

have been identified in the literature (Schräder et al.,

2022), and within the Systems Engineering commu-

nities (SELive, 2023). Moreover, industrial compa-

nies have initiated exploration and experimentation to

understand the possibilities and constraints associated

with implementing AI, and especially GPT-like mod-

els, in the systems engineering domain. In particu-

lar, LLMs were tested as an assistant from require-

ments authoring (Andrew, 2023) (Tikayat Ray et al.,

2023) and more complex Requirements Engineering

tasks (Arora et al., 2024), to MBSE assistance, such

as SysML V2 model editing (Fabien, 2023) and PlantUML

model generation from prompts (Cámara et al.,

2023). These examples show that LLMs can stream-

line and enhance SE and MBSE processes by en-

abling automated generation and refinement of mod-

els from natural language inputs.

Gauthier, J.-M., Jenn, E. and Conejo, R.

Ontology-Driven LLM Assistance for Task-Oriented Systems Engineering.

DOI: 10.5220/0013441100003896

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 13th International Conference on Model-Based Software and Systems Engineering (MODELSWARD 2025), pages 383-394

ISBN: 978-989-758-729-0; ISSN: 2184-4348

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

However, while LLMs have the potential to reduce

manual effort and increase the accuracy of SE and

MBSE, practical challenges remain. Indeed, the need

for reliable traceability, precision, and consistency in

SE and MBSE tasks emphasizes the importance of

further research on how LLMs can effectively sup-

port these objectives without extensive fine-tuning. In

addition, managing large datasets, handling context

limits, and achieving seamless integration within SE-

specific processes, are challenges that need to be ad-

dressed.

1.2 Research Questions and

Contributions

To address the limits identified in the previous section, we investigate three research questions. They aim to identify the contexts, Systems Engineering phases, and activities in which LLMs can be effectively applied and add value, and to assess their ability to enhance task completion, improve efficiency, reduce human error, and support the consistency and completeness of SE data.

RQ1: Which Systems Engineering activities can ben-

efit the most from the integration of LLMs?

RQ2: What implementation strategies can be em-

ployed to effectively integrate LLMs into these SE

activities?

RQ3: What tangible benefits do LLMs offer for en-

hancing the identified Systems Engineering activi-

ties?

To address these questions, the paper introduces an

experimental platform designed to evaluate the inte-

gration of LLMs into SE tasks, particularly in the con-

text of requirements engineering. While the focus re-

mains on textual artifacts, such as needs and require-

ments, the study also explores the potential for scala-

bility and adaptability to other SE domains.

Our contribution presents results obtained in the AIDEAS and EasyMOD projects carried out at IRT Saint Exupéry. AIDEAS aims to evaluate the benefits of LLM-based conversational agents for carrying out selected engineering tasks in the field of aerospace and space systems, including SE tasks, technological surveys, etc. Besides providing technical solutions to implement those agents using LLMs, a significant part of the study focuses on understanding how tasks are distributed between human and nonhuman agents and how this collaboration can be evaluated and, eventually, improved. The contributions of our study are as follows:

1. Processes and Tasks Identification: We use an LLM to identify the relevant ISO 15288 technical processes (ISO, 2015) necessary to meet an engineering objective expressed by a systems engineer. The LLM further decomposes each process into specific tasks, creating a task hierarchy. This sets the foundation for thorough task completion and traceability throughout the project.

2. Ontology-Driven Task Assistant: Using a Sys-

tems Engineering ontology, the LLM identi-

fies the required inputs—such as specific data,

stakeholder requirements, or prior analysis re-

sults—that are essential to initiate and perform

the task effectively. Additionally, the LLM de-

termines the outputs that each task should pro-

duce, such as technical specifications, refined re-

quirements, or system component definitions. By

defining tasks with these input and output expec-

tations, the ontology-driven approach enhances

the LLM’s ability to deliver precise, relevant guid-

ance and ensures that each task contributes sys-

tematically to the overall engineering objectives.

3. Completeness Checking and Task Validation:

We implemented a completeness checker to en-

sure each task meets SE criteria and is thoroughly

documented, leveraging LLM capabilities for fi-

nal validation.

4. Dual-Chatbot Assistance: Two assistant chat-

bots were developed. A guided questioning as-

sistant helps engineers refine requirements, clar-

ify specifications, and streamline data manage-

ment, while a task-specific assistant provides

open-ended support, expanding the LLM’s capac-

ity for structured and unstructured queries.

1.3 Paper Overview

The remainder of this paper is organized as follows.

Section 2 provides background on Large Language

Models (LLMs), including their foundational princi-

ples and relevant advancements. Section 3 outlines

our methodology and presents the approach for im-

plementing the ontology-driven LLM solution. Sec-

tion 4 showcases insights from a robotic system case

study, and discusses the results. Section 5 reviews

related work on the integration of LLMs for Model-

Based Systems Engineering (MBSE). Finally, Section

6 concludes the paper and outlines future work.

MBSE-AI Integration 2025 - 2nd Workshop on Model-based System Engineering and Artificial Intelligence

2 BACKGROUND ON LANGUAGE

MODELS

In this section, we present the foundational knowl-

edge and context required to comprehend the follow-

ing content. First, foundations on LLMs are pre-

sented. Then, a focus is made on Augmented Lan-

guage Models (ALMs) that attempt to address the

limitations of LLMs.

2.1 Large Language Models

Large Language Models (LLMs) are a class of AI

models that predict the next token of a sequence, that is, the next word

or "piece of word", based on the input text. They work

by leveraging advanced deep learning techniques,

specifically transformer architectures, to process and

generate human-like text. The following paragraphs

explain the main concepts related to LLMs.

2.1.1 Transformer Architecture

LLMs are based on the Transformer architecture,

which uses self-attention mechanisms and feed-

forward neural networks to process input efficiently

and capture long-range dependencies (Vaswani et al.,

2017). The self-attention mechanism allows the

model to weigh the importance of different parts of a

sequence, enabling a nuanced understanding of con-

text and relationships between tokens. Input text

is decomposed into smaller units called "tokens",

like words or sub-words, and represented as high-

dimensional vectors, allowing the model to predict the

next token in a sequence based on preceding context.

2.1.2 Pre-Training and Fine-Tuning

LLMs are pre-trained on vast text datasets, learning to

predict the next token in a sequence, which helps them

grasp syntax, semantics, and contextual relationships.

This large-scale training enables them to generate co-

herent, contextually relevant text. They can then be

fine-tuned on smaller, task-specific datasets to adapt

their capabilities for specialized applications.

2.1.3 Prompting, Zero-Shot, and Few-Shot

Settings

The effective use of LLMs is based on prompt engi-

neering, where the input prompts guide the model’s

text generation. Creating optimal prompts requires

an understanding of the model’s capabilities and limitations,

which is still a research problem in its own right.

Researchers experiment with prompt length, formats,

and contextual cues to improve the quality and rel-

evance of the output. Well-engineered prompts are

particularly important in specialized fields, such as

Systems Engineering, where accurate and contextu-

ally appropriate responses are essential.

Prompting techniques are typically divided into

zero-shot and few-shot methods. In a zero-shot set-

ting (Kojima et al., 2022), the model generalizes to

unseen tasks without specific training examples, re-

lying solely on prior knowledge. In contrast, few-

shot learning (Brown et al., 2020) involves providing

a small number of task examples to help the model

adapt to and perform specific tasks more effectively.
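The difference between the two settings can be sketched in a few lines of Python. This is only an illustrative sketch: the chat-style message layout, the function name `build_messages`, and the example need and requirement are assumptions made for the illustration, not part of the platform described in this paper.

```python
from typing import Dict, List, Sequence, Tuple

Message = Dict[str, str]


def build_messages(system_role: str,
                   query: str,
                   examples: Sequence[Tuple[str, str]] = ()) -> List[Message]:
    """Build a chat-style message list.

    With no examples this is a zero-shot prompt; with a few
    (input, expected output) pairs it becomes a few-shot prompt.
    """
    messages = [{"role": "system", "content": system_role}]
    for example_input, example_output in examples:
        # Each example is replayed as a past user/assistant exchange.
        messages.append({"role": "user", "content": example_input})
        messages.append({"role": "assistant", "content": example_output})
    messages.append({"role": "user", "content": query})
    return messages


role = "You are a systems engineer writing stakeholder requirements."
query = "Need: the robot must not lose the visitor."

zero_shot = build_messages(role, query)
few_shot = build_messages(
    role,
    query,
    examples=[(
        "Need: visitors want to reach their meeting room.",
        "The robot shall escort the visitor to the requested room.",
    )],
)
```

The zero-shot list contains only the role and the query, whereas the few-shot list inserts one worked example before the query.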

2.2 Augmented Language Models

LLMs excel in capturing complex linguistic patterns,

understanding context, and generating human-like

text across various tasks. However, they face chal-

lenges, including the generation of non-factual yet

plausible responses (hallucinations) and the need for

large-scale storage to handle task-related knowledge

beyond their limited context. These issues stem from

the LLMs’ reliance on a single parametric model and

constrained context size, which impacts their accu-

racy and practical use.

To address these limitations, Augmented Lan-

guage Models (ALMs) have emerged. ALMs, as

defined by (Lecun et al., 2023), integrate reasoning

and external tools to enhance the model’s capabili-

ties. Reasoning strategies in ALMs decompose com-

plex tasks into manageable subtasks, often leveraging

step-by-step processes (Qiao et al., 2022). Techniques

like Chain of Thought (CoT) prompting have been

shown to significantly improve reasoning, especially

for multi-step tasks, by guiding the model through

a structured approach that enhances its performance

over traditional prompting methods (Wei et al., 2022).

2.3 Assessment Regarding Our

Contributions

In this study, we leverage Large Language Models

(LLMs) in their base form, without fine-tuning, due

to the substantial computational resources and spe-

cialized Systems Engineering (SE) datasets required

for fine-tuning, which are not available. To overcome

this limitation, our approach integrates several tech-

niques to enhance the LLM’s ability to perform SE

tasks effectively:

• Prompt Engineering and Few-Shot Setting: We

use prompt engineering to tailor the LLM’s be-

havior to Systems Engineering tasks, guiding it to

generate SE-specific responses. Few-shot learn-

ing is employed to enhance the model’s general-

ization across various SE tasks by providing a few

examples, enabling the LLM to instantiate data

for new, unseen tasks efficiently.

• Chain of Thought (CoT) Reasoning: We im-

prove the model’s performance by applying CoT

reasoning to break complex tasks down into

smaller steps.

• Retrieval-Augmented Generation (RAG): We

use RAG to enable the LLM to access external,

task-specific knowledge on demand. By combin-

ing generative capabilities with information re-

trieval, the RAG ensures more accurate, contex-

tually relevant outputs without the need for time-

consuming fine-tuning.
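To make the retrieval step concrete, the following Python sketch ranks a few knowledge snippets against a query and prepends the best matches to the prompt. It is a toy illustration under stated assumptions: the bag-of-words cosine similarity stands in for whatever retriever a real system would use (typically embedding-based), and the function names and snippets are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> Counter:
    """Lower-case bag of words (a stand-in for a real embedding)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bags of words."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Return the k documents most similar to the query."""
    q = tokenize(query)
    ranked = sorted(documents, key=lambda d: cosine(q, tokenize(d)), reverse=True)
    return ranked[:k]


def augment_prompt(query: str, documents: List[str], k: int = 2) -> str:
    """Prepend the retrieved snippets to the user's question."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Use the following context:\n{context}\n\nQuestion: {query}"


snippets = [
    "A stakeholder requirement shall be traced to at least one need.",
    "The robot returns to its parking area after the mission.",
    "Transformers use self-attention.",
]
prompt = augment_prompt("How is a stakeholder requirement traced to a need?", snippets, k=1)
```

Only the retrieved snippets enter the prompt, which keeps the context small while still grounding the answer in task-specific knowledge.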

3 LLM-BASED ASSISTANT FOR

SYSTEMS ENGINEERING

This paper presents an approach that leverages LLMs to assist in Systems Engineering processes. Our approach combines LLMs with an SE ontology, generating task-specific guidance aligned with user-defined SE objectives. The LLM decomposes each SE process into tasks, identifies required inputs and outputs, sets completion criteria to ensure alignment with SE standards, and provides guidance to achieve the tasks.

The SE assistant is integrated in a systems engi-

neering platform called EasyMOD. This platform en-

ables engineers to create system architectures, auto-

matically extract views for interactive review docu-

ment creation, and map system analysis results onto

system architectures. In this paper, we focus on the

assistant component of EasyMOD. An overview of

our systems engineering assistance approach is pre-

sented, particularly emphasizing the iterative prompt-

ing approach that builds the context for specific sys-

tems engineering tasks. By iterative prompting, we

mean a step-by-step approach where each task is

guided by context constructed from the outputs of

previous steps, ensuring continuity and informed pro-

gression.

3.1 Processes and Tasks Identification

The first step of our approach involves capturing the systems engineering objective defined by the engineer and proposing an appropriate process to achieve it. Figure 1 outlines the workflow, using ISO 15288 technical processes as a baseline. It begins with two types of prompts. The context prompt (also known as system prompt) specifies the Systems Engineering role that the AI should assume while generating responses. The constraints prompt (also known as user prompt) provides specific instructions or queries that the LLM must address within the context established by the context prompt. It further captures the engineer’s SE objective as the starting prompt, the tasks to be performed by the LLM, and the expected JSON answer format.

[Figure 1 diagram. Context Prompt: SE Role. Constraints Prompt: SE Objective, Tasks to Perform, Expected Answer Format. Steps: Objective Assessment & SoI Identification; if the SE objective is in the SE domain and an SoI is found, Check and identify ISO15288 Technical Processes. Output: Identified ISO15288 Processes.]

Figure 1: Prompts for ISO 15288 processes identification.

Using these inputs, the assistant validates whether

the SE objective aligns with the Systems Engineering

domain and identifies the corresponding System of In-

terest (SoI). Upon successful assessment, the assistant

identifies and outputs the relevant ISO 15288 techni-

cal processes, which form the foundation for subse-

quent task decomposition and validation.
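The gating described above can be sketched as follows. This is a hedged illustration, not the platform's code: the JSON keys (`in_se_domain`, `soi`, `processes`), the function names, and the canned answers are assumptions, and no LLM is actually called.

```python
import json
from typing import Dict, List


def build_process_identification_prompts(se_role: str,
                                         se_objective: str) -> List[Dict[str, str]]:
    """Assemble the context (system) and constraints (user) prompts."""
    constraints_prompt = (
        f"SE objective: {se_objective}\n"
        "Tasks to perform:\n"
        "1. Check that the objective belongs to the Systems Engineering domain.\n"
        "2. Identify the System of Interest (SoI).\n"
        "3. List the relevant ISO 15288 technical processes.\n"
        # Hypothetical answer schema, chosen for this sketch only.
        'Answer with JSON only: {"in_se_domain": true or false, '
        '"soi": string or null, "processes": [strings]}'
    )
    return [
        {"role": "system", "content": se_role},
        {"role": "user", "content": constraints_prompt},
    ]


def parse_process_answer(raw_answer: str) -> List[str]:
    """Return the processes only if the objective passed both checks."""
    answer = json.loads(raw_answer)
    if not answer.get("in_se_domain") or not answer.get("soi"):
        return []  # objective rejected: no process is proposed
    return list(answer.get("processes", []))


messages = build_process_identification_prompts(
    "You are a senior systems engineer.",
    "Specify and design a robot that guides visitors.",
)
accepted = parse_process_answer(
    '{"in_se_domain": true, "soi": "Guide robot", '
    '"processes": ["Stakeholder Needs and Requirements Definition", '
    '"System Requirements Definition"]}'
)
rejected = parse_process_answer('{"in_se_domain": false, "soi": null, "processes": []}')
```

Asking for a fixed JSON shape lets the calling code apply the domain and SoI checks deterministically before any process list is shown to the engineer.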

Then the Systems Engineering processes are bro-

ken down into tasks. The SE role, objective, and iden-

tified list of processes are used to establish the context

for the assistant. The user selects one of the processes

from this list, which, with the tasks to perform and the

expected answer format, guides the LLM to generate

a task breakdown.

3.2 Ontology-Driven Task Assistant

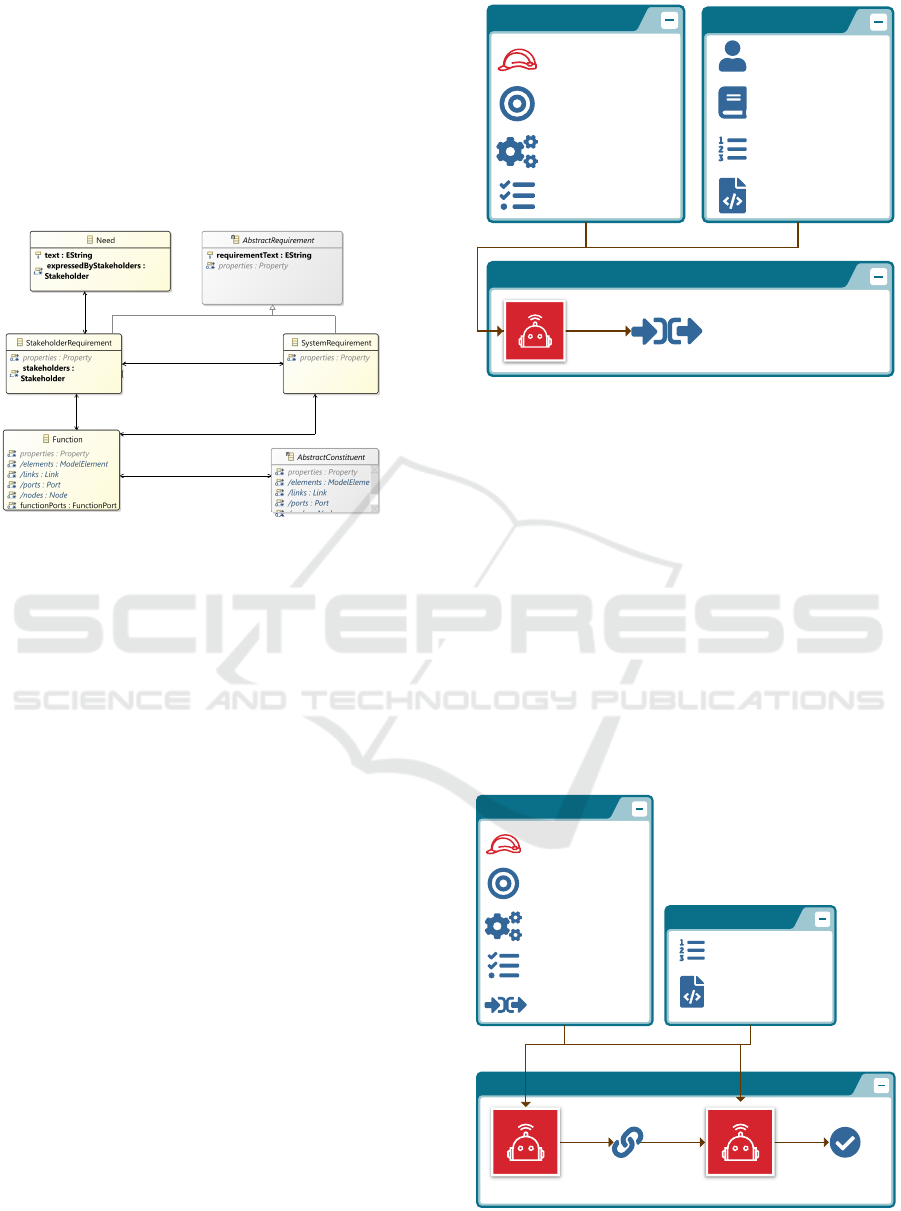

Central to our approach is an SE ontology called the Systems

Engineering Information Model (SEIM), which

the assistant uses to extract and define the essential

concepts for each Systems Engineering task. By in-

tegrating this ontology, the assistant has access to a

structured description of the relationships between the

key concepts in SE. An excerpt of this ontology is

shown in Figure 2. The concepts in this example in-

clude Needs, Stakeholder Requirements, Functions,

System Requirements, and Abstract Constituents, all

of which are linked with associations to establish

traceability.

[Figure 2 diagram. Association ends shown between the ontology concepts: traceToStakeholderRequirements [0..*], traceToNeeds [1..*], satisfyStakeholderRequirements [0..*], isSatisfiedByFunction [0..1], satisfySystemRequirements [0..*], isSatisfiedByFunction [0..1], derivedFromStakeholderRequirements [0..*], derivedIntoSystemRequirements [0..*], allocatedFunctions [0..*], functionAllocationToConstituent [0..1].]

Figure 2: Ontology Excerpt as a Metamodel.

Additionally, certain concepts within the ontology

are supplemented with specific documentation tai-

lored for the LLM. For example, stakeholder require-

ments and system requirements are enriched with best

practices and standardized patterns to ensure the writ-

ing of high-quality requirements.
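One possible way to hand such an ontology excerpt to an LLM is to serialize its associations as text. The Python sketch below is an assumption-laden illustration: the association names and multiplicities come from the excerpt, but the source and target concept of each association are inferred from the names, and the class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Association:
    name: str
    source: str           # assumed owning concept (inferred from the name)
    target: str           # assumed referenced concept (inferred from the name)
    lower: int
    upper: Optional[int]  # None stands for "*"
    doc: str = ""         # optional guidance written for the LLM


SEIM_EXCERPT = [
    Association("traceToNeeds", "StakeholderRequirement", "Need", 1, None,
                doc="Every stakeholder requirement traces to at least one need."),
    Association("traceToStakeholderRequirements", "Need", "StakeholderRequirement", 0, None),
    Association("derivedIntoSystemRequirements", "StakeholderRequirement",
                "SystemRequirement", 0, None),
    Association("functionAllocationToConstituent", "Function", "AbstractConstituent", 0, 1),
]


def multiplicity(association: Association) -> str:
    upper = "*" if association.upper is None else str(association.upper)
    return f"[{association.lower}..{upper}]"


def render_for_prompt(ontology: List[Association]) -> str:
    """Serialize the associations as one line each, ready to embed in a prompt."""
    lines = []
    for a in ontology:
        line = f"{a.source} --{a.name} {multiplicity(a)}--> {a.target}"
        if a.doc:
            line += f"  # {a.doc}"
        lines.append(line)
    return "\n".join(lines)


ontology_text = render_for_prompt(SEIM_EXCERPT)
```

A line-per-association rendering keeps the multiplicities visible to the model, and the `doc` field gives a place for the LLM-specific documentation attached to a concept.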

Figure 3 illustrates how the assistant uses the ontology to identify the engineering items that must be consumed and produced by each task, ensuring alignment with the overall system objectives. The context prompt includes details such as the SE Role, the SE Objective, the list of available processes and tasks, and the user-selected task for focused assistance. The constraints prompt includes the SEIM ontology, the tasks the assistant shall perform, and the required output format. The assistant uses these inputs to identify the necessary SEIM Input/Output (I/O) concepts related to the selected task.

For example, the task of translating stakeholder

needs into stakeholder requirements requires the in-

put concepts of Stakeholders and Needs and produces

Stakeholder Requirements as the output.

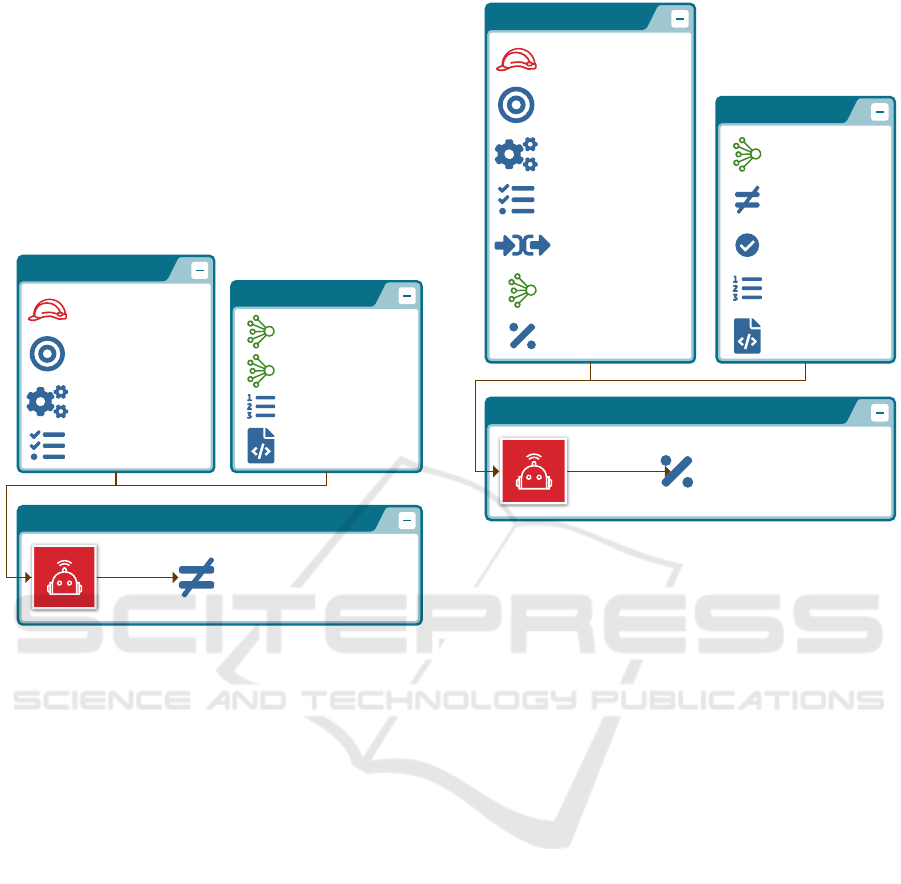

3.3 Task Completeness Checking

After tasks are defined, ensuring their completeness and correctness is crucial. This is achieved by the completeness checker, which evaluates whether all required task outputs have been produced based on predefined criteria. The checker leverages LLMs to identify missing elements and provide feedback for iterative refinement of the SE task.

[Figure 3 diagram. Context Prompt: SE Role, SE Objective, List of Processes & Selected Process, List of Tasks, User Selected Task. Constraints Prompt: SEIM Ontology, Tasks to Perform, Expected Answer Format. Step: Identify Needed SEIM I/O Concepts. Output: Identified SEIM I/O Concepts.]

Figure 3: Prompts for task’s I/O identification.

To extract the completeness criteria, we use a

Chain of Thought (CoT). The first phase involves

defining the SE task’s completeness criteria. We pro-

pose to use the association links between the task’s

output concepts and its input concepts, as illustrated

in Figure 4. For instance, if the task aims at produc-

ing stakeholder requirements from needs, then a com-

pleteness criterion is to ensure that each need is traced

to stakeholder requirements. Once these traceability

links are established, the assistant is further tasked

with defining completeness criteria grounded in these

links, while also incorporating additional criteria re-

lated to the data generated by the task.

[Figure 4 diagram. Context Prompt: SE Role, SE Objective, List of Processes & Selected Process, List of Tasks & Selected Task, Task's I/O SEIM Concepts. Constraints Prompt: Tasks to Perform, Expected Answer Format. Step: Identify Traceability Links & Completeness Criteria. Outputs: Traceability Links, Completeness Criteria.]

Figure 4: Prompts and CoT for completeness criteria identification.
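The traceability-based criterion from the example above (each need is traced to stakeholder requirements) can be checked mechanically once the links exist. The following Python sketch is illustrative only: the identifiers and the data layout are assumptions, and in the approach described here the criteria themselves are defined by the LLM rather than hard-coded.

```python
from typing import Dict, List


def untraced_needs(needs: List[str],
                   requirement_traces: Dict[str, List[str]]) -> List[str]:
    """Return the needs that no stakeholder requirement traces to.

    requirement_traces maps a requirement ID to the need IDs it traces to.
    """
    traced = {
        need_id
        for need_ids in requirement_traces.values()
        for need_id in need_ids
    }
    return [need_id for need_id in needs if need_id not in traced]


# Hypothetical identifiers, for illustration only.
needs = ["N-001", "N-002", "N-003"]
requirement_traces = {
    "SR-001": ["N-001"],
    "SR-002": ["N-001", "N-003"],
}
missing = untraced_needs(needs, requirement_traces)
```

Here `missing` lists the needs that still lack a requirement, which is exactly the kind of gap a completeness criterion is meant to expose.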

The second phase, illustrated by Figure 5, involves

conducting a difference analysis between the succes-

sive versions of the data. This analysis serves to iden-

tify and categorize changes made during the task, in-

cluding elements that have been added, removed, or

updated. By systematically comparing the two ver-

sions, this process provides insights into the evolu-

tion of the task’s outputs, highlighting modifications

and enabling engineers to track progress, ensure con-

sistency, and address any unintended alterations.

[Figure 5 diagram. Context Prompt: SE Role, SE Objective, List of Processes & Selected Process, List of Tasks & Selected Task. Constraints Prompt: Tasks to Perform, Expected Answer Format, SE Data V(n-1), SE Data V(n). Step: Diff Analysis. Output: Diff Analysis Result.]

Figure 5: Prompts for diff analysis.
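The categories produced by such a difference analysis (added, removed, updated) can be illustrated with a deterministic comparison of two versions keyed by identifier. This Python sketch is a simplified stand-in under stated assumptions: the data layout and identifiers are hypothetical, and in the approach described here the comparison is delegated to the LLM through the prompts of Figure 5.

```python
from typing import Dict, List


def diff_versions(previous: Dict[str, str],
                  current: Dict[str, str]) -> Dict[str, List[str]]:
    """Classify element IDs as added, removed, or updated between two versions."""
    added = sorted(current.keys() - previous.keys())
    removed = sorted(previous.keys() - current.keys())
    updated = sorted(
        key for key in previous.keys() & current.keys()
        if previous[key] != current[key]
    )
    return {"added": added, "removed": removed, "updated": updated}


# Hypothetical SE data: need ID -> need statement.
version_n_minus_1 = {
    "N-001": "Visitors want to be guided to their meeting room.",
    "N-002": "The supervisor wants to monitor the robot.",
}
version_n = {
    "N-001": "Visitors want to be guided to any room in the building.",
    "N-003": "The host wants to be notified when the visitor arrives.",
}
changes = diff_versions(version_n_minus_1, version_n)
```

A structured result of this kind is easy to pass on to a later step, such as the completeness assessment.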

Finally, the completeness criteria and the results

of the difference analysis are provided as inputs to the

assistant to conduct a task completeness assessment.

Figure 6 illustrates this process, highlighting the in-

tegration of completeness criteria, difference analysis

results, and the most recent completion assessment.

Leveraging these inputs, the assistant evaluates the degree to which the task meets the predefined criteria and updates the completion percentage accordingly, so that it reflects the current state of the task’s outputs relative to the defined goals.
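As a rough illustration of what a completion percentage can mean, the Python sketch below computes the share of satisfied criteria. This is an assumption made for the example: the paper leaves the assessment to the LLM and does not state a formula, and the criteria names are hypothetical.

```python
from typing import Dict


def completion_percentage(criteria_met: Dict[str, bool]) -> float:
    """Share of completeness criteria currently satisfied, in percent."""
    if not criteria_met:
        return 0.0  # nothing to assess yet
    satisfied = sum(1 for met in criteria_met.values() if met)
    return round(100.0 * satisfied / len(criteria_met), 1)


# Hypothetical criteria for a "needs to stakeholder requirements" task.
assessment = {
    "every need is traced to a stakeholder requirement": True,
    "every requirement traces to at least one need": True,
    "every requirement is measurable": False,
    "every requirement has a verification method": True,
}
percentage = completion_percentage(assessment)
```

With three of the four hypothetical criteria satisfied, the sketch reports a task that is three quarters complete.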

3.4 Dual-Chatbot Assistance

To further enhance user support, we propose a dual-

chatbot system, comprising a guided questioning as-

sistant and an open task-specific assistant. The guided

Question/Answer (Q/A) chatbot assists in task com-

pleteness by asking focused questions aimed at satis-

fying the predefined completeness criteria.

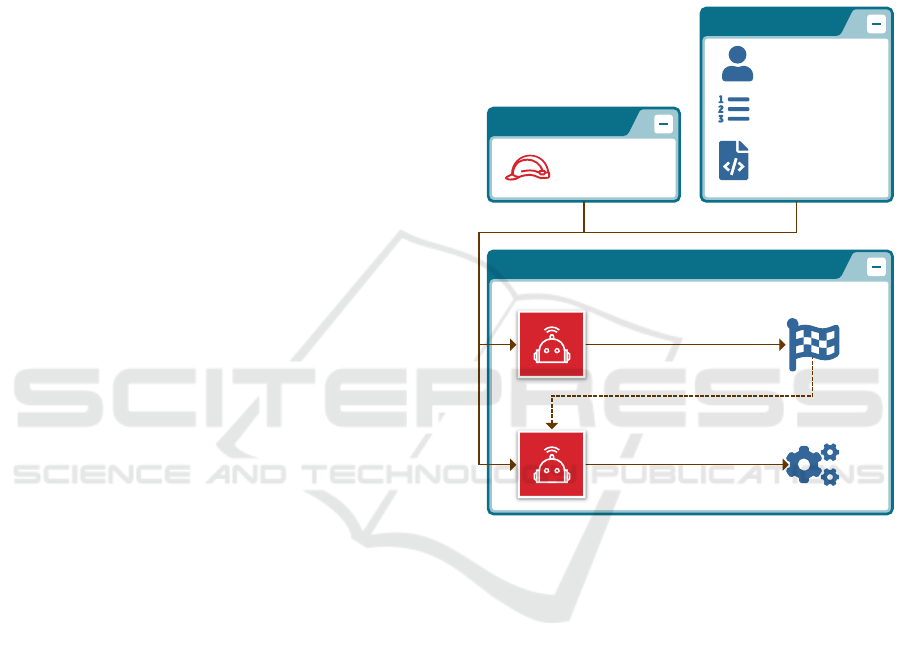

The Q/A chatbot, as illustrated in Figure 7, assists systems engineers in completing specific tasks by asking context-aware, targeted questions. Its functionality is driven by a detailed context prompt that aggregates critical information, such as the assistant’s role, the engineer’s global SE objective, and the hierarchical relationship between tasks and processes, ensuring tailored guidance aligned with the engineer’s responsibilities. The prompt further integrates domain-specific knowledge through SEIM ontology concepts, references existing Systems Engineering data for consistency, and outlines task completion criteria to ensure the chatbot’s questioning focuses on achieving successful task outcomes.

[Figure 6 diagram. Context Prompt: SE Role, SE Objective, List of Processes & Selected Process, List of Tasks & Selected Task, SE Data V(n-1), Completion Assessment V(n-1), Task's I/O SEIM Concepts. Constraints Prompt: Tasks to Perform, Expected Answer Format, SE Data V(n), Diff Analysis Result, Completeness Criteria. Step: Task's Completeness Evaluation. Output: New Task Completion Assessment.]

Figure 6: Prompts for task’s completeness evaluation.

In addition, the open-ended chatbot provides

broader support for addressing complex or unstruc-

tured tasks, offering flexibility and adaptability.
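The way the guided Q/A chatbot's prompts aggregate context can be sketched as follows. This is a hypothetical illustration: the function name, the field labels, and the wording of the instructions are assumptions, and only the kinds of inputs (role, objective, selected task, completion assessment, previously asked questions) are taken from the description above.

```python
from typing import Dict, List


def build_qa_messages(context: Dict[str, str],
                      asked_questions: List[str],
                      n_questions: int = 3) -> List[Dict[str, str]]:
    """Assemble the context and constraints prompts for the guided Q/A chatbot."""
    # One labelled line per context item (role, objective, task, ...).
    context_prompt = "\n".join(f"{label}: {value}" for label, value in context.items())
    if asked_questions:
        already_asked = "\n".join(f"- {question}" for question in asked_questions)
    else:
        already_asked = "- (none)"
    constraints_prompt = (
        f"Ask {n_questions} questions that help the engineer complete the selected task.\n"
        "Do not repeat any of the questions already asked:\n"
        f"{already_asked}\n"
        "Answer with a JSON list of strings."
    )
    return [
        {"role": "system", "content": context_prompt},
        {"role": "user", "content": constraints_prompt},
    ]


qa_messages = build_qa_messages(
    {
        "SE role": "Systems engineer assistant",
        "SE objective": "Specify a visitor-guidance robot",
        "Selected task": "Capture stakeholder needs",
        "Task completion assessment": "Two stakeholders have no needs yet",
    },
    asked_questions=["Who maintains the robot?"],
)
```

Passing the list of already asked questions back into the prompt is one simple way to keep successive rounds of questions from repeating themselves.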

4 EXPERIMENTS, RESULTS,

AND DISCUSSION

This section presents the experimental evaluation of

our approach. We detail the experimental platform

developed to test the proposed methodology, followed

by its application to a robotic system use case. We

also discuss the challenges and lessons learned during

the experiment, including insights on task completeness

and consistency. Through these experiments, we

aim to assess the practical value of LLM integration

in real-world SE applications.

[Figure 7 diagram. Context Prompt: SE Role, SE Objective, List of Processes & Selected Process, List of Tasks & Selected Task, Existing Input SE Data, Existing Output SE Data, Task Completion Assessment, Task's I/O SEIM Concepts. Constraints Prompt: Tasks to Perform, Expected Answer Format. Step: Ask 3 Questions to Complete the Task. Output: Asked Questions List.]

Figure 7: Prompts to ask a list of questions.

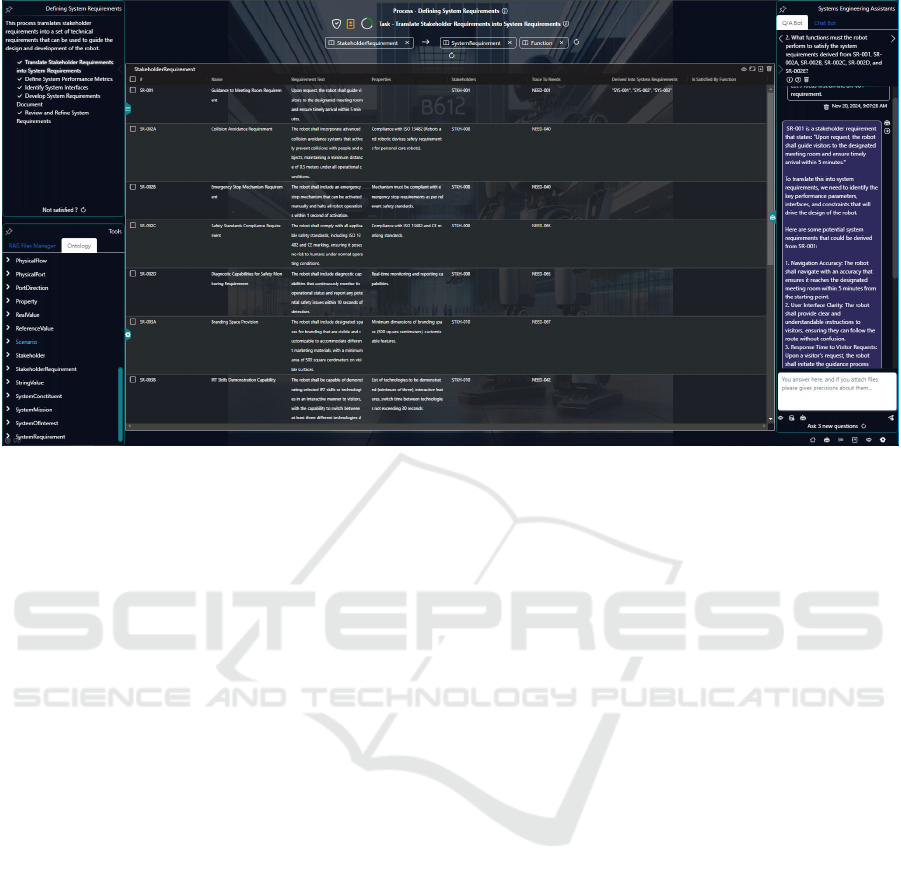

4.1 Experimental Platform

The main interface of the experimental platform is

shown in Figure 8. The interface is divided into several

key elements. On the top-left side of the screen

is the Process Overview panel, which highlights the

current SE process and task being undertaken, such as

translating stakeholder requirements into system re-

quirements. Below this panel, the Ontology Manager

enables users to interact with predefined SE concepts,

ensuring alignment with the knowledge base.

The central section of the interface features an en-

gineering data table, which lists the required and pro-

duced SE data, such as traceability links to original

needs, and derived system requirements. Above the

table is the task completeness interface. This section

allows users to track progress and identify missing el-

ements.

To the right, the Dual-Chatbot System is displayed

in a panel. The guided questioning chatbot helps

users clarify inputs, outputs, and requirements for

task completion by asking focused questions, ensur-

ing traceability and alignment with task objectives.

For instance, it prompts the user to define system

functions necessary to satisfy derived requirements.

Additionally, the open-ended chatbot assistant offers

broader support for unstructured or complex tasks

by providing suggestions, explanations, or additional

context where needed.

4.2 Application on a Robotic Use Case

To validate our approach, we applied it to a robotic

system under development at IRT. The robot serves as

a demonstration platform integrating advanced tech-

nologies developed by the IRT and its partners. It is

designed to autonomously guide visitors within the

IRT premises while showcasing integrated technolog-

ical solutions. Its development emphasizes the reuse

of existing hardware and software components, with

minimal new development, prioritizing the integra-

tion of technologies to highlight their value.

The robot’s mission includes several key opera-

tional capacities:

• Responding to verbal commands from visitors,

such as adjusting speed, stopping, or resuming

movement.

• Dynamically adjusting its displacement speed to

match the visitor’s pace.

• Monitoring the visitor’s proximity, requesting

them to return if they move too far, and notify-

ing the supervision operator and host if the visitor

remains out of range for an extended period.

• Sending an automated email to the host upon

mission completion and returning to a designated

parking area.

We started the experiment with the following engi-

neering objective:

We are a team of engineers in charge of the

specification, the design and the implementa-

tion of a robot (named BIRD) which mission

is to welcome visitors and to bring them to

the right place. The robot will be deployed

in a research institute named IRT (Institut de

Recherche Technologique) at Toulouse in a

building named B612. The robot will serve

also to demonstrate our know-hows and will

embed technological bricks we are developing

at the IRT.

We present here the results obtained using the tool. For interested readers, the complete set of data is publicly available in our repository (see footnote 1).

Footnote 1: https://sahara.irt-saintexupery.com/jean-marie.gauthier/Data LLM/src/branch/master/BIRD LLM.pdf

Figure 8: Experimental platform main interface.

• System Mission Identification: First, we identified several system missions, listed in Table 1. The missions SM-001, SM-002, and SM-003 are relevant and well-formed, effectively capturing the robot’s primary objective. However, SM-004, which focuses on accessibility features, appears more aligned with a specific system feature than with a high-level mission. For the rest of the experiment, we focused on SM-001 only.

• Stakeholder Identification: Starting from an initial set of 7 stakeholders provided by the systems engineer, the tool allowed us to identify 11 additional stakeholders. All stakeholders were relevant. An excerpt of the resulting stakeholders is shown in Table 2.

• Needs Capture: Starting from an initial set of 31 needs provided by the systems engineer, the tool allowed us to identify 43 additional needs and to link all of them to the corresponding stakeholders. The Q/A chatbot assisted us in capturing all stakeholder needs by asking questions about stakeholders who had not yet expressed any needs. All the obtained needs were relevant. An excerpt of the resulting needs is shown in Table 3.

• Needs Analysis: We used the open chatbot to an-

alyze the set of 74 identified needs, specifically

focusing on detecting conflicts. Our assistant suc-

cessfully identified 11 potential conflicts. A de-

tailed record of the needs analysis discussion is

provided in the complete data document.

• Stakeholder Requirements Definition: The tool

assisted in drafting 39 stakeholder requirements,

ensuring complete traceability to the original

needs expressed by stakeholders. All require-

ments are measurable and include verification

and validation tasks within their documentation.

While significant progress has been made, this

task remains ongoing as further refinement and

validation are still needed. An excerpt of the

stakeholder requirements is presented in Table 4.

• Task Completeness and Consistency Checks: By leveraging the ontology-driven, LLM-assisted completeness checker and the Q/A chatbot, the tool assisted in ensuring that all needs and requirements were logically consistent, aligned with the system’s mission, and, so far, free from hallucinations.

This experiment highlights the efficiency of our approach in supporting SE tasks such as requirement elicitation and traceability management. The robot use case also underscores the scalability of our methodology in handling diverse and interconnected SE tasks while maintaining consistency across data sets and ensuring system definition completeness.
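The gap-filling behaviour described under Needs Capture, where the Q/A chatbot asks about stakeholders who have not yet expressed any needs, rests on a check that can be sketched in a few lines. The following is a minimal illustration and not the EasyMOD implementation: the `Stakeholder` and `Need` classes, the two functions, and the wording of the generated question are assumptions introduced here; only the identifiers and names are taken from the excerpts in Tables 2 and 3.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Stakeholder:
    id: str
    name: str


@dataclass(frozen=True)
class Need:
    id: str
    name: str
    stakeholder_ids: Tuple[str, ...]


def stakeholders_without_needs(stakeholders: List[Stakeholder],
                               needs: List[Need]) -> List[Stakeholder]:
    """Return the stakeholders that no need refers to, in input order."""
    covered = {sid for need in needs for sid in need.stakeholder_ids}
    return [s for s in stakeholders if s.id not in covered]


def follow_up_questions(stakeholders: List[Stakeholder],
                        needs: List[Need]) -> List[str]:
    """Build one (hypothetical) follow-up question per uncovered stakeholder."""
    return [
        f"What does the stakeholder '{s.name}' ({s.id}) need from the system?"
        for s in stakeholders_without_needs(stakeholders, needs)
    ]


# Rows visible in the excerpts of Tables 2 and 3 (the full data set is larger).
stakeholders = [
    Stakeholder("STKH-001", "Visitors"),
    Stakeholder("STKH-002", "Supervisor"),
    Stakeholder("STKH-003", "Maintenance Operator"),
    Stakeholder("STKH-018", "Cleaning Staff"),
]
needs = [
    Need("NEED-001", "Guidance to Meeting Room", ("STKH-001",)),
    Need("NEED-002", "Guidance to Rest Room", ("STKH-001",)),
    Need("NEED-073", "Emergency Stop and Manual Override", ("STKH-018",)),
]

for question in follow_up_questions(stakeholders, needs):
    print(question)
```

On this partial excerpt the check flags STKH-002 and STKH-003, but only because their needs fall in the elided rows of Table 3; on the complete data set reported above, every stakeholder has at least one need.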

MBSE-AI Integration 2025 - 2nd Workshop on Model-based System Engineering and Artificial Intelligence

Table 1: Identified robot’s missions.

| ID | Name | Description |
|---|---|---|
| SM-001 | Visitor Guidance | To assist visitors by providing directions and escorting them to specific locations within the B612 building. |
| SM-002 | Demonstration of Technology | To showcase the technological advancements and capabilities developed at IRT. |
| SM-003 | Operational Support | To assist IRT staff and reception staff in their daily operations by performing tasks such as message delivery and fetching items. |
| SM-004 | Accessibility Features | To provide special accessibility features for visitors with disabilities. |

Table 2: Extract of resulting stakeholders.

| ID | Name | Documentation |
|---|---|---|
| STKH-001 | Visitors | Individuals visiting the IRT within the B612 building, interacting with the robot for guidance to specific locations. |
| STKH-002 | Supervisor | Responsible for monitoring and controlling the operation of one or more robots. |
| STKH-003 | Maintenance Operator | In charge of the system’s maintenance, particularly addressing potential breakdowns. |
| ... | ... | ... |
| STKH-018 | Cleaning Staff | Responsible for maintaining cleanliness and order within the operational environment of the robot, ensuring that the robot’s pathways are free of obstacles and debris. |

4.3 Discussion

The experiments conducted focused primarily on textual artifacts, namely needs and requirements, where LLM integration was shown to be highly effective. By identifying 18 stakeholders, extracting 74 needs, detecting 11 conflicts, and drafting 29 stakeholder requirements, the tool demonstrated its capacity to enhance task execution, efficiency, and completeness, addressing RQ1. Specifically, the Q/A chatbot’s guided questions ensured gaps were filled, while the ontology-driven framework structured unstructured data into a coherent and traceable form. This success builds confidence for extending the tool to architecture-related activities involving system models, reinforcing the LLM’s potential to automate SE tasks and augment MBSE practices. However, the experiments did

not yet explore scenarios where engineering artifacts

from previous or similar systems exist. Addressing

RQ1 further, such reuse could optimize task comple-

tion by leveraging historical data for faster and more

accurate generation of requirements or models. Fu-

ture work will also focus on validating results through

comparison with human-generated benchmarks to as-

sess the tool’s accuracy and reliability.

The study highlights that even without fine-tuning,

Large Language Models (LLMs) can adequately per-

form many general Systems Engineering (SE) tasks,

providing an efficient and cost-effective solution.

This addresses RQ2, as it demonstrates how LLMs

can be practically implemented using prompt engi-

neering and ontology-driven frameworks to assist in

specific SE activities without the need for expensive

fine-tuning. Importantly, our approach remains highly

generic and adaptable to different SE processes, as

even the tasks themselves are dynamically generated

by the LLM. This flexibility answers part of RQ2, as

it highlights the tool’s process-agnostic nature. The

same framework can be seamlessly applied to stan-

dards like ISO 15288 or ARP4754A without requir-

ing infrastructure modifications. Such adaptability

ensures the tool’s broader applicability across diverse

SE contexts, which is a key advantage over traditional

SE tools.
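One way to read the process-agnostic claim is that the process itself is data: each task names the earlier tasks whose results it needs, and the prompt for a task is assembled from those results. The sketch below illustrates that reading under stated assumptions. The `Task` record, the `build_prompt` function, the instruction strings, and the placeholder results are all hypothetical; the platform's actual ontology and prompts are not reproduced, and no LLM is called.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Task:
    """A process step described as data (hypothetical structure)."""
    name: str
    instruction: str
    depends_on: List[str] = field(default_factory=list)


def build_prompt(task: Task, results: Dict[str, str]) -> str:
    """Concatenate the outputs of prerequisite tasks with the task instruction."""
    sections = []
    for dep in task.depends_on:
        if dep not in results:
            raise ValueError(f"'{task.name}' needs the output of '{dep}' first")
        sections.append(f"[{dep}]\n{results[dep]}")
    sections.append(f"[Task: {task.name}]\n{task.instruction}")
    return "\n\n".join(sections)


# Hypothetical process description: swapping this list changes the process,
# not the code that assembles prompts.
process = [
    Task("Stakeholder Identification", "List the stakeholders of the system."),
    Task("Needs Capture", "List the needs of each stakeholder.",
         depends_on=["Stakeholder Identification"]),
    Task("Stakeholder Requirements Definition",
         "Derive measurable requirements from the needs.",
         depends_on=["Stakeholder Identification", "Needs Capture"]),
]

# Placeholder outputs standing in for validated results of earlier steps.
results = {
    "Stakeholder Identification": "STKH-001 Visitors; STKH-002 Supervisor",
    "Needs Capture": "NEED-001 Guidance to Meeting Room (STKH-001)",
}

prompt = build_prompt(process[2], results)
print(prompt)
```

Under this reading, supporting a different standard would amount to supplying a different task list, while the prompt assembly stays unchanged.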

However, the results also raise an important con-

sideration for RQ3: whether the performance im-

provement achieved through fine-tuning justifies the

additional investment in terms of data and computa-

tional resources. This remains an open avenue for fu-

ture work. In addition, a key concern tied to RQ3

is the quality of the outputs generated by LLMs.

While the assistant provided relevant and useful sup-

port during the experiments, rigorous verification pro-

tocols remain to be defined. For safety- or cost-

critical systems, where small errors in assumptions

or data can have severe consequences, a dedicated

validation framework is essential. Human-generated

benchmarks will also play a critical role in system-

atically evaluating and comparing the assistant’s out-

puts. Hence, as of now, LLMs should serve as a sup-

port tool rather than a replacement for human judg-

ment, particularly in high-stakes domains. This bal-

ance ensures the risk of undetected errors is mitigated

while still reaping the benefits of automation.

This study underscores that LLMs are particularly suited to automating “tedious” SE tasks, which are repetitive, time-consuming, and prone to human error, such as structuring data, ensuring traceability, and detecting inconsistencies. By addressing these tasks, the assistant allows systems engineers to focus on higher-value activities like strategic decision-making and system innovation. This directly answers RQ3 by showcasing tangible benefits in efficiency and task completion while maintaining alignment with SE standards and objectives.

Table 3: Extract of resulting needs.

| ID | Name | Need Text | Stakeholders |
|---|---|---|---|
| NEED-001 | Guidance to Meeting Room | As a Visitor, I need to be guided to the meeting room / office in order to reach the meeting room at the appropriate time. | STKH-001 |
| NEED-002 | Guidance to Rest Room | As a Visitor, I need to be guided to the nearest rest room upon request. | STKH-001 |
| ... | ... | ... | ... |
| NEED-073 | Emergency Stop and Manual Override | As Cleaning Staff, I need to have the ability to stop the robot in case it obstructs the cleaning process or in emergencies. | STKH-018 |
| NEED-074 | Network Connectivity | As IT Support Team, I need the robot to have reliable network connectivity for remote monitoring and updates. | STKH-009 |

Table 4: Extract of resulting stakeholder requirements.

| ID | Name | Text | Trace to Needs |
|---|---|---|---|
| SR-001 | Guidance to Meeting Room Requirement | Upon request, the robot shall guide visitors to the designated meeting room and ensure timely arrival within 5 minutes. | NEED-001 |
| SR-004 | Guidance to Rest Room Requirement | Upon request, the robot shall guide visitors to the nearest rest room and ensure timely arrival within 3 minutes. | NEED-002 |
| ... | ... | ... | ... |
| SR-027 | Emergency Stop Capability Requirement | The robot shall allow the Supervisor to initiate an emergency stop, halting all operations immediately within 1 second of the command being issued. | NEED-014 |
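Traceability of the kind recorded in the "Trace to Needs" column of Table 4 can be checked mechanically. The sketch below is an illustration under assumptions, not the tool's actual checker: the function name `check_traceability` and the dictionary layout are hypothetical, and the data is limited to the rows visible in the excerpts of Tables 3 and 4.

```python
from typing import Dict, List, Tuple


def check_traceability(requirement_traces: Dict[str, List[str]],
                       need_ids: List[str]) -> Tuple[Dict[str, List[str]], List[str]]:
    """Report dangling trace links and needs not covered by any requirement.

    requirement_traces maps a requirement ID to the need IDs it traces to.
    """
    known = set(need_ids)
    dangling = {}
    for req, traced in requirement_traces.items():
        missing = [n for n in traced if n not in known]
        if missing:
            dangling[req] = missing
    traced_needs = {n for traced in requirement_traces.values() for n in traced}
    uncovered = sorted(known - traced_needs)
    return dangling, uncovered


# Rows visible in the excerpts only (the full data set is larger).
need_ids = ["NEED-001", "NEED-002", "NEED-073", "NEED-074"]
requirement_traces = {
    "SR-001": ["NEED-001"],
    "SR-004": ["NEED-002"],
    "SR-027": ["NEED-014"],
}

dangling, uncovered = check_traceability(requirement_traces, need_ids)
print("Dangling links:", dangling)
print("Needs without a requirement:", uncovered)
```

On the excerpt alone the check reports SR-027 as dangling and NEED-073 and NEED-074 as uncovered. These findings are artefacts of the elided rows (NEED-014 and the remaining requirements are not shown), which is also why such a check is only meaningful on the complete data set.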

Finally, the findings demonstrate the relevance of

LLMs for SE tasks, their practical implementation

in a process-agnostic platform, and their ability to

enhance task execution, consistency, and traceability. However, challenges remain in establishing validation protocols, including comparisons with human-generated benchmarks, and in exploring the reuse of existing SE data, which will be crucial for broader adoption and future advancements.

5 RELATED WORK

The increasing complexity of cyber-physical systems necessitates model-based systems engineering (MBSE), but its broader adoption requires specialized training. User-centric systems engineering and AI can make this process more accessible. The research in (Bader et al., 2024) demonstrates that a GPT model, trained on UML component diagrams, can effectively understand and generate complex UML relationships, though challenges like extensive XMI data and context limitations remain.

The paper (Fuchs et al., 2024) explores how large

language models (LLMs) can automate model cre-

ation within a new declarative framework. Users can

interact with the system using natural language, repre-

senting a significant shift in model development. The

paper provides examples demonstrating how LLMs

can improve the speed and cost of tasks like object

creation, content review, and artifact generation by 10

to 15 times.

An intelligent workflow engine that integrates an AI chatbot to enhance traditional workflow management systems is presented in (Reitenbach et al., 2024). By using Natural Language Processing and Large Language Models, the chatbot serves as a user-friendly interface, simplifying workflow creation and decision-making.

The study in (du Plooy and Oosthuizen, 2023)

explores how GPT-4 can enhance the development

of system dynamics simulations within systems en-

gineering. Findings reveal that while GPT-4 signifi-

cantly improves simulation construction, error reduc-

tion, and learning speed, it also struggles with iden-

tifying errors and generating high-quality expansion

ideas. Technical challenges include AI’s limited abil-

ity to handle specialized domains and the potential for

errors due to data quality.

In (Timperley et al., 2024), researchers evaluated the use of the text-davinci-003 LLM for generating spacecraft system architectures for Earth observation missions, achieving at least two-thirds traceability of design elements back to requirements across

three cases. Consistent outputs suggested potential

for diverse missions, though function generation chal-

lenges emphasized the need for expert oversight to re-

fine outputs. Higher-token-count prompts improved

traceability and alignment but increased computa-

tional costs. Lower classifier confidence for modes in-

dicated textual variation impacts, while high precision

over recall reflected training data limitations. Manual

assessments validated architecture quality, with future

work aimed at automated evaluation and ontology re-

finements to better map LLM output to MBSE mod-

els. Data security concerns also suggested the benefit

of self-hosted, fine-tuned LLMs.

Existing research demonstrates the potential of

LLMs to assist in systems engineering by automat-

ing specific tasks, such as UML generation (Bader

et al., 2024), declarative model creation (Fuchs et al.,

2024), and intelligent workflow simplification (Reit-

enbach et al., 2024). However, these approaches of-

ten address isolated tasks without establishing a ro-

bust foundational context for the overall systems en-

gineering process.

Our work complements these efforts by focusing

on building a comprehensive and traceable context for

SE tasks through ontology-driven task guidance, it-

erative prompting, and completeness checking. This

foundational approach ensures the alignment of tasks

with SE frameworks like ISO 15288, enabling more

reliable outputs before diving into detailed atomic

tasks. By integrating traceability and error-recovery

mechanisms, our methodology provides the struc-

tured groundwork necessary to support and enhance

future detailed SE workflows, bridging the gap be-

tween high-level guidance and fine-grained task ex-

ecution.

6 CONCLUSION AND

PERSPECTIVES

This paper presents an ontology-driven, LLM-based

assistant integrated into the EasyMOD platform to

support task-oriented Systems Engineering (SE) pro-

cesses. By combining context-aware and iterative

prompting with ontology-based task structuring, the

assistant ensures alignment with SE objectives, con-

tinuity across tasks, and the consistent generation of

high-quality data. The experimental platform and

dual-chatbot system provide structured guidance and

open-ended support, enhancing task completeness,

efficiency, and user engagement.

The study demonstrates that LLMs are particu-

larly effective in automating tedious and repetitive

SE tasks, such as stakeholder identification, needs

capture, requirements definition, and conflict detec-

tion. The results, validated on a robotic system case

study, highlight notable benefits in improving task ef-

ficiency, ensuring traceability, and reducing human

error. Additionally, the tool proves capable of trans-

forming unstructured textual data into structured out-

puts aligned with a formal SE ontology. However,

challenges remain, particularly in handling safety-

and cost-critical systems where rigorous verification

protocols are required. Furthermore, the current ap-

proach has yet to explore scenarios where pre-existing

engineering artifacts from similar systems can be

reused to accelerate task execution.

From a methodological perspective, the proposed

solution is highly generic and adaptable, capable of

dynamically generating tasks without relying on pre-

defined workflows. This flexibility enables the tool

to seamlessly integrate with various SE standards, enhancing its applicability across different industrial domains. Nonetheless, the dependency on predefined ontologies and the absence of user feedback mechanisms for iterative refinement remain areas for improvement.

Future work will broaden the scope of SE tasks

addressed by the assistant, extending beyond require-

ments elicitation and quality assessment to include ar-

chitecture definition, model-based engineering, and

other SE lifecycle phases. This expansion will as-

sess the assistant’s applicability across diverse work-

flows and improve its utility for complex, intercon-

nected tasks. To support this, we plan to inte-

grate graph-based Retrieval-Augmented Generation

(RAG) to enhance task-specific knowledge retrieval

and strengthen interactions with external tools and

EasyMOD modules, such as model editing and review

processes.

Future validation efforts will focus on develop-

ing human-generated benchmarks to rigorously eval-

uate the assistant’s performance across larger, more

diverse case studies. This will include analyses of

task completeness, consistency, and alignment with

SE objectives. Additionally, human-machine interac-

tion studies and ergonomic evaluations will assess the

tool’s practical benefits and refine its capabilities. By

addressing these areas, future work aims to solidify

the assistant’s role as a robust support system for Sys-

tems Engineering practitioners.

ACKNOWLEDGEMENTS

The authors thank the Fondation de Recherche pour l’Aeronautique et l’Espace (FRAE) for contributing to the funding of the work presented in this paper. We are also grateful to Airbus Commercial Aircraft, Airbus Defense and Space, and the French ANR for contributing to the funding of the EasyMOD project.

REFERENCES

(2015). ISO/IEC/IEEE International Standard - Systems

and Software Engineering – System Life Cycle Pro-

cesses.

Alarcia, R. M. G., Russo, P., Renga, A., and Golkar,

A. (2024). Bringing systems engineering models to

large language models: An integration of opm with

an llm for design assistants. In Proceedings of the

12th International Conference on Model-Based Soft-

ware and Systems Engineering-MBSE-AI Integration,

pages 334–345.

Andrew, G. (2023). Future consideration of generative arti-

ficial intelligence (ai) for systems engineering design.

26th National Defense Industrial Association Systems

Engineering Conference.

Arora, C., Grundy, J., and Abdelrazek, M. (2024). Advanc-

ing requirements engineering through generative ai:

Assessing the role of llms. In Generative AI for Effec-

tive Software Development, pages 129–148. Springer.

Bader, E., Vereno, D., and Neureiter, C. (2024). Facilitat-

ing user-centric model-based systems engineering us-

ing generative ai. In MODELSWARD.

Brown, T., Mann, B., Ryder, N., Subbiah, M., Kaplan, J. D.,

Dhariwal, P., Neelakantan, A., Shyam, P., Sastry, G.,

Askell, A., et al. (2020). Language models are few-

shot learners. Advances in neural information pro-

cessing systems, 33:1877–1901.

Cámara, J., Troya, J., Burgueño, L., and Vallecillo, A.

(2023). On the assessment of generative ai in model-

ing tasks: an experience report with chatgpt and uml.

Software and Systems Modeling, 22(3):781–793.

Chami, M., Abdoun, N., and Bruel, J.-M. (2022). Artifi-

cial intelligence capabilities for effective model-based

systems engineering: A vision paper. INCOSE Inter-

national Symposium, 32(1):1160–1174.

Chami, M. and Bruel, J.-M. (2018). A survey on mbse adop-

tion challenges. In INCOSE EMEA Sector Systems

Engineering Conference (INCOSE EMEASEC 2018).

Wiley Interscience Publications.

du Plooy, C. and Oosthuizen, R. (2023). Ai usefulness

in systems modelling and simulation: gpt-4 applica-

tion. South African Journal of Industrial Engineering,

34(3):286–303.

Fabien, B. (2023). Exploration AI and MBSE: Use Cases

in Aircraft Design. INCOSE Next AI Explorer.

Fuchs, J., Helmerich, C., and Holland, S. (2024). Trans-

forming system modeling with declarative methods

and generative ai. In AIAA SCITECH 2024 Forum,

page 1054.

INCOSE (2007). Systems Engineering Vision 2020. Inter-

national Council on Systems Engineering (INCOSE),

2nd edition.

Kojima, T., Gu, S. S., Reid, M., Matsuo, Y., and Iwasawa, Y.

(2022). Large language models are zero-shot reason-

ers. Advances in neural information processing sys-

tems, 35:22199–22213.

Lecun, Y., Dessì, R., Lomeli, M., Nalmpantis, C., Pasunuru, R., Raileanu, R., Rozière, B., Schick, T.,

Dwivedi-Yu, J., Celikyilmaz, A., et al. (2023). Aug-

mented language models: a survey. arXiv preprint

arXiv:2302.07842.

Qiao, S., Ou, Y., Zhang, N., Chen, X., Yao, Y., Deng, S.,

Tan, C., Huang, F., and Chen, H. (2022). Reason-

ing with language model prompting: A survey. arXiv

preprint arXiv:2212.09597.

Ramesh, A., Pavlov, M., Goh, G., Gray, S., Voss, C., Rad-

ford, A., Chen, M., and Sutskever, I. (2021). Zero-shot

text-to-image generation. In International Conference

on Machine Learning, pages 8821–8831. PMLR.

Reitenbach, S., Siggel, M., and Bolemant, M. (2024). En-

hanced workflow management using an artificial in-

telligence chatbot. In AIAA SCITECH 2024 Forum,

page 0917.

Schräder, E., Bernijazov, R., Foullois, M., Hillebrand, M., Kaiser, L., and Dumitrescu, R. (2022). Examples of ai-based assistance systems in context of model-based systems engineering. In 2022 IEEE International Symposium on Systems Engineering (ISSE), pages 1–8.

SELive (2023). Artificial intelligence (ai) in model-based systems engineering. https://www.selive.de/ai-in-mbse/ [last visited: 2023-11-27].

Tikayat Ray, A., Cole, B. F., Pinon Fischer, O. J., Bhat,

A. P., White, R. T., and Mavris, D. N. (2023). Ag-

ile methodology for the standardization of engineering

requirements using large language models. Systems,

11(7).

Timperley, L., Berthoud, L., Snider, C., and Tryfonas, T.

(2024). Assessment of large language models for use

in generative design of model based spacecraft system

architectures. Available at SSRN 4823264.

Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones,

L., Gomez, A. N., Kaiser, Ł., and Polosukhin, I.

(2017). Attention is all you need. Advances in neural

information processing systems, 30.

Wei, J., Wang, X., Schuurmans, D., Bosma, M., Xia, F.,

Chi, E., Le, Q. V., Zhou, D., et al. (2022). Chain-of-

thought prompting elicits reasoning in large language

models. Advances in Neural Information Processing

Systems, 35:24824–24837.
