Towards a RAG-Based WhatsApp Chatbot for Animal Certification Platform Support

Gabriel Vieira Casanova (a), Pedro Bilar Montero (b), Alencar Machado (c) and Vinicius Maran (d)

Laboratory of Ubiquitous, Mobile and Applied Computing (LUMAC), Federal University of Santa Maria, Santa Maria, Brazil

Keywords: Question Answering Systems, Retrieval-Augmented Generation (RAG), WhatsApp, Virtual Assistant, Automated Support, Chatbot, PDSA-RS.

Abstract: Ensuring compliance with animal production certification requirements is often a complex and time-consuming task. This paper presents a domain-specific chatbot designed to assist users in requesting certifications within the PDSA-RS framework. By leveraging Retrieval-Augmented Generation (RAG) and large language models (LLMs), the proposed system retrieves relevant information from specialized documents and generates accurate, context-driven responses to user queries. The chatbot’s performance was evaluated on two Brazilian certification platforms, demonstrating its potential to streamline certification requests, reduce errors, and enhance user experience.

1 INTRODUCTION

Chatbots have become an integral part of modern communication, assisting users in tasks such as customer support, information retrieval, and service automation. However, traditional chatbots often face significant limitations, including a lack of flexibility, inability to handle complex queries, and reliance on predefined scripts or rule-based logic. These shortcomings make it challenging for them to provide accurate, context-aware responses in dynamic scenarios (Kucherbaev et al., 2017).

The advent of Large Language Models (LLMs) has addressed many of these challenges by enabling chatbots to understand and generate human-like responses with remarkable fluency and contextual awareness. Yet, despite their capabilities, LLMs have their own limitations. They can struggle to provide accurate, up-to-date information, as their training data is static and finite, and they may generate plausible but incorrect answers when faced with queries beyond their knowledge (Zhao et al., 2023).

To overcome these limitations, Retrieval-Augmented Generation (RAG) offers a transformative solution. By integrating a retrieval mechanism into LLMs, RAG enables chatbots to access relevant, up-to-date information from external knowledge sources or documents at query time. This synergy between retrieval and generation allows chatbots to move beyond their pre-trained data and deliver responses that are more accurate, context-aware, and dependable (Zhao et al., 2024).

(a) https://orcid.org/0009-0009-5420-7334
(b) https://orcid.org/0009-0002-9224-7694
(c) https://orcid.org/0000-0003-2462-7353
(d) https://orcid.org/0000-0003-1916-8893

The Plataforma de Defesa Sanitária Animal do Rio Grande do Sul (PDSA-RS) is a control system designed to support animal health management by organizing and integrating all stages of certification processes for poultry and swine farming in Rio Grande do Sul, Brazil. While the platform is a critical tool for ensuring operational efficiency and compliance, users frequently encounter questions and challenges when navigating its functionalities. These recurring questions highlight the need for a support system capable of providing accurate and immediate assistance with the platform’s use (Descovi et al., 2021).

WhatsApp, as one of the most widely used messaging platforms globally, offers an ideal medium for deploying such advanced chatbots. Incorporating a RAG-based chatbot into WhatsApp gives users of the PDSA-RS platform access to intelligent, dynamic assistance tailored to their needs. The chatbot is specifically designed to address common user questions about the platform, providing accurate, context-aware responses that streamline the user experience and reduce support overhead (Times, 2021).

Casanova, G. V., Montero, P. B., Machado, A. and Maran, V.

Towards a RAG-Based WhatsApp Chatbot for Animal Certification Platform Support.

DOI: 10.5220/0013473500003929

In Proceedings of the 27th International Conference on Enterprise Information Systems (ICEIS 2025) - Volume 1, pages 1077-1084

ISBN: 978-989-758-749-8; ISSN: 2184-4992

Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)


This paper presents the development of a RAG-based chatbot for WhatsApp, designed to support users of the PDSA-RS platform. Leveraging the combined strengths of LLMs and retrieval systems, the chatbot aims to deliver accurate, contextually aware interactions, enhancing user engagement and improving understanding of the platform’s functionalities (Gao et al., 2018).

The paper is structured as follows: Section 2 provides an overview of the key background concepts relevant to the research and the proposed approach. Section 3 introduces the methodology for developing the chatbot for the Rio Grande do Sul Animal Health Defense Platform (PDSA-RS), specifically designed to provide support through WhatsApp. The simulation of the proposed solution is detailed in Section 4. Finally, Section 5 presents the conclusions of this work.

2 BACKGROUND

2.1 Chatbots

Chatbots are software applications engineered to mimic human conversation, predominantly through text-based interfaces. Their development has seen substantial advancements, transitioning from basic rule-based systems to sophisticated AI-powered conversational agents (Pantano and Pizzi, 2023).

The inception of chatbots dates back to the mid-20th century. A notable milestone was achieved in 1966 with the creation of ELIZA by Joseph Weizenbaum. ELIZA utilized pattern matching and substitution techniques to simulate dialogue, setting a foundational precedent for subsequent advancements in natural language processing (NLP) and conversational AI (Weizenbaum, 1966).

The 2010s witnessed a pivotal transition towards AI-driven chatbots. The emergence of machine learning and NLP technologies significantly enhanced chatbots’ ability to comprehend and generate human-like responses. Platforms such as IBM Watson, Microsoft Bot Framework, and Google’s Dialogflow provided developers with robust tools to create more intelligent and versatile chatbots (Wolf et al., 2019).

The 2020s have brought further innovations in conversational AI, with chatbots becoming increasingly context-aware and capable of managing intricate queries. Large Language Models (LLMs) have been instrumental in this progress, enabling chatbots to understand and produce text in a manner akin to human communication. Companies are progressively integrating chatbots into diverse applications, ranging from customer service to personal assistants and educational tools (Radford et al., 2019).

The evolution of chatbots, from simple rule-based systems to advanced AI-driven software, has been marked by continuous innovation. Driven by advancements in NLP and AI, chatbots have become integral to modern technology. As research progresses, chatbots are set to further transform user experiences across various domains (Adamopoulou and Moussiades, 2020).

2.2 WhatsApp

WhatsApp is a popular messaging app that allows users to send text messages, make voice and video calls, and share media files. It was founded in 2009 by Brian Acton and Jan Koum and was acquired by Facebook in 2014. WhatsApp has become one of the most widely used communication tools globally, with over 2 billion users.

WhatsApp offers a variety of features that make it a versatile communication tool, including text messaging, voice and video calls, media sharing, status updates, and end-to-end encryption. Its widespread adoption and continuous updates make it a reliable choice for both personal and professional communication (Times, 2021).

2.3 Large Language Models

Large Language Models (LLMs) represent a significant advancement in the field of artificial intelligence, particularly in natural language processing. These models have evolved rapidly, driven by innovations in deep learning and the availability of vast amounts of text data (Chang et al., 2024).

The 2010s saw the introduction of influential models such as Word2Vec and GloVe, which focused on word embeddings. The real breakthrough came with the Transformer architecture, introduced by Vaswani et al. in their 2017 paper "Attention Is All You Need". This architecture, based on self-attention mechanisms, allowed for more efficient processing of sequential data, paving the way for larger and more complex language models (Singh, 2024).

In 2018, Google introduced BERT (Bidirectional Encoder Representations from Transformers), which revolutionized the field by demonstrating state-of-the-art performance on a wide range of natural language processing tasks. The following years witnessed a proliferation of LLMs, with models such as RoBERTa, T5 (Text-to-Text Transfer Transformer), and others building on BERT’s success. Notably, the introduction of models such as ChatGPT by OpenAI showcased the practical applications of LLMs in conversational AI (DATAVERSITY, 2024).

2.4 Retrieval-Augmented Generation

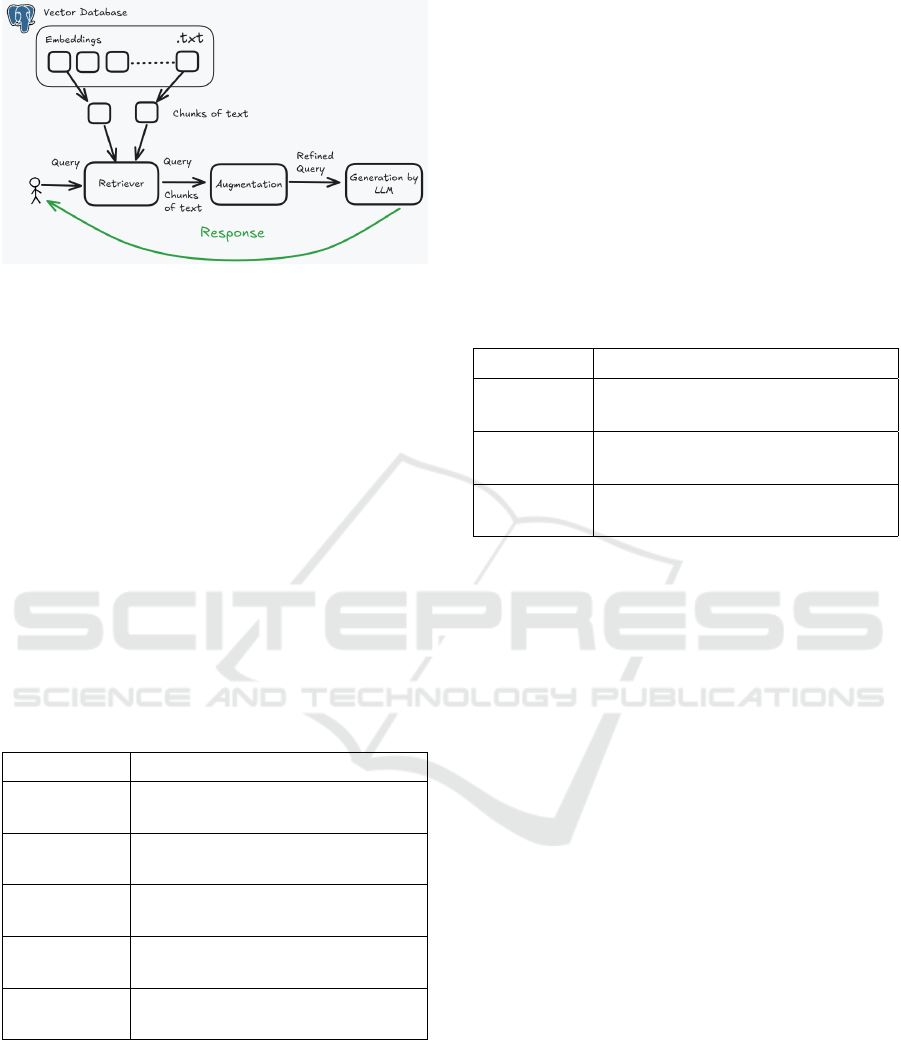

Retrieval-Augmented Generation (RAG) is a technique that combines information retrieval (IR) with Large Language Models (LLMs). This method enhances the capabilities of language models by retrieving relevant documents or pieces of information from an external corpus and using that retrieved information to generate more accurate and contextually relevant responses (Gupta et al., 2024).

Traditional text generation models rely heavily on the data they have been trained on and their internal parameters to generate outputs. However, these models can sometimes lack the ability to access specific, up-to-date, or domain-specific knowledge. RAG overcomes these limitations by incorporating an external information retrieval step into the model pipeline, which consists of two main stages:

• Retrieval Phase. The first step involves retrieving relevant documents or pieces of text from a large external corpus, typically using an information retrieval system.

• Generation Phase. After retrieving the relevant information, a generative model is employed to process the retrieved documents along with the original input, all in a single prompt, and generate a final response.
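The two phases can be illustrated with a minimal Python sketch. It is not the system described in this paper: word-overlap scoring stands in for embedding similarity, and the generation phase stops at assembling the single prompt that would be sent to an LLM. The function names (`tokenize`, `retrieve`, `build_prompt`), the sample corpus, and the prompt wording are illustrative assumptions.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens (a deliberately naive tokenizer)."""
    return re.findall(r"\w+", text.lower())


def retrieve(query: str, corpus: list[str], k: int = 2) -> list[str]:
    """Retrieval phase: rank documents by how many words they share with the query.

    Lexical overlap is a stand-in for the vector similarity search used in
    practice; documents that share no words with the query are dropped.
    """
    query_counts = Counter(tokenize(query))
    scored = []
    for doc in corpus:
        shared = query_counts & Counter(tokenize(doc))  # multiset intersection
        scored.append((sum(shared.values()), doc))
    scored.sort(key=lambda pair: pair[0], reverse=True)  # stable: ties keep corpus order
    return [doc for score, doc in scored[:k] if score > 0]


def build_prompt(query: str, passages: list[str]) -> str:
    """Generation phase input: retrieved passages and the question in one prompt."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}\n"
        "Answer:"
    )


corpus = [
    "PDSA-RS is a platform for animal health control.",
    "WhatsApp is a messaging app.",
    "Certificates are requested from the side menu.",
]
passages = retrieve("What is PDSA-RS?", corpus)
print(build_prompt("What is PDSA-RS?", passages))
```

In the chatbot described in Section 3, the retrieval phase is instead a similarity search over nomic-embed-text vectors stored in PostgreSQL with PGVector, and the assembled prompt is answered by a LLaMA 3.2 model served through Ollama.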

Using Retrieval-Augmented Generation offers several advantages:

• Access to External Knowledge. RAG leverages external sources such as databases, files, or websites to enhance responses.

• Up-to-date Information. The retrieval step can provide the latest information, keeping RAG models current, especially for rapidly changing topics.

• Domain-Specific Information. RAG can be tailored to specific domains, enabling expert-level generation for niche topics.

• Reduced Model Size. By relying on external retrieval, RAG models do not need to store all knowledge in their parameters, which can allow smaller, more efficient models.

In the context of enterprise information systems, RAG can be applied to tasks such as automated query answering, document summarization, and knowledge management. For example, RAG could be used to provide real-time, evidence-backed answers to employee queries, summarize complex legal or technical documents, or assist in customer service by retrieving and generating responses from enterprise knowledge bases (Li et al., 2022).

2.5 PDSA-RS

The Plataforma de Defesa Sanitária Animal do Rio Grande do Sul (PDSA-RS) is a web platform that offers tools to official veterinarians for animal health control in the state of Rio Grande do Sul, Brazil. Using advanced technologies, the platform provides real-time data to support decision-making, with features such as epidemiological analysis, disease spread modeling, and the evaluation of control measures. The platform is continuously evolving to address emerging challenges in animal health (Perlin et al., 2023).

PDSA-RS can be accessed via a web platform and a mobile application. The web platform allows for detailed data analysis and visualization, ideal for professionals needing in-depth information on disease trends and control efforts. The mobile app provides convenient, on-the-go access, enabling users to report incidents, view outbreak details, and receive alerts. This dual-access design enhances communication and supports quick responses to disease threats, helping to strengthen animal health defenses in Rio Grande do Sul.

The platform includes a WhatsApp-based support center that helps users make effective use of its features. An automated chatbot is being developed to further streamline support, providing immediate help with common inquiries and guidance on using the platform. This feature aims to reduce waiting times and improve the user experience by offering quicker access to necessary information and troubleshooting directly through WhatsApp.

3 METHODOLOGY

This section presents the methodology for developing the chatbot for the Plataforma de Defesa Sanitária Animal do Rio Grande do Sul (PDSA-RS), specifically designed to provide support through WhatsApp. The focus is on establishing a robust architecture that integrates with the existing platform. The approach uses a modular design to ensure scalability and flexibility in development. The architecture (Figure 1) includes distinct layers responsible for user interaction, data processing, and system management. The development process followed an agile methodology, allowing for continuous improvement and adaptation based on feedback and testing throughout the implementation stages.


Figure 1: The proposed RAG Architecture.

3.1 Connection Module

This module is responsible for the direct integration with WhatsApp, handling the reception and sending of messages. When the server starts, a QR code is displayed in the terminal to pair the connection with a device. After that, the server listens for messages from any phone number, not only from saved contacts. All incoming messages are forwarded to the Synchronization Module for further handling, while all outgoing messages originate from the Processing Module.
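The routing rule above (every incoming message goes to synchronization, every outgoing message comes from processing) can be sketched in Python. This is an illustration only: the module itself is written in TypeScript with WPPConnect and AMQPLib (Table 1), whereas here in-process `queue.Queue` objects stand in for the RabbitMQ queues, and the message fields (`from`, `to`, `body`), the `send` callback, and the function names are hypothetical.

```python
import json
import queue
from typing import Callable


def on_incoming(sync_queue: queue.Queue, sender: str, text: str) -> None:
    """Forward a received WhatsApp message, from any number, to synchronization."""
    sync_queue.put(json.dumps({"from": sender, "body": text}))


def deliver_outgoing(reply_queue: queue.Queue,
                     send: Callable[[str, str], None]) -> int:
    """Drain replies produced by the processing module and send each one back.

    `send(to, body)` stands in for the WhatsApp client's send call.
    Returns the number of replies delivered.
    """
    delivered = 0
    while True:
        try:
            reply = json.loads(reply_queue.get_nowait())
        except queue.Empty:
            return delivered
        send(reply["to"], reply["body"])
        delivered += 1


sync_q: queue.Queue = queue.Queue()   # stand-in: connection -> synchronization
reply_q: queue.Queue = queue.Queue()  # stand-in: processing -> connection

on_incoming(sync_q, "user-1", "O que é a PDSA-RS?")
reply_q.put(json.dumps({"to": "user-1", "body": "(generated answer)"}))

sent: list[tuple[str, str]] = []
count = deliver_outgoing(reply_q, lambda to, body: sent.append((to, body)))
print(count, sent)
```

Keeping the two directions on separate queues means the WhatsApp connection never waits on the slower retrieval and generation steps; it only publishes and consumes messages.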

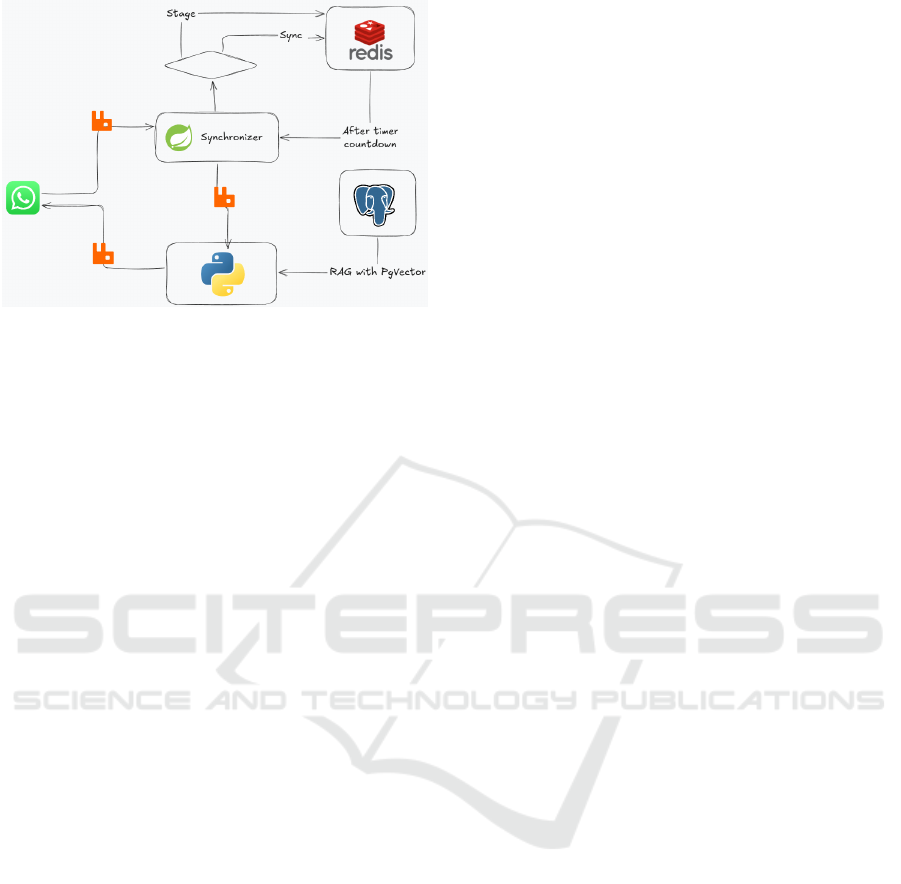

The technologies employed in this module are summarized in Table 1. This table provides an overview of the core technologies used for message handling, system communication, and WhatsApp integration, essential to the platform’s functionality.

Table 1: Employed Technologies.

| Technology | Description |
|---|---|
| TypeScript | A JavaScript superset that adds static types. |
| AMQPLib | A library for interacting with RabbitMQ in Node.js. |
| Node.js | A runtime for server-side development. |
| NPM | A package manager for dependencies. |
| WPPConnect | A library for integrating WhatsApp with Node.js. |

3.2 Synchronization Module

This module reassembles fragmented messages, since WhatsApp users commonly split a single question across several consecutive messages. By ensuring that long messages split across multiple parts are properly synchronized, it maintains message integrity before forwarding them to the Processing Module.

When a message fragment is received, the module checks Redis for an existing entry. If one is found, the fragment is appended to it; otherwise, a new entry is created. The accumulated fragments are queued for processing only after a 40-second timer expires, and if a new fragment arrives during this time, the timer resets to 40 seconds. When the timer finally expires, the module assumes the user has finished the question and passes the complete message to the next module.
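The append-and-reset logic can be sketched in Python as a per-sender debounce. This is an illustration, not the module's Java/Spring code: a dictionary stands in for Redis (where appending might map to a list command such as `RPUSH`), time is passed in explicitly instead of using real timers so the behavior is easy to follow, and the `FragmentBuffer` class, the `flush_ready` polling method, and the space-joining of fragments are assumptions.

```python
from dataclasses import dataclass, field

QUIET_PERIOD_S = 40.0  # timer length stated in the text


@dataclass
class FragmentBuffer:
    """Per-sender fragment store; a dict stands in for the Redis entries."""
    parts: dict[str, list[str]] = field(default_factory=dict)
    deadline: dict[str, float] = field(default_factory=dict)

    def add(self, sender: str, fragment: str, now: float) -> None:
        # Append to the existing entry, or create one, then (re)start the timer.
        self.parts.setdefault(sender, []).append(fragment)
        self.deadline[sender] = now + QUIET_PERIOD_S

    def flush_ready(self, now: float) -> dict[str, str]:
        """Return and remove every message whose 40-second timer has expired."""
        ready = {}
        for sender, due in list(self.deadline.items()):
            if now >= due:
                ready[sender] = " ".join(self.parts.pop(sender))
                del self.deadline[sender]
        return ready


buf = FragmentBuffer()
buf.add("user-1", "Como faço para", now=0.0)
buf.add("user-1", "solicitar um certificado?", now=30.0)  # timer now expires at t=70
print(buf.flush_ready(now=60.0))  # {}
print(buf.flush_ready(now=70.0))  # {'user-1': 'Como faço para solicitar um certificado?'}
```

In a deployed service, something like `flush_ready` would be called periodically by a scheduler, and each completed message would then be published to the Processing Module's queue.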

The technologies employed in this module are summarized in Table 2. This table provides an overview of the core technologies used for message synchronization.

Table 2: Employed Technologies.

| Technology | Description |
|---|---|
| Java | A popular programming language used for server-side applications. |
| Spring | A framework for building Java-based server-side applications. |
| Redis | An open-source in-memory data structure store used for caching. |

3.3 Processing Module

The Processing Module applies the Retrieval-Augmented Generation (RAG) technique to retrieve relevant information and generate a response to the question, which is then returned to WhatsApp. It receives the defragmented message, i.e., the complete user question, and performs a similarity search in a vector database. This query returns similar questions with their respective answers, which enrich the context so that the LLM generates a human-like response aligned with the user’s intent. When the response is ready, it is sent to a queue, where the Connection Module listens and forwards it to the respective user on WhatsApp. The sequence of steps that defines the operation of the Processing Module is outlined as follows:

3.3.1 Document Ingestion

This step involves preparing the knowledge base by processing a JSON file containing the most relevant questions and answers from users of PDSA-RS and converting them into vector representations suitable for retrieval. The sub-processes include:

• Text Extraction. Collecting the relevant questions and answers into a JSON file by consulting members of the user support team at the PDSA-RS help center.


• Partitioning or Chunking Strategy. Splitting the text content into chunks of 7500 tokens with an overlap of 100 tokens to preserve contextual continuity. This chunking technique is known as sliding window chunking.

• Embedding or Vectorization. Transforming the text chunks into vector representations using the nomic-embed-text embedding model. These embeddings are then stored in a PostgreSQL database with the PGVector extension for efficient retrieval.
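The extraction and chunking steps can be sketched in Python. The JSON layout (a list of objects with `question` and `answer` keys) is a hypothetical example, since the file format is not specified here, and tokens are approximated by whitespace-separated words; a real pipeline would count tokens with the embedding model's tokenizer. The embedding call to nomic-embed-text is omitted.

```python
import json

CHUNK_SIZE = 7500  # tokens per chunk, as stated in the text
OVERLAP = 100      # tokens shared by consecutive chunks


def load_qa_texts(raw_json: str) -> list[str]:
    """Turn a JSON list of {"question", "answer"} objects into text records."""
    return [f"Question: {r['question']}\nAnswer: {r['answer']}"
            for r in json.loads(raw_json)]


def sliding_window_chunks(tokens: list[str], size: int = CHUNK_SIZE,
                          overlap: int = OVERLAP) -> list[list[str]]:
    """Split tokens into windows of `size`; each window starts `size - overlap`
    tokens after the previous one, so neighbouring windows share `overlap` tokens."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must satisfy 0 <= overlap < size")
    if not tokens:
        return []
    step = size - overlap
    # Stop once a window reaches the end; later starts would only repeat overlap.
    return [tokens[start:start + size]
            for start in range(0, max(len(tokens) - overlap, 1), step)]


records = load_qa_texts('[{"question": "O que é a PDSA-RS?", "answer": "..."}]')
corpus_tokens = " ".join(records).split()  # whitespace "tokens" (approximation)
chunks = sliding_window_chunks(corpus_tokens)

# Small parameters make the overlap visible:
print(sliding_window_chunks(list("abcdefghij"), size=4, overlap=1))
# [['a', 'b', 'c', 'd'], ['d', 'e', 'f', 'g'], ['g', 'h', 'i', 'j']]
```

With a 7500-token window and a short question-and-answer file, the whole file fits in one chunk; the overlap only matters once the text is longer than a single window.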

3.3.2 Vector Storage

The vector representations generated during the ingestion process are stored in a PGVector-enabled PostgreSQL database, optimized for similarity search.

3.3.3 Retrieval

A similarity search is performed on the vector database using the vectorized representation of the user’s query to find the most relevant chunks of text that match the user’s question.
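The similarity search can be illustrated with a pure-Python cosine ranking. In the described system the ranking runs inside PostgreSQL; with PGVector it is typically written using the cosine-distance operator `<=>`, for example `SELECT content FROM chunks ORDER BY embedding <=> %s LIMIT 3`, where the table and column names are hypothetical. Ordering by ascending cosine distance (1 minus cosine similarity) gives the same ranking as the descending-similarity sort below. The toy vectors and the value of `k` are assumptions, since the number of retrieved chunks is not stated.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec: list[float],
          rows: list[tuple[str, list[float]]], k: int = 3) -> list[str]:
    """Return the texts of the k stored chunks most similar to the query vector."""
    ranked = sorted(rows, key=lambda row: cosine_similarity(query_vec, row[1]),
                    reverse=True)
    return [text for text, _ in ranked[:k]]


# Toy 2-D "embeddings"; real nomic-embed-text vectors have far more dimensions.
stored = [
    ("How do I request a certificate?", [1.0, 0.0]),
    ("How do I register an RT?", [0.0, 1.0]),
    ("Which reports are needed for a certificate?", [0.7, 0.7]),
]
print(top_k([1.0, 0.1], stored, k=2))
# ['How do I request a certificate?', 'Which reports are needed for a certificate?']
```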

3.3.4 Response Generation

The retrieved information is used to enrich the context, enabling a language model, LLaMA 3.2 served through Ollama, to generate a coherent, human-like response aligned with the user’s intent. The temperature is set to zero, which minimizes sampling randomness so that the model gives consistent, near-deterministic answers to the same question.
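A minimal Python sketch of this step, assuming the model is reached through Ollama's HTTP API (`POST /api/generate` on Ollama's default port 11434) with a non-streaming request and `temperature` passed in `options`. The model tag `llama3.2`, the prompt wording (including the instruction to answer in Portuguese), and the helper names are assumptions for illustration, not the authors' code; only the request construction is exercised here, since sending it requires a running Ollama server.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # assumed local deployment
MODEL_TAG = "llama3.2"  # assumed Ollama tag for LLaMA 3.2


def build_request_body(question: str, retrieved_qa: list[str]) -> dict:
    """Combine the retrieved Q&A pairs and the user question into one prompt."""
    context = "\n\n".join(retrieved_qa)
    prompt = (
        "You are a support assistant for the PDSA-RS platform. "
        "Answer in Portuguese, using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )
    return {
        "model": MODEL_TAG,
        "prompt": prompt,
        "stream": False,                # return one JSON object, not a stream
        "options": {"temperature": 0},  # minimize sampling randomness
    }


def generate(question: str, retrieved_qa: list[str], url: str = OLLAMA_URL) -> str:
    """Send the request to Ollama and return the generated text."""
    data = json.dumps(build_request_body(question, retrieved_qa)).encode("utf-8")
    req = urllib.request.Request(url, data=data,
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())["response"]


body = build_request_body("O que é a PDSA-RS?",
                          ["Question: O que é a PDSA-RS?\nAnswer: ..."])
```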

3.3.5 Employed Technologies

The technologies employed in this module are summarized in Table 3.

Table 3: Employed Technologies.

| Component | Description |
|---|---|
| nomic-embed-text | Text encoder with a large context length, used to vectorize text chunks. |
| LLaMA 3.2 | Large language model used to generate human-like responses. |
| Pip | Package manager used to manage dependencies. |
| Python | Programming language. |

3.4 Docker Infrastructure

The infrastructure supporting the proposed chatbot is built on Docker to ensure modularity, scalability, and simplified deployment. The technology stack is summarized in Table 4.

Table 4: Docker Infrastructure.

| Component | Description |
|---|---|
| RabbitMQ | Message broker for asynchronous communication, with a monitoring interface. |
| Redis | In-memory data store for message fragments, with Redis Insight for monitoring. |
| PostgreSQL | A relational database for storing structured data. |
| PgVector | Extension for PostgreSQL enabling vector similarity search. |
| PgAdmin | Interface for managing PostgreSQL databases. |
| Ollama Server | A server for running large language models. |
| Open WebUI | Web interface for system configuration and monitoring of the Ollama Server. |

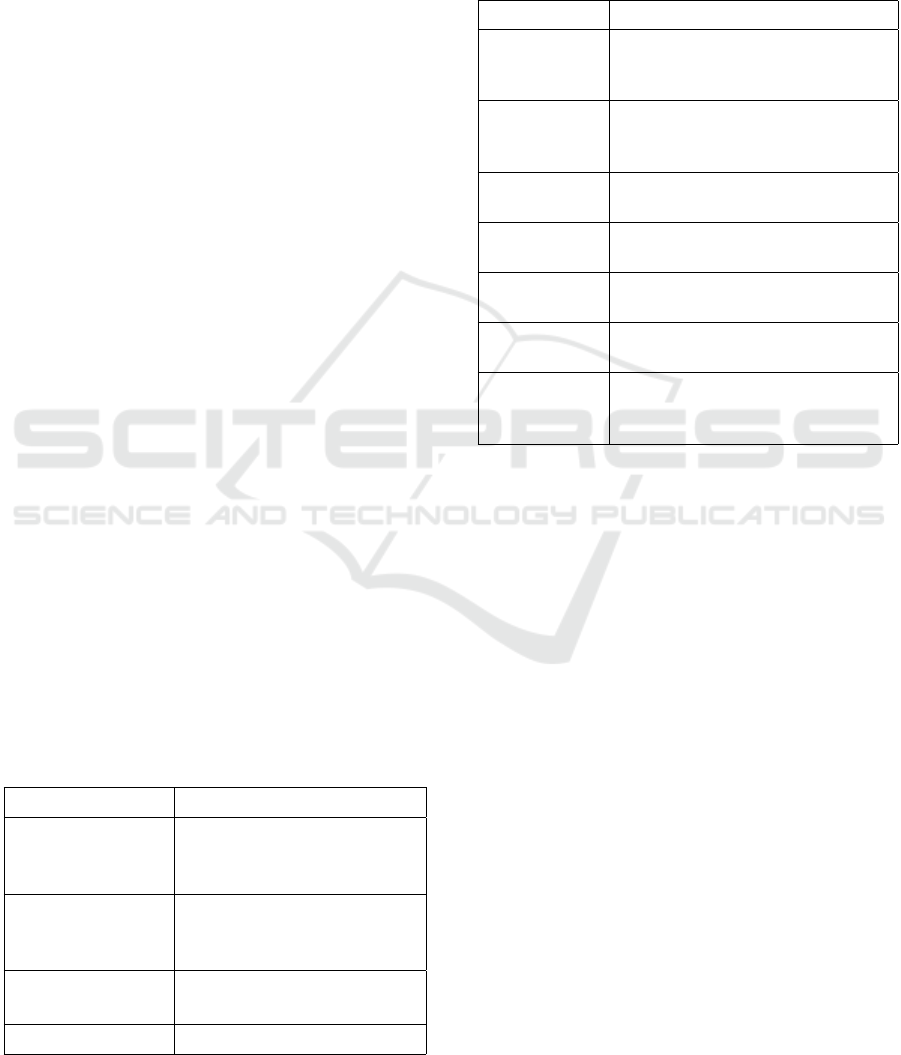

3.5 System Design

The system design of the proposed chatbot provides a high-level view of its architecture, outlining the main modules and their interconnections. It illustrates how these modules collaborate to achieve the chatbot’s functionality. The design highlights the flow of data, interactions between modules, and the integration points necessary for the system’s operation. This figure serves as a blueprint for understanding the system’s overall structure and operation (see Figure 2).

4 SIMULATION

4.1 Objectives

The goal of the simulation is to test the behavior of the proposed chatbot in a controlled WhatsApp environment. The simulation aims to emulate a user-chatbot interaction by leveraging two smartphones, where one device acts as the user and the other as the interface for the chatbot connected to the server.


Figure 2: The Complete Chatbot System Design.

4.2 Requirements

The simulation setup involves the following components and roles:

• Smartphone 1 (User). Sends messages (questions or prompts) to the chatbot, emulating a typical PDSA-RS user interaction.

• Smartphone 2 (Chatbot). Acts as the interface for the chatbot, paired with a server that processes incoming messages and generates appropriate responses.

• Server. Runs the proposed chatbot application, including components for receiving, processing, and responding to messages. A computer is required to run the server, which handles all message processing tasks.

• Extracted Conversations. Five conversation logs with PDSA users, covering early 2023 to late 2024, were extracted so that their recurring questions could be ingested into the vector database. Each file contained over 500 lines, highlighting common challenges and needs. This data offers valuable insights for improving PDSA’s support and user experience.

4.3 Configuration

The servers for this simulation run on a Lenovo 3i laptop with the following specifications:

• Processor. Intel Core i5 10th Gen

• RAM. 8 GB DDR4 3200 MHz

• Storage. 256 GB SSD

• Operating System. Arch Linux

• GPU. NVIDIA GeForce GTX 1650 with 4 GB of dedicated memory

4.4 Conditions

To ensure controlled and repeatable testing, the following conditions are maintained:

• Both smartphones must be connected to a stable network.

• The server is pre-configured with the chatbot application and necessary dependencies.

• A predefined set of test messages (e.g., FAQ-style questions) is used for consistency in testing.

4.5 Experiment

The experiment scenario consists of the following steps:

• Message Transmission. Smartphone 1 sends a text message to Smartphone 2 via WhatsApp. The message mimics a typical PDSA-RS user inquiry, such as "O que é a PDSA-RS?" (What is PDSA-RS?) or "Como faço para realizar uma nova solicitação de certificado para uma granja de suíno?" (How do I submit a new certificate request for a swine farm?).

• Processing. Smartphone 2, paired with the server, receives the message. The server processes the text using the chatbot’s architecture, which includes natural language understanding, response generation, and message formatting.

• Response. The connection server receives the generated response from the processing server and sends it back to Smartphone 1 on WhatsApp, completing the interaction.

4.6 Results

The expected outcomes of the simulation include:

• Smartphone 2 successfully receives and forwards messages to the server.

• The server correctly processes the input and generates coherent, contextually relevant responses using the RAG technique.

• Smartphone 1 receives accurate responses in real time, effectively mimicking a natural chatbot-user interaction.

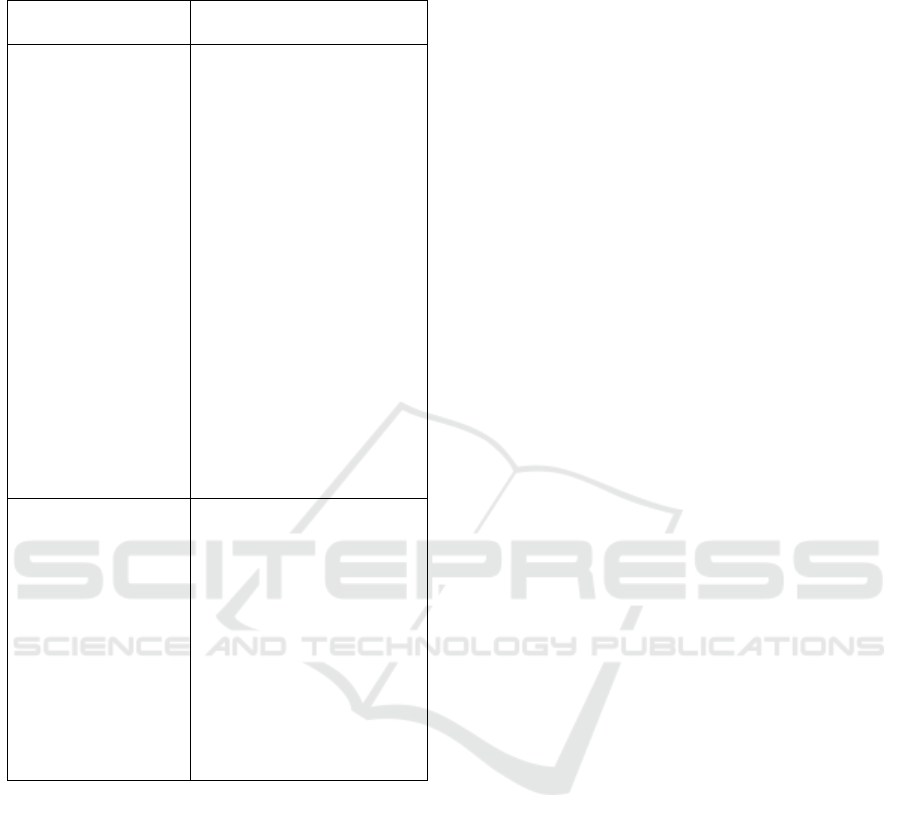

The final output of the simulation is shown in Table 5.

Table 5: The simulated PDSA user-chatbot interactions (in Portuguese, with English translations in parentheses).

| Smartphone 1 (User Input) | Smartphone 2 (Chatbot Response) |
|---|---|
| Como posso cadastrar um novo RT na PDSA? (How can I register a new RT in PDSA?) | Para o cadastro como RT, você pode optar por ser independente ou representante de uma empresa. Se você é um RT independente, é necessário solicitar o cadastro diretamente na plataforma PESA pelo e-mail registro-granja@agricultura.rs.gov. Se você está vinculado a uma empresa, o RT representante da empresa tem a possibilidade de cadastrar novos usuários diretamente pelo módulo de gestão. Após o cadastro, o novo usuário receberá automaticamente um e-mail com os detalhes de acesso, incluindo login e senha. (To register as an RT, you can choose to be independent or a company representative. If you are an independent RT, you must request registration directly on the PESA platform via the e-mail registro-granja@agricultura.rs.gov. If you are linked to a company, the company's representative RT can register new users directly through the management module. After registration, the new user will automatically receive an e-mail with the access details, including login and password.) |
| E Preciso fazer uma nova solicitação de certificado para uma granja de suíno, como faço? (And I need to make a new certificate request for a swine farm, how do I do it?) | É necessário que você esteja como RT da granja em questão. Após, é necessário a realização de uma coleta, a inserção de no mínimo dois relatórios trimestrais e o preenchimento do formulário de tuberculina e leptospirose. Tendo todos os dados necessários, basta acessar o item "Solicitar Certificado" no menu lateral da plataforma. (You must be registered as the RT of the farm in question. Next, a sample collection must be carried out, at least two quarterly reports must be entered, and the tuberculin and leptospirosis form must be completed. Once all the required data is available, simply open the "Solicitar Certificado" (Request Certificate) item in the platform's side menu.) |

5 CONCLUSION

This paper presented the need to automate customer service at PDSA-RS’s call center and proposed the implementation of a chatbot capable of providing contextualized responses to PDSA users, using RAG and LLM techniques. Several technologies were employed across the modules of the application to build the complete RAG-based WhatsApp chatbot.

The main contributions include the automation of customer service, improving the efficiency and availability of the service, and the application of multiple technologies to solve real-world problems.

To further enhance the chatbot and maximize its impact, we recommend expanding its functionalities, integrating it with other platforms, and conducting live user testing in the real-world PDSA-RS setting to evaluate the long-term effects of the chatbot implementation. Specific directions for future work include:

• Monitoring Dashboard. Develop a web interface to monitor questions and answers in real time, enabling performance analysis and the identification of areas for improvement.

• Conversation History Management. Implement a module to manage and easily access interaction history, assisting in analysis and future chatbot training.

• Scalable Solutions. Replace PGVector with more robust tools, such as Elasticsearch, for vector queries.

• Long-Term Impact Evaluation. Conduct studies to assess the effects of the chatbot on user experience and organizational efficiency over time.

• Multimodal Agents. Use LLMs that process not only text but also images, documents, and even audio, expanding the chatbot’s capabilities.

• Real Environment Testing. Test the chatbot in a real environment with real PDSA-RS users, since this paper covers only a simulation.

• Evaluation of Response Effectiveness. Apply metrics that assess the relevance, precision, and overall quality of the chatbot’s responses to support continuous improvement.

ACKNOWLEDGEMENTS

This research is supported by FUNDESA (project "Combining Process Mapping and Improvement with BPM and the Application of Data Analytics in the Context of Animal Health Defense and Inspection of Animal Origin Products in the State of RS", UFSM/060496) and FAPERGS, grant n. 24/2551-0001401-2. The research by Vinícius Maran is partially supported by CNPq, grant 306356/2020-1 - DT2.

REFERENCES

Adamopoulou, E. and Moussiades, L. (2020). Chatbots: History, technology, and applications. Machine Learning with Applications, 2:100006.

Chang, Y., Wang, X., Wang, J., Wu, Y., Yang, L., Zhu, K., Chen, H., Yi, X., Wang, C., Wang, Y., et al. (2024). A survey on evaluation of large language models.

DATAVERSITY (2024). A brief history of large language models.

Descovi, G., Maran, V., Ebling, D., and Machado, A. (2021). Towards a blockchain architecture for animal sanitary control. In ICEIS (1), pages 305–312.

Gao, J., Galley, M., and Li, L. (2018). Neural approaches to conversational AI: Question answering, task-oriented dialogues and social chatbots. Foundations and Trends in Information Retrieval.

Gupta, S., Ranjan, R., and Singh, S. N. (2024). A comprehensive survey of retrieval-augmented generation (RAG): Evolution, current landscape and future directions.

Kucherbaev, P., Psyllidis, A., and Bozzon, A. (2017). Chatbots as conversational recommender systems in urban contexts. arXiv preprint arXiv:1706.10076.

Li, H., Su, Y., Cai, D., Wang, Y., and Liu, L. (2022). A survey on retrieval-augmented text generation. arXiv preprint arXiv:2202.01110.

Pantano, E. and Pizzi, C. (2023). AI-based chatbots in conversational commerce and their effects on product and price perceptions. Electronic Markets.

Perlin, R., Ebling, D., Maran, V., Descovi, G., and Machado, A. (2023). An approach to follow microservices principles in frontend. In 2023 IEEE 17th International Conference on Application of Information and Communication Technologies (AICT), pages 1–6. IEEE.

Radford, A. et al. (2019). Language models are unsupervised multitask learners. Technical report, OpenAI GPT-2.

Singh, A. (2024). The rise of large language models (LLMs). Accessed: 2025-01-19.

Times, T. N. Y. (2021). How chatbots are changing customer service. Accessed: 2023-10-01.

Weizenbaum, J. (1966). ELIZA: A computer program for the study of natural language communication between man and machine. Communications of the ACM, 9(1):36–45.

Wolf, T. et al. (2019). Transformers: State-of-the-art natural language processing. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing: System Demonstrations (EMNLP 2020).

Zhao, P., Zhang, H., Yu, Q., Wang, Z., Geng, Y., Fu, F., Yang, L., Zhang, W., Jiang, J., and Cui, B. (2024). Retrieval-augmented generation for AI-generated content: A survey. arXiv preprint arXiv:2402.19473.

Zhao, W. X., Zhou, K., Li, J. Y., Tang, T. Y., Wang, X. L., Hou, Y. P., Min, Y. Q., Zhang, B. C., Zhang, J. J., Dong, Z. C., Du, Y. F., Yang, C., Chen, Y. S., Chen, Z. P., Jiang, J. H., Ren, R. Y., Li, Y. F., Tang, X. Y., Liu, Z. K., Liu, P. Y., Nie, J. Y., and Wen, J. R. (2023). A survey of large language models. arXiv preprint arXiv:2303.18223.
