Towards a VR-BCI Based System to Evaluate the Effectiveness of Immersive Technologies in Industry

Mateus Nazario Coelho¹ᵃ, João Victor Jardim²ᵇ, Mateus Coelho Silva³ᶜ, Flávia Silvas⁴ᵈ and Saul Delabrida²ᵉ

¹ Programa de Pós-Graduação em Instrumentação, Controle e Automação de Processos de Mineração, Federal University of Ouro Preto and Instituto Tecnológico Vale, Brazil
² Federal University of Ouro Preto, Ouro Preto, Brazil
³ Center of Mathematics, Computing, and Cognition, Federal University of ABC, Santo André, Brazil
⁴ Instituto Tecnológico Vale, Ouro Preto, Brazil


Keywords: Virtual Reality, BCI, Industry 4.0.

Abstract: Industry 4.0 demands that its operators know and master modern technologies, such as the Internet of Things and Virtual Reality, as these offer the Operator 4.0 intelligent tools to improve daily operations and practices. Recent research shows promising results for immersive technologies, as they provide a safe and effective tool for representing hazardous environments that are often difficult to replicate in the real world. Nevertheless, research is lacking on users' behavioral changes while using these technologies, on the effectiveness of immersive industrial processes and training, and on the challenges of implementing these technologies in current industry. This work seeks to address these questions by using modern technologies such as VR, BCI, Eye Tracking, and xAPI to evaluate attention and fatigue, capturing users' behavioral and physiological data inside a Virtual Environment. The resulting system will later be validated through a user test to evaluate and reflect on the effectiveness of using virtual reality in industry.

1 INTRODUCTION

Safety training is crucial for companies globally as it impacts health, safety, and the environment by preventing accidents and promoting employee well-being (Villani et al., 2022). This training develops workers’ skills and capabilities to analyze risk situations and make appropriate decisions. Recent research highlights promising outcomes with immersive technologies, which offer a safe and illustrative mechanism to simulate hazardous environments that are often challenging to replicate in real-world scenarios (Pedram et al., 2017). Furthermore, these technologies have been adopted across various industries, such as aviation (for landing scenarios) and firefighting (for the proper use of equipment), aiming to evaluate human behavior and train skills in high-risk situations (Scorgie et al., 2024).

ᵃ https://orcid.org/0009-0003-8487-1232
ᵇ https://orcid.org/0009-0002-9318-7822
ᶜ https://orcid.org/0000-0003-3717-1906
ᵈ https://orcid.org/0000-0001-7040-018X
ᵉ https://orcid.org/0000-0002-8961-5313

As described by (Werbińska-Wojciechowska and Winiarska, 2023), Industry 4.0 introduces modern technologies into industrial processes, including the Internet of Things (IoT), Virtual Reality (VR), Augmented Reality (AR), Brain-Computer Interfaces (BCI), and Digital Security. These technologies provide Industry 4.0 operators with intelligent tools to enhance daily operations and practices. Notably, (Guo et al., 2020) emphasize that immersive technologies are fundamental to implementing Industry 4.0, particularly when integrated with other modern technologies such as BCI, Blockchain, and IoT. According to (Douibi et al., 2021), BCIs can contribute to workplace safety, adaptive learning, and remote control of devices.

However, as highlighted by (Stefan et al., 2023), few studies address level 3 (Behavior) of Kirkpatrick’s model, that is, studies that evaluate users’ behavioral changes following immersive training. This work aims to pioneer the application of modern technologies such as VR and BCI to address this gap. Since only a limited number of studies have explored the use of VR for assessing behavioral changes, two questions remain open: what challenges might arise in implementing this technology in industrial processes and training, and what effects are perceived by users and can be recorded during use?

Coelho, M. N., Jardim, J. V., Silva, M. C., Silvas, F. and Delabrida, S.
Towards a VR-BCI Based System to Evaluate the Effectiveness of Immersive Technologies in Industry.
DOI: 10.5220/0013483800003929
In Proceedings of the 27th International Conference on Enterprise Information Systems (ICEIS 2025) - Volume 2, pages 694-701
ISBN: 978-989-758-749-8; ISSN: 2184-4992
Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)

Thus, this work proposes a proof of concept, presented as a framework for validating immersive technologies in industrial training scenarios. The prototype will be used in future research to validate its application through an industrial case study. The scope of this work therefore does not include testing with participants; rather, it presents a framework grounded in gaps identified in the literature. The framework combines the tracking and recording of learning experiences with xAPI, cognitive state analysis through a non-invasive BCI, immersive and personalized VR experiences, and attention analysis using eye tracking.

Finally, this paper is organized as follows: Literature Review, which addresses the key concepts and foundations of this work, along with related studies; Methodology, which presents the proof of concept of the framework and explains how each technology contributes to the system; Experimental Design, which details the plan for future testing; and Results and Conclusions, which report the partial results and outline future steps.

2 LITERATURE REVIEW

This section defines the theoretical concepts and related works discussed throughout this research.

2.1 Challenges of Operator 4.0

The Kirkpatrick Model emerged from the need to evaluate training programs and was proposed by (Kirkpatrick and Craig, 1970) as a means to “assess effectiveness before conducting evaluations.” Kirkpatrick’s model divides training evaluation into four hierarchical levels:

1. Level 1: Measures participants’ reactions to the training.
2. Level 2: Assesses the knowledge and skills acquired.
3. Level 3: Examines behavioral changes after training.
4. Level 4: Measures results directly correlated with operational improvements, such as fewer incidents or increased revenue (e.g., productivity gains).

However, how can traditional training methods (e.g., videos, slides, and quizzes) keep pace with the rapid emergence of modern technologies? In a meta-analysis of Virtual Reality (VR) for safety training, (Scorgie et al., 2024) highlight that these traditional methods are costly and often of limited effectiveness. By contrast, immersive technologies have proven effective in military and industrial safety training for high-risk scenarios.

(Thorvald et al., 2021) describe the Operator 4.0 framework, detailing eight scenarios in which Industry 4.0 enhances the human workforce. Two notable examples are the Augmented Operator, who accesses real-time information via overlays, and the Virtual Operator, for whom the physical world is replaced by a virtual one, enabling immersive experiences for training and for the development of design scenarios.

As human resources remain one of the most valuable and scarce assets in industrial environments, the concept of Operator 5.0 is emerging (Leng et al., 2022), bringing discussions of operator ergonomics, accessibility, and even the monitoring of fatigue states through electrophysiological data.

2.2 Virtual Reality

The concept of Virtual Reality (VR) was introduced in the late 1980s by (Lanier and Biocca, 1992), envisioning the integration of the real and imaginary worlds. This technology offers virtually limitless experiences that transcend physical limitations and foster new forms of interaction.

In industrial and training applications, VR has shown positive results. For example, (Teodoro et al., 2023) used VR to train staff of an energy utility, employing the Kirkpatrick Model to assess learning efficacy. Similarly, (Grabowski and Jankowski, 2015) used VR to train underground mining personnel in explosive handling, yielding increased confidence and knowledge. Systematic reviews, such as (Scorgie et al., 2024), emphasize the growing use of head-mounted displays (HMDs) in various industries while also highlighting research gaps in mining, chemical, and electrical training contexts.

These examples underscore VR’s potential beyond entertainment, positioning it as a cornerstone for advancing industrial tools and methodologies (Paszkiewicz et al., 2021; Pottle, 2019).

2.3 Eye Tracking

Eye tracking in VR hardware estimates gaze direction using cameras or infrared sensors. This technology is useful both for creating more realistic avatars and as a form of input and user movement within a VR environment (Adhanom et al., 2023). (Jang, 2023) demonstrates how eye tracking can be used to define areas of interest in engagement studies, where attention and focus statistics are used to analyze user involvement during an experience in a virtual reality fashion store. However, (Clay et al., 2019) point out the lack of research using eye tracking to analyze user behavior with respect to what users observe within VR, and to evaluate where they looked in relation to their actions.

2.4 Brain-Computer Interface

With advances in medicine and technology and the need to understand and use the complex brain system, the first studies emerged in the 1970s using devices capable of extracting brain signals and sending them to external devices, such as a robotic arm (Kober and Neuper, 2012). According to (Yadav et al., 2020), these signals can be measured directly or indirectly from the brain, with electroencephalography (EEG) being one method of recording brain activity using electrodes. BCIs can also be divided into invasive and non-invasive types: non-invasive methods collect EEG data from electrodes placed on the scalp, whereas invasive methods use electrodes implanted directly on the cortical surface.

The capture of an EEG signal is based on the voltage difference between an active electrode and a reference electrode, and the signals can be categorized into frequency bands according to their biological significance (Rashid et al., 2020). However, interpreting these data is challenging, as the signals can be contaminated by noise from various sources, such as facial muscle activity and eye movements (Porr and Bohollo, 2022).
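To make the referencing and noise points concrete, the following Python sketch subtracts a reference electrode from each active channel and then discards one-second epochs whose peak-to-peak amplitude exceeds a threshold, a crude screen for blink and muscle artifacts. The 100 µV threshold, the epoch length, and the function names are illustrative assumptions, not part of the proposed framework; more elaborate cleaning (e.g., ICA-based) is usually preferred in practice.

```python
import numpy as np


def rereference(active_uv, reference_uv):
    """Potential of each active channel relative to the reference electrode.

    active_uv: array (n_channels, n_samples) in microvolts.
    reference_uv: array (n_samples,) recorded at the reference electrode.
    """
    active = np.asarray(active_uv, dtype=float)
    reference = np.asarray(reference_uv, dtype=float)
    return active - reference  # broadcast: same reference subtracted from every channel


def reject_artifact_epochs(eeg_uv, fs, epoch_s=1.0, ptp_limit_uv=100.0):
    """Cut eeg_uv into fixed-length epochs and keep those whose peak-to-peak
    amplitude stays below ptp_limit_uv on every channel.

    Returns (kept_epochs, keep_mask); kept_epochs has shape
    (n_kept, n_channels, samples_per_epoch). Trailing samples that do not
    fill a whole epoch are dropped.
    """
    eeg = np.asarray(eeg_uv, dtype=float)
    n_channels, n_samples = eeg.shape
    per_epoch = int(round(epoch_s * fs))
    n_epochs = n_samples // per_epoch
    epochs = (eeg[:, : n_epochs * per_epoch]
              .reshape(n_channels, n_epochs, per_epoch)
              .transpose(1, 0, 2))                 # (epochs, channels, samples)
    ptp = epochs.max(axis=2) - epochs.min(axis=2)  # (epochs, channels)
    keep = (ptp < ptp_limit_uv).all(axis=1)
    return epochs[keep], keep
```

A fixed amplitude threshold is simple and transparent, but it discards whole epochs; it only illustrates why contaminated segments must be handled before band analysis.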

Studies report an increase in spectral power in the Theta (4–8 Hz) and Alpha (8–13 Hz) bands when an individual is fatigued (Douibi et al., 2021; Cao et al., 2014). The work of (Pooladvand et al., 2024) combines functional near-infrared spectroscopy (fNIRS) measurements of brain activity with machine learning to classify mental load in workers, highlighting how time pressure and multitasking can tax cognitive resources, affecting reflexes and information processing, which can be critical in high-stakes scenarios.
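As a hedged illustration of the Theta/Alpha finding, the sketch below estimates band power from a plain FFT periodogram and compares the combined Theta + Alpha power of a late recording window with that of an early one. It assumes equal-length, single-channel windows and uses the band limits given above; it is not the analysis pipeline of the cited studies, and a ratio above 1 is only a candidate fatigue indicator.

```python
import numpy as np


def band_power(x, fs, lo, hi):
    """Sum of periodogram power of a 1-D signal over frequencies in [lo, hi) Hz."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()                                  # remove DC offset
    spectrum = np.abs(np.fft.rfft(x)) ** 2 / len(x)   # unscaled periodogram
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    in_band = (freqs >= lo) & (freqs < hi)
    return float(spectrum[in_band].sum())


def theta_alpha_ratio(early, late, fs, theta=(4.0, 8.0), alpha=(8.0, 13.0)):
    """Combined Theta + Alpha power of the late window divided by that of the
    early window (windows must have equal length). Values above 1 mean the
    combined power increased during the session."""
    if len(early) != len(late):
        raise ValueError("windows must have the same length")

    def theta_alpha(x):
        return band_power(x, fs, *theta) + band_power(x, fs, *alpha)

    return theta_alpha(late) / theta_alpha(early)
```

Because both windows have the same length, the unscaled periodogram is sufficient for a ratio; absolute power values would require proper density scaling.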

Additionally, there is a growing trend of studies using EEG to monitor several individuals in a group simultaneously, known as hyperscanning (Gumilar et al., 2021). For example, (Toppi et al., 2016) employ this method to evaluate pilot behavior during emergency landing situations. However, developing software capable of analyzing and interpreting these data, and conducting research that captures the nuances of brain activity, are complex endeavors. This complexity is compounded by the fact that, although BCI equipment has become popular and accessible to end users in recent years, it is often sold as a closed product (Janapati et al., 2023), which restricts in-depth use to a highly specialized audience.
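One measure commonly used for inter-brain synchrony in hyperscanning studies is the phase-locking value (PLV). The sketch below, assuming two equally long signals that have already been band-pass filtered to the band of interest, computes the instantaneous phase through an FFT-based analytic signal and then the PLV. It is an illustration only, not the method of the cited works.

```python
import numpy as np


def analytic_signal(x):
    """FFT-based analytic signal (same construction as scipy.signal.hilbert)."""
    x = np.asarray(x, dtype=float)
    n = len(x)
    h = np.zeros(n)
    if n % 2 == 0:
        h[0] = h[n // 2] = 1.0
        h[1 : n // 2] = 2.0
    else:
        h[0] = 1.0
        h[1 : (n + 1) // 2] = 2.0
    return np.fft.ifft(np.fft.fft(x) * h)  # keep positive frequencies only


def phase_locking_value(x, y):
    """PLV of two equally long, band-pass filtered signals: 1 for a constant
    phase difference over the window, near 0 for no consistent relation."""
    if len(x) != len(y):
        raise ValueError("signals must have the same length")
    dphi = np.angle(analytic_signal(x)) - np.angle(analytic_signal(y))
    return float(np.abs(np.mean(np.exp(1j * dphi))))
```

In a hyperscanning setting, x and y would be the same electrode (or band) recorded from two participants over the same time window.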

2.5 Evaluation Methods

In their influential work, (Slater et al., 1994) show that the subjective sense of presence experienced by a group of volunteers in a Virtual Research Environment (VRE) can be measured through a questionnaire comprising six or more Likert-scale questions (Jebb et al., 2021). As Slater describes, these questions address the feeling of being in the represented virtual environment, or even of recalling it as a place that was visited.
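A minimal scoring sketch for such a questionnaire, assuming a 7-point Likert scale: it reports the mean rating and the number of answers rated 6 or 7, the high-score count often used with the Slater-Usoh-Steed (SUS) presence questionnaire. The threshold and output fields are assumptions to adapt to the instrument actually used.

```python
from statistics import mean


def score_presence(responses, scale_max=7, high_threshold=6):
    """Summarize one participant's Likert ratings on a presence questionnaire.

    responses: integer ratings in 1..scale_max, one per question.
    Returns the mean rating and the number of ratings >= high_threshold.
    """
    if not responses:
        raise ValueError("no responses given")
    for r in responses:
        if not 1 <= r <= scale_max:
            raise ValueError(f"rating {r} outside 1..{scale_max}")
    high_count = sum(1 for r in responses if r >= high_threshold)
    return {"mean": float(mean(responses)), "high_count": high_count}
```

For example, ratings of 7, 6, 5, 3, 6, 7 give a high-score count of 4 out of 6 questions.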

In a recent review of their work, (Slater et al., 2022) conclude that it is necessary to combine different methods to validate participants’ experiences, using some of the following tools:

1. Questionnaires: While helpful, questionnaires have several limitations when used in isolation, as they are generally administered after the experience and may influence the reported sense of presence by prompting participants to consider feelings they may not have experienced.
2. Physiological or Behavioral Analysis: This includes measuring brain waves using BCIs, or skin resistance in response to stress-inducing situations within the simulation. However, this type of analysis may be problematic if the simulation does not contain scenarios that trigger measurable effects.
3. Configuration Transitions: This method exposes participants to variations of the same scenario (such as differences in lighting or virtual body configurations) to validate the perception of presence and of the events occurring. Participants then change these factors freely, allowing researchers to estimate the likelihood of a specific factor being present in each participant’s final configuration. This method is noteworthy because it does not require participants to provide opinions or ratings, only to decide on the configuration that works best for them.

ICEIS 2025 - 27th International Conference on Enterprise Information Systems


3 METHODOLOGY

This section presents the proof of concept of the proposed framework, outlining how each technology provides the necessary information and how the technologies function together.

The Kirkpatrick model serves as the foundation for evaluating training levels in the proof of concept. Virtual Reality (VR) technology enables a degree of immersion and presence (Slater et al., 1994) in training scenarios that are realistic, free of physical risk to humans, and fully controllable. Human fatigue, though not the only factor, significantly increases risk, particularly in hazardous environments. Fatigue can alter behavior, which in turn affects productivity and exposure to the risks inherent in industrial processes. Therefore, to identify human behavior as proposed in level three of Kirkpatrick’s model, we propose a framework that provides a sense of presence and immersion without physical exposure to risks. The framework also assesses an operator’s level of physical and mental fatigue through the Chalder Fatigue Questionnaire (Cella and Chalder, 2010). Additionally, xAPI records are used to infer whether human behavior changes as a result of training or after prolonged operational use of industrial equipment. xAPI can record both expected user actions and reactions (e.g., in risky situations), which may vary depending on the individual’s fatigue state.

Combining attention measures obtained through the eye-tracking capability of the Meta Quest Pro with variations in the Alpha and Theta brainwave bands collected via the Unicorn BCI provides valuable cognitive and physiological data. These data are analyzed alongside the self-reported physical and mental fatigue from the questionnaire.
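One way such a combination could be summarized is per participant, with pairwise Pearson correlations between the measures. The sketch below assumes one row per participant with hypothetical summary columns (for example, the share of gaze time on training targets, a late/early Theta + Alpha power ratio, and a fatigue questionnaire score); the column choice and the use of Pearson correlation are assumptions, and with a small sample such coefficients are descriptive only.

```python
from itertools import combinations
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long numeric sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)  # undefined (ZeroDivisionError) for a constant column


def correlate_measures(rows, names):
    """Pairwise Pearson correlations between the columns of per-participant rows."""
    columns = list(zip(*rows))
    return {
        (names[i], names[j]): pearson(columns[i], columns[j])
        for i, j in combinations(range(len(names)), 2)
    }
```

Rank-based alternatives (e.g., Spearman correlation) may be more appropriate for ordinal questionnaire scores.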

The following sections outline the proposed proof of concept and the technologies employed.

3.1 Proof of Concept

The proof of concept involves the development of a prototype that uses learning systems based on user records and integrates BCI and eye-tracking technologies, as illustrated in Figure 1.

According to (Slater et al., 2022), VR scenarios should be designed to stimulate participants sufficiently for individual perceptions to be recorded. This study uses BCI (to capture changes in brainwave bands) and xAPI (to log user actions and reactions), enabling a more personalized recording of each experiment.

The case study depicted in Figure 1 can represent any immersive scenario proposed by industrial stakeholders and developed in the Unity game engine. These scenarios may range from simulations of hazardous environments (challenging to replicate in a controlled, real-world setting) to the retraining of operators in techniques or processes of specific industrial sectors.

Figure 1: Proposed system for users’ data collection in immersive scenarios.

Unity was chosen as the game engine due to its wide array of tools for VR development, its active community, and its compatibility with various immersive devices available on the market. The VR hardware selected is the Meta Quest Pro, which offers improvements in comfort, fidelity, performance, and display resolution. Its eye-tracking functionality enables attention data collection through targets defined in the VR scenarios, using Meta’s native SDK for Unity. The Unicorn Hybrid Black was chosen as the BCI device for its non-invasive design, eight-electrode configuration, APIs in multiple programming languages, and Unity integration. This BCI offers a range of customizable software environments and tools, and it transmits data wirelessly over radio instead of through cables, improving usability and user mobility.

Participant actions and response times are tracked using xAPI. Key advantages of xAPI include its ability to capture detailed learning activity data beyond traditional e-learning platforms and its interoperability with different systems and tools through APIs in multiple languages, including C# for Unity. A secure server architecture for the Learning Record Store (LRS) and database will allow precise and standardized data capture through xAPI. Additionally, an optimized SQL-based database, separate from the LRS, will be implemented to facilitate future analyses of the collected data, which could employ Business Intelligence (BI) techniques, Machine Learning, or other Artificial Intelligence (AI) methods.
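The separate SQL database could take many forms; as a sketch, the snippet below uses SQLite from Python's standard library as a stand-in, with a hypothetical `responses` table of flattened records (participant, verb, activity, response time in seconds, ISO 8601 timestamp). The schema and helper names are illustrative assumptions, not the authors' design; `response_trend` shows one simple way to check whether response times drift over a session.

```python
import sqlite3

# Hypothetical schema for flattened xAPI results kept apart from the LRS.
SCHEMA = """
CREATE TABLE IF NOT EXISTS responses (
    participant TEXT NOT NULL,
    verb        TEXT NOT NULL,   -- xAPI verb IRI
    activity    TEXT NOT NULL,   -- xAPI activity IRI
    response_s  REAL NOT NULL,   -- response time in seconds
    ts          TEXT NOT NULL    -- ISO 8601 timestamp, same format/zone for all rows
)
"""


def open_store(path=":memory:"):
    conn = sqlite3.connect(path)
    conn.execute(SCHEMA)
    return conn


def insert_responses(conn, records):
    """records: iterable of dicts with the five column names as keys."""
    conn.executemany(
        "INSERT INTO responses (participant, verb, activity, response_s, ts) "
        "VALUES (:participant, :verb, :activity, :response_s, :ts)",
        records,
    )
    conn.commit()


def mean_response_by_participant(conn):
    return conn.execute(
        "SELECT participant, AVG(response_s), COUNT(*) FROM responses "
        "GROUP BY participant ORDER BY participant"
    ).fetchall()


def response_trend(conn, participant):
    """Mean response time of the second half of a session minus the first half
    (positive values mean slower responses later on). Rows are ordered by the
    ts text, which is chronological only for a uniform ISO 8601 format."""
    times = [row[0] for row in conn.execute(
        "SELECT response_s FROM responses WHERE participant = ? ORDER BY ts",
        (participant,))]
    half = len(times) // 2
    if half == 0:
        raise ValueError("need at least two responses")
    first, second = times[:half], times[half:]
    return sum(second) / len(second) - sum(first) / len(first)
```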

3.2 Materials and Methods

3.2.1 Unity

Unity is a comprehensive game development platform offering tools for physics simulation, collision detection, and immersive technologies such as Virtual Reality (VR) and Augmented Reality (AR). It supports a variety of devices (ISAR, 2018). According to (Nguyen and Dang, 2017), Unity is widely chosen for its extensive community, diverse model library, support for popular programming languages (e.g., C#, JavaScript, and Java), and its flexibility as the most recognized game engine for VR development.

(Kuang and Bai, 2018) emphasize the importance of detailed modeling for VR scenario development to ensure optimized scene performance (free of crashes or loss of immersion) alongside immersive features such as audio and interactions.

3.2.2 xAPI

The Experience API (xAPI) is a learning technology specification designed to capture user-generated data from interactions with a Learning Management System (LMS) or a VR application. An LMS is a software platform that educators or trainers use to create, organize, deliver, and monitor educational courses and training programs; it provides tools to track student progress and detailed reports on individual performance and effectiveness. xAPI data are stored in a dedicated data store known as a Learning Record Store (LRS), enabling a comprehensive and interoperable view of progress and behavior during training sessions, which can later be processed and analyzed using analytical tools and techniques (Nouira et al., 2018).

One advantage of xAPI lies in its well-defined formalization and semantics, facilitating integration and interoperability between systems (Vidal et al., 2015). Furthermore, the standard has been applied in various emerging technologies, including mobile learning and serious games (Farella et al., 2021).

xAPI has been used in both industrial and academic contexts. Studies such as (Schardosim Simao et al., 2018) and (Viol et al., 2024) demonstrate its application in virtual laboratories to log interactions between trainers and student groups.

3.3 Proposed Architecture

In this section, we present each of the technologies used in this research and their respective functionalities.

3.3.1 Technology to Capture Response Times

Participants’ behavioral records and response times in immersive scenarios are captured using xAPI, through the TinCan API for C#¹. For permanent storage of this information, the Yet Analytics SQL LRS² was selected. This choice was based on its open-source nature and the possibility of hosting the SQL database independently, unlike cloud-based solutions.

The data collected by xAPI in the test scenarios and recorded in the LRS will later be analyzed to assess whether participants’ reaction times vary over time and to evaluate expected behaviors within the prototype. In an immersive training environment, the instructor must be able to assess individual user behaviors, and xAPI facilitates this by allowing custom statements to be modeled for events in the environment.
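For illustration, the Python sketch below builds an xAPI statement (actor, verb, object, result, timestamp) recording a participant's response to a scenario event, with the response time stored as an ISO 8601 duration in `result.duration`, and prepares, but does not send, a POST to an LRS `statements` resource with the `X-Experience-API-Version` header. The prototype itself uses the TinCan library for C#; the activity IRIs, endpoint URL, credentials, and helper names here are hypothetical.

```python
import base64
import json
import urllib.request
import uuid
from datetime import datetime, timezone


def iso8601_duration(seconds):
    """Response time as an ISO 8601 duration with centisecond precision."""
    return f"PT{seconds:.2f}S"


def build_statement(email, name, verb_id, verb_display,
                    activity_id, activity_name, response_s, success):
    """xAPI statement: who (actor) did what (verb) to which activity (object),
    with the outcome and response time in the result."""
    return {
        "id": str(uuid.uuid4()),
        "actor": {"objectType": "Agent", "name": name, "mbox": f"mailto:{email}"},
        "verb": {"id": verb_id, "display": {"en-US": verb_display}},
        "object": {
            "objectType": "Activity",
            "id": activity_id,
            "definition": {"name": {"en-US": activity_name}},
        },
        "result": {"success": success, "duration": iso8601_duration(response_s)},
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }


def make_post_request(lrs_endpoint, statement, key, secret):
    """Prepare (but do not send) a POST of one statement to <endpoint>/statements."""
    token = base64.b64encode(f"{key}:{secret}".encode()).decode()
    return urllib.request.Request(
        lrs_endpoint.rstrip("/") + "/statements",
        data=json.dumps(statement).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "X-Experience-API-Version": "1.0.3",
            "Authorization": f"Basic {token}",
        },
        method="POST",
    )
```

Sending would be a matter of passing the prepared request to `urllib.request.urlopen`; keeping construction separate makes the statement format easy to inspect before any LRS is involved.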

3.3.2 Implementation of Attention Monitoring

The eye-tracking functionality integrated into the Meta Quest Pro VR hardware was used to monitor users’ attention. This feature estimates user attention during use of the immersive scenarios through targets of interest, defined within the user’s field of view, that serve as references for data collection. These targets are used to determine which position in the 3D VR simulation the participant is focusing on, using the Eye Gaze API available in the Meta Movement SDK³.

The API exposes the movement of each eye as captured by the sensors of the Meta Quest Pro. Based on these movements, a raycast (a line projected from the eyes) detects collisions with solid objects, in this case the predefined targets in the immersive environment. By identifying which targets are hit, it becomes possible to estimate how long the user focused on key elements of the training. Together with the other technologies, this allows the instructor to evaluate whether the user directed their attention where required during training.

¹ xAPI TinCan C#: https://rusticisoftware.github.io/TinCan.NET/
² SQL LRS: https://www.yetanalytics.com/sql-lrs
³ Movement SDK: https://developer.oculus.com/documentation/unity/move-overview/
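The raycast-and-dwell idea can be sketched outside Unity. The snippet below, assuming spherical stand-ins for the target colliders and gaze samples given as (timestamp, eye origin, gaze direction), finds the nearest target hit by each gaze ray with a ray-sphere intersection test and credits that target with the time until the next sample. It does not use the Meta Movement SDK; the target names and geometry are hypothetical.

```python
from collections import defaultdict

import numpy as np


def ray_hits_sphere(origin, direction, center, radius):
    """Distance along the gaze ray to a spherical target, or None if missed.

    Solves |o + t*d - c|^2 = r^2 for t with d normalized to unit length.
    """
    o = np.asarray(origin, dtype=float)
    d = np.asarray(direction, dtype=float)
    d = d / np.linalg.norm(d)
    oc = o - np.asarray(center, dtype=float)
    b = np.dot(oc, d)
    c = np.dot(oc, oc) - radius ** 2
    disc = b * b - c
    if disc < 0:
        return None
    t = -b - np.sqrt(disc)
    if t < 0:                      # origin inside the sphere: use the far hit
        t = -b + np.sqrt(disc)
    return float(t) if t >= 0 else None


def gaze_target(origin, direction, targets):
    """Nearest target hit by the gaze ray; targets maps name -> (center, radius)."""
    best, best_t = None, np.inf
    for name, (center, radius) in targets.items():
        t = ray_hits_sphere(origin, direction, center, radius)
        if t is not None and t < best_t:
            best, best_t = name, t
    return best


def dwell_times(samples, targets):
    """Seconds of gaze per target from (timestamp_s, origin, direction) samples.

    Each sample's target is credited with the interval until the next sample,
    so the last sample only closes the previous interval.
    """
    totals = defaultdict(float)
    for (t0, origin, direction), (t1, _, _) in zip(samples, samples[1:]):
        hit = gaze_target(origin, direction, targets)
        if hit is not None:
            totals[hit] += t1 - t0
    return dict(totals)
```

Comparing these dwell times with the targets a training step requires is one way to quantify whether attention went where it was needed.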

3.3.3 Recording EEG Data During the Experiment

EEG data collection during use of the immersive scenarios was conducted using the non-invasive Unicorn Hybrid Black BCI device⁴. This device was selected due to the wide range of libraries available in various programming languages (for both data collection and processing) and its ready-to-use integration library for Unity.

The Unicorn Hybrid Black allows EEG data to be recorded, visualized, and exported in common formats such as CSV. It also includes native high-pass and low-pass filters, as well as feedback on the signal quality of each electrode. Among the applications in the device’s software suite is Unicorn Bandpower, which provides a real-time view of brain waves at the eight electrode positions on the head and continuously estimates the power in the Delta (1–4 Hz), Theta (4–8 Hz), Alpha (8–12 Hz), Low Beta (12–16 Hz), Mid Beta (16–20 Hz), High Beta (20–30 Hz), and Gamma (30–50 Hz) bands.
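As a rough offline counterpart to Unicorn Bandpower, the sketch below computes per-channel band power from a Hann-windowed periodogram for an eight-channel recording, using the band limits listed above with Alpha taken as 8–12 Hz. The 250 Hz default sampling rate is an assumption about the device, and the estimator is a simple stand-in, not the algorithm used by the vendor's software.

```python
import numpy as np

# Band limits in Hz (lower edge inclusive, upper edge exclusive).
BANDS = {
    "delta": (1, 4), "theta": (4, 8), "alpha": (8, 12),
    "low_beta": (12, 16), "mid_beta": (16, 20), "high_beta": (20, 30),
    "gamma": (30, 50),
}


def band_powers(eeg, fs=250.0, bands=BANDS):
    """Per-channel band power from a Hann-windowed periodogram.

    eeg: array (n_channels, n_samples) in microvolts.
    Returns {band: array(n_channels)}. One-sided doubling is omitted, so
    values are proportional to (not equal to) absolute band power.
    """
    eeg = np.asarray(eeg, dtype=float)
    eeg = eeg - eeg.mean(axis=1, keepdims=True)          # remove per-channel offset
    window = np.hanning(eeg.shape[1])
    spec = np.abs(np.fft.rfft(eeg * window, axis=1)) ** 2
    spec /= fs * np.sum(window ** 2)                      # density-style scaling
    freqs = np.fft.rfftfreq(eeg.shape[1], d=1.0 / fs)
    df = freqs[1] - freqs[0]
    return {
        name: spec[:, (freqs >= lo) & (freqs < hi)].sum(axis=1) * df
        for name, (lo, hi) in bands.items()
    }
```

Tracking, for instance, `band_powers(...)["theta"]` and `["alpha"]` over successive windows would give the per-electrode trend that the fatigue analysis relies on.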

For subsequent EEG data analysis, signal processing techniques will be applied using open-source libraries such as BrainFlow⁵ and MNE-Python⁶. BrainFlow provides a uniform API for various devices, including the Unicorn Hybrid Black, in multiple programming languages (e.g., C++, Python, Rust, and JavaScript), while MNE-Python offers tools for processing and analyzing EEG data in Python.

3.3.4 Recording Personal Experiences after the Experiment

The Chalder Fatigue Questionnaire, adapted for Brazilian Portuguese by (Cho et al., 2007), will be used to document participants’ experiences of physical and mental fatigue during the immersive scenarios. This questionnaire will provide critical information on participants’ perceptions of fatigue, complementing the analysis of the collected brain data. The complete questionnaire is included in the Appendix.
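A minimal scoring sketch, assuming the 11-item version of the questionnaire (items 1 to 7 on physical fatigue, items 8 to 11 on mental fatigue) with each answer already coded 0 to 3 from least to most fatigue. It returns both the Likert total (0 to 33) and the bimodal total (0 to 11, counting answers coded 2 or 3), two scoring schemes commonly reported for the CFQ; the item split should be checked against the adapted version actually administered.

```python
PHYSICAL_ITEMS = range(0, 7)   # items 1-7 (zero-based indices)
MENTAL_ITEMS = range(7, 11)    # items 8-11


def score_cfq(answers):
    """Score an 11-item Chalder Fatigue Questionnaire.

    answers: 11 integers coded 0..3, higher meaning more fatigue.
    Returns Likert and bimodal totals plus physical and mental Likert subscales.
    """
    if len(answers) != 11 or any(a not in (0, 1, 2, 3) for a in answers):
        raise ValueError("expected 11 answers coded 0-3")
    return {
        "likert_total": sum(answers),
        "bimodal_total": sum(1 for a in answers if a >= 2),
        "physical": sum(answers[i] for i in PHYSICAL_ITEMS),
        "mental": sum(answers[i] for i in MENTAL_ITEMS),
    }
```

Reporting the physical and mental subscales separately matches the framework's distinction between physical and mental fatigue.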

4 EXPERIMENTAL DESIGN

The proposed experimental design involves several well-defined stages, starting with a pre-test phase conducted by the authors to adjust the duration of the experiment. A group of volunteer participants will then be formed, after approval is obtained from the university’s Ethics Committee.

⁴ Unicorn BCI: https://www.unicorn-bi.com/
⁵ BrainFlow: https://brainflow.org/features/
⁶ MNE-Python: https://mne.tools/stable/index.html

Before beginning the experiment, participants will be briefed on the procedures and given the Informed Consent Form (ICF), which details the data to be collected and the experiments to be conducted. An initial familiarization stage will be carried out, particularly for participants with no prior experience with the technology.

During the experiment, data will be collected within the developed scenarios using the technologies described in the previous sections. EEG data collected by the BCI will be synchronized with the reaction times and response data recorded through xAPI, enabling a correlational analysis between the duration of the experiment, participants’ focus, and their reactions. Eye tracking will also supply data on participants’ visual behavior. Furthermore, the Chalder Fatigue Questionnaire (CFQ) will be administered to assess participants’ own perceptions of their mental and physical fatigue during the experiment, providing additional insight into the effectiveness of the VR scenarios.
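Synchronizing the streams amounts to mapping each xAPI event timestamp onto the EEG sample axis and cutting a window around it. The sketch below assumes that both streams are timestamped by the same clock and that the EEG recording start time and sampling rate are known; the window lengths and function names are illustrative assumptions.

```python
from datetime import datetime


def event_sample_index(event_iso, eeg_start_iso, fs):
    """Index of the EEG sample nearest to an event timestamp.

    Both timestamps are ISO 8601 strings from the same clock; offsets such as
    '+00:00' are accepted (a trailing 'Z' needs Python 3.11+).
    """
    elapsed = datetime.fromisoformat(event_iso) - datetime.fromisoformat(eeg_start_iso)
    return round(elapsed.total_seconds() * fs)


def extract_epoch(eeg, event_iso, eeg_start_iso, fs, pre_s=0.5, post_s=1.5):
    """Samples from pre_s before to post_s after the event, for every channel.

    eeg: sequence of channels, each a sequence of samples.
    """
    center = event_sample_index(event_iso, eeg_start_iso, fs)
    start, stop = center - round(pre_s * fs), center + round(post_s * fs)
    if start < 0 or stop > len(eeg[0]):
        raise ValueError("epoch falls outside the recording")
    return [channel[start:stop] for channel in eeg]
```

Each xAPI statement's `timestamp` could serve as `event_iso`, so that brain activity around every recorded reaction can be compared across the session.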

5 RESULTS AND CONCLUSIONS

This work proposes a VR-BCI-based system to evaluate the effects of immersive technologies on industrial operators. It also aims to fill a significant research gap regarding training in immersive environments capable of inducing behavioral changes, as recommended by the Kirkpatrick Model.

The theoretical basis of this work is the set of challenges faced by the Operator 4.0 in industry, who must know and master modern technologies such as Virtual Reality, Cybersecurity, and the Internet of Things. These and other disruptive technologies give the Operator 4.0 intelligent tools to improve day-to-day operations and practices.

Thus, this work proposes a framework that integrates VR, BCI, xAPI, and eye-tracking technologies.

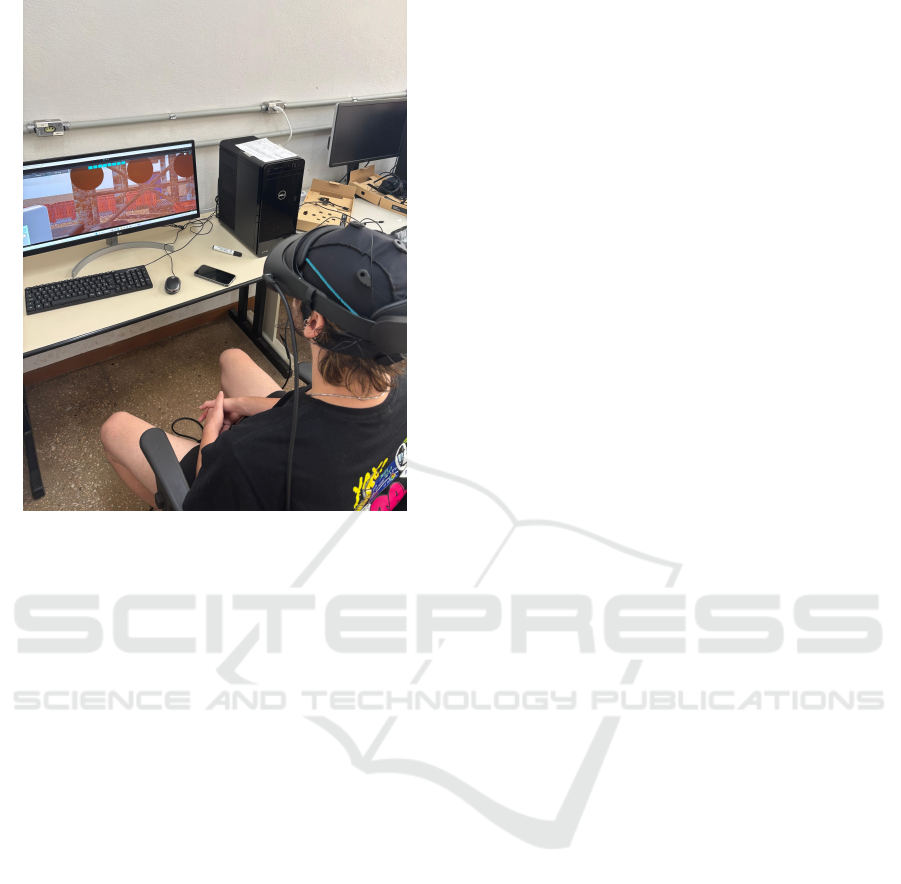

Figure 2 displays the working version of the proposed system. As shown, the proposed framework is fully integrated and ready for user tests. The next stage is the execution of user experience tests, which have already been approved by the institution’s ethics committee and are scheduled to begin in later stages of this research.

Figure 2: Proposed VR-BCI based system working in a virtual scenario of an industrial process.

6 GENERATIVE AI USAGE

The authors state that generative AI was only used for translation and proof-reading. All presented text is originally written by the authors.

ACKNOWLEDGEMENTS

This study was financed in part by the Coordenação de Aperfeiçoamento de Pessoal de Nível Superior - Brasil (CAPES) - Finance Code 001, the Conselho Nacional de Desenvolvimento Científico e Tecnológico (CNPQ) financing code 306101/2021-1, FAPEMIG financing code APQ-00890-23, the Instituto Tecnológico Vale (ITV) and the Universidade Federal de Ouro Preto (UFOP).

REFERENCES

Adhanom, I. B., MacNeilage, P., and Folmer, E. (2023). Correction to: Eye tracking in virtual reality: a broad review of applications and challenges. Virtual Reality, 27(2):1569–1570.

Cao, T., Wan, F., Wong, C., da Cruz, J., and Hu, Y. (2014). Objective evaluation of fatigue by EEG spectral analysis in steady-state visual evoked potential-based brain-computer interfaces. Biomedical Engineering Online, 13:28.

Cella, M. and Chalder, T. (2010). Measuring fatigue in clinical and community settings. Journal of Psychosomatic Research, 69(1):17–22.

Cho, H. J., Costa, E., Menezes, P. R., Chalder, T., Bhugra, D., and Wessely, S. (2007). Cross-cultural validation of the Chalder Fatigue Questionnaire in Brazilian primary care. Journal of Psychosomatic Research, 62(3):301–304.

Clay, V., König, P., and Koenig, S. (2019). Eye tracking in virtual reality. Journal of Eye Movement Research, 12.

Douibi, K., Le Bars, S., Lemontey, A., Nag, L., Balp, R., and Breda, G. (2021). Toward EEG-based BCI applications for Industry 4.0: Challenges and possible applications. Frontiers in Human Neuroscience, 15:705064.

Farella, M., Arrigo, M., Chiazzese, G., Tosto, C., Seta, L., and Taibi, D. (2021). Integrating xAPI in AR applications for positive behaviour intervention and support. In 2021 International Conference on Advanced Learning Technologies (ICALT), pages 406–408. Tartu, Estonia.

Grabowski, A. and Jankowski, J. (2015). Virtual reality-based pilot training for underground coal miners. Safety Science, 72:310–314.

Gumilar, I., Sareen, E., Bell, R., Stone, A., Hayati, A., Mao, J., Barde, A., Gupta, A., Dey, A., Lee, G., et al. (2021). A comparative study on inter-brain synchrony in real and virtual environments using hyperscanning. Computers & Graphics, 94:62–75.

Guo, Z., Zhou, D., Zhou, Q., Zhang, X., Geng, J., Zeng, S., Lv, C., and Hao, A. (2020). Applications of virtual reality in maintenance during the industrial product lifecycle: A systematic review. Journal of Manufacturing Systems, 56:525–538.

ISAR, C. (2018). A Glance into Virtual Reality Development Using Unity. Informatica Economica, 22(3):14–22.

Janapati, R., Dalal, V., and Sengupta, R. (2023). Advances in modern EEG-BCI signal processing: A review. Materials Today: Proceedings, 80:2563–2566.

Jang, J. Y. (2023). Analyzing visual behavior of consumers in a virtual reality fashion store using eye tracking. Fashion and Textiles, 10(1):24.

Jebb, A. T., Ng, V., and Tay, L. (2021). A review of key Likert scale development advances: 1995–2019. Frontiers in Psychology, 12.

Kirkpatrick, D. L. and Craig, R. (1970). Evaluation of training. Evaluation of short-term training in rehabilitation, page 35.

Kober, S. E. and Neuper, C. (2012). Using auditory event-related EEG potentials to assess presence in virtual reality. International Journal of Human-Computer Studies, 70(9):577–587.

Kuang, Y. and Bai, X. (2018). The research of virtual reality scene modeling based on Unity 3D. In 2018 13th International Conference on Computer Science & Education (ICCSE), pages 611–613.


Lanier, J. and Biocca, F. (1992). An insider’s view of the

future of virtual reality. Journal of Communication,

42(4):150–172.

Leng, J., Sha, W., Wang, B., Zheng, P., Zhuang, C., Liu, Q., Wuest, T., Mourtzis, D., and Wang, L. (2022). Industry 5.0: Prospect and retrospect. Journal of Manufacturing Systems, 65:279–295.

Nguyen, V. T. and Dang, T. (2017). Setting up virtual reality and augmented reality learning environment in Unity. In Adjunct Proceedings of the 2017 IEEE International Symposium on Mixed and Augmented Reality, ISMAR-Adjunct 2017, pages 315–320. Institute of Electrical and Electronics Engineers Inc.

Nouira, A., Cheniti-Belcadhi, L., and Braham, R. (2018). An enhanced xAPI data model supporting assessment analytics. Procedia Computer Science, 126:566–575. Knowledge-Based and Intelligent Information and Engineering Systems: Proceedings of the 22nd International Conference, KES-2018, Belgrade, Serbia.

Paszkiewicz, A., Salach, M., Dymora, P., Bolanowski, M., Budzik, G., and Kubiak, P. (2021). Methodology of implementing virtual reality in education for Industry 4.0. Sustainability, 13(9).

Pedram, S., Perez, P., Palmisano, S., and Farrelly, M. (2017). Evaluating 360-virtual reality for mining industry’s safety training. In HCI International 2017–Posters’ Extended Abstracts: 19th International Conference, HCI International 2017, Vancouver, BC, Canada, July 9–14, 2017, Proceedings, Part I 19, volume 713, pages 555–561. Springer Verlag.

Pooladvand, S., Chang, W.-C., and Hasanzadeh, S. (2024). Identifying at-risk workers using fNIRS-based mental load classification: A mixed reality study. Automation in Construction, 164:105453.

Porr, B. and Bohollo, L. M. (2022). BCI-Walls: A robust methodology to predict success or failure in brain computer interfaces. arXiv preprint arXiv:2210.16939.

Pottle, J. (2019). Virtual reality and the transformation of medical education. Future Healthcare Journal, 6(3):181.

Rashid, M., Sulaiman, N., P. P. Abdul Majeed, A., Musa, R. M., Ab. Nasir, A. F., Bari, B. S., and Khatun, S. (2020). Current status, challenges, and possible solutions of EEG-based brain-computer interface: A comprehensive review. Frontiers in Neurorobotics, 14.

Schardosim Simao, J. P., Mellos Carlos, L., Saliah-Hassane, H., Da Silva, J. B., and Da Mota Alves, J. B. (2018). Model for recording learning experience data from remote laboratories using xAPI. In Proceedings - 13th Latin American Conference on Learning Technologies, LACLO 2018, pages 450–457.

Scorgie, D., Feng, Z., Paes, D., Parisi, F., Yiu, T. W., and Lovreglio, R. (2024). Virtual reality for safety training: A systematic literature review and meta-analysis.

Slater, M., Banakou, D., Beacco, A., Gallego, J., Macia-Varela, F., and Oliva, R. (2022). A separate reality: An update on place illusion and plausibility in virtual reality. Frontiers in Virtual Reality, 3.

Slater, M., Usoh, M., and Steed, A. (1994). Depth of presence in virtual environments. Presence: Teleoperators & Virtual Environments, 3:130–144.

Stefan, H., Mortimer, M., and Horan, B. (2023). Evaluating the effectiveness of virtual reality for safety-relevant training: a systematic review. Virtual Reality, 27(4):2839–2869.

Teodoro, P. N. B., Cardoso, A., and Ramos, D. C. (2023). Combinação do framework 70-20-10 com Kirkpatrick model para construção e avaliação de treinamentos apoiados por realidade virtual [Combining the 70-20-10 framework with the Kirkpatrick model to build and evaluate virtual-reality-supported training]. Revista Científica Universitas, 10(1):73–87.

Thorvald, P., Fast Berglund, Å., and Romero, D. (2021). The cognitive operator 4.0. In 18th International Conference on Manufacturing Research, ICMR 2021, incorporating the 35th National Conference on Manufacturing Research, 7–10 September 2021, University of Derby, Derby, UK, volume 15, pages 3–8. IOS Press.

Toppi, J., Borghini, G., Petti, M., He, E. J., De Giusti, V., He, B., Astolfi, L., and Babiloni, F. (2016). Investigating cooperative behavior in ecological settings: an EEG hyperscanning study. PLoS ONE, 11(4):e0154236.

Vidal, J. C., Rabelo, T., and Lama, M. (2015). Semantic description of the Experience API specification. In Proceedings - IEEE 15th International Conference on Advanced Learning Technologies: Advanced Technologies for Supporting Open Access to Formal and Informal Learning, ICALT 2015, pages 268–269.

Villani, V., Gabbi, M., and Sabattini, L. (2022). Promoting operator’s wellbeing in Industry 5.0: detecting mental and physical fatigue. In 2022 IEEE International Conference on Systems, Man, and Cybernetics (SMC), pages 2030–2036.

Viol, R. S., Fernandes, P. C., Lacerda, I. I., Melo, K. G. F., Nunes, A. J. D. A., Pimenta, A. S. G. a. M., Carneiro, C. M., Silva, M. C., and Delabrida, S. (2024). EduVR: Towards an evaluation platform for user interactions in personalized virtual reality learning environments. In Proceedings of the XXII Brazilian Symposium on Human Factors in Computing Systems, IHC ’23, New York, NY, USA. Association for Computing Machinery.

Werbińska-Wojciechowska, S. and Winiarska, K. (2023). Maintenance performance in the age of Industry 4.0: A bibliometric performance analysis and a systematic literature review.

Yadav, D., Yadav, S., and Veer, K. (2020). A comprehensive assessment of brain computer interfaces: Recent trends and challenges. Journal of Neuroscience Methods.

