Face the Music: Summarizing Unscripted Music Practice from Audio

Christopner Raphael

Indiana University, Bloomington, U.S.A.

Keywords:

Music Practice, Dynamic Programming, Visualization, Switching Kalman Filter, Summarization.

Abstract:

We present ongoing work in developing a system to support instrumental practice in which a students plays

from a score but can move freely within the score, as is typical of score-based music practice. Our system

develops a correspondence between the practice audio and the score, partitioning the audio into a collection of

score-aligned excerpts using dynamic programming. We examine several offline approaches to help interpret

or summarize the practice audio. One is a tool that allows score-driven browsing of the audio. We also

look at several score-based visualization tools that highlight aspects of the practice data. Finally we develop

a technique that assembles an “optimal” audio performance from the score-aligned fragments, seeking an

assembly that is rhythmically most plausible according to a simple probabilistic model for musical timing.

1 INTRODUCTION

Music instruction systems provide support for instru-

mental music practice by identifying errors or inaccu-

racies, providing guidance, and, perhaps, even offer-

ing a kind of companionship during music practice.

These systems hold promise for making the reward-

ing, lifelong activity of music-making more broadly

available, increasing the individual attention received

by music students.

These systems can sense the student’s actions with

a variety of kinds of data, such as audio, video,

MIDI, haptic, etc., though we use audio due to our

focus on traditional musical instruments, such as

strings, woodwinds, brass, and piano, where other

data sources are not available.

Such instruction systems are often organized

around a call and response paradigm: the practicing

student is presented with a series of short score pas-

sages which are played by the student and, in turn,

evaluated the system. The commercial system Yousi-

cian (Yousician, 2022) as well as (Fober et al., 2004),

(Dannenberg et al., 1990), and (Zhang et al., 2019) are

all examples of such call and response approaches.

Of course, this paradigm is familiar, and often effec-

tive, in the larger computer supported education space

as well.

The evaluation of the passage allows the instruc-

tional system to determine if the presented challenge

has been met, thus influencing the choice of the next

passage — perhaps a repeat of the most recent one.

Thus the overall loop of call, response, and evalua-

tion becomes the basic structure of the system. The

call and response paradigm simplifies the evaluation

process since it is much easier to judge accuracy when

the system “knows” what the student intended to play.

However, it also decreases the agency of the student,

requiring the student to follow the practice regimen

presented by the system, rather than allowing a stu-

dent to direct the practice session. Evidence suggests

that taking “ownership” of the the practice session, as

well as the learning process more generally, is impor-

tant for long term success (Coutts, 2019).

Rather than requiring a music student to fit into

what is easiest for the computer, we explore an ap-

proach that works with the way music students natu-

rally practice. In typical score-based music practice

a student plays from a score, jumping around in the

score at will. Often short sections are practiced re-

peatedly, gradually moving forward through the score

in an attempt to build fluidity through a larger section.

Though, occasionally, the student may skip from one

section of the score to another. Sometimes a student

may even depart from the score temporarily to prac-

tice an exercise derived from a particular passage. It

is this “unscripted” score-based practice we address

here, assuming only that most of the audio played

during the session comes from the score, as is com-

mon in a broad range of practice scenarios. We de-

velop an audio recognition technique, a variant of tra-

ditional audio score alignment, that maps the audio

onto the music score as a sequence of “excerpts” —

Raphael, C.

Face the Music: Summarizing Unscripted Music Practice from Audio.

DOI: 10.5220/0013493800003932

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 17th International Conference on Computer Supported Education (CSEDU 2025) - Volume 1, pages 685-691

ISBN: 978-989-758-746-7; ISSN: 2184-5026

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

685

Transport

Notes

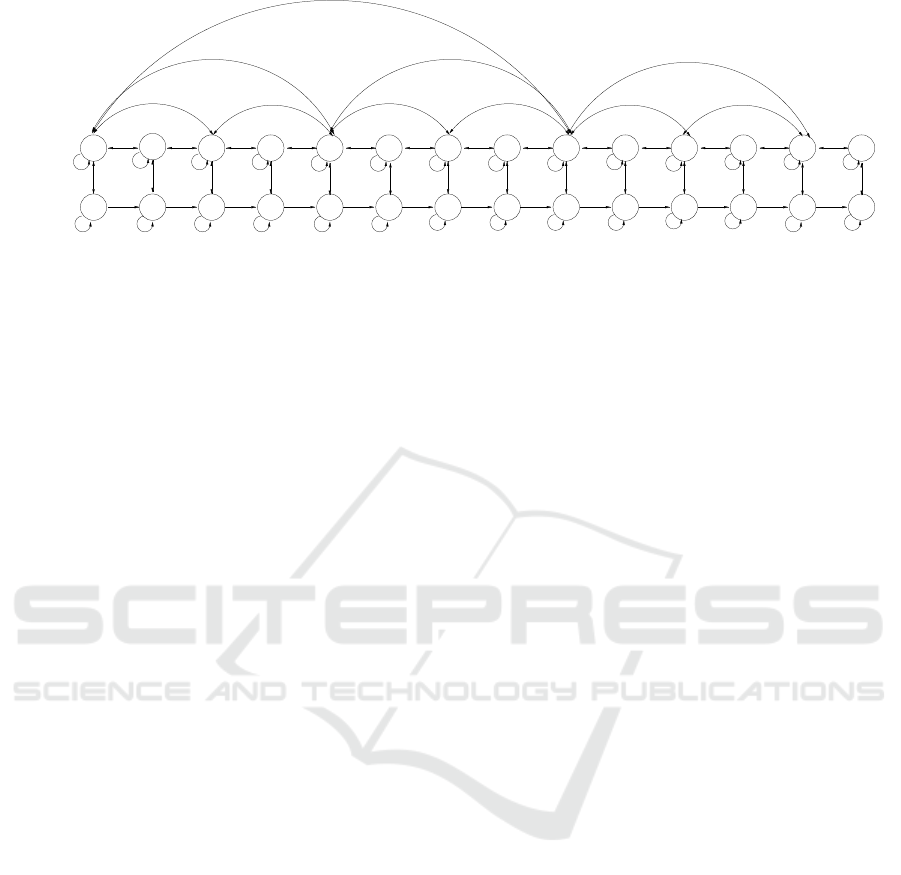

Figure 1: We associated the frame sequence with a path in the score graph, above, thus mapping the audio onto the score.

time-labeled sequences of score notes.

In the current work we limit our attention to the

“offline” version of unscripted score based practice in

which we analyze a recorded practice session after it

has been recorded. Offline analysis is the basis for

our computer-supported review of music practice, as

well as practice summary. In contrast, “online” score

following seeks to understand the practice audio as

it is generated, as would be necessary to offer real-

time support. As we seek to build actual systems and

get them into the hands of practicing music students,

we are interested in both offline and online analysis,

however the online aspect is beyond the scope of the

current discussion.

We describe the essential methodology behind the

offline score alignment in Section 2. Once we have

the alignment we seek meaningful ways to browse the

practice audio, and summarize what is important for

the student to know, presenting our results in accessi-

ble visualizations. Given the centrality of the score to

much of music practice, and to our statement of score

alignment problem, it makes sense to leverage the mu-

sic score in our student feedback. Section 3 sketches

a score-based practice browser that allows the student

to explore the practice in an easy and intuitive self-

directed manner, as well as several score-based visual

summaries of the practice. Section 4 considers the

problem of assembling an “optimal” complete perfor-

mance from the practice session. Section 5 provides

some larger context for the current work and discusses

developing ideas.

2 OFFLINE SCORE ALIGNMENT

Here we present briefly our approach to audio-score

alignment for unscripted score-based music practice.

Score following (online) and score alignment (of-

fline) were simultaneously proposed in 1984 by Dan-

nenberg (Dannenberg, 1984) and Vercoe (Vercoe,

1984). Many variations on score alignment have

been proposed, including adaptations for polyphonic

music (Hu et al., 2003), audio alignment with im-

ages of printed scores (Dorfer et al., 2018), the si-

multaneous identification tempo or other latent vari-

ables (Raphael, 2006), and audio-to-audio matching

(M

¨

uller et al., 2005). A common methodological

theme runs through nearly all of this work: the perfor-

mance is represented as a path through a state graph

in which nodes represent score positions, while dy-

namic programming (DP) is used to find the best in-

terpretation given the audio data. We use the term

“dynamic programming” in a general sense to in-

clude both most likely path and filtering computa-

tions (as with an HMM). An overview of the score

alignment and following can be found in (Dannenberg

and Raphael, 2006), while a thorough history of the

methodological ideas is given in (Cuvillier, 2016).

Our particular focus is on score alignment that al-

lows the player to “jump around” in the score, as is

typical in most realistic practice scenarios. Restrictive

versions of this idea were first proposed in (Pardo and

Birmingham, 2005) and (Fremery et al., 2010) con-

sidering possible jumps to and from important struc-

tural boundaries. The scenario involving arbitrary

jumps was first proposed by Nakamura and Sagayama

(Nakamura et al., 2015), while our modeling frame-

work is similar while (Jiang et al., 2019) compares

and contrasts the approaches.

We begin with an audio recording of a music prac-

tice session, typically about 20 minutes in length in

our experiments. The recording is divided into a se-

quence of “frames” of about 30 ms. each, y

1

, . . . , y

T

.

Our approach to score alignment views the music

score as a sequence of notes, without regard for the

notated lengths of these notes. If we are treating a

polyphonic instrument, such as the piano, then the

score can be regarded as a sequence of chords, with

a new chord appearing when any note of any voice

changes. We relate the audio to the score by mod-

eling the y

1

, . . . , y

T

as a path through the state graph

of Figure 1, x

1

, . . . , x

T

— one state for each frame of

CSME 2025 - 6th International Special Session on Computer Supported Music Education

686

audio. The graph explicitly models the possibility of

skips in the score.

The lower level of the graph, labeled “Notes” in

Figure 1, depicts the notes (or chords) of the score,

connected in left-to-right order, as indicated by the

right arrows that connect this level. Since the number

of frames the player spends in each note is unknown,

each of these states, and all other states in the graph,

contains a self-loop — we can remain in a state for

any number of frames. In reality each of the states in

the note layer are actually sub-models involving sev-

eral states, however this is omitted from Figure 1 for

simplicity’s sake.

The upper layer of Figure 1, labeled “Transport,”

models the player’s score skips: from any note in the

score one may move to the Transport layer, moving

in this layer to the next score note to be played. The

curved arcs in Figure 1 allow transitions in the Trans-

port layer that move several, or many, states to the

left or right, thus allowing long score skips spanning

many notes in a single frame. A probability distribu-

tion over the arcs that exit each state model our pref-

erence for transitions, favoring linear motion through

the Notes layer over skips, local skips over distant

ones, and backward skips over forward skips. Thus,

x

1

, . . . , x

T

is modeled as a Markov chain.

Our data model computes the probability of a

frame of audio given a particular model state, P(y

t

|x

t

)

with details presented in (Jiang et al., 2019). Implicit

in the notation is the assumption that the tth frame of

audio, y

t

, depends only on the current state, x

t

. While

we omit the details here, if x

t

is a note or chord in the

Notes layer the probability model depends on the as-

sociated pitches of the note or chord. If x

t

is in the

Transport layer we use a rest model for the audio, (we

assume no notes are currently sounding).

The result of these assumptions is a hidden

Markov model. We interpret the audio by identify-

ing the most likely sequence of states given the audio

data

ˆx

T

1

= argmax

x

T

1

P(x

T

1

|y

T

1

)

= argmax

x

T

1

P(x

T

1

)P(y

T

1

|x

T

1

)

= arg max

x

1

,...,x

T

P(x

1

)

T

∏

t=2

P(x

t

|x

t−1

)

T

∏

t=1

P(y

t

|x

t

)

The dynamic programming computation of the most

likely path, ˆx

T

1

is well known, e.g. (Rabiner, 1989),

and is omitted here. The most likely path can be par-

titioned into intervals, ˆx

hi(e)

lo(e)

, for e = 1 . . . , E that lie

completely in the Notes layer separated by intervals

that lie completely in the Transport layer. Each ˆx

hi(e)

lo(e)

,

becomes an excerpt in our interpretation of the au-

dio, identifying a sequence of score notes that were

played as well as the onset frame for each note in the

sequence.

0 20 40 60 80 100

0 50 100 150

Practice Overview

measure

excerpt #

Figure 2: Each horizontal segment shows the range of notes

covered by an excerpt for the first 150 excerpts of a practice

session. Aspects of the practice strategy are evident in this

simple overview.

Figure 2 gives an example of this distillation of

the audio into excerpts, showing the first 150 excerpts

in a practice session. From this simple analysis one

gets an overview of the practice strategy employed by

this particular student, beginning by playing through

the entire etude in long sections over the first several

excerpts, followed by a focus on shorter sections with

lots of repetition, gradually moving forward through

the current section.

(Jiang et al., 2019) evaluates the accuracy of this

approach on a small sample, showing promising re-

sults on the test set. However, there is a great

deal of variation in practice data, while the most

straightforward ways of collecting labeled data are

prohibitively time-consuming. Using synthetic data

may offer some clarity, though this approach must

make assumptions concerning the generation mech-

anism which are bound to be simplistic. Thus there

is more work to be done in validating the accuracy of

our proposed method.

3 REVIEWING PRACTICE

A former teacher impressed on his students the im-

portance of “facing the music,” by which he meant

Face the Music: Summarizing Unscripted Music Practice from Audio

687

Figure 3: Left: The coverage of the rehearsal is shown by tinting the note heads. Brighter notes were played more frequently.

Right: Tuning expressed by coloring note heads. Red notes are sharp while blue notes are flat in relation to equal A=440

tempered tuning.

listening to recordings of our playing. Such listening

is important because we often don’t hear ourselves

objectively in real-time, (Silveira J. M., 2016). Per-

haps this is because listening is a matter of habit —

we hear what we listen for, especially when so much

cognitive bandwidth is directed toward the mechanics

of playing. However, listening to a recording offline

— facing the music — has a way of breaking this at-

tention habit, making clear what we otherwise miss.

Our user interface, discussed here, seeks to facilitate

this directed listening.

The music score is usually the focal point dur-

ing score-based practice, thus we also orient our di-

rected listening around the score. Our interface al-

lows one to navigate through the practice session at

the note level, either using arrow keys to move for-

ward/backward while highlighting the current score

note with a box around the note — our “cursor.” Play-

back can be initiated from the cursor position at any

time. Alternatively, the user can click on a score note

to jump to the associated score and playback position

in the recorded audio. In contrast, a traditional audio

browser requires the user to search for musical events

in order to hear them.

Simply holding down the forward arrow key in

our interface advances through the score at the key-

board repeat rate, providing a movie-like summary of

the sequence of notes visited during practice, analo-

gous to Figure 2, as shown here. A more fine-grained

review process is shown here, where the user inter-

actively explores the practice clicking on notes that

deserve further review. One can imagine a produc-

tive exchange between a teacher and student oriented

around such an interactive tool, allowing the teacher

to observe and explore the student’s practice itself,

rather than just the final result.

The score also works well as a basis for static visu-

alization, much as a geographical map is an effective

reference for spatial data (Hogr

¨

afer et al., 2020). The

left panel of Figure 3 shows a score page where each

note is colored according to how many times it was

visited in the practice session. In the image a black

note would mean the note was never played while a

bright blue was played the most. One can see both re-

gions that were virtually untouched, as well as those

receiving special attention.

The right panel of Figure 3 shows an analogous

depiction of tuning, where a blue note is one that is

flat while a red note is sharp. We make these de-

terminations by estimating the frequency difference

between the A=440 equal-tempered target frequency

and the average frequency for the note, measured in

cents. We highlight the note when the discrepancy is

greater than a user-adjustable threshold. The refer-

ence tuning level of A=440 can also be adjusted. In

creating such tuning maps one can either consider ag-

gregate tuning, averaged over all of the excerpts in the

rehearsal, or a more targeted choice of tuning — say

CSME 2025 - 6th International Special Session on Computer Supported Music Education

688

the most recently played notes.

Of course there are other aspects of the rehearsal

that can be visualized in this way, such as rhythmic

accuracy, while one can combine the note tinting with

interactive browsing.

4 ASSEMBLING AN OPTIMAL

PERFORMANCE

Notes

Excerpts

32k=1

...

e=1

2

3

4

5

6

Figure 4: A schematic view of the assembly problem. We

seek to link together portions of the excerpts to make the

best overall performance.

In addition to having visual summaries of prac-

tice data, audio summaries are also helpful. In this

section we discuss a method for generating a single

“optimal” performance produced by assembling the

audio fragments generated during practice. Such a

recording could be shared with a teacher as a repre-

sentative example of the level attained by the student

(at her best). Or it may be useful to the student as

a distillation of the entire practice session (or several

sessions), reducing the data to a manageable quantity.

Our approach for performance assembly is based

on rhythm analysis, so, unlike Section 2, we need to

consider notated rhythm. We assume that our score is

composed of K notes with notated lengths l

1

, . . . , l

K

in

whole note units — that is, if note k is a quarter note

then l

k

= 1/4, regardless of the time signature. The

analysis of this section applies equally well to poly-

phonic scores, such as with piano practice, in which

l

k

describes the length of the kth chord, taking a ho-

mophonic (sequence of pitch simultaneities) view of

the score.

We begin with a collection of excerpts, as in Fig-

ure 2, from which we wish to assemble an “ideal”

complete performance by piecing together note se-

quences, schematically depicted in Figure 4. At

present we consider only rhythmic fluidity in as-

sembling this performance, though pitch accuracy or

other performance elements could easily be included

into our formulation. Thus, in short, we seek the path

through the excerpt notes of Figure 4, that is most

rhythmically fluid, linking these note sequences to-

gether to form the optimal performance.

Our measure for rhythmic fluidity is based on a

probabilistic model for musical timing that uses a

latent tempo process t

1

, . . . ,t

K

where t

k

is the local

tempo at note k measured in seconds per whole note.

The onset times for the complete performance we will

construct are given by o

1

, . . . , o

K

, measured in sec-

onds. The joint structure of these variables is modeled

by a joint Gaussian distribution, modelling the ini-

tial tempo, t

1

, as t

1

∼ N(µ

t

, σ

2

1

) where µ

t

is the tempo

given in the score, expressed in secs per whole note,

and letting the initial onset, o

1

, have o

1

∼ N(0, τ

2

1

)

where τ

2

1

is a nearly infinite variance — we do not care

when the sequence begins. The process then evolves

according to

t

k+1

= t

k

+ ε

t

k

o

k+1

= o

k

+ l

k

t

k

+ ε

o

k

for k = 1, . . . , K − 1 where the {ε

t

k

} are N(0, l

2

k

σ

2

)

variables, the {ε

o

k

} are N(0, l

2

k

τ

2

), with the variables

{ε

t

k

, ε

o

k

}

K−1

k=1

,t

1

, o

1

assumed mutually independent.

The 0-mean and small variances of the {ε

t

k

} lead

to a smoothly varying tempo process, which is rea-

sonable since we want the tempo to be stable within

and between our assembled fragments. In a situation

where a known tempo change occurs in the score, we

could easily allow a “reset” of the tempo process. The

kth note has length o

k+1

−o

k

, which, according to our

model, has mean length l

k

t

k

— this is the length that

would be predicted purely based on the tempo. How-

ever the model allows additional variation in the ac-

tual note lengths though the {ε

o

k

} variables.

The model defines a joint Gaussian density on all

model variables, P(t

K

1

, o

K

1

). Thus, the plausibility of

a sequence of onset times, o

K

1

, for the assembled per-

formance could be measured by max

t

K

1

P(t

K

1

, o

K

1

).

We now turn to the problem of constructing the

ideal performance. Our analysis of the practice ses-

sion results in E excerpts, indexed by e = 1, . . . , E,

where the eth excerpt covers the range of notes

lo(e). . . , hi(e) as in Figure 2 or Figure 4. For excerpt

e we denote the onset time of the kth by o

k,e

, where

lo(e) ≤ k ≤ hi(e). For each note we want to choose

one of the possibly many examples observed in the

practice session. As notation we let e

k

be the excerpt

from which we take the kth note. Once we have cho-

sen e

K

1

, we can construct a sequence of onset times,

o

K

1

according to

o

1

= o

1,e

1

(1)

o

k

= o

k−1

+ (o

k,e

k

− o

k−1,e

k−1

) (2)

k = 1, . . . , K − 1. For this construction to make sense

both o

k,e

k

and o

k−1,e

k−1

must come from the same ex-

cerpt so that their difference measures the length of

Face the Music: Summarizing Unscripted Music Practice from Audio

689

the kth note. Thus we require that for each note, k,

with k ̸= 1 we have lo(e

k

) < k.

Now, emphasizing the dependence of o

K

1

on e

K

1

by explicitly writing o

K

1

(e

K

1

), we could view the most

plausible assembly by choosing the e

K

1

that maxi-

mizes max

t

K

1

P(t

K

1

, o

K

1

(e

K

1

)). However, we also want

to reduce the amount of skipping around between ex-

cerpts, so we add a penalty for each such excerpt

switch, L(e

K

1

) = C

|{k:e

k+1

̸=e

k

}|

for some positive con-

stant C. Then we define our optimal e

k

1

, ˆe

K

1

, by

ˆe

K

1

= argmax

e

K

1

L(e

K

1

)max

t

K

1

P(t

K

1

, o

K

1

(e

K

1

)) (3)

The construction of ˆe

K

1

poses some interesting

methodological challenges that are beyond the scope

of our current effort. However, (Raphael, 2006) de-

scribes a method for computing the globally optimal

sequence of hidden states in a switching state space

model containing both 1-dimensional Gaussian and

discrete hidden variables. This situation is very close

to ours, so the methodology easily adapts to the situ-

ation at hand.

200 400 600 800 1000

0 50 100 150 200

Optimal Assembled Performance

Note Index

Excerpt

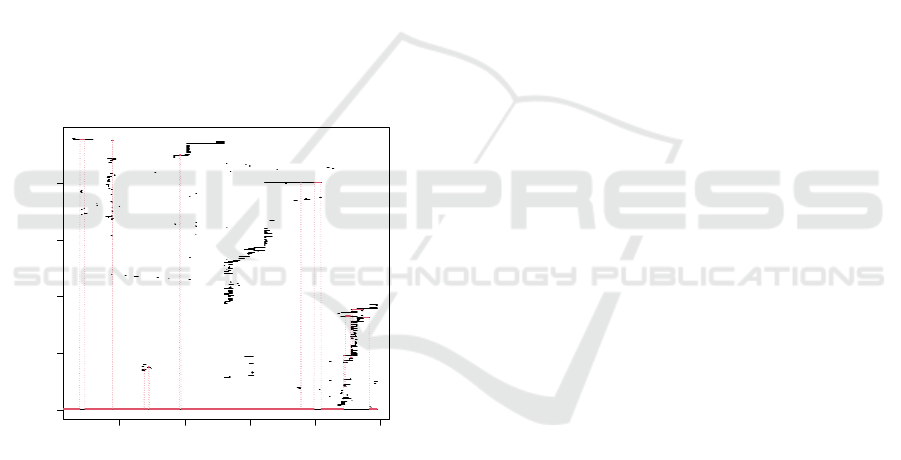

Figure 5: Assembly of a complete performance of the Bruch

Violin Concerto No. 1, Mvmt 1 from a practice session.

Figure 5 shows the analog of Figure 4 constructed

from a real practice session of the Bruch Violin Con-

certo No. 1, first movement. The figure shows that the

practice session began with a complete run through of

the movement, with later practice focusing on specific

sections, so a significant portion of the movement was

played only once. This is reflected in the optimal as-

sembly, shown in red, that draws heavily from the 1st

excerpt, as it must.

A score-aligned video of the result is given here.

In constructing the audio we have made no effort to

cover up the splice points, which can be heard as

clicks in the audio or places where the microphone

placement seems to suddenly change. While a user-

facing result might blend the audio over the splice

points, it seems better to leave them in the raw state

for purposes of our demonstration.

5 FUTURE WORK

The discussion above gives an overview of the ideas

we are developing for audio-based instrumental prac-

tice support systems. It is safe to say that the chal-

lenges are significant and open-ended, while many re-

main unmentioned in our discussion. We have pre-

sented a number of ideas for practice visualization

based on the mapping we construct from the practiced

notes to the score. Given the centrality of the score

for many practicing students, such score-based visu-

alization seems an obvious and essential component

of such a system.

It is worth mentioning, however, that the idea is

more general than what we have sketched. Our score

alignment technique of Section 2 establishes a many-

to-one map between the practice audio and something

familiar to the student, the score. However, having

mapped the notes to the score, we could reduce fur-

ther to say, the chromatic pitches that are playable on

the instrument. Such a mapping could display, for in-

stance, average tuning for the different notes or regis-

ters. This could be particularly useful for instruments,

such as woodwinds, that have particular notes or reg-

isters that tend to be out of tune. Or, rather than re-

ducing the mapping to a smaller range, one could ex-

pand it, looking, for example, at particular sequences

of pitches. From this analysis we may ask, for in-

stance, if a particular pattern of pitches tend to lack

rhythmic fluidity in the practiced audio? We men-

tion these examples to stress that there is a large and

largely-unexplored space that may contribute signifi-

cantly to the challenge at hand.

In addition to the algorithmic, visualization, and

modeling challenges, such as those discussed within,

we are also interested in building an actual work-

ing practice support system, making this available for

general use by music students on the familiar app

stores. This goal is motivated by the promise shared

by nearly all music instruction systems — to help

more people appreciate and enjoy music-making, es-

pecially those who cannot afford traditional one-on-

one music instruction, or do not have access to it.

In addition to the inherent value of such a sys-

tem, wide distribution would provide a means for col-

lecting score-aligned music practice data from willing

contributors, thus supporting a large scale, empirical

view of music practice. Such data could be used to

CSME 2025 - 6th International Special Session on Computer Supported Music Education

690

track a student’s progress over a large period of time,

or could be used as a tool for studying the effective-

ness of various practice strategies. The benefits in

transforming our approach from a single practice ses-

sion to a large corpus of practice data could make a

lasting contribution to music pedagogy.

REFERENCES

Coutts, L. (2019). Empowering students to take ownership

of their learning: Lessons from one piano teacher’s

experiences with transformative pedagogy. Interna-

tional Journal of Music Education, 37(3):493–507.

Cuvillier, P. (2016). On temporal coherency of probabilis-

tic modesl for audio-to-score alignment. PhD thesis,

Universit

´

e Pierre-et-Marie-Curie.

Dannenberg, R. and Raphael, C. (2006). Music score align-

ment and computer accompaniment. Commun. ACM,

49:38–43.

Dannenberg, R. B. (1984). An on-line algorithm for real-

time accompaniment. In Proceedings of the 1984 In-

ternational Computer Music Conference, ICMC 1984,

Paris, France, October 19-23, 1984. Michigan Pub-

lishing.

Dannenberg, R. B., Sanchez, M., Joseph, A., Capell, P.,

Joseph, R., and Saul, R. (1990). An expert sys-

tem for teaching piano to novices. In Proceedings of

the 1990 International Computer Music Conference,

ICMC 1990, Glasgow, Scotland, September 10-15,

1990. Michigan Publishing.

Dorfer, M., jr., J. H., Arzt, A., Frostel, H., and Widmer,

G. (2018). Learning audio–sheet music correspon-

dences for cross-modal retrieval and piece identifica-

tion. Transactions of the International Society for Mu-

sic Information Retrieval, 1(1):22.

Fober, D., Letz, S., Orlarey, Y., Askenfelt, A., Falkenberg-

Hansen, K., and Schoonderwaldt, E. (2004). IMUTUS

- an Interactive Music Tuition System. In IRCAM, ed-

itor, Sound and Music Computing Conference, pages

97–103, Paris, France.

Fremery, C., M

¨

uller, M., and Clausen, M. (2010). Handling

repeats and jumps in score performance synchroniza-

tion. In Proc. of the International Conference on Mu-

sic Information Retrieval (ISMIR).

Hogr

¨

afer, M., Heitzler, M., and Schulz, H.-J. (2020). The

state of the art in map-like visualization. In Computer

Graphics Forum, volume 39, pages 647–674. Wiley

Online Library.

Hu, N., Dannenberg, R. B., and Tzanetakis, G. (2003).

Polyphonic audio matching and alignment for music

retrieval. In in Proc. IEEE WASPAA, pages 185–188.

Jiang, Y., Ryan, F., Cartledge, D., and Raphael, C. (2019).

Offline score alignment for realistic music practice. In

Proc. of the Sound Music and Computing Conference

(SMC).

M

¨

uller, M., Kurth, F., and Clausen, M. (2005). Audio

matching via chroma-based statistical features. pages

288–295.

Nakamura, T., Nakamura, E., and Sagayama, S. (2015).

Real-time audio-to-score alignment of music perfor-

mances containing errors and arbitrary repeats and

skips. CoRR, abs/1512.07748.

Pardo, B. and Birmingham, W. P. (2005). Modeling form

for on-line following of musical performances. In

AAAI.

Rabiner, L. R. (1989). A tutorial on hidden Markov models

and selected applications in speech recognition. Pro-

ceedings of the IEEE, 77(2):257–286.

Raphael, C. (2006). Aligning music audio with symbolic

scores using a hybrid graphical model. Machine

Learning, 65:389–409.

Silveira J. M., . G. R. (2016). The effect of audio record-

ing and playback on self-assessment among middle

school instrumental music students. Psychology of

Music, 44(4):880–892.

Vercoe, B. (1984). The synthetic performer in the context

of live performance. In Proceedings of the 1984 In-

ternational Computer Music Conference, ICMC 1984,

Paris, France, October 19-23, 1984. Michigan Pub-

lishing.

Yousician (2022). Yousician. http://yousician.com.

Zhang, Y., Li, Y., Chin, D., and Xia, G. (2019). Adap-

tive multimodal music learning via interactive-haptic

instrument. arXiv preprint arXiv:1906.01197.

Face the Music: Summarizing Unscripted Music Practice from Audio

691