Forensic Psychiatry and Big Data: Towards a Cyberphysical System in Service of Clinic, Research and Cybersecurity

Mathieu Brideau-Duquette 1,2,a, Sara Saint-Pierre Côté 2, Tarik Boukhalfi 3 and Patrice Renaud 1,2

1 Département de Psychoéducation et Psychologie, Université du Québec en Outaouais, 283 Alexandre-Tâché Blvd, Gatineau, Canada

2 Laboratoire d’Immersion Forensique, Institut national de psychiatrie légale Philippe-Pinel, Montréal, Canada

3

{mathieu.brideau-duquette, patrice.renaud}@uqo.ca, sara.saint-pierre-cote.1@ens.etsmtl.ca, tarik.boukhalfi@uqtr.ca

Keywords: Forensic Psychiatry, Cyberphysical System, Extended Reality, Artificial Intelligence, Data Lake,

Cybersecurity, Social Engineering, Prediction, Prevention.

Abstract: The advent of big data and artificial intelligence has led to the elaboration of computational psychiatry. In

parallel, great progress has been made with extended reality (XR) technologies. In this article, we propose to

build a forensic cyberphysical system (CPS) that, with a data lake as its computational and data repository

core, will support clinical and research efforts in forensic psychiatry, this in both intramural and extramural

settings. The proposed CPS requires offenders' data (notably clinical, behavioural and physiological), but also

emphasises the collection of such data in various XR contexts. The same data would be used to train artificial

intelligence algorithms, namely machine and deep learning ones. Beyond the direct feedback these algorithms could give

to forensic specialists, they could help build forensic digital twins. They could also serve in the fine-tuning of

XR usage with offenders. This paper then turns to the human-centered cybersecurity concerns and

opportunities the same CPS would imply. The proximity between a forensic and XR-supported CPS and social

engineering will be addressed, and special consideration will be given to the opportunity for situational

awareness training with offenders. We conclude by sketching ethical and implementation challenges that

would require future inquiry.

1 INTRODUCTION

The recent context, the one motivating the present set

of proposals, is fuelled by four related (or so we

would contend) states of affairs: the call for

computational psychiatry (CPsy), the era of big data,

the surge in artificial intelligence (AI) applications,

and the ease of access to rapidly improving extended

reality (XR) technology. Following the brief

introduction of these four developments in the present

section, the next two sections will delve into the crux of

our proposals: a cyberphysical interface for clinical

and research purposes, and its relationship with

human-centred cybersecurity concerns.

The last decade has seen burgeoning discussions

about CPsy. Itself inspired by computational

neuroscience, it characterizes attempts to model

mental illness biologically through multiscale levels

(e.g., genetic, synaptic, neural circuit, social

environment), all while assuming neuronal

computations are at the core of both healthy and

unhealthy psychology (Huys et al., 2016; Montague

et al., 2012; Wang & Krystal, 2014). It is assumed that

CPsy is to play a part in improving aetiological

understanding and nosology of mental disorders,

notably by liberating psychiatry from (too) stringent

diagnoses, favouring instead data-driven approaches

that might help quantify symptoms dimensionally

(Huys et al., 2016); in turn, improvements in

therapeutics would be afforded, and to an extent,

better personalized.

a https://orcid.org/0000-0002-0780-8219

Directly related to both computational

neuroscience and CPsy is the exponentially

accumulating and numerous (big) data. This

accumulation of data in various fields, notably the

health industry (Chen et al., 2022a), is seen by many

as a gold mine, empirical fuel to build better models,

and in turn theories, about mental disorders and

Brideau-Duquette, M., Côté, S. S.-P., Boukhalfi, T. and Renaud, P.

Forensic Psychiatry and Big Data: Towards a Cyberphysical System in Service of Clinic, Research and Cybersecurity.

DOI: 10.5220/0013496100003932

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 17th International Conference on Computer Supported Education (CSEDU 2025) - Volume 1, pages 856-864

ISBN: 978-989-758-746-7; ISSN: 2184-5026

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

symptomatology. Data mining, the process of

extracting useful information out of larger data sets,

can be considered a component of CPsy (Montague et

al., 2012).

The surge of big data and data mining in the

sciences has led to the proposal of a new science that

transcends standard statistics, “data science” (Dhar,

2012). Given the intensive need to process enormous

amounts of data quickly and efficiently, algorithms

under the umbrella term of “AI” are now developed

and deployed to tackle this task. A prime example

would be that of machine learning (ML) and deep

learning (DL), and their multiple approaches (see

Jordan & Mitchell, 2015; Mahesh, 2018; Ray, 2019;

Shrestha & Mahmood, 2019). A common

denominator of these approaches is better decision

making, whether by a human being or by another

algorithm down the line. Most recently, the

public at large, and perhaps academia even more acutely,

has been taken by storm by the release of efficient large

language models (LLMs; see Naveed et al., 2024,

notably their timeline figure).

To conclude this overview of the context, the 1990s and

early 2000s saw a first surge of

research involving XR technologies (virtual

reality [VR], then augmented reality [AR], then mixed reality).

However, it is only recently that such technologies

have become relatively cheaper, more logistically

versatile (e.g., size and weight of equipment, less

cabling) and more immersive (e.g., better visual

displays). A branch of cyberpsychology is devoted to

integrating XR technologies into psychotherapeutic

protocols (e.g., Emmelkamp & Meyerbröker, 2021;

Park et al., 2019; Wiederhold & Bouchard, 2014).

Now, what can this broad context hold for forensic

settings?

2 A FORENSIC MENTAL HEALTH CYBERPHYSICAL SYSTEM

Forensics is understood here as any technical

expertise or approach that relates to describing or

understanding crime. More specifically, forensic

psychiatry/psychology (FPsy) pertains to

psychological factors (perhaps themselves influenced by

biological or social factors; Barnes et al., 2022) that

constitute risk factors for (re)offending. It is often

assumed that crime is somewhat related to mental

illness and psychopathology (Arboleda-Flórez,

2006).

Given the described context, a promising avenue

for the merger of CPsy and FPsy is through a

cyberphysical system (CPS), which would also be a

mental health-oriented, medical, CPS (Chen et al.,

2022a). Cyberphysics involves the merging of

computational capabilities with physical processes

(Lee, 2006). Jiang and colleagues (2020) position

CPS as different from the Internet of Things (Atzori

et al., 2010), the former having larger computational

capacity, which in turn gives these computations

control over the system (see also Chen et al., 2022a).

As the same authors and others (Alam & El Saddik,

2017) note, data from physical sensors can be sent to

a server, be computed upon, and in turn, give

directives for sensor configurational change, forming

a feedback loop. Such a loop makes CPS useful for

human-machine interaction (HMI; Jiang et al., 2020),

and of special relevance for FPsy, brain-computer

interaction (BCI). Importantly, XR technologies can

be implemented with/be part of HMI or BCI,

implying part of the feedback would include XR

content. So, what is advocated for, in an acronym-

intensive nutshell: FPsy, following the insights of

CPsy, should work within the confines of a CPS, as

the latter leads to a more optimal HMI/BCI. Central

to this are data storage and computational power, the

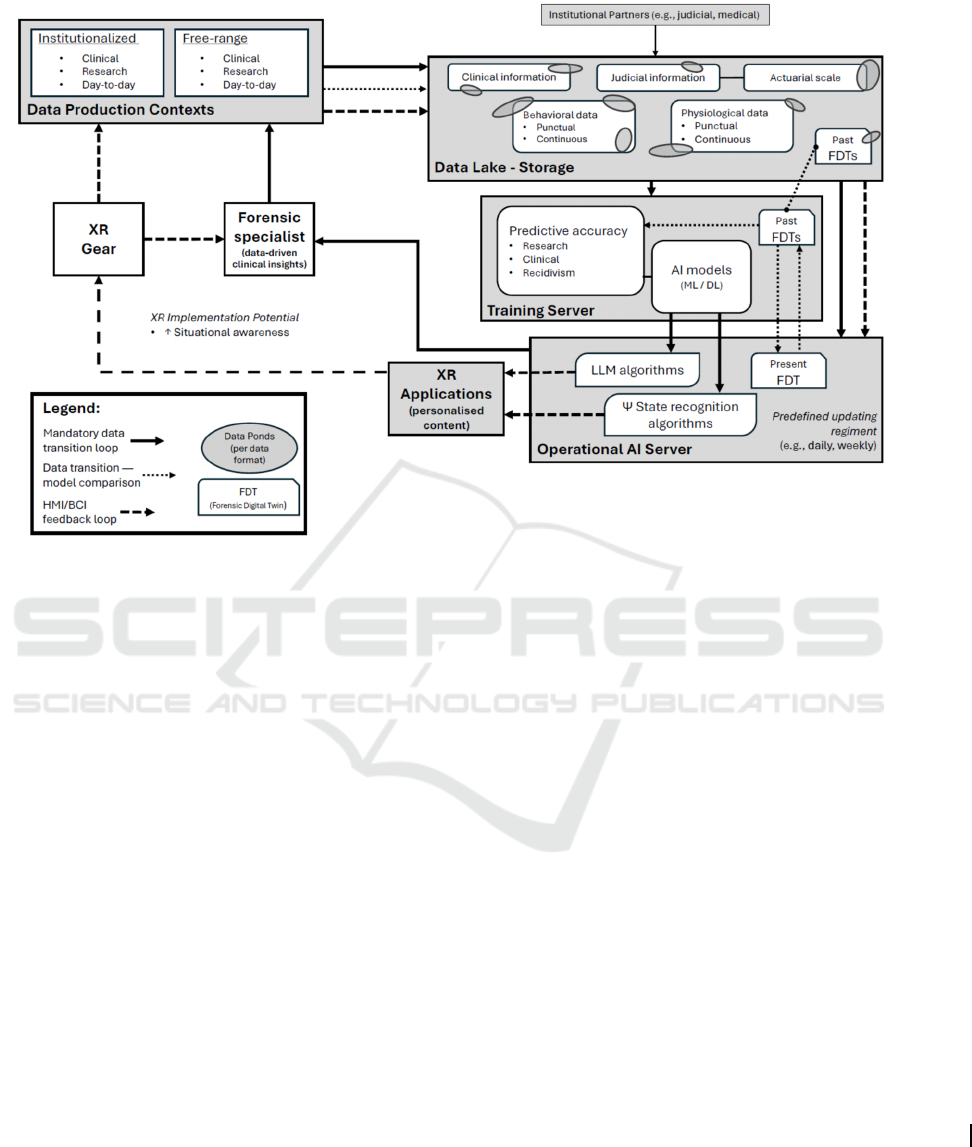

subject of the next subsection. Figure 1 better situates

the elements to be presented within the forensic-

medical CPS framework proposed here.
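The feedback loop described above (sensor data sent to a server, computed upon, and returned as a directive for sensor configurational change) can be sketched minimally as follows. This is only an illustration under stated assumptions: the names `SensorConfig`, `server_directive` and `run_loop`, as well as the heart-rate threshold and the two sampling rates, are hypothetical and are not taken from any of the cited CPS architectures.

```python
from dataclasses import dataclass


@dataclass
class SensorConfig:
    """Hypothetical sensor configuration: only the sampling rate is modelled."""
    sampling_hz: float


def server_directive(readings, low_hz=1.0, high_hz=10.0, threshold=100.0):
    """Server-side step: compute on a batch of readings and return a new config.

    Assumption for illustration: the sensor should sample faster whenever the
    mean of the batch (e.g., heart rate in beats per minute) exceeds a threshold.
    """
    mean_value = sum(readings) / len(readings)
    target_hz = high_hz if mean_value > threshold else low_hz
    return SensorConfig(sampling_hz=target_hz)


def run_loop(batches, config):
    """Close the loop: each batch from the sensor yields a directive that
    reconfigures the sensor before the next batch is acquired."""
    history = []
    for readings in batches:
        config = server_directive(readings)
        history.append(config.sampling_hz)
    return history


if __name__ == "__main__":
    batches = [[70, 72], [110, 120], [80, 75]]
    print(run_loop(batches, SensorConfig(sampling_hz=1.0)))
```

In a deployed system the directive would presumably also drive XR content, not only sensor settings; the sketch keeps to the sensor side for brevity.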

2.1 Data Lake, Its Basic Structure and Content

The presented blueprint of data architecture

management heavily relies on establishing a data

lake, a multi-format big data (and any accompanying

metadata) holder and modifier (Nargesian et al.,

2019). A data lake implies a server with high-capacity

storage. Costs for such infrastructure would vary

according to the scope of the implemented CPS. Still,

it is worth noting that forensic and medical (including

psychiatric) institutions already have secured servers

to support day-to-day operations. As such, adding the

proposed data lake-supported CPS should not imply

radical changes to the existing computer

infrastructure, only occasional adaptations by the

information technology services involved. For FPsy purposes,

a non-exhaustive list of examples of retained data for

any given offender would include criminal offense(s),

psychiatric diagnoses and clinical notes,

questionnaire and actuarial results, past and present

physical conditions and diagnoses, medication

schedule and posology, as well as behavioural and

physiological indices. Such clinical information is

Figure 1: Data flows of the proposed forensic-medical cyberphysical system.

commonly centralised in medical settings, using

systems such as the Open Architecture Clinical

Information System (OACIS, Telus Health). More

broadly, detailed considerations for a forensic CPS

would likely benefit from considering medical CPS

(see Chen et al., 2022a). Data-collection-wise, worth

noting are LLM-powered applications that assist

medical professionals when interviewing patients, for

instance, by automatically taking notes. As these

applications are already deployed in the

confidentiality-bound healthcare sector (e.g., CoeurWay),

their implementation in forensic settings is arguably

just as realistic.

Focusing on behavioural and physiological data,

non-exhaustive examples include speech prosody and

semantic content, heart rate, electroencephalography

(EEG), electrodermal activity, eye-tracking, blinking,

pupil dilation, brain structural scans and

hemodynamic responses, and salivary or blood

hormonal levels. While some measurements can only

be one-off snapshots in time (e.g., salivary cortisol),

whether taken within research protocols or as part of

routine checkups, others can be continuous, perhaps

24/7 measurements (e.g., watch-monitored heart rate).

At first glance, some of these measurements would

appear to require intensive management efforts, such

as laboratory analyses, followed by manual indexing

of results. One should note however the rapid

advancements in quick, app-monitoring-supported

testing (e.g., salivary cortisol level; Eli Health).

To be maximally interpretable or useful,

continuous physiological measurements likely

require some data cleaning. A notorious example

would be EEG, which beyond filtering choices, is

also blighted by eye and other movement artifacts

(Urigüen & Garcia-Zapirain, 2015). While there is no

definitive or consensual solution to this challenge,

automatic artifact removal methods readily exist (e.g., Goh et

al., 2017; Pedroni et al., 2019), and their improvement

is ongoing. The main point here is that for the data

lake to serve in producing quality data autonomously

and quickly (especially considering BCI), such

automatic cleaning is warranted. In any case, the

multiple types of data, and associated varying formats

and frequencies of acquisition, all suggest highly

individualized pre-processing pipelines and

algorithms, in accordance with the notion of “data

ponds”, a subdividing of processing architecture

differing across data types (Inmon, 2016; Sawadogo

& Darmont, 2021).
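As a rough illustration of the “data ponds” idea, the sketch below routes incoming records to a pre-processing function chosen by data type. It is a minimal sketch under loud assumptions: the record layout, the `PONDS` registry and the function names are hypothetical, and the amplitude-threshold rejection used for EEG is a deliberately crude stand-in, not one of the automatic artifact removal methods cited above.

```python
def clean_eeg(samples, max_abs_uv=100.0):
    """Crude stand-in for artifact removal: drop samples whose absolute
    amplitude (in microvolts) exceeds a fixed threshold."""
    return [s for s in samples if abs(s) <= max_abs_uv]


def clean_heart_rate(samples, low=30, high=220):
    """Drop physiologically implausible heart-rate values (beats per minute)."""
    return [s for s in samples if low <= s <= high]


# Hypothetical registry: one pre-processing pipeline ("pond") per data type.
PONDS = {
    "eeg": clean_eeg,
    "heart_rate": clean_heart_rate,
}


def route(record):
    """Send a record to its pond; unknown types are kept raw in the lake."""
    handler = PONDS.get(record["type"])
    if handler is None:
        return {**record, "status": "raw"}
    return {**record, "samples": handler(record["samples"]), "status": "cleaned"}


if __name__ == "__main__":
    print(route({"type": "eeg", "samples": [10.0, -250.0, 35.5]}))
    print(route({"type": "clinical_note", "samples": ["free text"]}))
```

Keeping one function per data type makes it straightforward to swap the crude EEG step for a validated pipeline without touching the routing logic.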

2.2 Behavioral and Physiological Monitoring with XR

The use of XR technologies within forensic settings

offers a unique opportunity to probe the offender's

ERSeGEL 2025 - Workshop on Extended Reality and Serious Games for Education and Learning

psyche and behavioural patterns. We identify and

focus on two main living settings: institutionalized

and free-range. In turn, both could serve model

building at both group (e.g., diagnosis) and individual

levels.

2.2.1 Monitoring: Institutionalized or Free-Range

Institutionalization of forensic populations can be

done in various settings (e.g., prison, secured

psychiatric institutions, transition housing), all of

which have in common a relatively high routine

component (e.g., hours of getting up and curfew,

eating hours, scheduled free-time and activity

periods, etc.). In these controlled settings, it is

relatively easy to integrate physiological

measurements such as those described above, again,

whether continuous or scheduled. It is further

possible, especially in the latter case, to register

subjective self-reports as well as clinical impressions

or observations from caregivers and personnel. As for

the former case, continuous measurements, they can

be extensively investigated via research protocols

incorporating XR technologies (Torous et al., 2021),

said protocols implying stricter experiment control.

Such protocols would have to be designed and

implemented with the specific institutionalisation

settings in mind. The use of XR is already done in

forensic settings (e.g., Boukhalfi et al., 2015; Renaud

et al., 2014), but generally within a strict protocol of

stimulus exposure. Even if more ecologically valid

than, say, desktop tasks (Loomis et al., 1999),

especially if LLMs were to be incorporated, one

caveat of such protocols is that they may nonetheless

influence or predispose offenders to a specific

mindset or narrowed response options. In other

words, offenders are still in a “task” setting, which is

implicitly cognitively constraining. This further

emphasises the relevance of spontaneous context

exposure, as anticipation (conscious or not) on the

offender's part is either absent or as in everyday

living. XR-wise, it is tentatively hypothesized that

AR would perform better than VR here, since the

former keeps the individual more rooted in the real

world. In other words, AR is more easily integrated

as a new way of living than VR, a notion important to

consider in the context of free-range monitoring. In

the same vein, prolonged AR, coupled with speech

recording, affords speech analysis (Corcoran &

Cecchi, 2020) to be integrated within a CPS.

Free-range monitoring has the benefits of

everyday living with little to no alterations. Its use

with psychiatric populations to gain

psychopathological insight has been advocated for,

by using, for instance, social media and smartphone

data (Gillan & Rutledge, 2021; Torous et al., 2021).

In the continuous monitoring of offenders, it can be

of interest to use AR, for four main reasons: its

aforementioned lesser disturbance

of natural behaviour and inclinations, its relatively lower

development costs (Baus & Bouchard, 2014), its

lessened computational requirements (next

subsection), and its favouring of adaptational strategies

(next section). While prone to its own challenges,

free-range (cf. open world) ML might be a necessity

for model quality (Zhu et al., 2024), and in turn, for

any HMI/BCI success. More broadly for ML- and

DL-based models, testing the predictive efficacy, or

the lack thereof (pushing the investigation towards

the efficacy of each variable or configuration of variables), of

institutional-data-built models for free-range

situations is most relevant.

2.2.2 Nomothetic and Idiographic Prediction

A recurrent critique of conventional psychiatry is its

generalizing tendency regarding both aetiology and

treatment, perhaps rooted in essentialisation (Brick et

al., 2021; Hitchcock et al., 2022), at the expense of a

more accurate and (perhaps necessarily) personalized

approach. Remembering the commitment of CPsy to

overcome this pending issue, having data from

monitoring the same individual at varying constraint

levels (i.e., institutionalized versus free-range) might

give key insights to co-enhance prediction in all

settings (Gillan & Rutledge, 2021). More broadly, it

has been noted that CPsy has had limited success in

part due to an overcommitment to preexisting

category fixations (e.g., as opposed to data-driven

approaches; Rutledge et al., 2019), as well as

insufficient flexibility in modelling approaches

(Hitchcock et al., 2022). Central to the latter point

would be lack of time and contextual consideration,

or as the merger of the two would suggest, the need

for a dynamical understanding of psychopathology

(Hitchcock et al., 2022); the same could be said for

our understanding of offending and any underlying

role of psychopathology. The long-term, hence

longitudinal, monitoring advocated for could thus

play a part in ending the gridlock of CPsy.

2.3 Towards Adjustment-Free HMI/BCI, Digital Twins, and Training

Assuming success of efforts described in the previous

subsection, the next step in improving both model

accuracy (research angle) and therapeutic change

(clinical angle) would be to incorporate a fully-fledged

HMI/BCI. Specifically, it is as if the model

had learned “all there is to know” about the

individual, and so operates irrespective of continuous

learning from data input. Assumed here, within the

context of finite computational power, is a necessary

trade-off: the more data (and associated

processing steps) an HMI/BCI is burdened with while its

underlying algorithms are learning, the less quickly it can

adapt the XR content. This is especially true

for VR (e.g., visual field content generation), and

even more so if one is to assume a large deployment

of the proposed platform (i.e., hundreds if not

thousands of HMI/BCIs requiring not only live-

computations, but also learning-serving

computations).

From a pragmatic standpoint, actors should be

aware of an eventual cut-off point, where each

individual's HMI/BCI parameters would run

independently, adjustment-free. Importantly though, as

novel situations can arise (especially in free-range),

the collection and use of these data to continue AI

learning is strongly encouraged. This would likely

involve implementing a routine for HMI/BCI model

updating. Computational-economy-wise, an optimal

moment for model learning and update would be

when both input data and content generation are

minimal, that is, sleep time; if generalized across

offenders within the same area (e.g., a city), that would be

nighttime.
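The scheduling idea above, running model learning and updates when input data and content generation are minimal, can be illustrated by searching an hourly load profile for its quietest contiguous window. This is a sketch only: `quietest_window` and the load figures are hypothetical, and the load is assumed to be a single number per hour summarising both data input and XR content generation.

```python
def quietest_window(hourly_load, window_hours):
    """Return (start_hour, total_load) of the contiguous window with the
    lowest summed load. The day is treated as circular, so a window may
    wrap around midnight. Ties are resolved in favour of the earliest start."""
    n = len(hourly_load)
    best_start, best_total = 0, float("inf")
    for start in range(n):
        total = sum(hourly_load[(start + i) % n] for i in range(window_hours))
        if total < best_total:
            best_start, best_total = start, total
    return best_start, best_total


if __name__ == "__main__":
    # Hypothetical 24-hour profile (index = hour of day, arbitrary load units).
    load = [3, 1, 1, 1, 2, 6, 8, 9, 9, 9, 9, 9,
            9, 9, 9, 9, 9, 9, 8, 7, 6, 5, 5, 4]
    start, total = quietest_window(load, window_hours=4)
    print(f"Schedule model updates from {start:02d}:00 (load {total})")
```

With several offenders in the same area, the per-person profiles could be summed before the search, which is one way to arrive at a shared nighttime update slot.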

In parallel with these concerns, progress in ML and

DL has further pushed CPsy towards the individualized,

idiographic, approach, namely, precision psychiatry

(Bzdok & Meyer-Lindenberg, 2017; Chen et al.,

2022b; Williams et al., 2024). While this approach

has its own merits, given the data to be collected

under the proposed monitoring opportunities, greater

attention will be given to the prospect of forensic

digital twins (FDT). A digital twin is, in principle, an

exact computational replica, a simulation, of an

existing physical system (Batty, 2018), with its

algorithms mimicking said system’s multilevel

dynamics. The integration of digital twins has already

been thought about within a CPS framework (Alam

& El Saddik, 2017) and healthcare (Katsoulakis et al.,

2024), and the exactitude the twin aims for echoes

the previous “all there is to know” about individual

offenders. To be clear, an FDT, once made, has no

bearing on any feedback the CPS might direct

towards the offender. Rather, as the offender's copy, it

could be used to modulate a variable, or series of, that

simulate the offender's environment, generating in

turn a response from the FDT. Two courses can

follow: one uses the FDT’s response to predict the

offender’s response, or one uses the FDT’s “failure”

in mimicking the offender. The former option fits

within general efforts of causal ML

(Feuerriegel et al., 2024) and ML/DL approaches to

predict treatment outcome (Chekroud et al., 2021) or

reoffending risk. Validation-wise, three angles

deserve mention (these angles are closely tied to the

data production contexts found in Figure 1). From a

research angle, an FDT could be tested alongside

the related offender, directly testing its validity in

this context. From a psychiatric angle, the FDT’s

prediction capacity could be contrasted with clinical

insight (e.g., a specialist’s prognosis). From a

criminological, recidivism angle, the FDT’s

prediction capacity can be contrasted with existing

forensic predictors (e.g., actuarial risk scales). The

failure-oriented option, which can apply for any of the

above angles, could benefit from testing various

iterations of same-offender FDTs, and since these

would not be fully independent from one another, the

events or measures in between consecutive FDT

iterations could themselves be given special ML or

DL treatment for explaining predictive discrepancies.

Naturally, an FDT could itself be updated following

the same scheme as in the previous paragraph, and in

turn, help improve the proposed XR-themed

HMI/BCI (Barricelli & Fogli, 2024).
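The two courses described above, using an FDT's response as a prediction or examining its “failure” to mimic the offender, both rest on comparing twin output with observed responses. The sketch below shows one simple way to do so across successive same-offender FDT iterations. It is an assumption-laden illustration: the mean absolute error, the tolerance value and the names `mean_abs_error` and `compare_twin_iterations` are choices made here, not a validation method taken from the cited literature.

```python
def mean_abs_error(predicted, observed):
    """Average absolute gap between twin predictions and observed responses."""
    if len(predicted) != len(observed):
        raise ValueError("predicted and observed must have the same length")
    return sum(abs(p - o) for p, o in zip(predicted, observed)) / len(predicted)


def compare_twin_iterations(iterations, observed, tolerance):
    """For each FDT iteration, return (error, failed), where `failed` flags
    an error above the tolerance, i.e. a candidate for the failure-oriented
    analysis."""
    report = {}
    for name, predicted in iterations.items():
        error = mean_abs_error(predicted, observed)
        report[name] = (error, error > tolerance)
    return report


if __name__ == "__main__":
    # Hypothetical heart-rate responses (bpm) to three simulated situations.
    observed = [70, 95, 80]
    iterations = {"v1": [75, 80, 90], "v2": [72, 93, 79]}
    for name, (error, failed) in compare_twin_iterations(
        iterations, observed, tolerance=5
    ).items():
        print(name, round(error, 2), "failed" if failed else "within tolerance")
```

The events recorded between a failing and a non-failing iteration are what the text proposes to examine further with ML or DL.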

The same data and models that served in building

digital twins could help make ecologically valid

artificial patients for a forensic professional's

training, that is, interactive contexts varying in scope and

ecologically adapting to the offender's behaviours

(e.g., speech content, prosody, gaze direction).

Recent initiatives using chatbots with realistic speech

options and appearance for training purposes

already exist (e.g., Raiche et al., 2023; Vaidyam et al.,

2019). What is advocated here is to move beyond the

fixed and predetermined response options of chatbots,

towards situationally adapting and personalized

response options. There is great potential on this front

with LLMs. In parallel, the scope of varying

behaviours the artificial agent can modulate would

also grow.

3 CYBERSECURITY

An important underlying assumption of what has

been presented thus far is the approval given by the

regulatory bodies and involved detention institutions,

as well as the obtaining of offenders' consent

whenever applicable. Paramount to such

approvals, one must expect strict protocols and an

infrastructure that secures data anonymity, access and

transfer (Anand et al., 2006; Khaitan & McCalley,

2015; Sarode et al., 2022; Torous et al., 2021). This

involves “traditional” challenges of cybersecurity,

which are beyond the scope of the proposed frame.

The present section will rather focus on the

vulnerability of the human mind in the context of

technology usage, with an emphasis on immersive

(i.e., presence inducing) XR technologies. The

section will conclude with opportunities the same

technologies provide in promoting adaptation.

3.1 From Presence to Social Engineering

The phenomenon of presence is best summarized as

the feeling and ability to be/do “there”, in reality

as well as in XR (Riva et al., 2011). Presence is at the

core of what can make XR technology useful to

simulate the real world in the first place, guiding the

immersiveness it strives for (Slater, 2003). It is also a

versatile concept with various emphases a clinician or

researcher can inquire into. For instance, a (forensic)

psychiatrist enthusiastic about HMI/BCI could be interested

in what causes (or actively maintains) a patient’s

social ineptitude (ties to social presence; Biocca et al.,

2003), paraphilic interests (ties to sexual presence;

Brideau-Duquette & Renaud, 2023), and so forth.

However, the relative ease with which presence

can be induced also makes it a psychological

vulnerability. Akin to this is the famous Turing test,

which, at its core, implies something successfully

convincing a human being it has sentience (Saygin et

al., 2000); from “fake world” to “fake being”. A

marked example is the large leap in progress of LLMs,

and the often-reported sense that one is interacting

with a comprehending entity when prompting such

an LLM (e.g., Shanahan, 2024). As hinted above, the

proposed HMI/BCIs for offenders would capitalize

on such intuited impressions, as they would serve

presence, and so define the XR generated content (see

also Wang et al., 2024a) within the CPS.

With the prevention of reoffending, but also of

first offenses, in mind, one should consider that we are not

equal when facing such “in the wild” Turing tests. A

notable example would be that of (pre)psychotic

individuals, for whom LLM-based applications,

existing or to come, can arguably be expected to

constitute a risk of psychosis triggering. This would

be especially so if coupled with easily accessible and

unsupervised, and presence-inducing, technologies.

In other words, presence while in XR can

(potentially) lead to estrangement when in reality (see

also Aardema et al., 2010). This is arguably a problem

that extends to any interactive platform, as

exemplified by problematic social media usage (Sun

& Zhang, 2021).

These concerns, generalized beyond psychosis,

relate to social engineering. The latter is defined by

Wang and colleagues (2020, 2021) as a cyberattack

where the perpetrator socially engages in some

manner to trick someone into behaving in a certain

way that breaches the cybersecurity measures in place.

Concerns have been raised that affective and

cognitive traits could be vulnerabilities to such social

engineering, especially if ML is used to perfect

cyberattack schemes (Wang et al., 2020). The main

point here is that, in a context of personal data markets

(Spiekermann et al., 2015), and given that private interests

could obtain the same types of measurements as those

mentioned above (akin to, say, lingering time on a

social media post) through personal XR usage, the same

data, optimally indicative of presence, could be used for

social engineering; in other words, the same ideas

elaborated throughout could be used for nefarious or unwanted

(e.g., marketing) purposes. A case in point would be

the instillation of so-called dark patterns via

technologies of varying immersiveness, with

said instillation growing more efficient as immersiveness grows

(Wang et al., 2024b), presumably because of

presence.

We would extend the earlier definition of social

engineering, so it encompasses more of its original,

top-down normative effort (Duff, 2005). Rather than

considering political approach and ideology, we

would define said top-down influence as follows: the controlling

actor (e.g., hacker, service provider) actively

modulates the technological medium to

psychologically (i.e., cognitively, affectively or

behaviourally) influence an individual without their

knowledge or consent. In fact, the FPsy approach

advocated for here largely fits this extended

definition, with the crucial distinction that offenders

would be both informed about the general aims of the

CPS, and provide consent.

3.2 Adaptation Building, Towards Autonomy

A necessary goal for any psychiatric intervention is to

promote maximal autonomy of the individual. This is

also true in forensic-related settings, with the equally

prominent concern of the offender’s and others’

safety. Merging the two involves making psychiatric

offenders more autonomous in ensuring the safety of

themselves and others. The previous subsection

emphasized the importance of surveilling for negative

impacts of immersive technologies and social

engineering, but as the earlier sections would hint, the

poison can be part of the cure: mechanisms that

facilitate social engineering might also facilitate trait

resilience building.

The proposed CPS-XR architecture has much in

common with biofeedback approaches, as in both

cases, continuous physiological or behavioural

measurements take part in influencing some feedback

to be perceived by the individual. Assuming a

genuine willingness to change on the offender's part,

the same data that successfully predicts a near-

imminent issue (e.g., aggressive outburst,

behavioural disorganisation) could be used to

promote situation awareness (Alsamhi et al., 2024;

Endsley, 1995), an important step in de-escalation

and in some cases, long-term problematic pattern

discontinuation.
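One simple way to turn a stream of model outputs into a situation-awareness cue is sketched below. The assumptions are stated plainly: a predictive model is supposed to emit a risk score between 0 and 1 at regular intervals, and a cue (for instance, an AR prompt) is raised only when the average of the most recent scores reaches a threshold, which dampens one-off spikes. The function name, window length and threshold are hypothetical.

```python
from collections import deque


def awareness_cues(risk_scores, window=3, threshold=0.7):
    """Return one boolean per score: True when the moving average over the
    last `window` scores is at or above `threshold`. No cue is raised until
    a full window of scores is available."""
    recent = deque(maxlen=window)
    cues = []
    for score in risk_scores:
        recent.append(score)
        window_full = len(recent) == window
        cues.append(window_full and sum(recent) / window >= threshold)
    return cues


if __name__ == "__main__":
    scores = [0.2, 0.4, 0.9, 0.9, 0.9, 0.1]
    print(awareness_cues(scores))
```

Whether a moving average is the right smoothing, and what threshold is clinically meaningful, would have to be established empirically with the offender and the treating professional.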

This assisted situational awareness could serve in

both institutionalized and free-range monitoring

conditions. In the former, one could envision its

common use by the mental health professional and

the offender in a therapeutic setting, allowing in-the-

moment flexibility, as said professional can adapt the

session’s therapeutic target. This would be especially

relevant for mindfulness-based interventions

(Chandrasiri et al., 2020), and more generally, as a

solid base for the learning of de-

escalation/reorienting, self-regulation strategies.

Using XR has the additional value of lessening

abstraction in forming or applying said strategies. For

instance, one could use feedforward cues, that is, perceptually salient and

intuitive instructions about what could be done in the

XR-related environment (Muresan et al., 2023). In a

free-range setting, previously learned strategies can

be put to the test. In collaboration with the offender,

who can give subjective impressions, as well as with

objective criteria of de-escalation/reorienting, the

continued input of behavioural or physiological data

could serve in further modelling both strategy success

and failure, and their respective predictors.

4 CONCLUSION AND FUTURE DIRECTIONS

In recent years, conceptual

developments in psychiatry and access to quality XR

technologies have converged to open stimulating clinical and

research possibilities. Presented here was a CPS

general configuration to better equip FPsy in

capitalizing on these possibilities, and how doing so

also relates to human-centered cybersecurity features,

present and future.

Still, pending issues addressed little or not at all here

require consideration. Ethical concerns relating to

offenders’ consent, specifically, it being genuine as

opposed to pressured, should be examined; one

should note that any research or psychotherapeutic

intervention within a forensic setting has that exact

issue, as the offender, facing the judicial system, has

a lifestyle and routine imposed upon them, within which the

proposed CPS would be embedded.

To our knowledge, no implementation akin to

what has been proposed has ever been attempted in

forensic settings. Perhaps such an implementation is not

realistic in all jurisdictions. Where possible, any such

attempts at establishing a forensic CPS should self-monitor

their incremental efforts, so as to give insight

into the challenges ahead. At the intersection of logistical

and ethical concerns about overreach, the proposed CPS

scheme might be better implemented in successive

steps. We propose the following such steps as a

general path to the complete CPS: institutionalized

clinical settings and research, institutionalized

offender day-to-day living settings, free-range

clinical and research appointments, and day-to-day living

settings. In between each of these steps, one would

consider the same settings with XR integrated into them

(e.g., institutionalized day-to-day would transition to

institutionalized day-to-day complemented with XR

technology).

ACKNOWLEDGEMENTS

The authors would like to thank the Fonds de

recherche du Québec for its funding of the Centre de

recherche et innovation en cybersécurité et société

(CIRICS).

REFERENCES

Aardema, F., O'Connor, K., Côté, S., & Taillon, A. (2010).

Virtual reality induces dissociation and lowers sense of

presence in objective reality. Cyberpsychology,

Behavior, and Social Networking, 13(4), 429-435.

Alam, K. M., & El Saddik, A. (2017). C2PS: A digital twin

architecture reference model for the cloud-based

cyber-physical systems. IEEE Access, 5, 2050-2062.

Alsamhi, S. H., Kumar, S., Hawbani, A., Shvetsov, A. V.,

Zhao, L., & Guizani, M. (2024). Synergy of Human-

Centered AI and Cyber-Physical-Social Systems for

Enhanced Cognitive Situation Awareness:

Applications, Challenges and Opportunities. Cognitive

Computation, 1-21.

Anand, M., Cronin, E., Sherr, M., Blaze, M., Ives, Z., &

Lee, I. (2006). Security challenges in next generation

cyber physical systems. Beyond SCADA: Networked

Embedded Control for Cyber Physical Systems, 41.

Arboleda-Flórez, J. (2006). Forensic psychiatry:

contemporary scope, challenges and controversies.

World Psychiatry, 5(2), 87.

Atzori, L., Iera, A., & Morabito, G. (2010). The internet of

things: A survey. Computer networks, 54(15), 2787-

2805.

Barnes, J. C., Raine, A., & Farrington, D. P. (2022). The

interaction of biopsychological and socio-

environmental influences on criminological outcomes.

Justice Quarterly, 39(1), 26-50.

Barricelli, B. R., & Fogli, D. (2024). Digital twins in

human-computer interaction: A systematic review.

International Journal of Human–Computer

Interaction, 40(2), 79-97.

Batty, M. (2018). Digital twins. Environment and Planning

B: Urban Analytics and City Science, 45(5), 817-820.

Baus, O., & Bouchard, S. (2014). Moving from virtual

reality exposure-based therapy to augmented reality

exposure-based therapy: a review. Frontiers in human

neuroscience, 8, 112.

Biocca, F., Harms, C., & Burgoon, J. K. (2003). Toward a

more robust theory and measure of social presence:

Review and suggested criteria. Presence:

Teleoperators & virtual environments, 12(5), 456-480.

Boukhalfi, T., Joyal, C., Bouchard, S., Neveu, S. M., &

Renaud, P. (2015). Tools and techniques for real-time

data acquisition and analysis in brain computer

interface studies using qEEG and eye tracking in virtual

reality environment. IFAC-PapersOnLine, 48(3), 46-

51.

Brick, C., Hood, B., Ekroll, V., & De-Wit, L. (2022).

Illusory essences: A bias holding back theorizing in

psychological science. Perspectives on Psychological

Science, 17(2), 491-506.

Brideau-Duquette, M., & Renaud, P. (2023). Sexual

Presence: A Brief Introduction. In Encyclopedia of

Sexual Psychology and Behavior (pp. 1-9). Cham:

Springer International Publishing.

Bzdok, D., & Meyer-Lindenberg, A. (2018). Machine

learning for precision psychiatry: opportunities and

challenges. Biological Psychiatry: Cognitive

Neuroscience and Neuroimaging, 3(3), 223-230.

Chandrasiri, A., Collett, J., Fassbender, E., & De Foe, A.

(2020). A virtual reality approach to mindfulness skills

training. Virtual Reality, 24(1), 143-149.

Chekroud, A. M., Bondar, J., Delgadillo, J., Doherty, G.,

Wasil, A., Fokkema, M., ... & Choi, K. (2021). The

promise of machine learning in predicting treatment

outcomes in psychiatry. World Psychiatry, 20(2), 154-

170.

Chen, F., Tang, Y., Wang, C., Huang, J., Huang, C., Xie,

D., ... & Zhao, C. (2022a). Medical cyber–physical

systems: A solution to smart health and the state of the

art. IEEE Transactions on Computational Social

Systems, 9(5), 1359-1386.

Chen, Z. S., Galatzer-Levy, I. R., Bigio, B., Nasca, C., &

Zhang, Y. (2022b). Modern views of machine learning

for precision psychiatry. Patterns, 3(11).

CoeurWay. Retrieved from https://www.coeurway.com/en.

Corcoran, C. M., & Cecchi, G. A. (2020). Using language

processing and speech analysis for the identification of

psychosis and other disorders. Biological Psychiatry:

Cognitive Neuroscience and Neuroimaging, 5(8), 770-

779.

Dhar, V. (2012). Data science and prediction.

Communications of the ACM, 56(12), 64-73.

Duff, A. S. (2005). Social engineering in the information

age. The Information Society, 21(1), 67-71.

Eli Health. Retrieved from https://eli.health/products/cortisol.

Emmelkamp, P. M., & Meyerbröker, K. (2021). Virtual

reality therapy in mental health. Annual review of

clinical psychology, 17(1), 495-519.

Endsley, M. R. (1995). Toward a theory of situation

awareness in dynamic systems. Human factors, 37(1),

32-64.

Feuerriegel, S., Frauen, D., Melnychuk, V., Schweisthal, J.,

Hess, K., Curth, A., ... & van der Schaar, M. (2024).

Causal machine learning for predicting treatment

outcomes. Nature Medicine, 30(4), 958-968.

Gillan, C. M., & Rutledge, R. B. (2021). Smartphones and

the neuroscience of mental health. Annual Review of

Neuroscience, 44(1), 129-151.

Goh, S. K., Abbass, H. A., Tan, K. C., Al-Mamun, A.,

Wang, C., & Guan, C. (2017). Automatic EEG artifact

removal techniques by detecting influential

independent components. IEEE Transactions on

Emerging Topics in Computational Intelligence, 1(4),

270-279.

Hitchcock, P. F., Fried, E. I., & Frank, M. J. (2022).

Computational psychiatry needs time and context.

Annual review of psychology, 73(1), 243-270.

Huys, Q. J., Maia, T. V., & Frank, M. J. (2016).

Computational psychiatry as a bridge from

neuroscience to clinical applications. Nature

neuroscience, 19(3), 404-413.

Inmon, B. (2016). Data Lake Architecture: Designing the

Data Lake and avoiding the garbage dump. Technics

Publications, LLC.

Jiang, C., Ma, Y., Chen, H., Zheng, Y., Gao, S., & Cheng,

S. (2020). Cyber physics system: a review. Library Hi

Tech, 38(1), 105-116.

Jordan, M. I., & Mitchell, T. M. (2015). Machine learning:

Trends, perspectives, and prospects. Science,

349(6245), 255-260.

Katsoulakis, E., Wang, Q., Wu, H., Shahriyari, L., Fletcher,

R., Liu, J., ... & Deng, J. (2024). Digital twins for

health: a scoping review. NPJ Digital Medicine, 7(1),

77.

Khaitan, S. K., & McCalley, J. D. (2015). Design

techniques and applications of cyberphysical systems:

A survey. IEEE systems journal, 9(2), 350-365.

Lee, E. A. (2006, October). Cyber-physical systems-are

computing foundations adequate. In Position paper for

NSF workshop on cyber-physical systems: research

motivation, techniques and roadmap (Vol. 2, pp. 1-9).

Loomis, J. M., Blascovich, J. J., & Beall, A. C. (1999).

Immersive virtual environment technology as a basic

research tool in psychology. Behavior research

methods, instruments, & computers, 31(4), 557-564.

Mahesh, B. (2020). Machine learning algorithms-a review.

International Journal of Science and Research

(IJSR), 9(1), 381-386.

Montague, P. R., Dolan, R. J., Friston, K. J., & Dayan, P.

(2012). Computational psychiatry. Trends in cognitive

sciences, 16(1), 72-80.

Muresan, A., McIntosh, J., & Hornbæk, K. (2023). Using

feedforward to reveal interaction possibilities in virtual

reality. ACM Transactions on Computer-Human

Interaction, 30(6), 1-47.

Nargesian, F., Zhu, E., Miller, R. J., Pu, K. Q., & Arocena,

P. C. (2019). Data lake management: challenges and

opportunities. Proceedings of the VLDB Endowment,

12(12), 1986-1989.

Park, M. J., Kim, D. J., Lee, U., Na, E. J., & Jeon, H. J.

(2019). A literature overview of virtual reality (VR) in

treatment of psychiatric disorders: recent advances and

limitations. Frontiers in psychiatry, 10, 505.

Pedroni, A., Bahreini, A., & Langer, N. (2019). Automagic:

Standardized preprocessing of big EEG data.

NeuroImage, 200, 460-473.

Raiche, A. P., Dauphinais, L., Duval, M., De Luca, G.,

Rivest-Hénault, D., Vaughan, T., ... & Guay, J. P.

(2023). Factors influencing acceptance and trust of

chatbots in juvenile offenders’ risk assessment training.

Frontiers in Psychology, 14, 1184016.

Ray, S. (2019, February). A quick review of machine

learning algorithms. In 2019 International conference

on machine learning, big data, cloud and parallel

computing (COMITCon) (pp. 35-39). IEEE.

Renaud, P., Trottier, D., Rouleau, J. L., Goyette, M.,

Saumur, C., Boukhalfi, T., & Bouchard, S. (2014).

Using immersive virtual reality and anatomically

correct computer-generated characters in the forensic

assessment of deviant sexual preferences. Virtual

Reality, 18, 37-47.

Riva, G., Waterworth, J. A., Waterworth, E. L., &

Mantovani, F. (2011). From intention to action: The

role of presence. New Ideas in Psychology, 29(1), 24-

37.

Rutledge, R. B., Chekroud, A. M., & Huys, Q. J. (2019).

Machine learning and big data in psychiatry: toward

clinical applications. Current opinion in neurobiology,

55, 152-159.

Sarode, A., Karkhile, K., Raskar, S., & Patil, R. Y. (2022,

November). Secure data sharing in medical cyber-

physical system—a review. In Futuristic Trends in

Networks and Computing Technologies: Select

Proceedings of Fourth International Conference on

FTNCT 2021 (pp. 993-1005). Singapore: Springer

Nature Singapore.

Sawadogo, P., & Darmont, J. (2021). On data lake

architectures and metadata management. Journal of

Intelligent Information Systems, 56(1), 97-120.

Saygin, A. P., Cicekli, I., & Akman, V. (2000). Turing test:

50 years later. Minds and machines, 10(4), 463-518.

Shanahan, M. (2024). Talking about large language models.

Communications of the ACM, 67(2), 68-79.

Shrestha, A., & Mahmood, A. (2019). Review of deep

learning algorithms and architectures. IEEE Access, 7,

53040-53065.

Slater, M. (2003). A note on presence terminology.

Presence connect, 3(3), 1-5.

Spiekermann, S., Acquisti, A., Böhme, R., & Hui, K. L.

(2015). The challenges of personal data markets and

privacy. Electronic markets, 25, 161-167.

Sun, Y., & Zhang, Y. (2021). A review of theories and

models applied in studies of social media addiction and

implications for future research. Addictive behaviors,

114, 106699.

Telus Health. Retrieved from https://www.telus.com/en/health/organizations/health-authorities-and-hospitals/clinical-solutions/oacis.

Torous, J., Bucci, S., Bell, I. H., Kessing, L. V., Faurholt‐

Jepsen, M., Whelan, P., ... & Firth, J. (2021). The

growing field of digital psychiatry: current evidence

and the future of apps, social media, chatbots, and

virtual reality. World Psychiatry, 20(3), 318-335.

Urigüen, J. A., & Garcia-Zapirain, B. (2015). EEG artifact

removal—state-of-the-art and guidelines. Journal of

neural engineering, 12(3), 031001.

Vaidyam, A. N., Wisniewski, H., Halamka, J. D.,

Kashavan, M. S., & Torous, J. B. (2019). Chatbots and

conversational agents in mental health: a review of the

psychiatric landscape. The Canadian Journal of

Psychiatry, 64(7), 456-464.

Wang, X. J., & Krystal, J. H. (2014). Computational

psychiatry. Neuron, 84(3), 638-654.

Wang, Z., Sun, L., & Zhu, H. (2020). Defining social

engineering in cybersecurity. IEEE Access, 8, 85094-

85115.

Wang, Z., Zhu, H., & Sun, L. (2021). Social engineering in

cybersecurity: Effect mechanisms, human

vulnerabilities and attack methods. IEEE Access, 9,

11895-11910.

Wang, X., Lee, L. H., Bermejo Fernandez, C., & Hui, P.

(2024b). The dark side of augmented reality: Exploring

manipulative designs in AR. International Journal of

Human–Computer Interaction, 40(13), 3449-3464.

Wang, Y., Wang, L., & Siau, K. L. (2024a). Human-

Centered Interaction in Virtual Worlds: A New Era of

Generative Artificial Intelligence and Metaverse.

International Journal of Human–Computer

Interaction, 1-43.

Wiederhold, B. K., & Bouchard, S. (2014). Advances in

virtual reality and anxiety disorders. Springer.

Williams, L. M., Carpenter, W. T., Carretta, C.,

Papanastasiou, E., & Vaidyanathan, U. (2024).

Precision psychiatry and Research Domain Criteria:

Implications for clinical trials and future practice. CNS

spectrums, 29(1), 26-39.

Zhu, F., Ma, S., Cheng, Z., Zhang, X. Y., Zhang, Z., & Liu,

C. L. (2024). Open-world machine learning: A review

and new outlooks. arXiv preprint arXiv:2403.01759.

ERSeGEL 2025 - Workshop on Extended Reality and Serious Games for Education and Learning
