Pedagogical Agents in Virtual Reality for Training of Biomedical Engineering Students

Ersilia Vallefuoco (a), Elisabetta Ponticelli, Alessandro Pepino (b) and Francesco Amato (c)

Department of Electrical Engineering and Information Technology, University of Naples Federico II

{ersilia.vallefuoco, pepino, framato}@unina.it, eli.ponticelli@studenti.unina.it

Keywords:

Pedagogical Agent, Virtual Agent, Conversational Agent, eXtended Reality, Virtual Reality, Engineering Education, Simulation, Experiential Learning.

Abstract:

Technological advancements have fostered the development of pedagogical agents (PAs) that support learning processes. The present work describes a new application of a PA in a virtual reality (VR) environment for the education of biomedical engineering students. The PA, represented by a virtual physician, allows students to analyze the healthcare system interactively, favoring the acquisition of professional skills. A preliminary test was performed with a small sample of students. The results show good usability and credibility, and participants' responses suggest that the PA is effective in prompting reflection on complex healthcare systems. Future work will enhance the PA's nonverbal cues and fully integrate the agent into virtual environments to improve user engagement and realism.

1 INTRODUCTION

Recent technological advances, particularly following the COVID-19 pandemic, have facilitated the adoption of new digital learning applications in higher education (Alenezi, 2023). Among these, pedagogical agents (PAs) have emerged as innovative educational tools that enhance teaching and learning processes (Lane and Schroeder, 2022). A PA, also known as an embodied conversational agent, intelligent virtual agent, or virtual human, can be broadly defined as a virtual character that engages and communicates with users for instructional purposes (Dai et al., 2022). By leveraging artificial intelligence (AI) and natural language processing, PAs can interact with users through verbal and nonverbal behavior while also processing inputs from multiple sensors (Lugrin et al., 2022).

Previous studies (Heidig and Clarebout, 2011; Zhang et al., 2024; Davis et al., 2023) have shown that PAs can provide personalized learning experiences. In these experiences, PAs present information, support learners as a tutor would, monitor their activities, and improve their motivation (Apoki et al., 2022). Additionally, learners can engage in communication and social strategies when interacting with a PA (Sikström et al., 2022; Schroeder et al., 2013). This is especially true when the agent has a human-like appearance and uses nonverbal cues (Tao et al., 2022; Septiana et al., 2024).

(a) https://orcid.org/0000-0003-3952-1500

(b) https://orcid.org/0000-0001-6434-5145

(c) https://orcid.org/0000-0002-9053-3139

Virtual reality (VR) technologies can support the development of realistic PAs, not only in terms of appearance but also in terms of believability and social interaction (Grivokostopoulou et al., 2020). When users share a virtual physical space with the PA, this establishes a social context for interaction. It also enables collaboration and increases the physical perception of the agent's social presence (Guimarães et al., 2020). People recognize the social space of a virtual agent and adjust their interpersonal space based on the agent's gender and behavior (Kyrlitsias and Michael-Grigoriou, 2022). Moreover, previous research (Bergmann et al., 2015; Nuñez et al., 2023) has shown that adaptation mechanisms present in human-human interaction (e.g., lexical, syntactic, and semantic alignment) can also be reproduced in interactions with virtual agents. Enriching VR environments with a PA creates an interactive social learning experience that can significantly foster learning (Petersen et al., 2021).

The integration of PAs in educational activities varies significantly across higher education contexts. For instance, Chheang et al. (2024) proposed a VR application with a PA for medical education. Their PA, developed using ChatGPT and embodied virtual characters, provides guidance and assistance in learning human anatomy. Similarly, Dai et al. (2024) presented a VR platform for developing teaching skills, in which AI-powered PAs simulate student behaviors and facilitate interactive and reflective teaching practices. Grivokostopoulou et al. (2020) investigated the effectiveness of an embodied PA in virtual learning environments for teaching environmental engineering and renewable energy production. Their findings reveal that the agent enhanced the learning experience, increased student engagement, and improved knowledge acquisition and performance.

Vallefuoco, E., Ponticelli, E., Pepino, A. and Amato, F.

Pedagogical Agents in Virtual Reality for Training of Biomedical Engineering Students.

DOI: 10.5220/0013502400003932

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 17th International Conference on Computer Supported Education (CSEDU 2025) - Volume 1, pages 915-920

ISBN: 978-989-758-746-7; ISSN: 2184-5026

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

This study is part of an ongoing innovation effort within the biomedical engineering (BME) program at the University of Naples Federico II. The effort aims to integrate new simulation tools that provide experiential educational opportunities. In particular, the current study presents an example application of a VR-based PA in BME education. The proposed application aims to deliver meaningful learning experiences for BME students, focusing on the development of soft skills. A critical aspect of BME education is preparing students for multidisciplinary roles that require effective interaction and collaboration with diverse healthcare professionals (Montesinos et al., 2023). The proposed PA has been developed and integrated into the practical activities of the “Healthcare Organization Models” course, part of the BME program at the University of Naples Federico II. The virtual PA is accessible through the course’s Moodle platform via a web application. A preliminary test was conducted to identify potential usability issues and explore how learners perceive the PA.

2 METHODS

The course “Healthcare Organization Models”, offered in the Master’s program in BME at the University of Naples Federico II, is designed to provide the knowledge and skills needed to understand and manage the services and structures of healthcare systems. By the end of the course, students are expected to be able to analyze complex healthcare organization models, measure their performance, and propose solutions to optimize them using simulation tools.

However, analyzing healthcare organizational systems requires continuous interaction with various healthcare professionals to gather and evaluate information and/or data and then propose potential improvements. For this interaction to be effective, biomedical engineers need soft skills that enable them to ask the right questions and identify key issues and critical points within the system.

Based on these considerations, an instructional activity was designed using a PA in a VR environment. Its purpose is to train students’ soft skills, especially communication, in collecting the information and data needed to analyze healthcare processes. The activity was structured to be conducted both in the classroom and at home through the course’s Moodle platform. In the classroom, the instructor and students interact with the PA to analyze and model a healthcare system. After the lesson, students can interact with the virtual agent independently to practice and further develop their soft skills, applying them to the analysis and modeling of other healthcare systems.

2.1 VR Application

The main objective of the proposed application is to train BME students to interact effectively with health professionals. The application is aimed at Italian BME master’s students.

The VR scenario has been designed to simulate a hospital doctor’s office. As shown in Fig. 1, the room has a rectangular layout and is furnished with objects typically found in a real office. All 3D models of the objects were downloaded from Sketchfab (Sketchfab, 2024). Players can move freely using the directional keys on the keyboard. They can interact with the PA either by typing text into the chat or by voice, pressing the T key to talk.

The VR application has been developed as a web application and is accessible via a URL on the Moodle platform. In particular, PlayCanvas (PlayCanvas, 2024) was used as the WebGL game engine, with WebXR integration, to develop the virtual environment. Convai (Convai, 2024) was used to implement the PA. Convai facilitates the development of virtual agents, allowing customization of an agent’s narrative, personality, knowledge base, and underlying large language model (LLM).

The character description provided a brief background on the character’s story, personality traits, and distinctive features. More specifically, the PA is a 40-year-old internist physician with 10 years of experience in the Internal Medicine Department of the Antonio Cardarelli Hospital in Naples. The agent is female and is named Sofia (Fig. 2).
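The following Python sketch shows one way the persona details could be organized before being entered as a character description. These are the details above, together with the language and speaking-style settings described in the next paragraphs. The sketch collects them in a dataclass and renders a short backstory text.

This is a hypothetical structure. The `AgentPersona` class, its field names, and the `backstory` method are assumptions made for this example; they do not reproduce Convai’s configuration schema or API. The personality values are left unset because the paper reports them only graphically (Fig. 3).

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

# Personality dimensions named in the Fig. 3 caption.
PERSONALITY_DIMENSIONS = (
    "Openness", "Meticulousness", "Extraversion", "Agreeableness", "Sensitivity",
)


@dataclass
class AgentPersona:
    """Hypothetical persona record; field names are illustrative only."""
    name: str
    gender: str
    age: int
    specialty: str
    years_of_experience: int
    department: str
    hospital: str
    language: str = "Italian"
    speaking_style: str = "formal and knowledgeable"
    # Trait values are not reported numerically, so each dimension defaults to None.
    personality: Dict[str, Optional[float]] = field(
        default_factory=lambda: {dim: None for dim in PERSONALITY_DIMENSIONS}
    )

    def backstory(self) -> str:
        """Render the persona as a short character-description text."""
        return (
            f"{self.name} is a {self.age}-year-old {self.gender} {self.specialty} "
            f"physician with {self.years_of_experience} years of experience in the "
            f"{self.department} of the {self.hospital}. "
            f"Language: {self.language}. Speaking style: {self.speaking_style}."
        )


sofia = AgentPersona(
    name="Sofia",
    gender="female",
    age=40,
    specialty="internist",
    years_of_experience=10,
    department="Internal Medicine Department",
    hospital="Antonio Cardarelli Hospital in Naples",
)
print(sofia.backstory())
```

Keeping the persona in one structured record makes it easy to regenerate the description text consistently when a detail changes.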

Sofia’s body was created using (Ready Player Me,

2024) and is dressed as a doctor. Sofia has been de-

signed to provide information exclusively about the

activities, services, and examinations in her depart-

ment and hospital. Hence, users can ask questions

related to these topics. If inquiries are made about un-

ERSeGEL 2025 - Workshop on Extended Reality and Serious Games for Education and Learning

916

Figure 1: The virtual environment designed for the VR application. The top view (top left) provides an overview of the

layout, while detailed views (top right and bottom) showcase specific areas, including a medical examination corner and the

physician’s desk setup for consultations.

Figure 2: The developed pedagogical agent. The figure il-

lustrates Sofia, a virtual internist physician.

related subjects, Sofia’s standard response is: “Sorry,

but I can’t help you”.

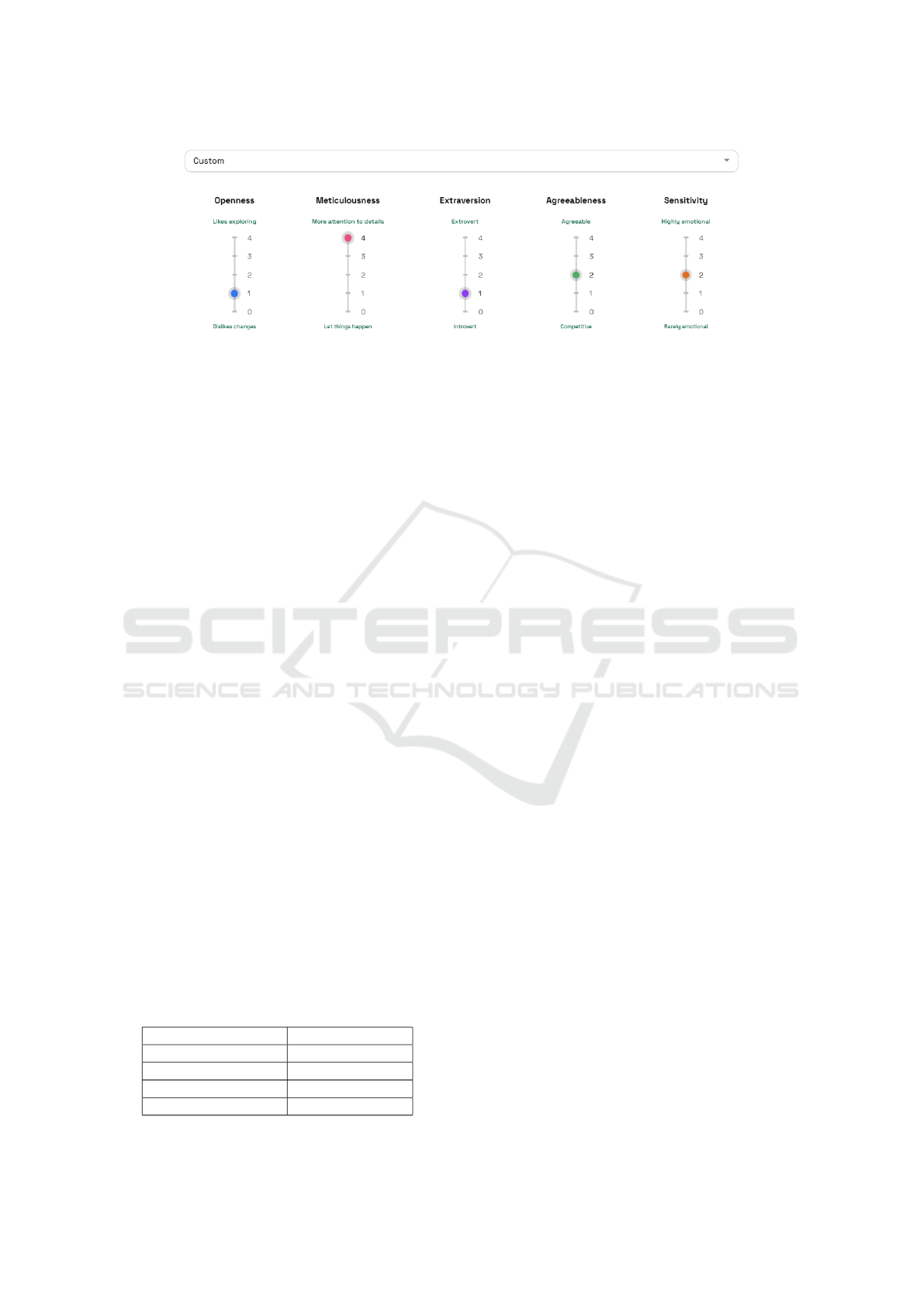
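Sofia's scope restriction can be illustrated with a minimal gate that answers in-scope questions and returns the standard refusal otherwise. This is a deliberately simplified, swapped-in technique. In the described system, the restriction is expressed through the character description and handled by the LLM, not by keyword matching.

The keyword set, the `answer_or_decline` function, and the `stub_llm` callback are hypothetical. The keywords are in English for readability, although the agent converses in Italian.

```python
from typing import Callable

# Standard refusal reported in the text.
FALLBACK = "Sorry, but I can't help you"

# Hypothetical topic keywords standing in for "activities, services,
# and examinations of the department and hospital".
IN_SCOPE_KEYWORDS = {
    "department", "hospital", "service", "services", "activity", "activities",
    "examination", "examinations", "test", "tests", "beds", "patients", "wait",
}


def answer_or_decline(question: str, generate_answer: Callable[[str], str]) -> str:
    """Forward in-scope questions to generate_answer; refuse everything else."""
    # Lowercase each token and strip surrounding punctuation before matching.
    tokens = {token.strip("?.,;:!").lower() for token in question.split()}
    if tokens & IN_SCOPE_KEYWORDS:
        return generate_answer(question)
    return FALLBACK


def stub_llm(question: str) -> str:
    """Placeholder for the LLM call that would produce the real answer."""
    return "(answer generated by the LLM)"


print(answer_or_decline("How many beds does the department have?", stub_llm))
print(answer_or_decline("Who won the football match yesterday?", stub_llm))
```

A keyword gate is brittle: paraphrases and synonyms escape it, which is one reason to delegate this decision to the language model, as the described system does.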

Regarding personality, Fig. 3 shows the personality trait settings. The speaking style was set to be formal and knowledgeable, with examples of possible sentences provided. The language was set to Italian, and a default multilingual voice from Convai was used. For the LLM, Claude 3.5 Sonnet was chosen after comparing it with the other available models, specifically GPT-4o and Gemini Pro. The comparison used the same set of questions for every model, and the authors evaluated the accuracy and relevance of each model’s answers. Filters were enabled to prevent potential violations. In addition, text files containing further knowledge were integrated into the agent’s knowledge base. These files cover the services, activities, and data of Sofia’s department and hospital.
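The LLM comparison described above can be sketched as follows, assuming each author rating was recorded per question on a numeric scale for accuracy and relevance. The paper does not report the rating scale, the aggregation rule, or the individual scores. The `rank_models` function, the equal weighting of accuracy and relevance, and the placeholder model names and ratings are illustrative assumptions, not the study's data.

```python
from statistics import mean
from typing import Dict, List, Tuple

# model -> [(accuracy, relevance), ...], one pair per question
Ratings = Dict[str, List[Tuple[float, float]]]


def rank_models(ratings: Ratings) -> List[Tuple[str, Tuple[float, float, float]]]:
    """Rank models by the average of their mean accuracy and mean relevance."""
    # Every model must be rated on the same set of questions.
    if len({len(pairs) for pairs in ratings.values()}) != 1:
        raise ValueError("all models must be rated on the same question set")
    summary = {}
    for model, pairs in ratings.items():
        accuracy = mean(a for a, _ in pairs)
        relevance = mean(r for _, r in pairs)
        # Equal weighting of the two criteria is an assumption.
        summary[model] = (accuracy, relevance, (accuracy + relevance) / 2)
    return sorted(summary.items(), key=lambda item: item[1][2], reverse=True)


# Placeholder ratings on a hypothetical 1-5 scale (two questions per model).
example = {
    "model_a": [(5, 4), (4, 4)],
    "model_b": [(3, 4), (4, 3)],
}
for model, (acc, rel, combined) in rank_models(example):
    print(f"{model}: accuracy={acc:.2f} relevance={rel:.2f} combined={combined:.2f}")
```

Requiring identical question counts is a cheap guard for the "same set of questions" condition. A fuller version would also check that the questions themselves match.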

After the design phase, conversation training sessions were conducted with the PA to identify and correct any deviations or errors in the interaction. Each author conducted these training sessions individually.

3 PRELIMINARY TEST

An initial exploratory evaluation was conducted to gather preliminary feedback on the VR application and users’ perceptions of the PA. Seven BME students (6 females and 1 male) were recruited through an announcement posted on the Moodle page of the latest course edition. None of the participants had previous experience with PAs in VR, but 4 reported having experience with VR games. The announcement provided participants with comprehensive information about the study’s purpose, data collection procedures, and privacy protection measures. All participants provided their consent before taking part in the test.

Figure 3: Personality trait settings of the pedagogical agent. The figure shows the personality customization of the virtual agent across five dimensions: Openness, Meticulousness, Extraversion, Agreeableness, and Sensitivity.

The test was conducted as an unmoderated remote test (Black and Abrams, 2018), in which participants received clear instructions and task guidelines for using the application. Specifically, they accessed the VR application through Moodle and ran the web application on their personal computers. The interaction task required participants to ask the agent questions useful for analyzing and modeling a healthcare process, with the interaction limited to 10 questions.

At the end, participants completed two questionnaires: the System Usability Scale (SUS) (Brooke et al., 1996) and the Agent Persona Instrument (API) (Baylor and Ryu, 2003; Ryu and Baylor, 2005). The SUS is a 10-item questionnaire answered on a 5-point Likert scale. It assesses various usability aspects, including ease of use, efficiency, and user confidence in the system. The API is used to investigate how learners perceive the PA and is organized into four dimensions: facilitating learning, credible, human-like, and engaging. Additionally, we included an open-ended section where participants could freely express their opinions and provide feedback on their experiences.
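For reference, the standard SUS scoring rule can be written as a short Python function:

- Odd-numbered (positively worded) items contribute the response minus 1.
- Even-numbered (negatively worded) items contribute 5 minus the response.
- The sum is multiplied by 2.5, giving a score from 0 to 100.

The function name `sus_score` is illustrative. The sketch assumes responses are coded 1 (strongly disagree) to 5 (strongly agree) in the original item order. The paper does not describe its scoring script.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Compute a 0-100 SUS score from ten responses coded 1-5."""
    if len(responses) != 10:
        raise ValueError("the SUS has exactly 10 items")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("responses must be integers from 1 to 5")
    total = 0
    for item_number, response in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (response - 1) if item_number % 2 == 1 else (5 - response)
    return total * 2.5


print(sus_score([3] * 10))    # neutral answers throughout -> 50.0
print(sus_score([5, 1] * 5))  # most favorable pattern -> 100.0
```

Per-participant scores computed this way can then be averaged to obtain a mean SUS value such as the one reported below.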

The mean SUS score was 92.5 (SD = 5.2). The results of the API are summarized in Table 1.

Table 1: Results of the Agent Persona Instrument (API). The table presents the mean scores for each API domain.

| API domain | Mean score (SD) |
|---|---|
| Facilitating Learning | 4.3 (0.6) |
| Credible | 4.6 (0.2) |
| Human-like | 2.8 (0.8) |
| Engaging | 3.1 (0.8) |
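Table 1 reports a mean (SD) per dimension. The Python sketch below shows one way to obtain such values from item-level responses. First, each participant's item ratings are averaged within a dimension. Then the mean and sample standard deviation of those per-participant averages are computed and rounded to one decimal.

The input layout, the use of the sample (rather than population) standard deviation, and the function name `summarize_api` are assumptions, since the paper does not describe its aggregation procedure. The ratings in the example are placeholders, not study data.

```python
from statistics import mean, stdev
from typing import Dict, List, Tuple

# One dict per participant: {dimension: [item ratings on the Likert scale]}.
Response = Dict[str, List[int]]


def summarize_api(responses: List[Response]) -> Dict[str, Tuple[float, float]]:
    """Return {dimension: (mean, SD)} across participants, rounded to 1 decimal."""
    if len(responses) < 2:
        raise ValueError("a sample SD needs at least two participants")
    table = {}
    for dimension in responses[0]:
        # Average each participant's items within the dimension first.
        per_participant = [mean(r[dimension]) for r in responses]
        table[dimension] = (
            round(mean(per_participant), 1),
            round(stdev(per_participant), 1),
        )
    return table


# Placeholder ratings for two hypothetical participants.
example = [
    {"Credible": [5, 5], "Engaging": [3, 3]},
    {"Credible": [4, 4], "Engaging": [3, 5]},
]
print(summarize_api(example))
```

Averaging within participants first gives each participant equal weight, regardless of how many items a dimension contains.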

Participants reported no problems during the test sessions, except for one session in which the agent stopped working. Closing the web application was necessary to restore proper functionality.

In general, the questions asked by users were varied and touched on different aspects of healthcare processes. Topics ranged from hospital activities, to logistical and structural aspects of the hospital, to potential critical issues. In all cases, the PA responded accurately. In particular, when asked, Sofia provided general estimates of wait times and of patient flow between activities. The PA also suggested possible solutions to critical health issues and gave a general estimate of the resources and beds in her department and in the hospital. Critical issues identified by the PA included a shortage of beds relative to the number of patients, excessive wait times for specialized tests, and a lack of staff.

4 DISCUSSION AND CONCLUSION

Several studies (Dai et al., 2022; Zhang et al., 2024) have shown that PAs can be used to support and guide learners during instructional activities. VR technologies can enhance users’ sense of agency and trust as well as increase their motivation and engagement (Lugrin et al., 2022; Chiou et al., 2020).

In the present study, we propose an application of an embodied PA in a VR environment. The application is designed as a training tool that helps BME students develop skills for interacting with healthcare professionals. The PA is a physician whom users can question to collect data and information useful for analyzing a healthcare process. The PA was designed to be used via a web application in a specific course within the Master’s program at the University of Naples Federico II. A preliminary test was conducted to investigate users’ perceptions of the VR application and the PA.


All participants indicated an excellent level of usability (SUS score > 80.5), finding the application user-friendly and easy to use. The API results showed that the PA encouraged participants to reflect on the complexity of healthcare processes (the central topic of their discussion) and to focus on it. In addition, most participants indicated that the PA was interesting and knowledgeable. As expected given the PA’s design, the perceived level of humanness was low. Participants recognized that the PA did not exhibit particular emotions and was neither friendly nor entertaining.

These results are consistent with participants’ feedback, which was generally positive, particularly regarding the agent’s credibility. For instance, one participant stated: “Although the agent did not have a realistic appearance, its behavior was in line with its role”. Another participant highlighted the usefulness of the PA in instructional activities: “Very valuable tool that could provide good support to the student in studying and developing a simulation model for the management of healthcare organizational exam”.

To our knowledge, this is the first study to explore the use of a virtual PA for training BME students. Consistent with previous research (Petersen et al., 2021; Zhang et al., 2024; Kyrlitsias and Michael-Grigoriou, 2022), our findings highlight the potential of VR-based PAs for creating dynamic and interactive learning environments. BME students traditionally have limited opportunities to develop the communication skills needed to interact with healthcare professionals (Montesinos et al., 2023). PAs therefore not only provide a valuable platform for communication skill training but also offer students a unique avenue to explore and acquire new knowledge through interactive experiences. As in previous studies (Chheang et al., 2024; Grivokostopoulou et al., 2020), participants responded positively to the application. They emphasized its engaging nature, its real-time feedback capabilities, and the flexibility to practice interviews at their convenience. Another important aspect of the proposed system is its integration with Moodle, an open-source learning platform. This integration makes the system particularly valuable for supporting open-source initiatives and open educational resources.

Despite the promising results, some limitations should be acknowledged. First, the study involved a small sample (seven participants), limiting the generalizability of the findings. Future research should conduct larger-scale evaluations to validate the effectiveness of the PA. Second, while the PA provided accurate and structured responses, its limited nonverbal cues (e.g., body gestures, lip synchronization) affected perceived engagement. Future improvements could enhance the agent’s interactive cues and fidelity to improve the learning experience (Nuñez et al., 2023). Moreover, as a future development, we aim to integrate the application into a fully immersive virtual environment, further increasing engagement and realism in the learning experience. Finally, recognizing the current limitations of the agent’s knowledge base, we plan to expand its available data to improve the accuracy and comprehensiveness of its responses.

REFERENCES

Alenezi, M. (2023). Digital learning and digital institution in higher education. Education Sciences, 13(1):88.

Apoki, U. C., Hussein, A. M. A., Al-Chalabi, H. K. M., Badica, C., and Mocanu, M. L. (2022). The role of pedagogical agents in personalised adaptive learning: A review. Sustainability, 14(11):6442.

Baylor, A. and Ryu, J. (2003). The API (Agent Persona Instrument) for assessing pedagogical agent persona. In EdMedia+ Innovate Learning, pages 448–451. Association for the Advancement of Computing in Education (AACE).

Bergmann, K., Branigan, H. P., and Kopp, S. (2015). Exploring the alignment space–lexical and gestural alignment with real and virtual humans. Frontiers in ICT, 2:7.

Black, J. and Abrams, M. (2018). Remote usability testing. The Wiley Handbook of Human Computer Interaction, 1:277–297.

Brooke, J. et al. (1996). SUS: A quick and dirty usability scale. Usability Evaluation in Industry, 189(194):4–7.

Chheang, V., Sharmin, S., Márquez-Hernández, R., Patel, M., Rajasekaran, D., Caulfield, G., Kiafar, B., Li, J., Kullu, P., and Barmaki, R. L. (2024). Towards anatomy education with generative AI-based virtual assistants in immersive virtual reality environments. In 2024 IEEE International Conference on Artificial Intelligence and eXtended and Virtual Reality (AIxVR), pages 21–30. IEEE.

Chiou, E. K., Schroeder, N. L., and Craig, S. D. (2020). How we trust, perceive, and learn from virtual humans: The influence of voice quality. Computers & Education, 146:103756.

Convai (2024). https://www.convai.com, Accessed Jan. 25, 2025.

Dai, C.-P., Ke, F., Zhang, N., Barrett, A., West, L., Bhowmik, S., Southerland, S. A., and Yuan, X. (2024). Designing conversational agents to support student teacher learning in virtual reality simulation: A case study. In Extended Abstracts of the CHI Conference on Human Factors in Computing Systems, pages 1–8.

Dai, L., Jung, M. M., Postma, M., and Louwerse, M. M. (2022). A systematic review of pedagogical agent research: Similarities, differences and unexplored aspects. Computers & Education, 190:104607.

Davis, R. O., Park, T., and Vincent, J. (2023). A meta-analytic review on embodied pedagogical agent design and testing formats. Journal of Educational Computing Research, 61(1):30–67.

Grivokostopoulou, F., Kovas, K., and Perikos, I. (2020). The effectiveness of embodied pedagogical agents and their impact on students learning in virtual worlds. Applied Sciences, 10(5):1739.

Guimarães, M., Prada, R., Santos, P. A., Dias, J., Jhala, A., and Mascarenhas, S. (2020). The impact of virtual reality in the social presence of a virtual agent. In Proceedings of the 20th ACM International Conference on Intelligent Virtual Agents, pages 1–8.

Heidig, S. and Clarebout, G. (2011). Do pedagogical agents make a difference to student motivation and learning? Educational Research Review, 6(1):27–54.

Kyrlitsias, C. and Michael-Grigoriou, D. (2022). Social interaction with agents and avatars in immersive virtual environments: A survey. Frontiers in Virtual Reality, 2:786665.

Lane, H. C. and Schroeder, N. L. (2022). Pedagogical agents. In The Handbook on Socially Interactive Agents: 20 years of Research on Embodied Conversational Agents, Intelligent Virtual Agents, and Social Robotics Volume 2: Interactivity, Platforms, Application, pages 307–330.

Lugrin, B., Pelachaud, C., and Traum, D. (2022). The Handbook on Socially Interactive Agents: 20 years of Research on Embodied Conversational Agents, Intelligent Virtual Agents, and Social Robotics Volume 2: Interactivity, Platforms, Application. ACM.

Montesinos, L., Salinas-Navarro, D. E., and Santos-Diaz, A. (2023). Transdisciplinary experiential learning in biomedical engineering education for healthcare systems improvement. BMC Medical Education, 23(1):207.

Nuñez, T. R., Jakobowsky, C., Prynda, K., Bergmann, K., and Rosenthal-von der Pütten, A. M. (2023). Virtual agents aligning to their users. Lexical alignment in human–agent-interaction and its psychological effects. International Journal of Human-Computer Studies, 178:103093.

Petersen, G. B., Mottelson, A., and Makransky, G. (2021). Pedagogical agents in educational VR: An in the wild study. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, CHI ’21, New York, NY, USA. Association for Computing Machinery.

PlayCanvas (2024). https://playcanvas.com/, Accessed Jan. 25, 2025.

Ready Player Me (2024). https://readyplayer.me/it, Accessed Jan. 25, 2025.

Ryu, J. and Baylor, A. L. (2005). The psychometric structure of pedagogical agent persona. Technology Instruction Cognition and Learning, 2(4):291.

Schroeder, N. L., Adesope, O. O., and Gilbert, R. B. (2013). How effective are pedagogical agents for learning? A meta-analytic review. Journal of Educational Computing Research, 49(1):1–39.

Septiana, A. I., Mutijarsa, K., Putro, B. L., and Rosmansyah, Y. (2024). Emotion-related pedagogical agent: A systematic literature review. IEEE Access.

Sikström, P., Valentini, C., Sivunen, A., and Kärkkäinen, T. (2022). How pedagogical agents communicate with students: A two-phase systematic review. Computers & Education, 188:104564.

Sketchfab (2024). https://sketchfab.com/, Accessed Jan. 25, 2025.

Tao, Y., Zhang, G., Zhang, D., Wang, F., Zhou, Y., and Xu, T. (2022). Exploring persona characteristics in learning: A review study of pedagogical agents. Procedia Computer Science, 201:87–94.

Zhang, S., Jaldi, C. D., Schroeder, N. L., López, A. A., Gladstone, J. R., and Heidig, S. (2024). Pedagogical agent design for K-12 education: A systematic review. Computers & Education, page 105165.
